By Ravi Malhotra February 8, 2022

Co-authors: Manoj Iyer and Yichen Jia

Because of the large volume of data managed in the cloud, data is often compressed before it is stored so that storage media are used efficiently. Many algorithms have been developed to compress and decompress different data types on the fly. In this blog, we introduce two well-known algorithms, Zstandard and Snappy, and compare their performance on Arm servers.

Background

There are many types of data compression algorithms. Some are specialized for a particular type of data, such as video, audio, or images and graphics. However, most other data requires a general-purpose lossless compression algorithm that provides good compression ratios across different datasets. These algorithms are used in many applications, including:

- File or object storage systems such as Ceph, OpenZFS, SquashFS

- Database or analytics applications such as MongoDB, Kafka, Hadoop, Redis, etc.

- Web or HTTP software such as NGINX, curl, Django, etc.

- Archive software such as tar, WinZip, etc.

- Several other use cases, such as Linux kernel compression

Compression and Speed

A key design choice for a compression algorithm is whether it is optimized for higher compression ratios or for faster compression and decompression. The former saves storage space, while the latter saves compute cycles and reduces operational latency. Some algorithms, such as Zstandard[1] and zlib[2], provide multiple presets (compression levels) that let users and applications choose their own tradeoff for each use case. Others, such as Snappy[3], are designed primarily for speed.
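To make the preset tradeoff concrete, here is a minimal sketch that compresses the same input at several zlib levels and reports the ratio and time for each. It uses zlib only because it ships with Python's standard library; Zstandard exposes a similar numeric level setting through third-party bindings, which this sketch does not use. The function name `level_tradeoff` and the sample data are hypothetical, and timings depend on the machine, so no output is shown.

```python
import time
import zlib


def level_tradeoff(data: bytes, levels=(1, 6, 9)):
    """Compress `data` at several zlib levels.

    Returns a list of (level, compression_ratio, seconds) tuples, where the
    ratio is the uncompressed size divided by the compressed size.
    """
    results = []
    for level in levels:
        start = time.perf_counter()
        compressed = zlib.compress(data, level)
        elapsed = time.perf_counter() - start
        # Lossless check: decompression must reproduce the input exactly.
        if zlib.decompress(compressed) != data:
            raise RuntimeError(f"round-trip mismatch at level {level}")
        results.append((level, len(data) / len(compressed), elapsed))
    return results


if __name__ == "__main__":
    # Hypothetical log-like sample input; real benchmarks use corpora such as Silesia.
    sample = b"".join(
        f"record {i}: status=ok latency={i % 97}ms\n".encode() for i in range(200_000)
    )
    for level, ratio, secs in level_tradeoff(sample):
        print(f"level {level}: ratio {ratio:.2f}, {secs * 1000:.1f} ms")
```

Higher levels spend more CPU time searching for matches, which is why they usually produce smaller output but run more slowly.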

Zstandard is an open-source algorithm developed by Facebook. Its maximum compression ratio is comparable to that of the DEFLATE algorithm, but it is optimized for higher speed, especially for decompression. Since its introduction in 2016, it has become very popular across many applications and has been adopted in the Linux kernel.

Snappy is an open-source algorithm developed by Google that aims to optimize compression speed with a reasonable compression ratio. It is very popular in database and analytical applications.

The Arm software team has optimized both algorithms for high performance on Arm server platforms based on Arm Neoverse cores. These optimizations use the Neon vector engine to accelerate parts of each algorithm.

Performance comparison

We took the latest optimized versions of the Zstandard and Snappy algorithms and benchmarked them on comparable Amazon Web Services (AWS) cloud instances:

- c6g.2xlarge instance, using AWS Graviton2 based on the Arm Neoverse N1 core

- c5.2xlarge instance, using Intel Cascade Lake

Both algorithms were benchmarked in two different scenarios:

- Raw algorithm performance: measured with the lzbench tool on the Silesia corpus, which contains a mix of industry-standard data types.

- Application-level performance in the popular NoSQL database MongoDB: measured with the YCSB tool to assess how each compression algorithm affects the throughput and latency of database operations, as well as the overall compression of the database.

Raw algorithm performance

Bandwidth (speed) comparison

This test measures the aggregate compression and decompression throughput of 16 parallel processes on different datasets. For Zstandard, we observed 30-67% higher compression throughput and 11-35% higher decompression throughput on C6g instances compared to C5 instances.
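"Aggregate throughput" here is assumed to mean the total number of input bytes processed by all parallel workers divided by the wall-clock time of the run; the post does not spell out the formula. The minimal sketch below illustrates that definition with zlib as a stand-in compressor (Zstandard and Snappy are not in Python's standard library). It uses threads to stay short, whereas the benchmark in this post ran 16 separate processes; a faithful harness would use processes (for example `concurrent.futures.ProcessPoolExecutor`). The function name and parameters are hypothetical.

```python
import time
import zlib
from concurrent.futures import ThreadPoolExecutor


def aggregate_throughput_mbps(data: bytes, workers: int = 16, rounds: int = 4) -> float:
    """Aggregate compression throughput in MB/s (1 MB = 1,000,000 bytes).

    Each of `workers` concurrent workers compresses `data` `rounds` times.
    Throughput = total input bytes across all workers / wall-clock seconds.
    """

    def worker(worker_id: int) -> int:
        processed = 0
        for _ in range(rounds):
            zlib.compress(data, 6)
            processed += len(data)
        return processed

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        total_bytes = sum(pool.map(worker, range(workers)))
    elapsed = time.perf_counter() - start
    return total_bytes / elapsed / 1e6


if __name__ == "__main__":
    payload = b"example payload with some repetition " * 30_000  # hypothetical input
    print(f"{aggregate_throughput_mbps(payload):.1f} MB/s aggregate")
```

Measuring wall-clock time over all workers, rather than summing per-worker rates, captures contention for shared resources such as memory bandwidth, which matters when comparing whole instances.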

Considering the 20% lower price of C6g instances, you can save up to 52% per MB of compressed data.
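The 52% figure is consistent with a simple model, assumed here because the post does not state its formula: cost per MB is proportional to the hourly instance price $P$ divided by throughput $T$. With the C6g price at 0.8 times the C5 price and the best-case Zstandard compression speedup of 1.67 (67% higher throughput):

```latex
\frac{\text{cost/MB}_{\text{C6g}}}{\text{cost/MB}_{\text{C5}}}
  = \frac{P_{\text{C6g}} / T_{\text{C6g}}}{P_{\text{C5}} / T_{\text{C5}}}
  = \frac{P_{\text{C6g}}}{P_{\text{C5}}} \cdot \frac{T_{\text{C5}}}{T_{\text{C6g}}}
  = \frac{0.8}{1.67} \approx 0.48
\quad\Longrightarrow\quad
\text{savings} \approx 1 - 0.48 = 52\%
```

Because the savings depend on the speedup, smaller speedups (for example, the 30% low end of the Zstandard compression range) yield smaller savings.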

Figure 1: Zstd8 Compression Throughput Comparison - C5 vs C6g

Figure 2: Zstd8 Decompression Throughput Comparison - C5 vs C6g

As expected, Snappy achieves higher compression speed than Zstandard, with relatively similar decompression speed. Overall, Snappy performs 40-90% better across datasets on C6g instances than on C5 instances.

Considering the 20% lower price of C6g instances, this translates into savings of up to 58% per MB of compressed data.
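The 58% figure can be reproduced under the assumption that cost per MB is proportional to instance price divided by throughput, using the best-case 90% Snappy speedup. The helper below is a hypothetical sketch of that arithmetic: `price_ratio` is the C6g price divided by the C5 price (0.8 for a 20% cheaper instance) and `speedup` is the C6g throughput divided by the C5 throughput (1.90 for 90% better).

```python
def savings_per_mb(price_ratio: float, speedup: float) -> float:
    """Fractional cost saving per MB processed on the new instance.

    Assumes cost per MB is proportional to price / throughput, so the
    relative cost is price_ratio / speedup and the saving is 1 minus that.
    """
    if price_ratio <= 0 or speedup <= 0:
        raise ValueError("price_ratio and speedup must be positive")
    return 1.0 - price_ratio / speedup


# Snappy best case from this post: 20% cheaper instance, 90% higher throughput.
print(f"{savings_per_mb(0.8, 1.90):.0%}")  # prints 58%
```

Calling `savings_per_mb(0.8, 1.67)` gives the Zstandard best case of about 52% in the same way.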

Figure 3: Snappy Compression Throughput Comparison - C5 vs C6g

Figure 4: Snappy Decompression Throughput Comparison - C5 vs C6g

Compression ratio

We also compared the compression ratios of the two algorithms on different datasets on C6g and C5 instances. Each algorithm achieved the same compression ratio on both instance types, which shows that the Arm optimizations improve speed without reducing compression efficiency.
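A check like the one described can be sketched as follows, assuming the usual definition of compression ratio (uncompressed size divided by compressed size) and results collected per dataset on each instance. zlib stands in for the real codecs, and the function names and dictionary layout keyed by dataset name are hypothetical.

```python
import math
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Uncompressed size / compressed size, after verifying a lossless round trip."""
    compressed = zlib.compress(data, level)
    # A lossless codec must reproduce the input exactly.
    if zlib.decompress(compressed) != data:
        raise RuntimeError("round-trip mismatch")
    return len(data) / len(compressed)


def ratios_match(run_a: dict[str, float], run_b: dict[str, float], rel_tol: float = 1e-3) -> bool:
    """True if two runs cover the same datasets with (nearly) identical ratios."""
    if run_a.keys() != run_b.keys():
        return False
    return all(math.isclose(run_a[name], run_b[name], rel_tol=rel_tol) for name in run_a)
```

In practice, `run_a` and `run_b` would hold the per-dataset ratios reported on the C5 and C6g instances; a small tolerance allows for rounding in reported results.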

Application-level performance

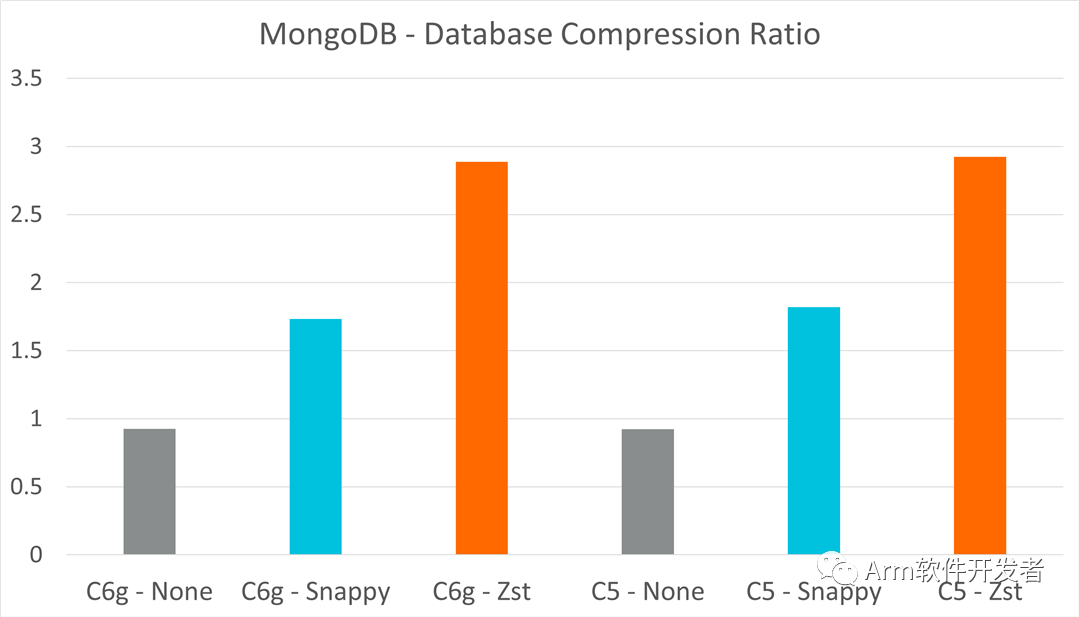

The MongoDB WiredTiger storage engine supports several compression options, including snappy, zstd, and zlib. Here we test snappy, zstd, and none (no compression). We used a dataset of 10,000 sentences of English text, randomly generated with the Python Faker library.

Separate AWS instances were used for the system under test and the test host. Documents were inserted into the MongoDB database until it held approximately 5 GB of data. The systems under test were Arm (c6g.2xlarge) and Intel (c5.2xlarge) instances. After filling the MongoDB database with 5 GB of data, we used the "dbStats" command to get the storage size.
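One plausible way to turn "dbStats" output into a compression ratio is to divide `dataSize` (the uncompressed size of the data) by `storageSize` (the space allocated on disk); the post does not say exactly how its database compression ratio was computed, so treat this as an assumption. The sketch below also shows a per-collection WiredTiger `block_compressor` setting and Faker-generated text. It requires the third-party `pymongo` and `faker` packages, imported inside the function so the pure helper can be used without them. The helper `load_and_measure`, the database and collection names, and the one-paragraph-per-document structure are all hypothetical.

```python
def db_compression_ratio(stats: dict) -> float:
    """Uncompressed data size divided by on-disk storage size from a dbStats result."""
    return stats["dataSize"] / stats["storageSize"]


def load_and_measure(uri: str, compressor: str, n_docs: int = 1000) -> float:
    """Hypothetical helper: fill a collection compressed with `compressor`
    ("none", "snappy", "zlib" or "zstd") and return its dbStats ratio."""
    # Third-party dependencies: pip install pymongo faker
    from faker import Faker
    from pymongo import MongoClient

    fake = Faker()  # default locale generates English text
    client = MongoClient(uri)
    db = client[f"compress_{compressor}"]  # hypothetical database name
    db.drop_collection("docs")
    # Set the WiredTiger block compressor for this collection only.
    db.create_collection(
        "docs",
        storageEngine={"wiredTiger": {"configString": f"block_compressor={compressor}"}},
    )
    # Assumed document shape: one field holding a ten-sentence paragraph.
    docs = [{"text": fake.paragraph(nb_sentences=10)} for _ in range(n_docs)]
    db.docs.insert_many(docs)
    return db_compression_ratio(db.command("dbStats"))
```

Running `load_and_measure` once per compressor against the same server gives directly comparable ratios, since only the block compressor changes between runs.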

Snappy vs Zstandard – Speed vs Compression

Comparing Snappy and Zstandard, we observed that, as expected, Zstandard reduces the overall database size more than Snappy does.

Figure 5: MongoDB: Database Compression Ratio

Snappy provides higher throughput for insert operations, which are write-intensive and therefore compression-intensive. However, read/modify/write operations, which involve a mix of compression and decompression, show little difference between the two algorithms.

Figure 6: MongoDB: Insert Throughput - Snappy vs Zstd

Figure 7: MongoDB: Read/Modify/Write Throughput - Snappy vs Zstd

Conclusion

Generic compression algorithms such as Zstandard and Snappy can be used in a wide variety of applications and compress many different types of generic datasets effectively. Both Zstandard and Snappy are optimized for Arm Neoverse and AWS Graviton2, and compared to Intel-based instances we observed two key results. First, Graviton2-based instances achieve 11-90% better compression and decompression performance than comparable Intel-based instance types. Second, Graviton2-based instances can cut data compression costs roughly in half. For real-world applications such as MongoDB, these compression algorithms add little overhead to typical operations while significantly reducing database size.
