Author: KubeVela Community

In the current wave of machine learning, AI engineers not only need to train and debug their own models, but also need to deploy them online to verify how well they perform (sometimes AI system engineers do this part of the work instead). This deployment work is tedious and takes extra effort from AI engineers.

In the cloud-native era, model training and model serving are usually performed in the cloud as well. Doing so improves both scalability and resource utilization, which is especially valuable for machine learning workloads that consume large amounts of computing resources.

However, it is often difficult for AI engineers to take advantage of cloud-native capabilities, because the cloud-native ecosystem has grown more complex over time. To deploy even a simple model service on a cloud-native platform, AI engineers may need to learn several additional concepts, such as Deployment, Service, and Ingress.

KubeVela is a simple, easy-to-use, and highly extensible cloud-native application management tool. It lets developers quickly define and deliver applications on Kubernetes without knowing the details of the underlying cloud-native infrastructure. Building on this extensibility, its AI plugin provides model training, model serving, and A/B testing. These cover the basic needs of AI engineers and help them quickly train and serve models in a cloud-native environment.

This article introduces how to use KubeVela's AI plugin to make model training and model serving easier for engineers.

## KubeVela AI plugin

The KubeVela AI plugin is divided into two add-ons: model training and model serving. The model training add-on is based on Kubeflow's training-operator and supports distributed model training with frameworks such as TensorFlow, PyTorch, and MXNet. The model serving add-on is based on Seldon Core. It makes it easy to start a model service from a trained model, and it also supports advanced features such as traffic distribution and A/B testing.

With the KubeVela AI plugin, deploying model training jobs and model services becomes much simpler. In addition, model training and model serving can be combined with KubeVela's own features, such as workflows and multi-cluster delivery, to deploy production-ready services.

Note: You can find all source code and YAML files in KubeVela Samples[1]. If you want to use the pretrained models from this example, deploy style-model.yaml and color-model.yaml from that folder. They copy the models into the PVCs.

## Model training

First, enable the two add-ons for model training and model serving:

```shell
vela addon enable model-training
vela addon enable model-serving
```

Model training includes two component types, `model-training` and `jupyter-notebook`, and model serving includes the component type `model-serving`. You can view the parameters of these three components with the `vela show` command.

You can also consult the KubeVela AI plugin documentation[2] for more information.

```shell
vela show model-training
vela show jupyter-notebook
vela show model-serving
```

Let's use the TensorFlow framework to train a simple model that turns grayscale images into color images. Deploy the following YAML file:

Note: The model training source code comes from emilwallner/Coloring-greyscale-images[3].

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: training-serving
  namespace: default
spec:
  components:
    # Train the model
    - name: demo-training
      type: model-training
      properties:
        # Image used for model training
        image: fogdong/train-color:v1
        # Framework used for model training
        framework: tensorflow
        # Declare storage to persist the model; a PVC is created with the cluster's default storage class
        storage:
          - name: "my-pvc"
            mountPath: "/model"
```

KubeVela will then start a TFJob to train the model.

Training the model alone doesn't show us its effect. Let's modify this YAML file and add a model service after the model training step. The model service serves the model directly, and the model's inputs and outputs (ndarrays or Tensors) are not intuitive. So we also deploy a test service that calls the model service and converts the result into an image.

Deploy the following YAML file:

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: training-serving
  namespace: default
spec:
  components:
    # Train the model
    - name: demo-training
      type: model-training
      properties:
        image: fogdong/train-color:v1
        framework: tensorflow
        storage:
          - name: "my-pvc"
            mountPath: "/model"
    # Start the model service
    - name: demo-serving
      type: model-serving
      # The model service starts after model training completes
      dependsOn:
        - demo-training
      properties:
        # Protocol used by the model service; optional, defaults to Seldon's own protocol
        protocol: tensorflow
        predictors:
          - name: model
            # Number of replicas of the model service
            replicas: 1
            graph:
              # Model name
              name: my-model
              # Model framework
              implementation: tensorflow
              # Model location: the previous step saves the trained model to the PVC my-pvc,
              # so pvc://my-pvc points to it
              modelUri: pvc://my-pvc
    # Test service for the model
    - name: demo-rest-serving
      type: webservice
      # The test service starts after the model service (demo-serving)
      dependsOn:
        - demo-serving
      properties:
        image: fogdong/color-serving:v1
        # Expose an external address through a LoadBalancer for easy access
        exposeType: LoadBalancer
        env:
          - name: URL
            # Address of the model service
            value: http://ambassador.vela-system.svc.cluster.local/seldon/default/demo-serving/v1/models/my-model:predict
        ports:
          # Port of the test service
          - port: 3333
            expose: true
```
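The `URL` passed to the test service follows a pattern that all three applications in this article share. It starts with the in-cluster Ambassador gateway, then `/seldon/<namespace>/<component name>`, and ends with `/v1/models/<model name>:predict`, which matches the TensorFlow Serving REST predict path. The Python sketch below rebuilds that address from its parts. The helper `seldon_predict_url` is hypothetical, and the pattern is inferred from the URLs in this article, not taken from the Seldon Core documentation.

```python
from urllib.parse import quote

# In-cluster Ambassador gateway used by the YAML files in this article.
AMBASSADOR_HOST = "http://ambassador.vela-system.svc.cluster.local"


def seldon_predict_url(namespace: str, deployment: str, model: str,
                       host: str = AMBASSADOR_HOST) -> str:
    """Build a predict URL with the layout seen in this article (hypothetical helper).

    namespace:  namespace of the KubeVela application, e.g. "default"
    deployment: name of the model-serving component, e.g. "demo-serving"
    model:      graph name of the predictor, e.g. "my-model"
    """
    base = host.rstrip("/")
    # /seldon/<namespace>/<deployment> is the gateway prefix; the remainder
    # matches the TensorFlow Serving REST predict path.
    return (f"{base}/seldon/{quote(namespace)}/{quote(deployment)}"
            f"/v1/models/{quote(model)}:predict")


if __name__ == "__main__":
    # The URL passed to demo-rest-serving in the YAML above
    print(seldon_predict_url("default", "demo-serving", "my-model"))
    # Same path via the external gateway endpoint reported by vela status (presumably reachable)
    print(seldon_predict_url("default", "demo-serving", "my-model",
                             host="http://47.251.36.228"))
```

The `host` parameter can be set to the external endpoint that `vela status --endpoint` reports. It should then give an externally reachable predict URL for the same model.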

After deployment, check the application status with `vela ls`:

```
$ vela ls
training-serving demo-training model-training running healthy Job Succeeded 2022-03-02 17:26:40 +0800 CST
├─ demo-serving model-serving running healthy Available 2022-03-02 17:26:40 +0800 CST
└─ demo-rest-serving webservice running healthy Ready: 1/1 2022-03-02 17:26:40 +0800 CST
```

The application has started successfully. Use `vela status <app-name> --endpoint` to view the application's service endpoints:

```
$ vela status training-serving --endpoint
| CLUSTER | REF(KIND/NAMESPACE/NAME)          | ENDPOINT                                          |
|---------|-----------------------------------|---------------------------------------------------|
|         | Service/default/demo-rest-serving | tcp://47.251.10.177:3333                          |
|         | Service/vela-system/ambassador    | http://47.251.36.228/seldon/default/demo-serving  |
|         | Service/vela-system/ambassador    | https://47.251.36.228/seldon/default/demo-serving |
```

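The application has three endpoints: the first is the address of our test service, and the second and third are addresses of the raw model service. We can call the test service to see the model's effect. The test service reads the image, converts it into a Tensor, and sends it to the model service. It then converts the Tensor returned by the model service back into an image.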
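The source of the test service is not shown in this article, but the round trip it performs can be sketched. The Python below assumes the TensorFlow Serving REST format that `protocol: tensorflow` suggests: a JSON request body with an `instances` list, and a response with a `predictions` list. It also assumes, hypothetically, that the model takes a grayscale image as a [height][width][1] array of floats in [0, 1]. It further assumes that the model returns per-pixel channel values in the same range. The real model's shapes and preprocessing may differ, and all function names are illustrative.

```python
import json
import urllib.request
from typing import List

Gray = List[List[int]]              # [height][width] 8-bit grayscale pixels
Tensor = List[List[List[float]]]    # [height][width][channels]
Pixels = List[List[List[int]]]      # [height][width][channels], 8-bit values


def gray_to_instance(gray: Gray) -> Tensor:
    """Turn 8-bit grayscale pixels into a [H][W][1] float tensor in [0, 1] (assumed input format)."""
    return [[[px / 255.0] for px in row] for row in gray]


def build_predict_body(gray: Gray) -> bytes:
    """Encode one image as a TensorFlow Serving REST body: {"instances": [tensor]}."""
    return json.dumps({"instances": [gray_to_instance(gray)]}).encode("utf-8")


def predictions_to_pixels(body: bytes) -> Pixels:
    """Take the first prediction and map floats in [0, 1] back to clamped 8-bit values."""
    prediction = json.loads(body)["predictions"][0]
    return [[[max(0, min(255, round(v * 255))) for v in px] for px in row]
            for row in prediction]


def colorize(url: str, gray: Gray, timeout: float = 30.0) -> Pixels:
    """POST a grayscale image to the model service and return the predicted pixels."""
    req = urllib.request.Request(url, data=build_predict_body(gray),
                                 headers={"Content-Type": "application/json"},
                                 method="POST")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return predictions_to_pixels(resp.read())
```

In a test service like the one described above, `url` would come from its `URL` environment variable. Decoding the uploaded image and encoding the result as a picture would need an imaging library, so that step is left out here.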

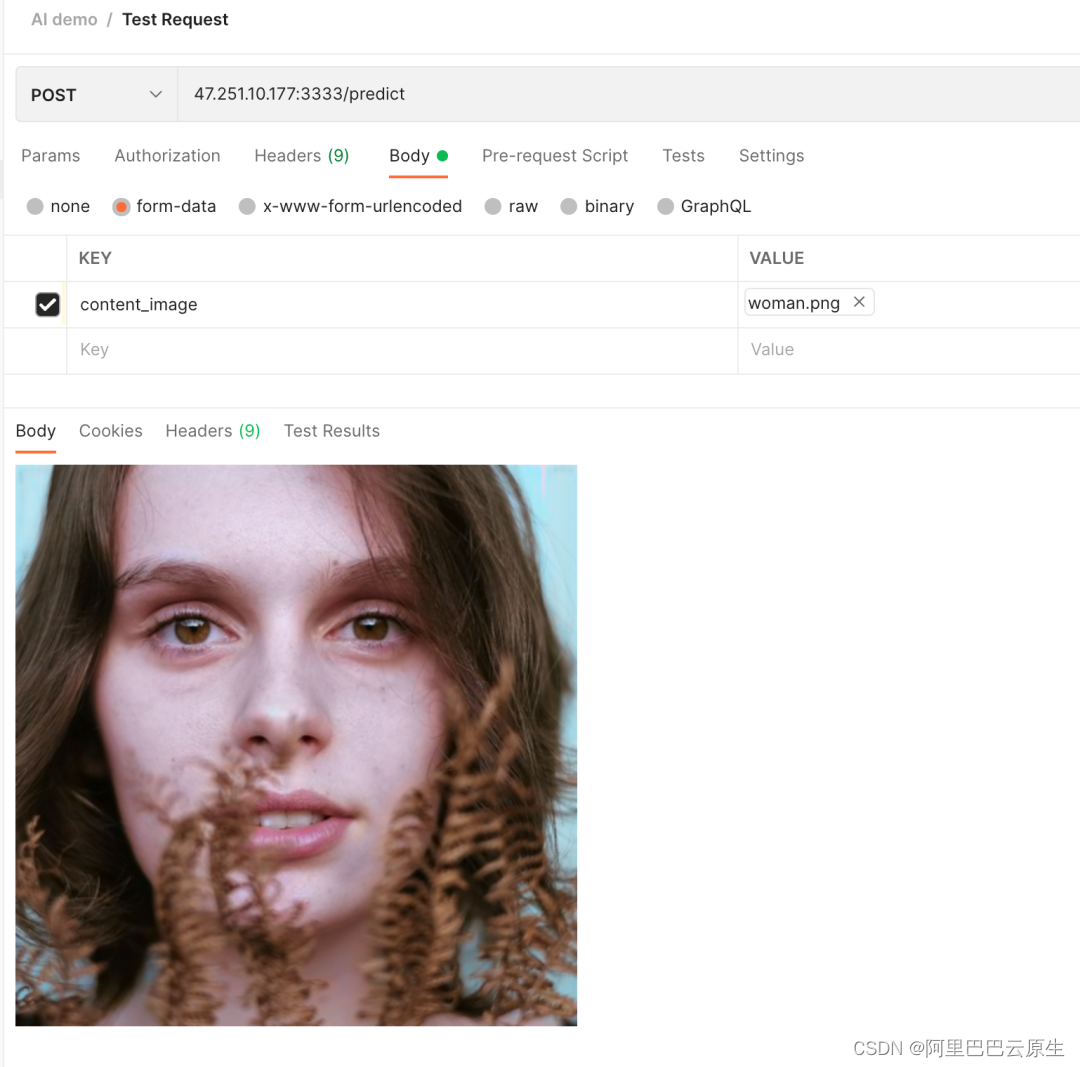

We choose a black-and-white photo of a woman as input:

After the request, the service returns a color image:

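## Model serving: canary testing

Besides starting a model service directly, we can also run multiple versions of a model within one model service and assign each version a different share of traffic for canary testing.

Deploy the following YAML. Both the v1 and v2 models are assigned 50% of the traffic. As before, we deploy a test service behind the model service: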

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: color-serving
  namespace: default
spec:
  components:
    - name: color-model-serving
      type: model-serving
      properties:
        protocol: tensorflow
        predictors:
          - name: model1
            replicas: 1
            # The v1 model receives 50% of the traffic
            traffic: 50
            graph:
              name: my-model
              implementation: tensorflow
              # Model location: the v1 model is stored under /model/v1 in the color-model PVC,
              # so pvc://color-model/model/v1 points to it
              modelUri: pvc://color-model/model/v1
          - name: model2
            replicas: 1
            # The v2 model receives 50% of the traffic
            traffic: 50
            graph:
              name: my-model
              implementation: tensorflow
              # Model location: the v2 model is stored under /model/v2 in the color-model PVC,
              # so pvc://color-model/model/v2 points to it
              modelUri: pvc://color-model/model/v2
    - name: color-rest-serving
      type: webservice
      dependsOn:
        - color-model-serving
      properties:
        image: fogdong/color-serving:v1
        exposeType: LoadBalancer
        env:
          - name: URL
            value: http://ambassador.vela-system.svc.cluster.local/seldon/default/color-model-serving/v1/models/my-model:predict
        ports:
          - port: 3333
            expose: true
```

Once the models are deployed, use `vela status <app-name> --endpoint` to view the model service endpoints:

```
$ vela status color-serving --endpoint
| CLUSTER | REF(KIND/NAMESPACE/NAME)           | ENDPOINT                                                 |
|---------|------------------------------------|----------------------------------------------------------|
|         | Service/vela-system/ambassador     | http://47.251.36.228/seldon/default/color-model-serving  |
|         | Service/vela-system/ambassador     | https://47.251.36.228/seldon/default/color-model-serving |
|         | Service/default/color-rest-serving | tcp://47.89.194.94:3333                                  |
```

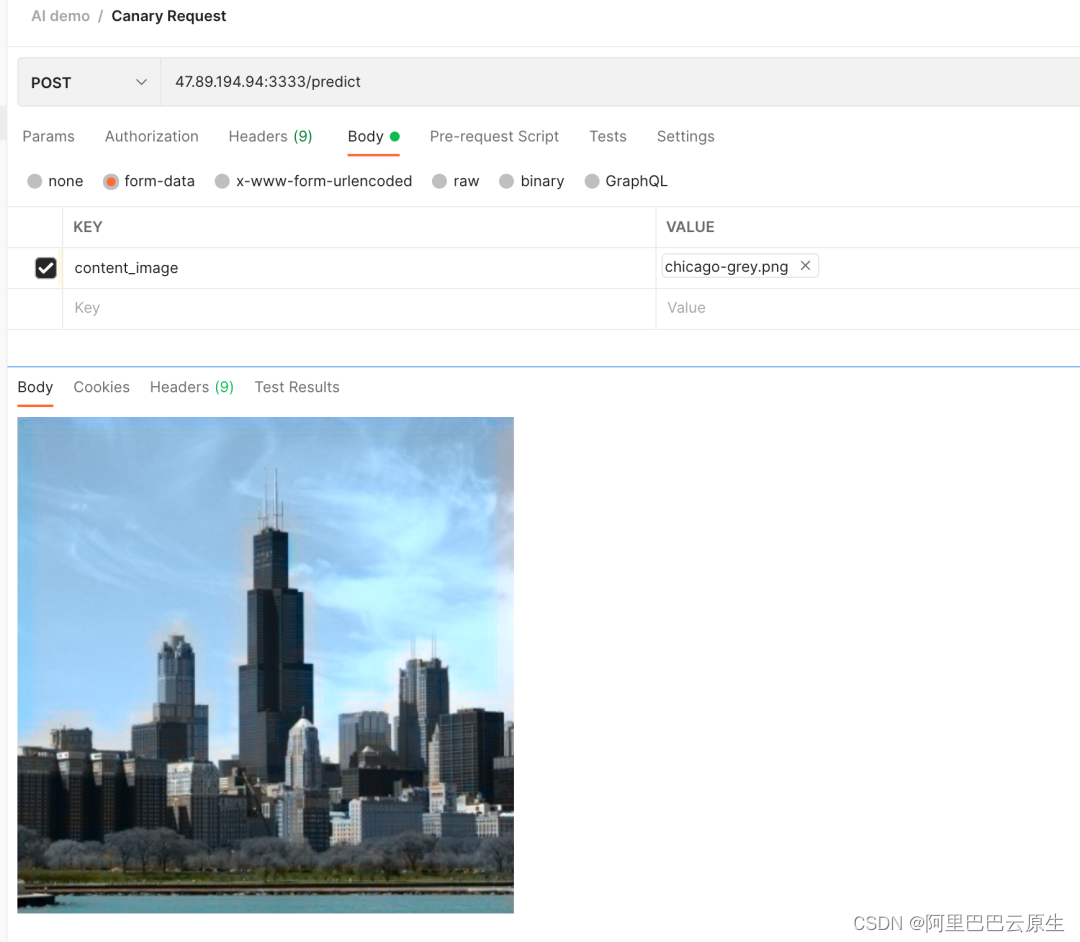

使用一张黑白的城市图片请求模型:

可以看到,第一次请求的结果如下。虽然天空和地面都被渲染成彩色了,但是城市本身还是黑白的:

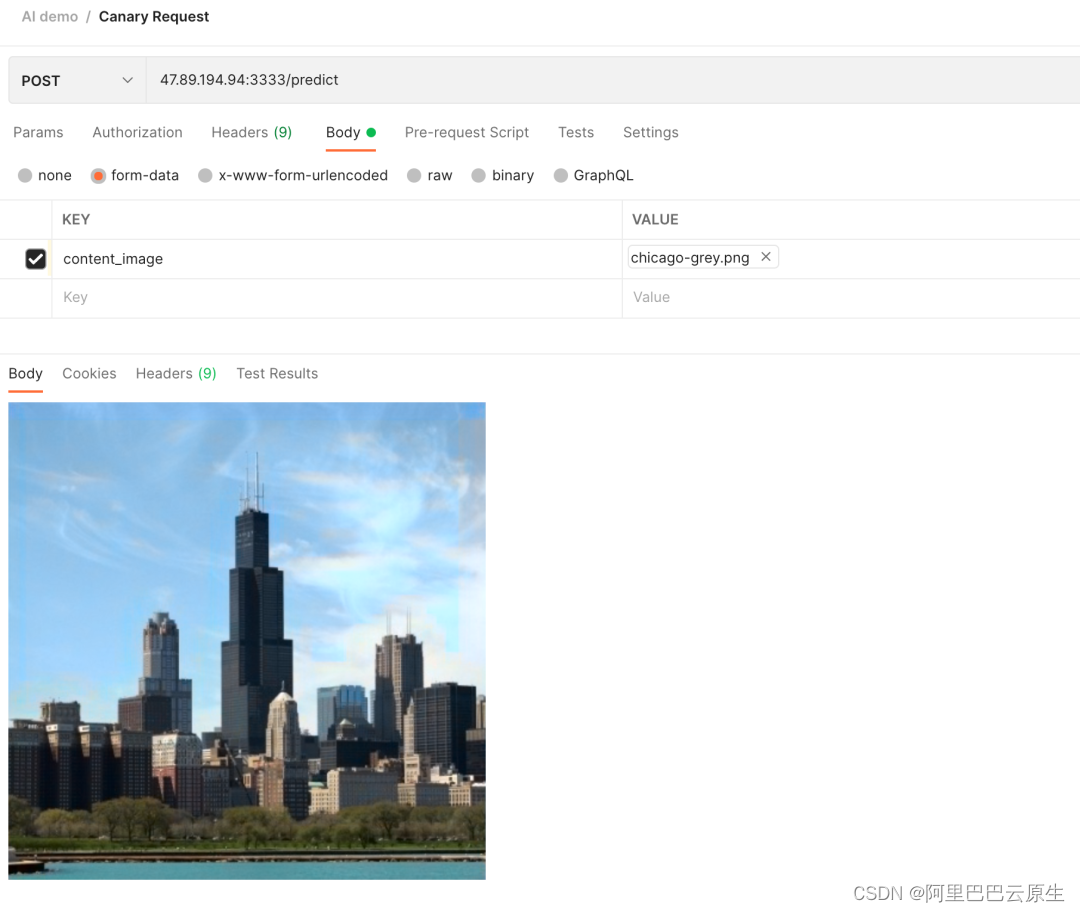

再次请求,可以看到,这次请求的结果中,天空、地面和城市都被渲染成了彩色:

Distributing traffic across different model versions helps us better evaluate the models' results.
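The two requests above returned different results. This is consistent with a 50/50 split, where each request may be served by either predictor. The Python sketch below models weighted routing to illustrate the idea. It is not Seldon Core's implementation. It assumes, as in the YAML above, that each predictor has an integer `traffic` percentage and that the percentages add up to 100. The names `pick_predictor` and `simulate` are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, Optional

# Traffic weights copied from the color-serving YAML above.
PREDICTORS: Dict[str, int] = {"model1": 50, "model2": 50}


def pick_predictor(predictors: Dict[str, int], draw: int) -> str:
    """Map an integer draw in [0, 100) onto predictors by cumulative traffic weight."""
    if sum(predictors.values()) != 100:
        raise ValueError("traffic weights must add up to 100")
    if not 0 <= draw < 100:
        raise ValueError("draw must be in [0, 100)")
    upper = 0
    for name, weight in predictors.items():
        upper += weight
        if draw < upper:
            return name
    raise AssertionError("unreachable: weights sum to 100 and draw < 100")


def simulate(predictors: Dict[str, int], requests: int,
             seed: Optional[int] = None) -> Counter:
    """Route simulated requests at random and count how many each predictor receives."""
    rng = random.Random(seed)
    return Counter(pick_predictor(predictors, rng.randrange(100))
                   for _ in range(requests))


if __name__ == "__main__":
    print(simulate(PREDICTORS, 1000, seed=42))
```

With weights such as 90 and 10, the same function would send roughly one request in ten to the second predictor. That is the usual shape of a canary rollout before the new version takes all of the traffic.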

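## Model serving: A/B testing

We can turn the same black-and-white image into a color image with one model. Alternatively, we can upload another style image and apply style transfer to the original.

Which do users prefer: the colorized image or the stylized one? We can explore this question with an A/B test.

Deploy the following YAML. Setting customRouting forwards requests whose headers contain `style: transfer` to the style-transfer model. It also makes the style-transfer model share an address with the colorization model.

Note: The style-transfer model comes from TensorFlow Hub[4].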

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: color-style-ab-serving
  namespace: default
spec:
  components:
    - name: color-ab-serving
      type: model-serving
      properties:
        protocol: tensorflow
        predictors:
          - name: model1
            replicas: 1
            graph:
              name: my-model
              implementation: tensorflow
              modelUri: pvc://color-model/model/v2
    - name: style-ab-serving
      type: model-serving
      properties:
        protocol: tensorflow
        # Style transfer takes a long time, so raise the timeout to keep requests from timing out
        timeout: "10000"
        customRouting:
          # Custom header to match
          header: "style: transfer"
          # Custom route target
          serviceName: "color-ab-serving"
        predictors:
          - name: model2
            replicas: 1
            graph:
              name: my-model
              implementation: tensorflow
              modelUri: pvc://style-model/model
    - name: ab-rest-serving
      type: webservice
      dependsOn:
        - color-ab-serving
        - style-ab-serving
      properties:
        image: fogdong/style-serving:v1
        exposeType: LoadBalancer
        env:
          - name: URL
            value: http://ambassador.vela-system.svc.cluster.local/seldon/default/color-ab-serving/v1/models/my-model:predict
        ports:
          - port: 3333
            expose: true
```

After a successful deployment, use `vela status <app-name> --endpoint` to view the model service endpoints:

```
$ vela status color-style-ab-serving --endpoint
| CLUSTER | REF(KIND/NAMESPACE/NAME)        | ENDPOINT                                              |
|---------|---------------------------------|-------------------------------------------------------|
|         | Service/vela-system/ambassador  | http://47.251.36.228/seldon/default/color-ab-serving  |
|         | Service/vela-system/ambassador  | https://47.251.36.228/seldon/default/color-ab-serving |
|         | Service/vela-system/ambassador  | http://47.251.36.228/seldon/default/style-ab-serving  |
|         | Service/vela-system/ambassador  | https://47.251.36.228/seldon/default/style-ab-serving |
|         | Service/default/ab-rest-serving | tcp://47.251.5.97:3333                                |
```

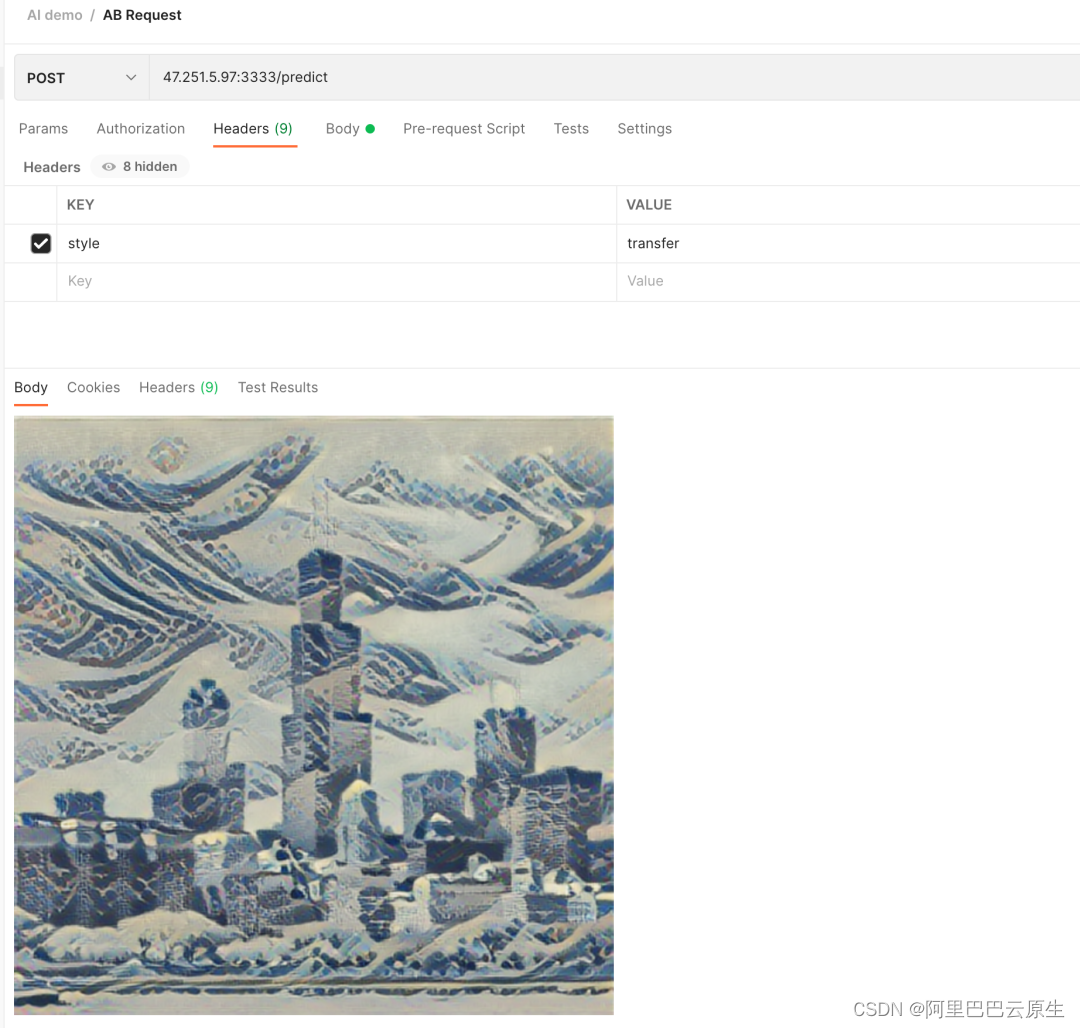

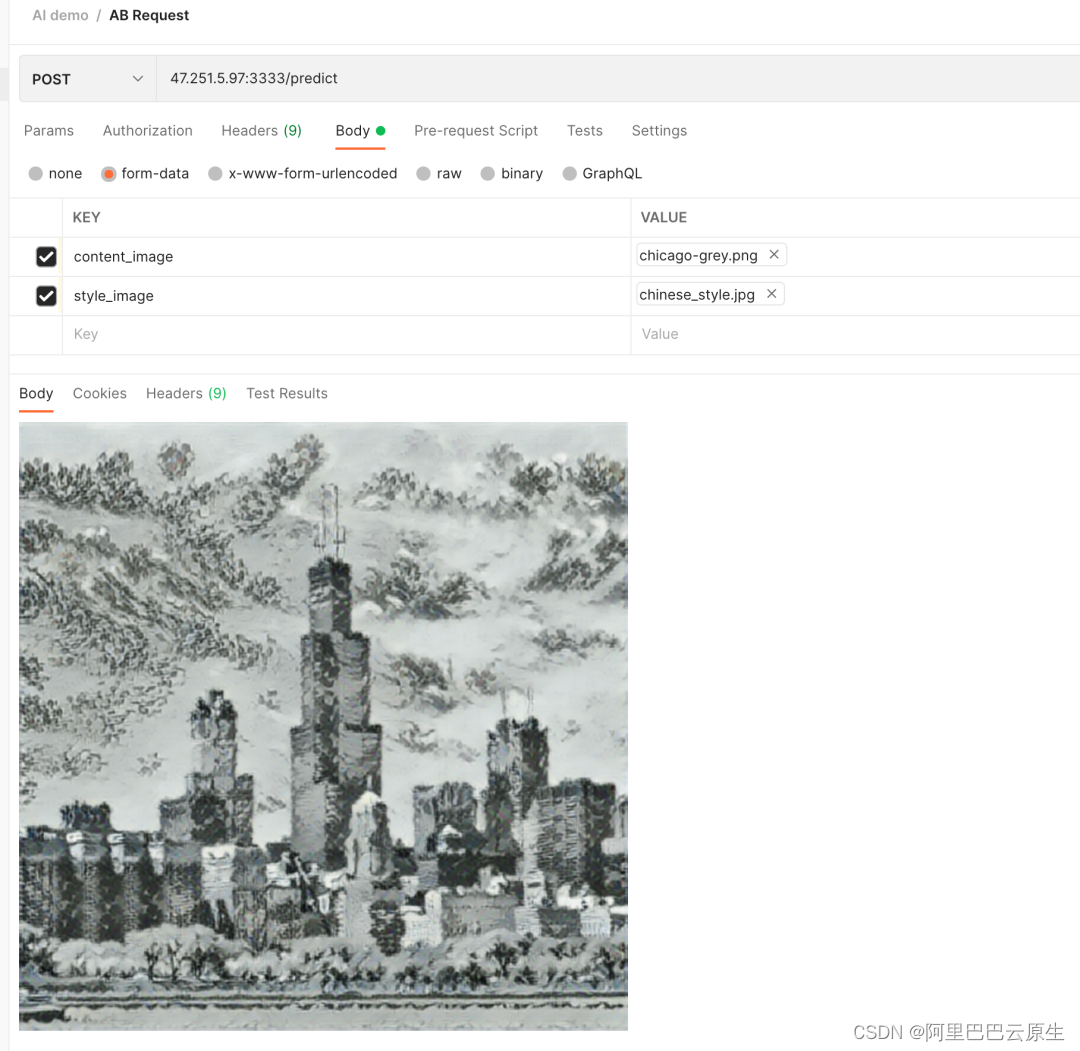

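In this application, each of the two model services has two addresses. However, the addresses of the second service, style-ab-serving, are invalid, because that model service has been routed into the color-ab-serving address. As before, we call the test service to see the model's effect.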
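Conceptually, the customRouting setting turns color-ab-serving's address into a switch on a request header. The Python below models that decision so that the behavior of the following requests is easy to predict. It is a hypothetical sketch of the routing rule described above, not how Seldon Core or the Ambassador gateway implement it. It assumes that header names match case-insensitively, as in HTTP generally, and that the value must match exactly.

```python
from typing import Mapping, Tuple

# Values taken from the color-style-ab-serving YAML above.
HEADER_RULE = "style: transfer"       # customRouting.header
DEFAULT_SERVICE = "color-ab-serving"  # colorization model; owns the shared address
CUSTOM_SERVICE = "style-ab-serving"   # style-transfer model; reached only via the header


def parse_header_rule(rule: str) -> Tuple[str, str]:
    """Split a rule such as "style: transfer" into a lower-cased name and a value."""
    name, sep, value = rule.partition(":")
    if not sep:
        raise ValueError(f"expected 'name: value', got {rule!r}")
    return name.strip().lower(), value.strip()


def route(headers: Mapping[str, str], rule: str = HEADER_RULE) -> str:
    """Decide which model service answers a request sent to the shared address."""
    name, value = parse_header_rule(rule)
    # HTTP header names are case-insensitive, so compare them in lower case;
    # the value is assumed to need an exact match.
    normalized = {k.lower(): v.strip() for k, v in headers.items()}
    return CUSTOM_SERVICE if normalized.get(name) == value else DEFAULT_SERVICE


if __name__ == "__main__":
    print(route({}))                     # no header: colorization model
    print(route({"style": "transfer"}))  # header present: style-transfer model
```

This also explains why the style-ab-serving addresses listed above are not usable on their own. In this model, the style-transfer model is only reachable through the shared color-ab-serving address plus the header.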

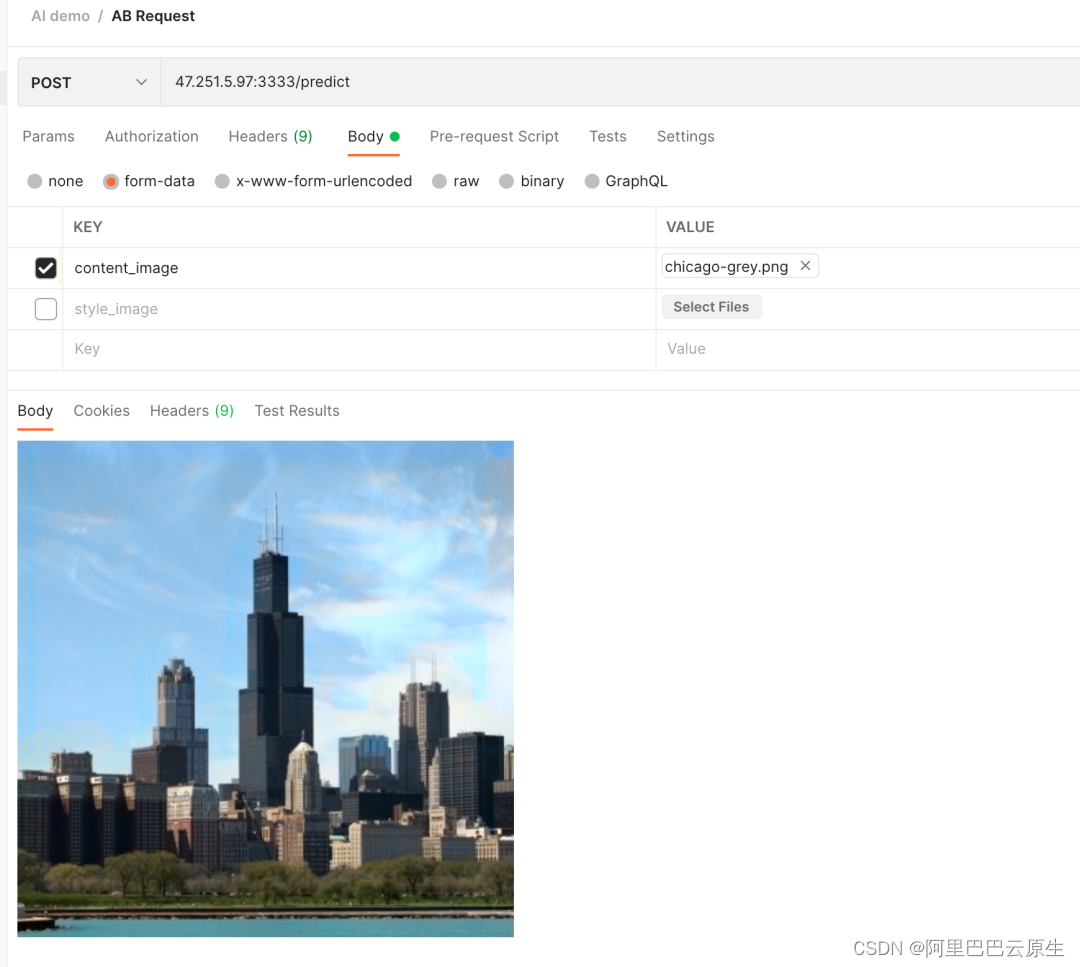

首先,在不加 header 的情况下,图像会从黑白变为彩色:

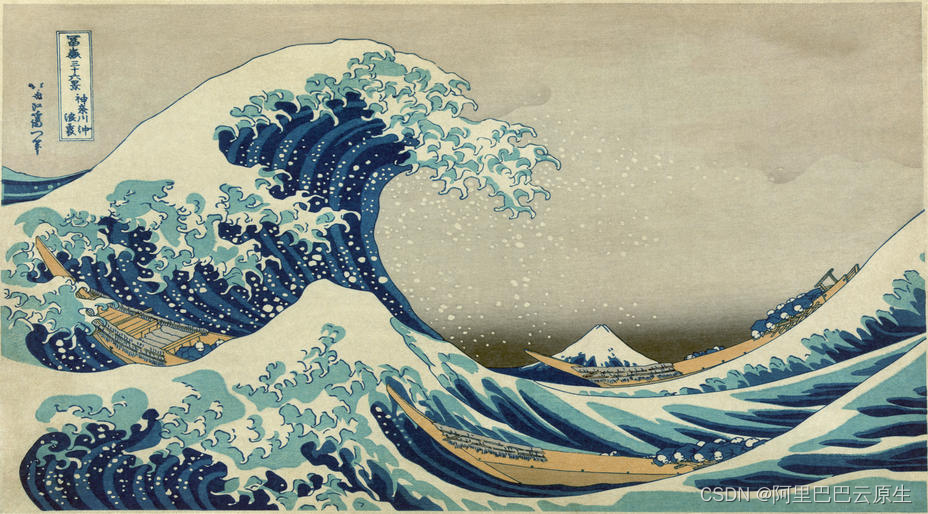

我们添加一个海浪的图片作为风格渲染:

We add the `style: transfer` header to this request, and the city is rendered in the style of the waves:
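From the client side, the only difference between the two kinds of request is the extra header. The sketch below builds both with Python's standard `urllib.request`, sending straight to the shared model address instead of going through the test service. `build_request` and `send` are hypothetical helpers, and the request payload depends on what each model expects. The 10-second client timeout assumes that the `timeout: "10000"` value in the YAML is in milliseconds.

```python
import json
import urllib.request

# Shared predict address of the colorization and style-transfer models (from the YAML above).
AB_URL = ("http://ambassador.vela-system.svc.cluster.local"
          "/seldon/default/color-ab-serving/v1/models/my-model:predict")

# Assumption: timeout: "10000" in the YAML is in milliseconds, so the client waits at least 10 s.
CLIENT_TIMEOUT_SECONDS = 10.0


def build_request(payload: dict, style_transfer: bool,
                  url: str = AB_URL) -> urllib.request.Request:
    """Build a JSON predict request; add the routing header only for style transfer."""
    headers = {"Content-Type": "application/json"}
    if style_transfer:
        headers["style"] = "transfer"  # matches customRouting.header "style: transfer"
    return urllib.request.Request(url, data=json.dumps(payload).encode("utf-8"),
                                  headers=headers, method="POST")


def send(req: urllib.request.Request) -> dict:
    """Send the request and decode the JSON reply (requires network access to the cluster)."""
    with urllib.request.urlopen(req, timeout=CLIENT_TIMEOUT_SECONDS) as resp:
        return json.loads(resp.read())
```

Keeping the header logic in one place means the A/B decision lives with the caller. The model services and their shared address stay unchanged between the two variants.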

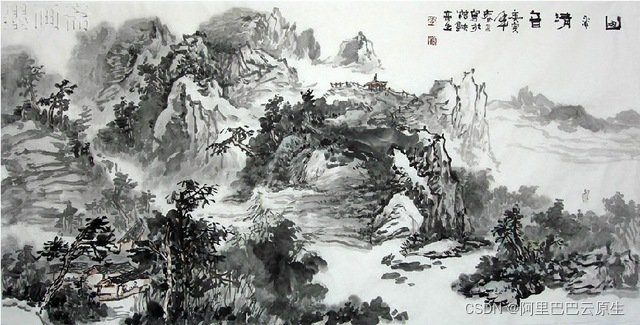

We can also use an ink-wash painting as the style reference:

This time, the city is rendered in ink-wash painting style:

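## Summary

The KubeVela AI plugin helps you train and serve models more conveniently.

Beyond that, we can use KubeVela's multi-environment capability to deliver models that have already been tested to different environments. This enables flexible model deployment.

## References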

[1] KubeVela Samples

https://github.com/oam-dev/samples/tree/master/11.Machine_Learning_Demo

[2] KubeVela AI plugin documentation

https://kubevela.io/zh/docs/next/reference/addons/ai

[3] emilwallner/Coloring-greyscale-images

https://github.com/emilwallner/Coloring-greyscale-images

[4] TensorFlow Hub

https://tfhub.dev/google/magenta/arbitrary-image-stylization-v1-256/2
