Author: Li Kai (Kaiyi)

EventBridge Integration Overview

EventBridge is a serverless event bus service from Alibaba Cloud. Its goal is to expand the event ecosystem, break down data silos between systems, and build an event integration ecosystem. It provides unified, standardized event access and management and improves integration channels. This helps customers quickly implement the core atomic capabilities of event-driven architectures and integrate EventBridge into BPM, RPA, CRM, and other systems.

EventBridge grows its event ecosystem on three pillars: event standardization, access standardization, and component standardization.

• Event standardization: EventBridge adopts the CloudEvents 1.0 standard from the open source community, natively supports the CloudEvents community SDKs and APIs, and fully embraces the open source event standard ecosystem;

• Access standardization: EventBridge provides a standard event push protocol, PutEvent, and supports two event access models, Pull and Push. This lowers the effort of connecting event sources and provides a complete, standardized process for bringing events onto the cloud;

• Component standardization: EventBridge packages a standard toolchain for downstream event processing, including schema registration, event analysis, event retrieval, and event dashboards, which together form a complete event toolchain ecosystem.
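To make the event standardization point concrete, here is a minimal Python sketch of a CloudEvents 1.0 event in structured JSON form. The attribute names (`specversion`, `id`, `source`, `type`, and the optional `subject`, `time`, `datacontenttype`, `data`) come from the CloudEvents 1.0 specification. The `user:Registered` type and the helper functions are hypothetical, and the sketch does not call the PutEvent API; it only builds the envelope a producer would submit.

```python
import json
import uuid
from datetime import datetime, timezone

# Attributes that the CloudEvents 1.0 specification marks as REQUIRED.
REQUIRED_ATTRIBUTES = ("id", "source", "specversion", "type")


def make_cloudevent(source, event_type, data, subject=None):
    """Build a CloudEvents 1.0 event (structured JSON mode) as a dict."""
    event = {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),          # must be unique within the source
        "source": source,                 # identifies the event producer
        "type": event_type,               # what kind of occurrence this is
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        "data": data,
    }
    if subject is not None:
        event["subject"] = subject        # optional: the resource the event is about
    return event


def validate(event):
    """Return the REQUIRED attributes that are missing or empty."""
    return [name for name in REQUIRED_ATTRIBUTES if not event.get(name)]


if __name__ == "__main__":
    evt = make_cloudevent("my.user", "user:Registered", {"name": "Tom", "age": 12})
    print(json.dumps(evt, indent=2))
    print("missing:", validate(evt))
```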

For integration, EventBridge focuses on two core scenarios: event integration and data integration. Both are described in detail below.

Event integration

EventBridge currently supports more than 80 cloud product event sources and more than 800 event types, and the event ecosystem continues to grow.

So, how does EventBridge implement event integration for cloud products?

• First, the EventBridge console contains an event bus named default, and cloud product events are delivered to this bus;

• Then click Create Rule and select the cloud products and related events you care about. The rule listens for those events and delivers them.
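Conceptually, a rule on the default bus compares each incoming event with an event pattern. The sketch below implements a simplified version of that check, where each pattern field lists the accepted values and nested objects are matched recursively. The `acs.oss` source and the event type strings are illustrative values, and EventBridge's real pattern language supports more operators than this.

```python
def matches(pattern: dict, event: dict) -> bool:
    """Simplified event-pattern check: every key in the pattern must match.

    - a list means "the event value must equal one of these values"
    - a nested dict means "recurse into the nested object"
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


if __name__ == "__main__":
    # Illustrative rule: only OSS PutObject events.
    rule = {"source": ["acs.oss"], "type": ["oss:ObjectCreated:PutObject"]}
    upload = {"source": "acs.oss", "type": "oss:ObjectCreated:PutObject", "data": {}}
    other = {"source": "acs.ecs", "type": "ecs:Instance:StateChange", "data": {}}
    print(matches(rule, upload), matches(rule, other))  # True False
```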

Let's walk through two examples to see how events are integrated with EventBridge.

OSS event integration

We use the OSS event source as an example to show how OSS events are integrated.

OSS events currently fall into four main categories: operation audit events, cloud monitoring events, configuration audit events, and OSS product events such as file uploads through PutObject. Event sources of other cloud products are similar and can generally be divided into the same categories.

The following demo builds an event-driven online file decompression service:

• The OSS bucket contains a zip folder that stores the files to be decompressed and an unzip folder that stores the decompressed files;

• When a file is uploaded to the OSS bucket, a file upload event is triggered and delivered to the EventBridge bus dedicated to cloud service events;

• An event rule then filters for events from the zip folder and delivers them to the HTTP endpoint of the decompression service;

• On receiving an event, the decompression service uses the file path in the event to download the file from OSS. It then decompresses the file and uploads the extracted files to the unzip folder;

• Meanwhile, a second event rule listens for file upload events in the unzip folder, transforms them, and pushes them to a DingTalk group.
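The core of the decompression service can be sketched in a few lines of Python. This is not the code from the linked oss-unzip repository. It assumes the object key is at `data.oss.object.key` in the event, and it leaves out the OSS download and upload calls. Instead, it takes the archive as bytes and returns the files to write under `unzip/`. The `plan_unzip` name is hypothetical.

```python
import io
import posixpath
import zipfile


def plan_unzip(event, zip_bytes, src_prefix="zip/", dst_prefix="unzip/"):
    """Map each file in an uploaded archive to its destination key under dst_prefix.

    Assumption: the object key is at event["data"]["oss"]["object"]["key"].
    The caller downloads the archive bytes from OSS and uploads the returned
    {destination_key: file_bytes} pairs back to the bucket.
    """
    key = event["data"]["oss"]["object"]["key"]
    if not key.startswith(src_prefix):
        return {}  # not an upload to the zip folder; the rule should already exclude it
    outputs = {}
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for info in archive.infolist():
            if info.is_dir():
                continue
            member = posixpath.normpath(info.filename)
            # Skip entries that would escape the unzip folder ("zip slip").
            if member.startswith("..") or posixpath.isabs(member):
                continue
            outputs[posixpath.join(dst_prefix, member)] = archive.read(info)
    return outputs


if __name__ == "__main__":
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        zf.writestr("docs/readme.txt", "hello")
    event = {"data": {"oss": {"object": {"key": "zip/eventbridge.zip"}}}}
    print(plan_unzip(event, buf.getvalue()))  # {'unzip/docs/readme.txt': b'hello'}
```

Keeping the function free of OSS calls makes the path handling testable on its own. Writing to a different prefix than the one the first rule watches is what prevents the service from re-triggering itself.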

Let's take a look at how this is accomplished:

Go to the link below to view the video:

https://www.bilibili.com/video/BV1s44y1g7dk/

1) First, create a bucket with a zip directory for the uploaded compressed files and an unzip directory for the decompressed files.

2) Deploy the decompression service and expose a public endpoint.

The source code of the decompression service is available at:

https://github.com/AliyunContainerService/serverless-k8s-examples/tree/master/oss-unzip?spm=a2c6h.12873639.article-detail.15.5a585d52apSWbk

You can also deploy it directly with ASK (Serverless Kubernetes); the YAML file is at:

https://github.com/AliyunContainerService/serverless-k8s-examples/blob/master/oss-unzip/hack/oss-unzip.yaml

3) Create an event rule that listens for file upload events in the zip directory and delivers them to the HTTP endpoint of the decompression service.

This rule matches on the subject field to select the zip directory.

4) Create another event rule that listens for events in the unzip directory and delivers them to the DingTalk group.

This rule also matches on the subject field, this time selecting the unzip directory.
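The two rules differ only in the subject prefix they match. The sketch below assumes an OSS subject of the form `acs:oss:<region>:<account-id>:<bucket>/<object-key>` and uses prefix conditions. The region, account ID, and bucket name are placeholders.

```python
# Hypothetical subject base: acs:oss:<region>:<account-id>:<bucket>/
SUBJECT_BASE = "acs:oss:cn-hangzhou:123456789:my-bucket/"

# Rule 3): zip folder -> decompression service; rule 4): unzip folder -> DingTalk.
ZIP_RULE = {"subject": [{"prefix": SUBJECT_BASE + "zip/"}]}
UNZIP_RULE = {"subject": [{"prefix": SUBJECT_BASE + "unzip/"}]}


def subject_matches(rule, event):
    """True if the event subject satisfies any condition in the rule.

    A condition is either an exact string or a {"prefix": ...} object.
    """
    subject = event.get("subject", "")
    for cond in rule["subject"]:
        if isinstance(cond, dict) and subject.startswith(cond["prefix"]):
            return True
        if isinstance(cond, str) and subject == cond:
            return True
    return False


if __name__ == "__main__":
    upload = {"subject": SUBJECT_BASE + "zip/eventbridge.zip"}
    extracted = {"subject": SUBJECT_BASE + "unzip/readme.txt"}
    print(subject_matches(ZIP_RULE, upload), subject_matches(UNZIP_RULE, upload))        # True False
    print(subject_matches(ZIP_RULE, extracted), subject_matches(UNZIP_RULE, extracted))  # False True
```

Including the bucket path in each prefix keeps the two rules disjoint. A subject under `unzip/` never starts with `<bucket>/zip/`, so the extracted files do not re-trigger the decompression service.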

For the configuration of variables and templates, please refer to the official documentation:

https://help.aliyun.com/document_detail/181429.html
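The variable-plus-template transformation works in two steps. First, values are extracted with JSONPath; then they are substituted into `${name}` placeholders. The Python sketch below supports only simple dotted paths such as `$.data.oss.object.key`. The event fields and message text are assumptions for illustration, and the official documentation describes the full syntax.

```python
import re


def resolve_path(event, path):
    """Resolve a dotted JSONPath subset such as "$.data.oss.object.key".

    Array indexing, wildcards, and filters are intentionally not supported.
    """
    if not path.startswith("$"):
        raise ValueError(f"not a JSONPath expression: {path}")
    value = event
    for part in filter(None, path[1:].split(".")):
        value = value[part]
    return value


def render(event, variables, template):
    """Extract each variable with JSONPath, then fill the ${name} placeholders."""
    values = {name: resolve_path(event, path) for name, path in variables.items()}
    return re.sub(r"\$\{(\w+)\}", lambda m: str(values[m.group(1)]), template)


if __name__ == "__main__":
    event = {"data": {"oss": {"bucket": {"name": "my-bucket"},
                              "object": {"key": "unzip/readme.txt"}}}}
    variables = {"bucket": "$.data.oss.bucket.name", "key": "$.data.oss.object.key"}
    template = "File ${key} in bucket ${bucket} has been decompressed."
    # Prints: File unzip/readme.txt in bucket my-bucket has been decompressed.
    print(render(event, variables, template))
```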

EventBridge extracts values from the event with JSONPath and stores them in variables. It then renders the final output from the template and delivers it to the event target. For the event format of the OSS event source, see the official documentation:

https://help.aliyun.com/document_detail/205739.html#section-g8i-7p9-xpk

Define variables with JSONPath according to your business needs.

5) Finally, upload a file through the OSS console to verify the setup.

The uploaded eventbridge.zip has been decompressed and its contents uploaded to the unzip directory, and the DingTalk group receives a notification that decompression is complete. You can also use event tracking to see where each event was delivered.

There are two upload events: one for the file uploaded through the console, and one for the file the decompression service uploaded after extracting the archive.

The event traces show that both were delivered successfully, to the HTTP endpoint of the decompression service and to the DingTalk robot respectively.

Integrating cloud products with custom event sources and cloud product event targets

The previous demo integrated a cloud service event source. The next demo shows how to integrate cloud products by combining a custom event source with a cloud product event target.

Go to the link below to view the video:

https://www.bilibili.com/video/BV1QF411M7xv/

This demo uses EventBridge to clean data automatically and deliver it to RDS. Each event is a JSON object with two fields, name and age. The goal is to keep only users older than 10 and store them in RDS.

The overall architecture is shown in the figure. An MNS queue serves as a custom event source, EventBridge filters and transforms the events, and the results are written directly to RDS.

1) First, an MNS queue, an RDS instance, and a database table have been created in advance. The table structure is as follows:

2) Create a custom event bus, choose MNS as the event provider, and select the queue created in advance.

After the bus is created, a running event source appears in the event source list.

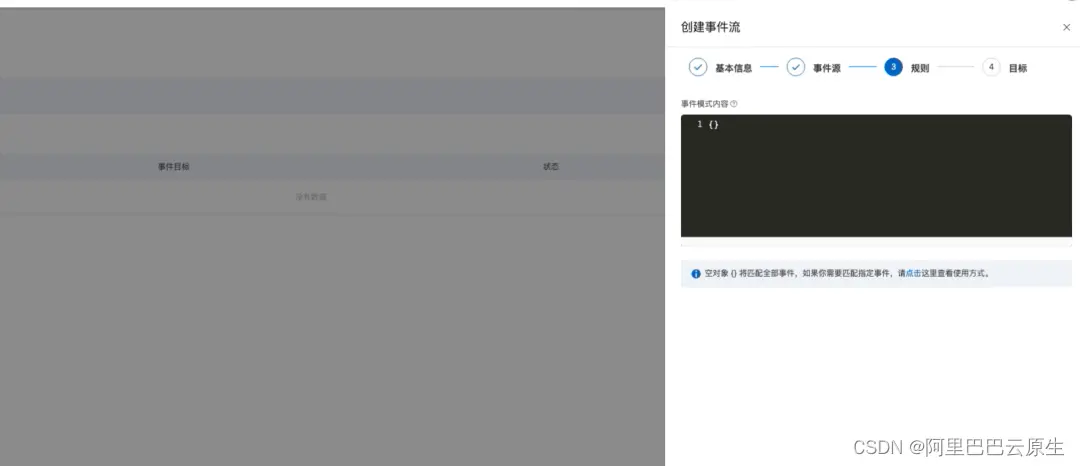

3) Next, create a rule that delivers events to RDS.

The event pattern is configured as follows:

```json
{
  "source": [
    "my.user"
  ],
  "data": {
    "messageBody": {
      "age": [
        {
          "numeric": [
            ">",
            10
          ]
        }
      ]
    }
  }
}
```

For numeric matching, see the official documentation:

https://help.aliyun.com/document_detail/181432.html#section-dgh-5cq-w6c
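To see why a message with age greater than 10 passes while one below 10 is dropped, here is a simplified Python evaluator for the pattern above. It handles exact-value lists, nested objects, and `numeric` conditions written as operator/bound pairs. The operator set is an assumption of this sketch, which is not a full reimplementation of EventBridge matching.

```python
import operator

# Comparison operators assumed for this sketch.
OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt,
       "<=": operator.le, "=": operator.eq}


def numeric_ok(value, spec):
    """spec is a flat list of (operator, bound) pairs, e.g. [">", 0, "<=", 5]."""
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        return False  # numeric conditions only apply to numbers
    return all(OPS[op](value, bound) for op, bound in zip(spec[::2], spec[1::2]))


def value_ok(value, conditions):
    """A field matches if any condition in its list matches."""
    for cond in conditions:
        if isinstance(cond, dict) and "numeric" in cond:
            if numeric_ok(value, cond["numeric"]):
                return True
        elif value == cond:
            return True
    return False


def matches(pattern, event):
    """Every field in the pattern must be present in the event and match."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif not value_ok(actual, expected):
            return False
    return True


# The event pattern from step 3), written as a Python dict.
PATTERN = {"source": ["my.user"],
           "data": {"messageBody": {"age": [{"numeric": [">", 10]}]}}}

if __name__ == "__main__":
    older = {"source": "my.user", "data": {"messageBody": {"name": "Tom", "age": 12}}}
    younger = {"source": "my.user", "data": {"messageBody": {"name": "Amy", "age": 8}}}
    print(matches(PATTERN, older), matches(PATTERN, younger))  # True False
```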

4) Click Next, choose a database as the event target, fill in the database information, configure the transformation rules, and finish creating the rule.
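At its core, the transformation to a database row maps event fields to table columns. The sketch below uses Python's built-in `sqlite3` as a local stand-in for RDS. The `users` table, its `name`/`age` columns, and the mapping are hypothetical, because the actual table structure is configured in the console. A MySQL driver would use a different placeholder style than `?`.

```python
import sqlite3

# Hypothetical mapping from table columns to nested event fields.
COLUMN_MAPPING = {
    "name": ("data", "messageBody", "name"),
    "age": ("data", "messageBody", "age"),
}


def extract(event, path):
    """Walk nested dict keys, e.g. ("data", "messageBody", "age")."""
    value = event
    for key in path:
        value = value[key]
    return value


def write_event(conn, event, table="users"):
    """Insert one row built from the event; values are bound as parameters."""
    columns = list(COLUMN_MAPPING)
    values = [extract(event, COLUMN_MAPPING[c]) for c in columns]
    placeholders = ", ".join("?" for _ in columns)
    # Table and column names come from trusted configuration, never from the event.
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
    conn.execute(sql, values)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # local stand-in for the RDS database
    conn.execute("CREATE TABLE users (name TEXT, age INTEGER)")
    write_event(conn, {"source": "my.user", "data": {"messageBody": {"name": "Tom", "age": 12}}})
    print(conn.execute("SELECT name, age FROM users").fetchall())  # [('Tom', 12)]
```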

5) Finally, send a message whose age is greater than 10 to the MNS queue.

This event is written to RDS.

Next, send a message whose age is less than 10 to the MNS queue.

This event is filtered out and is not written to RDS.

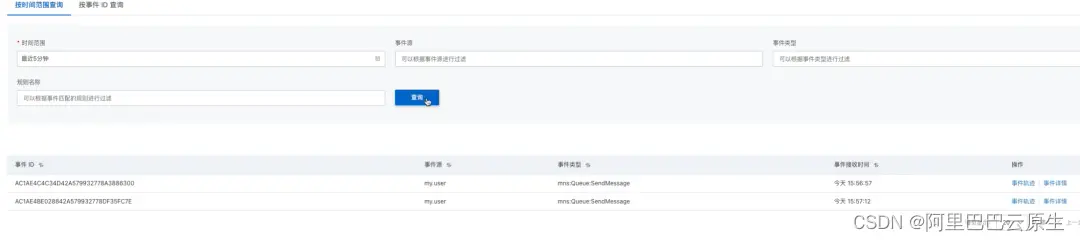

Events can also be inspected through event tracking:

The trace shows that one event was delivered to RDS successfully, while the other was filtered out and not delivered.

Data integration

An event stream is a lightweight, real-time, end-to-end channel that EventBridge provides for data integration. Its main purpose is to synchronize events between two endpoints while providing filtering and transformation. Event streams currently support Alibaba Cloud messaging products.

Unlike the event bus model, an event stream does not need an event bus. Its 1:1 model is more lightweight, and delivering events directly to the target makes them more real-time. With event streams, you can integrate different systems through cross-protocol conversion, data synchronization, and cross-region backup.
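The difference from the bus model is easiest to see as a pipeline. There is one source, one optional filter and transform, and one target, with nothing in between. This Python sketch models that 1:1 shape in memory. The `EventStream` class and its fields are hypothetical and only illustrate the flow.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List


@dataclass
class EventStream:
    """A 1:1 pipeline: one source, one target, no event bus in between."""
    filter_fn: Callable[[dict], bool]      # which events to forward
    transform_fn: Callable[[dict], Any]    # how to reshape each forwarded event
    target: List[Any]                      # stand-in for the downstream endpoint

    def run(self, source: Iterable[dict]) -> int:
        """Push every matching event straight to the target; return how many were sent."""
        delivered = 0
        for event in source:
            if not self.filter_fn(event):
                continue  # filtered events are dropped, not delivered
            self.target.append(self.transform_fn(event))
            delivered += 1
        return delivered


if __name__ == "__main__":
    sink = []
    stream = EventStream(
        filter_fn=lambda e: e.get("region") == "cn-hangzhou",
        transform_fn=lambda e: {"body": e["body"]},
        target=sink,
    )
    sent = stream.run([
        {"region": "cn-hangzhou", "body": "a"},
        {"region": "cn-shanghai", "body": "b"},
    ])
    print(sent, sink)  # 1 [{'body': 'a'}]
```

Because there is no bus, there is no rule fan-out: each stream has exactly one destination, which is what keeps the model lightweight.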

The following example shows how to use an event stream to route RocketMQ messages to an MNS queue and thereby integrate the two products.

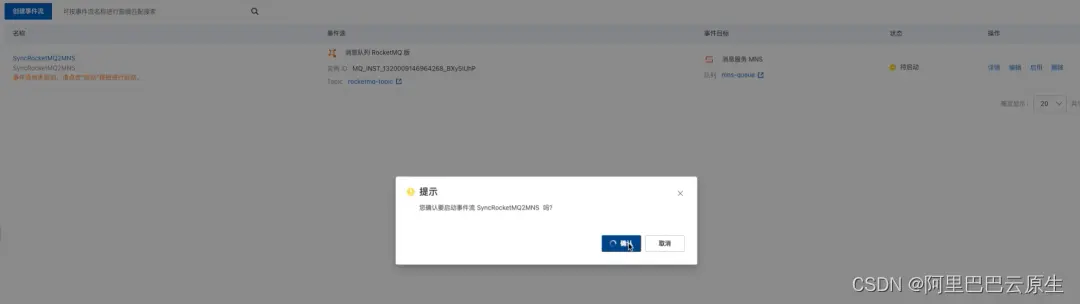

As shown in the figure, RocketMQ messages with the tag mns are routed to the MNS queue through EventBridge.

Let's see how it is done:

Go to the link below to view the video:

https://www.bilibili.com/video/BV1D44y1G7GK/

• First, create an event stream, select the source RocketMQ instance, and set the Tag to mns.

• Leave the event pattern empty so that it matches all events.

• Select MNS as the target, choose the target queue, and finish creating the stream.

• Once the stream is created, click Start to start the event stream task.

After the event stream starts, send messages to the source RocketMQ topic through the console or the SDK. Messages with the tag mns are routed to MNS; messages with any other tag are not.
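The routing behavior in this demo can be simulated in a few lines. Only messages tagged `mns` are forwarded. An empty pattern matches everything, because `all()` over no conditions is `True`. The message shape and the `route` function are assumptions for illustration, and the list stands in for the MNS queue.

```python
def route(messages, tag, pattern, target):
    """Forward messages carrying `tag` whose body matches `pattern` to `target`.

    pattern maps a body field to a list of accepted values; an empty pattern
    matches every message, like leaving the event pattern blank in the console.
    """
    forwarded = 0
    for msg in messages:
        if msg.get("tag") != tag:
            continue  # tag filter configured on the RocketMQ source
        body = msg["body"]
        if all(body.get(field) in accepted for field, accepted in pattern.items()):
            target.append(body)
            forwarded += 1
    return forwarded


if __name__ == "__main__":
    mns_queue = []  # hypothetical stand-in for the target MNS queue
    sent = route(
        [{"tag": "mns", "body": {"id": 1}}, {"tag": "other", "body": {"id": 2}}],
        tag="mns",
        pattern={},
        target=mns_queue,
    )
    print(sent, mns_queue)  # 1 [{'id': 1}]
```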

Summary

This article showed how to use EventBridge to integrate cloud product event sources and cloud product event targets, and how to integrate messaging products through event streams. To learn more about EventBridge, scan the QR code below to join the DingTalk group.

Click here to learn more about EventBridge.