Author: Zhang Kai

Foreword

Cloud native [1] has been one of the fastest-growing and most closely watched directions in cloud computing over the past five years. The 2021 annual survey report [2] of the CNCF (Cloud Native Computing Foundation) shows that there are already more than 6.8 million cloud native developers worldwide. Over the same period, artificial intelligence has continued to flourish, driven by the combination of "deep learning algorithms + GPU computing power + massive data". Interestingly, cloud native technology and AI, especially deep learning, are closely related.

A large number of AI algorithm engineers use cloud-native container technology to debug and run deep learning tasks, and many enterprise AI applications and AI systems are built on container clusters. To help users build AI systems on containers more easily and efficiently, and to improve their ability to deliver AI applications, Alibaba Cloud Container Service for Kubernetes (ACK) launched a cloud-native AI suite in 2021. This article shares our thinking on, and positioning within, the emerging field of cloud-native AI, and introduces the core scenarios, architecture, and main capabilities of the cloud-native AI suite.

A Brief History of AI and Cloud Native

Looking back at the history of AI, we find that it is not a new field. From its first definition at the Dartmouth workshop in 1956 to the Deep Belief Network proposed by Geoffrey Hinton in 2006, AI has gone through three waves of development. Over the past 10 years in particular, AI has made remarkable progress, with deep learning as the core algorithm, fueled by the accumulation of massive production data and by the large-scale computing power of GPUs. Unlike the previous two waves, this wave has delivered breakthroughs in machine vision, speech recognition, and natural language understanding, has been successfully applied in many industries such as commerce, healthcare, education, manufacturing, security, and transportation, and has even given rise to new fields such as autonomous driving and AIoT.

However, as AI technology advances rapidly and spreads widely, many enterprises and institutions have found that it is not easy to keep the "algorithm + computing power + data" flywheel running efficiently and to produce commercially valuable AI capabilities at scale. Expensive computing investment and operation and maintenance (O&M) costs, low productivity of AI services, and AI algorithms that lack interpretability and generality have all become barriers for AI users.

Like AI, containers, the representative technology of the cloud native field, are not a new concept. Their history can be traced back to the UNIX chroot technology of 1979, which was the first prototype of a container. A series of kernel-based lightweight resource isolation technologies followed, such as FreeBSD Jails, Linux VServer, OpenVZ, and Warden. Then Docker, born in 2013, redefined how data center computing resources are consumed, with its well-designed container encapsulation, standard APIs, and friendly development and O&M experience. We all know the story that followed: Kubernetes won the race against Docker Swarm and Mesos and became the de facto standard for container orchestration and scheduling. Kubernetes and containers (including Docker, containerd, CRI-O, and other container runtimes and management tools) make up the core of cloud native technology as defined by CNCF. After five years of rapid development, the cloud native ecosystem now covers every aspect of IT systems, including container runtimes, networking, storage and cluster management, observability, elasticity, DevOps, service mesh, serverless architecture, databases, and data warehouses.

Why cloud-native AI is emerging

According to the CNCF 2021 annual survey, 96% of surveyed enterprises are using or evaluating Kubernetes, and their needs include many AI-related workloads. Gartner has predicted that by 2023, 70% of AI applications will be developed using containers and serverless technologies.

In practice, while developing Alibaba Cloud Container Service for Kubernetes (ACK) [3], we have seen more and more users who want to manage GPU resources in Kubernetes container clusters, develop and run deep learning and big data tasks, and deploy and elastically manage AI services. These users come from all walks of life: fast-growing sectors such as the Internet, gaming, and live streaming; emerging fields such as autonomous driving and AIoT; and even traditional industries such as government and enterprise, finance, and manufacturing.

Users encounter many common challenges when developing, producing, and using AI capabilities, including a high barrier to AI development, low engineering efficiency, high costs, complex software environment maintenance, fragmented management of heterogeneous hardware, uneven distribution of computing resources, and cumbersome storage access. Many of these users already use cloud-native technologies and have successfully improved the development and O&M efficiency of their applications and microservices. They want to replicate that experience in the AI field and build AI applications and systems on cloud-native technologies.

At first, users can use Kubernetes, Kubeflow, and nvidia-docker to quickly build GPU clusters, access storage services through standard interfaces, and automate AI job scheduling and GPU allocation. Trained models can be deployed in the same cluster, which covers the basic AI development and production process. Soon afterwards, however, users raise their expectations for production efficiency and run into more problems: low GPU utilization, poor scalability of distributed training, jobs that cannot scale elastically, slow access to training data, no management of datasets, models, and tasks, no easy access to real-time logs, monitoring, and visualization, and model releases without quality and performance verification. Beyond that, there is a lack of service-oriented O&M and governance, Kubernetes and containers have a steep learning curve, the user experience does not match the habits of data scientists, team collaboration and sharing are difficult, resource contention is frequent, and there are even data security issues.

To solve these problems fundamentally, the AI production environment must evolve from a "one-person workshop" model to a "resource pooling + AI engineering platform + multi-role collaboration" model. To help "cloud-native + AI" users with such needs evolve in a flexible, controllable, and systematic way, we launched the cloud-native AI suite [4] on top of ACK. Any user with an ACK Pro cluster, or any standard Kubernetes cluster, can use the cloud-native AI suite to quickly customize and build their own AI platform. This frees data scientists and algorithm engineers from complicated and inefficient environment management, resource allocation, and task scheduling, so they can devote more of their energy to creative algorithm design and model tuning (the so-called "alchemy").

Figure 0: AI production environment evolves to platform

How to define cloud-native AI

As enterprise IT architecture moves steadily deeper into cloud computing, cloud-native technologies such as containers, Kubernetes, and service mesh have helped a large number of application services move quickly onto cloud platforms and have delivered great value in scenarios such as elasticity, microservices, serverless, and DevOps. At the same time, IT decision makers are considering how to use cloud-native technologies to support more types of workloads with a unified architecture and technology stack, because using different architectures and technologies for different workloads leads to siloed ("chimney") systems, duplicated investment, and fragmented O&M.

Deep learning and AI tasks are among the important workloads for which the community seeks cloud-native support. In fact, more and more deep learning systems and AI platforms are being built on containers and Kubernetes clusters. In 2020, we formally put forward the concept, core scenarios, and reference technical architecture of "cloud-native AI", aiming to provide a concrete definition, a feasible roadmap, and best practices for this new field.

Alibaba Cloud Container Service ACK defines cloud-native AI as follows: make full use of the cloud's resource elasticity, heterogeneous computing power, and standardized services, together with cloud-native technologies such as containers, automation, and microservices, to provide an end-to-end AI/ML solution that is highly engineering-efficient, low-cost, scalable, and reproducible.

Core Scenarios

We focus on two core scenarios in the field of cloud-native AI: continuous optimization of heterogeneous resource efficiency, and efficient operation of heterogeneous workloads such as AI.

Figure 1: Core scenarios of cloud-native AI

Scenario 1. Optimizing Heterogeneous Resource Efficiency

Abstract the various heterogeneous resources in Alibaba Cloud IaaS or in customer IDCs, including compute (such as CPU, GPU, NPU, VPU, FPGA, and ASIC), storage (OSS, NAS, CPFS, HDFS), and networking (TCP, RDMA); manage, operate, and allocate them in a unified way; and continuously improve resource utilization through elasticity and software-hardware co-optimization.

Scenario 2. Running heterogeneous workloads such as AI

Support mainstream or user-owned computing engines and runtimes such as TensorFlow, PyTorch, Horovod, ONNX, Spark, and Flink; run heterogeneous workload pipelines, manage job lifecycles, and schedule task workflows in a unified way, while guaranteeing task scale and performance. On the one hand, this continuously improves the cost-effectiveness of running tasks; on the other, it continuously improves the development and O&M experience and engineering efficiency.

Building on these two core scenarios, more user-specific scenarios can be supported. Examples include building MLOps processes that fit users' habits; for CV (computer vision) AI services, mixing CPU, GPU, and VPU (Video Processing Unit) scheduling to support data processing pipelines with different stages; and, for large-model pre-training and fine-tuning, supporting higher-order distributed training frameworks and combining task and data scheduling strategies with elastic and fault-tolerant solutions to reduce the cost and improve the success rate of large-model training tasks.

Reference Architecture

To support the core scenarios above, we propose a cloud-native AI reference technical architecture.

Figure 2: Cloud-native AI reference architecture diagram

The cloud-native AI reference architecture follows the white-box design principles of componentization, extensibility, and composability. It exposes its interfaces as standard Kubernetes objects and APIs, so developers and operators can choose any component on demand, assemble and extend components, and quickly customize and build their own AI platform.

The reference architecture uses the Kubernetes container service as its base. Downward, the base encapsulates unified management of heterogeneous resources; upward, it provides a standard Kubernetes cluster environment and API on which the core components run. These components provide resource O&M management, AI task scheduling and elastic scaling, data access acceleration, workflow orchestration, big data service integration, AI job lifecycle management, management of AI artifacts, unified O&M, and other services. One level higher, they serve the main stages of the AI production process (MLOps), supporting AI dataset management, model development, training, evaluation, and model inference services, which users can consume directly or customize through a unified command-line tool, multi-language SDKs, and a console. The same components and tools can also integrate cloud AI services, open source AI frameworks, and third-party AI capabilities.

What cloud-native AI capabilities do enterprises need

Having defined the concept, core scenarios, design principles, and reference architecture of cloud-native AI, what kind of cloud-native AI product should we offer users? Based on research into users running AI tasks on ACK, observations of communities such as cloud native, MLOps, and MLSys, and analysis of containerized AI products in the industry, we summarized the characteristics and key capabilities that a cloud-native AI product needs.

Figure 3: What cloud-native AI capabilities do enterprises need

• High efficiency. This covers three dimensions: utilization efficiency of heterogeneous resources, efficiency of running and managing AI jobs, and efficiency of tenant sharing and team collaboration.

• Good compatibility. The product is compatible with common AI computing engines and models, supports various storage services and provides general data access performance optimization, and can integrate with a variety of big data services while being easy for business applications to integrate. In particular, it must also meet data security and privacy protection requirements.

• Extensibility. The product architecture is extensible, composable, and reproducible, and provides standard APIs and tools for easy use, integration, and secondary development. Under the same architecture, the product can be delivered to various environments such as public cloud, proprietary cloud, hybrid cloud, and edge.

Alibaba Cloud Container Service ACK Cloud Native AI Suite

Based on the cloud-native AI reference architecture, and addressing the core scenarios and user needs above, the Alibaba Cloud Container Service team officially released the ACK cloud-native AI suite [5] in 2021. It is available for public beta in 27 regions worldwide, helping Kubernetes users quickly customize and build their own cloud-native AI platforms.

The ACK cloud-native AI suite mainly targets AI platform development and O&M teams, helping them manage AI infrastructure quickly and cost-effectively. At the same time, the functions of each component are wrapped into command-line and console tools that data scientists and algorithm engineers can use directly. Once the suite is installed in an ACK cluster, all components work out of the box, providing unified management of heterogeneous resources and a low barrier to running AI tasks. For installation and usage, see the product documentation [6].

Architecture design

Figure 4: Big Picture of Cloud Native AI Suite Architecture

From bottom to top, the functions of the cloud-native AI suite are organized into the following layers:

- Heterogeneous resource layer, including heterogeneous computing power optimization and heterogeneous storage access

- AI scheduling layer, including various scheduling policy optimizations

- AI elastic layer, including elastic AI training tasks and elastic AI inference services

- AI data acceleration and orchestration layer, including dataset management, distributed cache acceleration, and big data service integration

- AI job management layer, including AI job lifecycle management services and toolsets

- AI operations layer, including monitoring, logging, autoscaling, troubleshooting, and multi-tenancy

- AI artifact repository, including AI container images, models, and AI experiment records

The following sections briefly introduce the main capabilities and basic architecture of the heterogeneous resource layer, AI scheduling layer, AI elastic layer, AI data acceleration and orchestration layer, and AI job management layer of the cloud-native AI suite.

1. Unified management of heterogeneous resources

On top of ACK, the cloud-native AI suite adds support for heterogeneous resources such as NVIDIA GPUs, the Hanguang 800 NPU, FPGAs, VPUs, and RDMA high-performance networks, covering essentially all device types available on Alibaba Cloud. Using these resources in Kubernetes becomes as simple as using CPU and memory: you only declare the required amount in the task parameters. For more expensive resources such as GPUs and NPUs, the suite also provides a variety of methods to optimize resource utilization.
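
As a minimal sketch of what "declaring the required number of resources" looks like, the Python snippet below builds a Pod manifest that requests two GPUs. It assumes the NVIDIA device plugin, which exposes GPUs as the extended resource `nvidia.com/gpu`. The resource names for NPUs, FPGAs, or VPUs depend on the device plugin installed and are not shown. The Pod name, image, and command are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name: str, image: str, command: list, gpus: int) -> dict:
    """Build a minimal Pod manifest that requests whole GPUs like CPU or memory."""
    if gpus < 1:
        raise ValueError("gpus must be a positive integer")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    "command": command,
                    "resources": {
                        # Extended resources such as GPUs are declared in limits;
                        # Kubernetes sets the request to the same value by default.
                        "limits": {
                            "cpu": "4",
                            "memory": "16Gi",
                            "nvidia.com/gpu": str(gpus),
                        },
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical names; the printed JSON can be applied with kubectl.
    pod = gpu_pod_manifest("resnet50-train", "example.com/train:latest",
                           ["python", "train.py"], gpus=2)
    print(json.dumps(pod, indent=2))
```

Kubernetes accounts for extended resources such as `nvidia.com/gpu` only in whole units, so this form cannot express a fraction of a card. That is the gap GPU shared scheduling fills.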

Figure 5: Unified management of heterogeneous resources

GPU monitoring

The suite provides multi-dimensional GPU monitoring, so the allocation, usage, and health status of GPUs are visible at a glance. GPU device anomalies are automatically detected and alerted through the built-in Kubernetes NPD (Node Problem Detector).

Figure 6: GPU Monitoring

GPU Elastic Scaling

Combined with the various elastic scaling capabilities of ACK elastic node pools, GPU capacity can be scaled automatically on demand, both in the number of resource nodes and in the number of running task instances. Scaling can be triggered by GPU resource usage metrics or by user-defined metrics. The node types used for scaling include regular EGS (Elastic GPU Service) instances, ECI instances, spot instances, and others.
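
The article does not spell out how scaling triggers are evaluated. For illustration only, the sketch below assumes the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current * currentMetric / targetMetric), with a 10% tolerance band, and applies it to an average GPU-utilization metric. Scaling the number of GPU nodes is handled separately by the node pool's autoscaler and is not modeled. The function and parameter names are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_gpu_util: float,
                     target_gpu_util: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """HPA-style replica calculation driven by average GPU utilization (percent)."""
    if current_replicas < 1 or target_gpu_util <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_gpu_util / target_gpu_util
    # Inside the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 90.0, 60.0))  # busy GPUs, scale out -> 6
    print(desired_replicas(4, 20.0, 60.0))  # idle GPUs, scale in -> 2
```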

Figure 7: GPU Auto Scaling

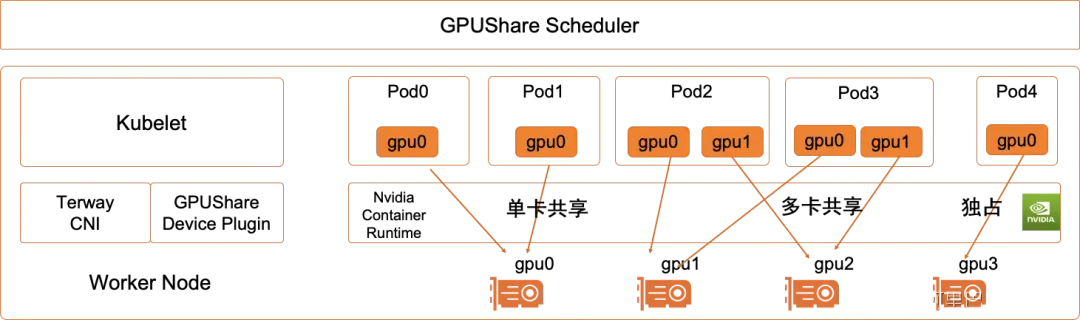

GPU Shared Scheduling

Native Kubernetes only schedules GPUs at the granularity of whole cards, and a task container uses its GPU card exclusively. If the task's model is relatively small (in computation and GPU memory), the card's remaining resources sit idle and are wasted. The cloud-native AI suite provides GPU shared scheduling, which can allocate one GPU card to multiple task containers according to the GPU compute and memory requirements of their models. In this way, more tasks can share the same GPUs, and GPU resources can in theory be used to the fullest.

At the same time, to prevent containers that share a GPU from interfering with each other, the suite integrates Alibaba Cloud cGPU technology to ensure the security and stability of shared GPUs. GPU usage is isolated between containers, so no container can exceed its allocation, and an error in one container does not affect the others.

GPU shared scheduling also supports several allocation strategies: a single container sharing a single GPU card, often used for model inference; a single container sharing multiple GPU cards, often used to debug distributed model training in a development environment; and Binpack/Spread strategies across GPU cards, which balance allocation density against availability. Similar fine-grained shared scheduling is also available for Alibaba's self-developed Hanguang 800 AI inference chip in ACK clusters.
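
To make the Binpack and Spread strategies concrete, here is a toy placement function that shares cards by GPU memory only. It is a sketch under simplifying assumptions. It ignores the compute-share dimension the scheduler also considers and does not model cGPU's runtime isolation. The class and function names are hypothetical, not part of ACK.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Gpu:
    gpu_id: str
    total_mem_gib: int
    used_mem_gib: int = 0

    @property
    def free_mem_gib(self) -> int:
        return self.total_mem_gib - self.used_mem_gib


def place_shared(gpus: List[Gpu], request_gib: int,
                 policy: str = "binpack") -> Optional[str]:
    """Assign a container's GPU-memory request to one card, or return None."""
    fits = [g for g in gpus if g.free_mem_gib >= request_gib]
    if not fits:
        return None
    if policy == "binpack":
        # Fullest card that still fits: keeps the emptier cards free for larger requests.
        chosen = min(fits, key=lambda g: (g.free_mem_gib, g.gpu_id))
    elif policy == "spread":
        # Emptiest card: spreads containers across cards to limit contention.
        chosen = min(fits, key=lambda g: (-g.free_mem_gib, g.gpu_id))
    else:
        raise ValueError(f"unknown policy: {policy}")
    chosen.used_mem_gib += request_gib
    return chosen.gpu_id


if __name__ == "__main__":
    for policy in ("binpack", "spread"):
        cards = [Gpu("gpu0", 16), Gpu("gpu1", 16)]
        print(policy, [place_shared(cards, r, policy) for r in (4, 4, 8)])
```

With two 16 GiB cards and requests of 4, 4, and 8 GiB, Binpack stacks all three containers on `gpu0` and leaves `gpu1` entirely free. Spread alternates between the cards.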

Figure 8: GPU Shared Scheduling

GPU shared scheduling and cGPU isolation noticeably improve GPU resource utilization, and there are already many customer cases. For example, one customer pooled hundreds of GPU instances of different models, partitioned uniformly by GPU memory, and used GPU shared scheduling together with automatic elastic scaling based on GPU memory to deploy hundreds of AI models. This not only greatly improved resource utilization but also significantly reduced O&M complexity.

GPU topology-aware scheduling

As deep learning models grow more complex and training data grows larger, multi-GPU distributed training has become very common. For models trained with gradient descent, data such as gradients must be exchanged frequently among GPU cards, and communication bandwidth often becomes the bottleneck that limits GPU computing performance.

The GPU topology-aware scheduling function obtains the connection topology of all GPU cards on each compute node. When selecting GPUs for a multi-card training task, the scheduler uses this topology to consider all interconnect links, such as NVLink, PCIe switches, QPI, and RDMA NICs. It then automatically chooses the combination of GPU cards that provides the highest communication bandwidth, so that bandwidth limits do not degrade GPU computing efficiency. GPU topology-aware scheduling is enabled purely through configuration, with no changes to model or training code.
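
The selection step can be sketched as a small search problem. The snippet below is an illustrative approach, not the suite's actual scoring. Given a per-node matrix of pairwise link bandwidths, it picks the k free GPUs whose slowest link is fastest, because in ring-style collective communication the slowest link tends to bound throughput. The numbers are made up, not measurements. The function name and the max-min objective are assumptions.

```python
from itertools import combinations
from typing import Iterable, Optional, Sequence, Tuple


def best_gpu_set(bandwidth: Sequence[Sequence[float]], k: int,
                 available: Optional[Iterable[int]] = None) -> Tuple[int, ...]:
    """Pick k GPUs whose slowest pairwise link is as fast as possible.

    bandwidth[i][j] is the link bandwidth between GPU i and GPU j (e.g. GB/s).
    Ties on the bottleneck link are broken by total pairwise bandwidth.
    """
    candidates = sorted(available) if available is not None else list(range(len(bandwidth)))
    if not 1 <= k <= len(candidates):
        raise ValueError("k must be between 1 and the number of available GPUs")

    def score(combo: Tuple[int, ...]) -> Tuple[float, float]:
        links = [bandwidth[i][j] for i, j in combinations(combo, 2)]
        if not links:  # a single GPU has no communication links
            return (float("inf"), 0.0)
        return (min(links), sum(links))

    # Exhaustive search is cheap for the 8 or so GPUs on one node (C(8,4) = 70).
    return max(combinations(candidates, k), key=score)


if __name__ == "__main__":
    # Illustrative numbers: GPUs 0-1 and 2-3 are NVLink pairs; other pairs
    # communicate over PCIe/QPI at much lower bandwidth.
    bw = [
        [0, 300, 10, 10],
        [300, 0, 10, 10],
        [10, 10, 0, 300],
        [10, 10, 300, 0],
    ]
    print(best_gpu_set(bw, 2))                       # -> (0, 1)
    print(best_gpu_set(bw, 2, available=[0, 2, 3]))  # -> (2, 3)
```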

Figure 9: GPU topology-aware scheduling

Comparing multi-GPU distributed training under ordinary Kubernetes scheduling and under GPU topology-aware scheduling shows that, for the same computing engine (such as TensorFlow or PyTorch) and model (such as ResNet50 or VGG16), topology-aware scheduling improves efficiency at no extra cost. Training VGG16 involves much more data transfer than training the smaller ResNet50 model, so better GPU interconnect bandwidth has a more pronounced effect on VGG16 training performance.

Figure 10: Training performance of GPU topology-aware scheduling vs common multi-card GPU scheduling

2. AI task scheduling (Cybernetes)

Distributed AI training is a typical batch workload. A training job (Job) submitted by a user is usually executed at runtime by a group of subtasks (Tasks) running together. Different AI training frameworks group subtasks differently. For example, a TensorFlow training job can use a group of Parameter Server subtasks plus a group of Worker subtasks, while Horovod uses one Launcher plus multiple Workers when running Ring-AllReduce training.

In Kubernetes, a subtask usually corresponds to one Pod. Scheduling a training job therefore becomes the co-scheduling of one or more groups of subtask Pods, similar to how Hadoop YARN schedules MapReduce and Spark batch jobs. The default Kubernetes scheduler can only use a single Pod as its scheduling unit, which does not meet the needs of batch job scheduling.

The ACK scheduler (Cybernetes [7]) extends the native Kubernetes scheduling framework and implements many typical batch scheduling policies, including Gang Scheduling (Coscheduling), FIFO scheduling, Capacity Scheduling, Fair Sharing, and Binpack/Spread. It also adds a priority task queue that supports custom task priority management and elastic resource quota control for tenants. It can integrate Kubeflow Pipelines or the Argo cloud-native workflow engine to provide workflow orchestration for complex AI tasks. This "task queue + task scheduler + workflow + task controller" architecture provides the foundation for building a batch task system on Kubernetes, and also significantly improves the cluster's overall resource utilization.
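
The priority task queue can be illustrated with a minimal sketch. Jobs with higher priority are dequeued first, and jobs with equal priority keep first-in-first-out order, which amounts to FIFO scheduling within each priority level. This is a simplified, hypothetical model. It leaves out tenant quota control and the other admission checks the scheduler also applies. The class names and the priority convention are assumptions.

```python
import heapq
import itertools
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Job:
    name: str
    priority: int  # hypothetical convention: larger value = more urgent


class PriorityJobQueue:
    """Pop the highest-priority job first; equal priorities leave in FIFO order."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, Job]] = []
        self._arrival = itertools.count()  # monotonically increasing tie-breaker

    def push(self, job: Job) -> None:
        # heapq is a min-heap, so negate priority; the arrival counter is unique,
        # which means Job objects themselves are never compared.
        heapq.heappush(self._heap, (-job.priority, next(self._arrival), job))

    def pop(self) -> Job:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    q = PriorityJobQueue()
    for job in (Job("nightly-eval", 0), Job("prod-retrain", 10),
                Job("hotfix-finetune", 10), Job("notebook-debug", 0)):
        q.push(job)
    print([q.pop().name for _ in range(len(q))])
```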

Today, the cloud-native AI suite supports not only training of deep learning tasks such as TensorFlow, PyTorch, and Horovod, but also big data tasks such as Spark and Flink, and MPI high-performance computing jobs. We have also contributed some batch scheduling capabilities to the upstream Kubernetes scheduler open source project and continue to push the community toward a cloud-native task system infrastructure. For project details, see GitHub: https://github.com/kubernetes-sigs/scheduler-plugins

Figure 13: ACK Cybernetes Scheduler

Examples of typical batch task scheduling policies supported by the ACK scheduler are as follows:

Figure 14: Typical task scheduling strategy supported by ACK

• Gang Scheduling

A job is allocated resources as a whole only when the cluster can satisfy all of its subtasks at once; otherwise it receives no resources at all. This prevents resource deadlock, in which partially scheduled large jobs hold resources while waiting for the rest and crowd out small jobs.

• Capacity Scheduling

Through elastic quotas and tenant queues, it guarantees each tenant's minimum resource allocation while improving overall resource utilization by letting tenants share unused quota elastically.

• Binpack Scheduling

Jobs are packed onto one node first and placed on the next node only when the current node's resources are insufficient. This suits single-machine multi-GPU training tasks, because it avoids cross-machine data transfer. It also prevents the resource fragmentation caused by large numbers of small jobs.

• Resource Reservation Scheduling

Resources can be reserved for specific jobs, either to be allocated to those jobs in a targeted way or to be reused by them. This guarantees that a specific job will obtain resources and speeds up resource allocation. User-defined reservation policies are also supported.
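
The all-or-nothing rule of Gang Scheduling is easy to state in code. Without it, a scheduler that places Pods one at a time could give two 4-worker jobs three workers each on a 6-GPU cluster, leaving both stuck waiting forever. The sketch below is a hypothetical simplification, not Cybernetes' implementation. It only counts GPUs, places Pods first-fit on nodes in name order, and commits the job's allocation only if every Pod fits. The node names are illustrative.

```python
from typing import Dict, List, Optional


def gang_schedule(free_gpus: Dict[str, int],
                  pod_gpus: List[int]) -> Optional[Dict[int, str]]:
    """Place every Pod of one job, or none of them (all-or-nothing).

    free_gpus maps node name -> free GPU count and is updated only on success.
    pod_gpus lists the GPUs each Pod of the job needs.
    Returns {pod index: node name}, or None if the whole gang cannot fit.
    """
    trial = dict(free_gpus)  # tentative copy: a failed attempt leaves no partial allocation
    placement: Dict[int, str] = {}
    # First-fit decreasing: place the largest Pods first to reduce fragmentation.
    for idx in sorted(range(len(pod_gpus)), key=lambda i: -pod_gpus[i]):
        need = pod_gpus[idx]
        node = next((n for n in sorted(trial) if trial[n] >= need), None)
        if node is None:
            return None  # one member cannot be placed, so nothing is allocated
        trial[node] -= need
        placement[idx] = node
    free_gpus.update(trial)  # commit all allocations at once
    return placement


if __name__ == "__main__":
    cluster = {"node-a": 4, "node-b": 4}
    print(gang_schedule(cluster, [2, 2, 2, 2]))  # fits: all four workers placed
    print(gang_schedule(cluster, [1]))            # cluster now full -> None
    print(cluster)                                # unchanged by the failed attempt
```

The tentative copy is what makes the operation all-or-nothing. The shared state is written only after every Pod has been assigned a node.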

3. Elastic AI tasks

Elasticity is the most fundamental capability of cloud native and Kubernetes container clusters. Intelligent scaling of service instances and cluster nodes, based on resource status, application availability, and cost constraints, must both maintain the application's baseline quality of service and avoid excessive cloud resource consumption. The cloud-native AI suite supports both elastic model training tasks and elastic model inference services.

Elastic Training

The suite supports elastic scheduling of distributed deep learning training tasks. During training, the number of worker subtask instances and nodes can be scaled dynamically while largely preserving overall training progress and model accuracy. When cluster resources are idle, more workers can be added to speed up training. When resources are tight, some workers can be released so that training still makes basic progress. This greatly improves overall cluster utilization, reduces the impact of compute node failures, and significantly shortens the time users wait for jobs to start after submission.

Note that, beyond resource elasticity, elastic deep learning training also requires support from the computing engine and the distributed communication framework. It also needs algorithm-level techniques, such as data partitioning strategies, training optimizers, and methods for adapting the global batch size and learning rate, to meet the accuracy targets and performance requirements of model training. At present, the industry has no general method for achieving highly reproducible elastic training on common models. Under certain constraints (model characteristics, distributed training method, resource scale, elastic range, and so on), however, stable methods exist that deliver satisfactory elastic training benefits. Examples include ResNet50 within 32 GPUs, and BERT with PyTorch and TensorFlow.
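
One common way to adapt the global batch size and learning rate is shown below. It is an assumption, not the suite's documented method. When the worker count changes, a job can take one of two approaches:

(A) Keep the global batch size, and therefore the optimization dynamics, unchanged. Each worker gets more samples, and gradient accumulation is used when they no longer fit in GPU memory.

(B) Keep the per-worker batch and rescale the learning rate in proportion to the new global batch. This is the widely used linear scaling heuristic, which is not guaranteed to preserve accuracy.

All names and numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ElasticPlan:
    workers: int
    micro_batch: int   # samples per worker per forward/backward pass
    accum_steps: int   # gradient-accumulation steps per optimizer update
    global_batch: int  # samples contributing to one optimizer update
    lr: float


def keep_global_batch(global_batch: int, workers: int,
                      max_micro_batch: int, lr: float) -> ElasticPlan:
    """Option A: keep global batch and learning rate fixed; refill with accumulation."""
    if workers < 1 or max_micro_batch < 1:
        raise ValueError("workers and max_micro_batch must be positive")
    if global_batch % workers:
        raise ValueError("global batch must divide evenly across workers")
    per_worker = global_batch // workers
    accum = math.ceil(per_worker / max_micro_batch)
    while per_worker % accum:  # find an accumulation count that splits evenly
        accum += 1
    return ElasticPlan(workers, per_worker // accum, accum, global_batch, lr)


def linear_scaling(base_global_batch: int, base_lr: float,
                   base_workers: int, workers: int) -> ElasticPlan:
    """Option B: keep per-worker batch fixed; scale LR with the global batch."""
    per_worker = base_global_batch // base_workers
    new_global = per_worker * workers
    new_lr = base_lr * new_global / base_global_batch
    return ElasticPlan(workers, per_worker, 1, new_global, new_lr)


if __name__ == "__main__":
    # Hypothetical job: global batch 1024 on 8 workers, at most 128 samples fit per GPU.
    for w in (8, 4, 2):
        print(keep_global_batch(1024, w, max_micro_batch=128, lr=0.4))
    print(linear_scaling(1024, 0.4, base_workers=8, workers=4))
```

Option A trades throughput for unchanged convergence behavior, because fewer workers must each do more accumulation steps. Option B keeps per-step speed but changes the optimization problem, which is why it needs the model-specific validation the article mentions.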

Figure 15: Elastic AI training

Elastic Inference

Combined with Alibaba Cloud's rich resource elasticity capabilities, the suite makes full use of various resource types and modes to automatically scale AI inference services in and out. These include instances that are stopped but not released, elastic resource pools, scheduled scaling, spot instance elasticity, and spot fleet mode. The result is a better balance between service performance and cost.

In fact, elasticity and service-oriented O&M for online AI model inference are similar to those for microservices and web applications, and many existing cloud-native technologies can be applied directly to online inference services. However, AI model inference still has many particularities. It has specific requirements and approaches for model optimization, pipelined serving, fine-grained scheduling, and adaptation to heterogeneous runtime environments, which are not discussed here.

4. AI data orchestration and acceleration (Fluid)

Running AI, big data, and similar tasks on the cloud with a cloud-native architecture brings the advantage of elastic computing resources. It also brings challenges: data access latency caused by the separation of compute and storage, and the high bandwidth cost of pulling data from remote storage. In GPU deep learning training in particular, repeatedly reading large amounts of training data from remote storage in every iteration seriously slows down GPU computing.

On the other hand, Kubernetes only provides a standard interface for accessing and managing heterogeneous storage services, the CSI (Container Storage Interface). It does not define how applications use and manage data in a container cluster. When running training tasks, data scientists need to manage dataset versions, control access permissions, preprocess datasets, accelerate reads of heterogeneous data, and more. Kubernetes does not yet have a standard solution for this, which is one of the important capabilities missing from the cloud-native container community.

The ACK cloud-native AI suite abstracts "the process of computing tasks using data", introduces the concept of an elastic dataset (Dataset), and implements it in Kubernetes as a "first-class citizen". Around the Dataset, ACK built the data orchestration and acceleration system Fluid, which provides Dataset management (CRUD operations), access control, and access acceleration.

Fluid can configure a cache service for each Dataset. During training, it automatically caches data locally for the computing task so the next round of iterative computation can reuse it. It can also schedule new training tasks onto compute nodes where the Dataset's data is already cached. Combined with dataset preloading, cache capacity monitoring, and elastic scaling, this can greatly reduce the overhead of pulling data remotely. Fluid can aggregate several different types of storage services (such as OSS and HDFS) into a single Dataset as data sources. It can also access storage services in different locations, enabling data management and access acceleration in hybrid cloud environments.
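
The data-affinity idea can be sketched as a node-scoring rule. Among nodes with enough free GPUs, the rule prefers the one that already holds the largest share of the Dataset in its cache, which minimizes how much must still be pulled from remote storage such as OSS. This is a hypothetical simplification for illustration. It does not reproduce how Fluid actually expresses cache locality to the Kubernetes scheduler, and it treats only the node-local cache as useful.

```python
from typing import Dict, Optional


def pick_node_by_cache(free_gpus: Dict[str, int], cached_gib: Dict[str, float],
                       dataset_gib: float, gpus_needed: int) -> Optional[str]:
    """Prefer the node that already caches the largest share of the Dataset."""
    candidates = sorted(n for n, free in free_gpus.items() if free >= gpus_needed)
    if not candidates:
        return None
    # max() keeps the first of equal scores, so ties go to the alphabetically first node.
    return max(candidates, key=lambda n: cached_gib.get(n, 0.0) / dataset_gib)


def remote_read_gib(node: str, cached_gib: Dict[str, float], dataset_gib: float) -> float:
    """Data one full pass must still pull remotely, assuming only the local cache helps."""
    return max(0.0, dataset_gib - cached_gib.get(node, 0.0))


if __name__ == "__main__":
    free = {"node-a": 2, "node-b": 4, "node-c": 4}
    cache = {"node-b": 50.0, "node-c": 120.0}  # GiB of a 200 GiB Dataset cached per node
    node = pick_node_by_cache(free, cache, dataset_gib=200.0, gpus_needed=4)
    print(node, remote_read_gib(node, cache, 200.0))  # -> node-c 80.0
```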

Figure 16: Fluid Dataset

Algorithm engineers use a Fluid Dataset in an AI task container much as they would use a PVC (Persistent Volume Claim) in Kubernetes. They only need to specify the Dataset's name in the task description file and mount it into the container as a volume at the path from which the task reads data. Ideally, adding GPUs to a training task would speed up model training nearly linearly. In practice, the speedup is often limited by the bandwidth available when many GPUs pull data from an OSS bucket concurrently, so simply adding GPUs does not effectively speed up training. The distributed cache of Fluid Dataset effectively removes this remote concurrent data-pull bottleneck, so distributed training achieves a better speedup as GPUs are added.
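
As a minimal sketch of using a Dataset like a PVC, the Python function below builds a Pod manifest that mounts a Dataset. It assumes, as in Fluid's getting-started examples, that once a Dataset is bound to a cache runtime Fluid creates a PersistentVolumeClaim with the same name in the same namespace. The Dataset name, image, training script, and mount path are hypothetical.

```python
import json


def pod_reading_dataset(pod_name: str, image: str, dataset_name: str,
                        mount_path: str = "/data") -> dict:
    """Build a Pod that mounts a Fluid Dataset through its PVC.

    Assumes the Dataset is already bound to a cache runtime, in which case Fluid
    exposes it as a PersistentVolumeClaim named after the Dataset.
    """
    volume_name = "training-data"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": "trainer",
                "image": image,
                # Hypothetical training script that reads its input from mount_path.
                "command": ["python", "train.py", "--data-dir", mount_path],
                "volumeMounts": [{"name": volume_name, "mountPath": mount_path}],
            }],
            "volumes": [{
                "name": volume_name,
                "persistentVolumeClaim": {"claimName": dataset_name},
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(pod_reading_dataset("imagenet-train", "example.com/train:latest",
                                         "imagenet"), indent=2))
```

The task only names the Dataset. Where the data actually lives (OSS, HDFS, or several sources) and how it is cached stay behind the claim.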

Figure 17: Fluid distributed cache acceleration model training performance comparison

The ACK team, together with Nanjing University and Alluxio, launched the Fluid open source project [8], which is hosted by CNCF as a sandbox project. The goal is to work with the community to advance cloud-native data orchestration and acceleration. Fluid's architecture can be extended through CacheRuntime plugins to support a variety of distributed cache services. Cache engines such as Alibaba Cloud EMR's JindoFS, open source Alluxio, and JuiceFS are already supported. The project has made good progress both in open source community collaboration and in user adoption on the cloud.

Figure 18: Fluid System Architecture

Key capabilities of Fluid cloud-native data orchestration and acceleration include:

• Data affinity scheduling and distributed cache engine acceleration bring data and computation together, speeding up compute access to data.

• Data is managed independently of the underlying storage, and resources are isolated by Kubernetes namespaces to keep data securely isolated.

• Data from different storage systems can be combined for computation, helping break down the data silos created by differences between storage systems.

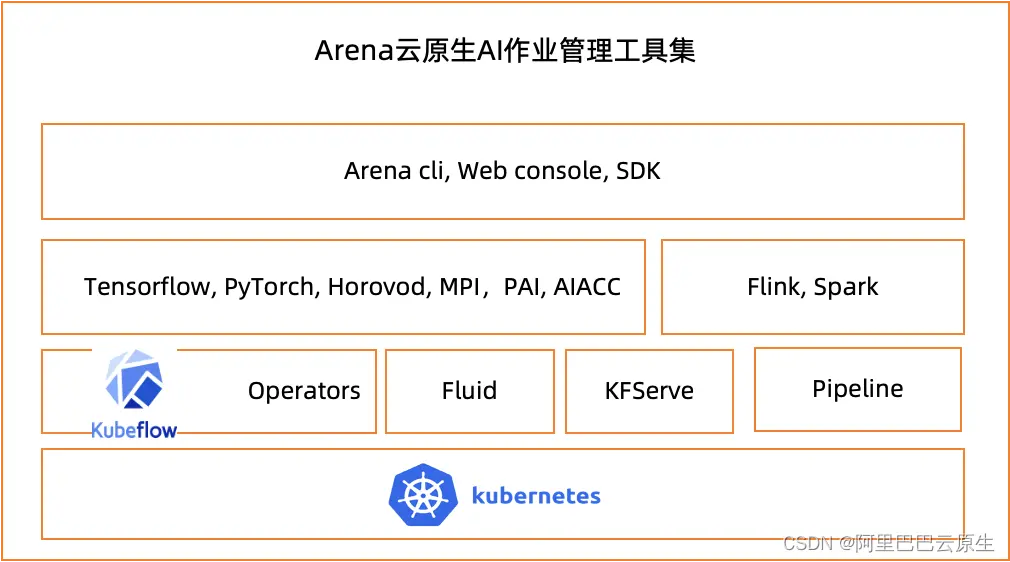

5. AI Job Lifecycle Management (Arena)

All components of the ACK cloud-native AI suite are delivered to AI platform developers and operators as standard Kubernetes interfaces (CRDs) and APIs, which makes it convenient to build a cloud-native AI platform on Kubernetes.

For data scientists and algorithm engineers who develop and train AI models, however, Kubernetes syntax and operations are a "burden". They are more used to debugging code in environments such as Jupyter Notebook and to submitting and managing training tasks from a command line or web interface. Ideally, logging, monitoring, storage access, GPU allocation, and cluster maintenance while tasks run should be built-in capabilities that they can operate easily through tools.

Producing an AI service mainly involves data preparation and management, model development and building, model training, model evaluation, and online operation and maintenance of the model inference service. These stages form an iterative loop: the model is continuously optimized and updated as online data changes, released online through the inference service, new data is collected, and the next iteration begins.

Figure 19: Deep Learning AI Task Lifecycle

The cloud-native AI suite abstracts the main work in this production process and manages it with the command-line tool Arena [9]. Arena hides the complexity of underlying resource and environment management, task scheduling, and GPU allocation and monitoring, and it is compatible with mainstream AI frameworks and tools, including TensorFlow, PyTorch, Horovod, Spark, JupyterLab, TF-Serving, and Triton.
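As an illustration of that abstraction, the lifecycle of a PyTorch training job reduces to a handful of Arena subcommands. The job name, image, and training script below are hypothetical placeholders, and the exact flags can vary by Arena version (`arena submit pytorchjob --help` lists them).

```shell
# Submit a distributed PyTorch job: 2 workers, 1 GPU per worker
arena submit pytorchjob \
  --name=pytorch-demo \
  --workers=2 \
  --gpus=1 \
  --image=registry.example.com/team/pytorch-train:latest \
  "python /workspace/train.py"

arena list                  # all jobs and their current status
arena get pytorch-demo      # status of each instance of this job
arena logs pytorch-demo     # training output
arena delete pytorch-demo   # remove the job once it is no longer needed
```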

Besides the command-line tool, Arena provides Go, Java, and Python SDKs that make it easy to build on top of it. And because many data scientists prefer to manage resources and tasks through a web interface, the cloud-native AI suite also provides a simple operations dashboard and a development console so that users can quickly browse the cluster status and submit training tasks. Together, these components form Arena, a cloud-native AI job management toolset.

Kubeflow [10] is the mainstream open source project in the Kubernetes community for machine learning workloads. Arena has built-in Kubeflow's TFJob, PyTorchJob, and MPIJob controllers as well as the open source SparkApplication controller, and it can also integrate the KFServing and Kubeflow Pipelines projects. Users do not need to install Kubeflow separately in an ACK cluster; Arena alone is enough.
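Because Arena drives the Kubeflow controllers, a job it submits is an ordinary Kubeflow custom resource that standard Kubernetes tooling can inspect. A sketch using a parameter-server TFJob; the job name, image, and script are hypothetical placeholders.

```shell
# Submit a TFJob with 2 workers, 1 parameter server, and 1 GPU per worker
arena submit tfjob \
  --name=tf-ps-demo \
  --workers=2 \
  --ps=1 \
  --gpus=1 \
  --image=registry.example.com/team/tf-train:latest \
  "python /workspace/train.py"

# The same job seen as a Kubeflow TFJob resource
kubectl get tfjobs
kubectl describe tfjob tf-ps-demo
```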

Notably, the ACK team contributed the Arena command-line tool and SDKs to the Kubeflow community early on. Many enterprise users have built their own AI platforms and MLOps workflows by extending and wrapping Arena.

Figure 20: Arena Architecture

The cloud-native AI suite mainly serves two types of users:

• AI developers, such as data scientists and algorithm engineers

• AI platform operators, such as AI cluster administrators

AI developers can use the Arena command line or the development console's web interface to create a Jupyter notebook development environment, submit and manage model training tasks, query GPU allocation, view training logs in real time, monitor and visualize data, and compare and evaluate different model versions. They can then deploy the selected model to the cluster and apply A/B testing, canary (grayscale) releases, traffic control, and elastic scaling to the online model inference service.

```shell
# Submit a distributed TensorFlow training job
arena submit mpijob \
  --name=tf-dist-data --workers=6 --gpus=2 --data=tfdata:/data_dir \
  --env=num_batch=100 --env=batch_size=80 --image=ali-tensorflow:gpu-tf-1.6.0 \
  "/root/hvd-distribute.sh 12 2"

# Check the status of the training job
arena get tf-dist-data
NAME          STATUS     TRAINER  AGE  INSTANCE                     NODE
tf-dist-data  RUNNING    tfjob    3d   tf-dist-data-tfjob-ps-0      192.168.1.120
tf-dist-data  SUCCEEDED  tfjob    3d   tf-dist-data-tfjob-worker-0  N/A
tf-dist-data  SUCCEEDED  tfjob    3d   tf-dist-data-tfjob-worker-1  N/A

Your tensorboard will be available on:
192.168.1.117:32594
```

Figure 21: Arena Development Console
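Beyond submitting and checking a job, the other developer tasks described above map onto further Arena subcommands. A sketch reusing the `tf-dist-data` job from the example; the serving job name, model name, PVC `model-pvc`, and model path are hypothetical, and serving flags can differ between Arena versions (`arena serve tensorflow --help`).

```shell
# GPU allocation across nodes, and GPU usage per job
arena top node
arena top job

# Training logs of the tf-dist-data job
arena logs tf-dist-data

# Deploy a trained model with TensorFlow Serving, then list serving jobs
arena serve tensorflow \
  --name=mnist-serving \
  --model-name=mnist \
  --gpus=1 \
  --image=tensorflow/serving:1.15.0-gpu \
  --data=model-pvc:/models \
  --model-path=/models/mnist
arena serve list
```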

AI cluster administrators can use the Arena operations dashboard to view and manage cluster resources and tasks, configure user permissions and tenant quotas, and manage datasets.

Figure 22: Arena operations dashboard
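As a Kubernetes-native illustration of what a per-tenant GPU quota means (not necessarily how the dashboard implements tenant quotas), a standard ResourceQuota can cap the GPUs requested in one namespace. The namespace name and GPU count are hypothetical, and the sketch assumes GPUs are exposed as the `nvidia.com/gpu` extended resource.

```shell
kubectl create namespace team-a

# Cap the total GPUs that pods in namespace team-a may request at 8
kubectl apply -f - <<'EOF'
apiVersion: v1
kind: ResourceQuota
metadata:
  name: gpu-quota
  namespace: team-a
spec:
  hard:
    requests.nvidia.com/gpu: "8"
EOF

# Show current usage against the quota
kubectl describe resourcequota gpu-quota -n team-a
```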

User Cases

During its early public beta, the cloud-native AI suite attracted interest and trials from many users, including heavy AI users in industries such as internet services, online education, and autonomous driving. Two representative cases are shared here.

• Weibo

The Weibo deep learning platform [11] uses a Kubernetes hybrid cloud cluster to manage GPU instances on Alibaba Cloud and resources in its own data centers (IDC) in a unified way.

Heterogeneous resource scheduling, monitoring, and elastic scaling enable unified management of a large pool of GPU resources, and GPU sharing greatly improves GPU utilization for AI model inference services.

By wrapping Arena, Weibo built its own AI task management platform that centrally manages all deep learning training tasks, improving engineering efficiency by 50%.

The AI task scheduler efficiently schedules large-scale TensorFlow and PyTorch distributed training jobs.

Fluid combined with the EMR JindoFS distributed cache, specifically optimized for caching massive numbers of small files stored in HDFS, made PyTorch distributed training several times faster end to end. In the most extreme case the user tested, training time dropped from two weeks to 16 hours [12].

• Haomo Zhixing (HAOMO.AI)

Haomo Zhixing is a high-profile intelligent driving startup backed by Great Wall Motors. It began building a unified AI engineering platform on cloud-native technology early on, first using Kubeflow, the Arena toolset, unified GPU scheduling, and other capabilities to build its own machine learning platform. It then integrated Fluid with JindoRuntime, which significantly improved the efficiency of training and inference in the cloud, especially for some small models: JindoRuntime effectively removes the I/O bottleneck for cloud training and inference and speeds up training by up to about 300%. It also greatly improves cloud GPU utilization and accelerates data-driven iteration in the cloud.

Figure 23: Haomo Zhixing's AI platform uses Fluid Datasets to accelerate distributed GPU training

Haomo Zhixing has not only shared its exploration and practice of ACK-based cloud-native AI in autonomous driving [13], but also contributes to cloud-native AI community projects such as Fluid, and plans to further strengthen the following:

• Support for scheduled tasks and dynamic capacity scaling

• A performance monitoring console

• Full lifecycle management of multiple datasets in large-scale Kubernetes clusters

• Dynamic deletion of cached data and cache metadata

Outlook

As enterprise IT transformation accelerates, the demand for digitalization and intelligence keeps growing. The most pressing need is to quickly and accurately uncover new business opportunities and business-model innovations in massive volumes of business data, in order to cope better with ever-changing and uncertain market challenges. Artificial intelligence is undoubtedly one of the most important means of achieving this goal, now and in the future. Continuously improving the efficiency of AI engineering, optimizing the cost of AI production, lowering the barrier to AI, and making AI capabilities broadly accessible therefore have great practical value and social significance.

With its strengths in distributed architecture, standardized APIs, and a rich ecosystem, cloud native is rapidly becoming the new interface through which users make efficient use of cloud computing to improve business agility and scalability. More and more users are exploring how to raise the productivity of AI services with cloud-native container technology, which in turn lets a single technology stack support the many heterogeneous workloads within an enterprise.

The Alibaba Cloud ACK cloud-native AI suite supports the full stack of AI production and operations, from underlying heterogeneous computing resources, AI task scheduling, and AI data acceleration up to compute engine compatibility and AI job lifecycle management. With a concise user experience, an extensible architecture, and a unified, optimized implementation, it helps users build customized cloud-native AI platforms faster. We also share part of this work with the community to jointly advance the development and adoption of cloud-native AI.

Related Links

[1] Cloud Native

https://github.com/cncf/toc/blob/main/DEFINITION.md

[2] 2021 Annual Survey Report

https://www.cncf.io/blog/2021/12/20/new-slashdata-report-5-6-million-developers-use-kubernetes-an-increase-of-67-over-one-year/

[3] Alibaba Cloud Container Service ACK

https://help.aliyun.com/product/85222.html

[4] Cloud-native AI suite

https://help.aliyun.com/document_detail/270040.html

[5] ACK cloud-native AI suite product launch

https://yqh.aliyun.com/live/cloudnative_ai_release

[6] Installing the cloud-native AI suite (product documentation)

https://help.aliyun.com/document_detail/201997.html

[7] ACK Scheduler (Cybernetes)

https://help.aliyun.com/document_detail/214238.html

[8] Fluid Open Source Project

https://github.com/fluid-cloudnative/fluid

[9] Arena command line tool

https://github.com/kubeflow/arena

[10] Kubeflow

https://github.com/kubeflow/kubeflow

[11] Weibo Deep Learning Platform

https://www.infoq.cn/article/MXpf_TOJH9P10Ppdq9hl

[12] Training duration reduced from two weeks to 16 hours

https://www.infoq.cn/article/FClx4Cco6b1jomi6UZSy

[13] Exploration and practice of cloud-native AI in autonomous driving

https://www.infoq.cn/article/YkTwXpZGaE86E29MdVo2
