Background

This series focuses on TiFlash itself and assumes some basic knowledge of TiDB. You can learn the core concepts of the TiDB system from three articles: "Talking about Storage", "Talking about Computing", and "Talking about Scheduling".

Today's subject, TiFlash, is a key component of TiDB's HTAP architecture. It is a columnar extension of TiKV that replicates data asynchronously through the Raft Learner protocol, yet provides the same snapshot isolation guarantees as TiKV. This architecture solves the problems of isolation and column-store synchronization in HTAP scenarios. Since MPP was introduced in TiDB 5.0, TiDB's computing acceleration in real-time analytics has been further enhanced.

The figure above shows how TiFlash is divided into logical modules. TiFlash connects to TiDB's Multi-Raft system through the Raft Learner Proxy. Compared with TiKV, TiFlash's computing layer adds MPP, which lets TiFlash nodes exchange data with each other and provides stronger analytical computing capabilities. As a column storage engine, TiFlash has a schema module that keeps table structures in sync with TiDB, and data replicated from TiKV is converted into columnar form and written into the column storage engine. The bottom block is the column storage engine introduced later in this article, which we call the DeltaTree engine.

Readers who have followed TiDB may have read the article "How is TiDB's Columnar Storage Engine Implemented?". Now that TiFlash has recently been open-sourced, new users also want to learn more about its internals. This article introduces some details of TiFlash's internal implementation from a perspective closer to the code.

Below are some important TiFlash modules and their locations in the code. These modules will be introduced gradually in this article and in later articles of the series.

Code locations of TiFlash modules

dbms/

└── src

├── AggregateFunctions, Functions, DataStreams # functions, operators

├── DataTypes, Columns, Core # types, columns, Block

├── IO, Common, Encryption # IO, helper classes

├── Debug # TiFlash debug helper functions

├── Flash # Coprocessor and MPP logic

├── Server # program entry point

├── Storages

│ ├── IStorage.h # Storage abstraction

│ ├── StorageDeltaMerge.h # DeltaTree entry point

│ ├── DeltaMerge # internal components of DeltaTree

│ ├── Page # PageStorage

│ └── Transaction # Raft integration, Schema sync, etc. To be refactored: https://github.com/pingcap/tiflash/issues/4646

└── TestUtils # unit test helper classes

Abstractions of some basic elements in TiFlash

The TiFlash code was forked from ClickHouse in 2018. ClickHouse gives TiFlash a very powerful vectorized execution engine, which we use as TiFlash's single-node computing engine. On top of it, we added integration with the TiDB front end, MySQL compatibility, the Raft protocol and cluster mode, real-time updates in the column storage engine, the MPP architecture, and more. Although TiFlash is now completely different from the original ClickHouse, its code naturally inherits from ClickHouse and still uses some of CH's abstractions, for example:

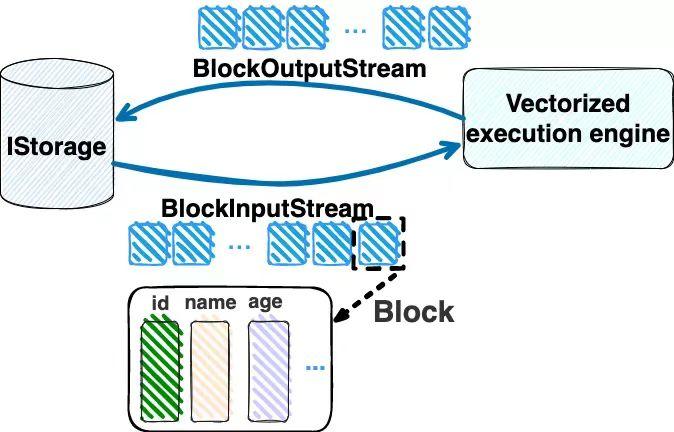

IColumn represents data organized in columns in memory. IDataType is an abstraction of data types. Block is a data block composed of multiple IColumns, which is the basic unit of data processing during execution.
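To make the relationship between these three abstractions concrete, here is a deliberately simplified C++ sketch. `SimpleColumn`, `SimpleDataType`, `ColumnWithName`, and `SimpleBlock` are hypothetical stand-ins invented for illustration, not the real IColumn, IDataType, and Block interfaces, which are far richer. The only point it shows is that a Block is a set of named, typed columns that all have the same number of rows.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for IDataType: here it only carries a type name.
struct SimpleDataType {
    std::string name;  // e.g. "Int64"
};

// Hypothetical stand-in for IColumn: the values of one column stored contiguously.
// Real column implementations are type-specific; this sketch fixes the element type to int64_t.
struct SimpleColumn {
    std::vector<int64_t> data;
    size_t size() const { return data.size(); }
};

// One named, typed column inside a block.
struct ColumnWithName {
    std::string name;
    SimpleDataType type;
    SimpleColumn column;
};

// Hypothetical stand-in for Block: several columns with the same row count,
// processed together as one unit during execution.
class SimpleBlock {
public:
    void insert(ColumnWithName col) {
        // Every column in a block must have the same number of rows.
        assert(columns_.empty() || col.column.size() == rows());
        columns_.push_back(std::move(col));
    }
    size_t rows() const { return columns_.empty() ? 0 : columns_.front().column.size(); }
    size_t columns() const { return columns_.size(); }
    const ColumnWithName & getByName(const std::string & name) const {
        for (const auto & c : columns_)
            if (c.name == name)
                return c;
        throw std::out_of_range("no column named " + name);
    }

private:
    std::vector<ColumnWithName> columns_;
};
```

For example, inserting columns `a = {1, 2, 3}` and `b = {10, 20, 30}` yields a block with 3 rows and 2 columns; inserting a column of a different length trips the assertion.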

During execution, Blocks are organized as streams and "flow" from the storage layer to the computing layer through BlockInputStreams. BlockOutputStreams, conversely, generally "write out" data from the execution engine to the storage layer or to other nodes.
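The "flow" of Blocks can be sketched as a pull-based pipeline: a consumer repeatedly calls `read()` on its child, and an empty result signals the end of the stream, the convention ClickHouse-style input streams follow. The names `InputStream`, `SourceStream`, `FilterStream`, `Batch`, and `drain` below are hypothetical, and a "block" is reduced to a vector of int64 values to keep the sketch short.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// A "block" reduced to a batch of int64 values from one column.
// An empty batch signals end of stream.
using Batch = std::vector<int64_t>;

// Hypothetical pull-based input stream interface.
struct InputStream {
    virtual ~InputStream() = default;
    virtual Batch read() = 0;  // returns an empty Batch when exhausted
};

// Plays the role of the storage layer: hands out pre-built batches one by one.
class SourceStream : public InputStream {
public:
    explicit SourceStream(std::vector<Batch> batches) : batches_(std::move(batches)) {}
    Batch read() override {
        if (next_ >= batches_.size())
            return {};
        return batches_[next_++];
    }

private:
    std::vector<Batch> batches_;
    size_t next_ = 0;
};

// Plays the role of a computing-layer operator: keeps values greater than a threshold.
class FilterStream : public InputStream {
public:
    FilterStream(std::unique_ptr<InputStream> child, int64_t threshold)
        : child_(std::move(child)), threshold_(threshold) {
        assert(child_ != nullptr);
    }
    Batch read() override {
        // Keep pulling until a non-empty batch is produced or the child is exhausted,
        // so a fully filtered batch is never mistaken for end of stream.
        for (Batch in = child_->read(); !in.empty(); in = child_->read()) {
            Batch out;
            for (int64_t v : in)
                if (v > threshold_)
                    out.push_back(v);
            if (!out.empty())
                return out;
        }
        return {};
    }

private:
    std::unique_ptr<InputStream> child_;
    int64_t threshold_;
};

// Drains a stream the way an executor would: call read() until an empty batch.
inline std::vector<int64_t> drain(InputStream & stream) {
    std::vector<int64_t> all;
    for (Batch b = stream.read(); !b.empty(); b = stream.read())
        all.insert(all.end(), b.begin(), b.end());
    return all;
}
```

A `FilterStream` with threshold 4 stacked on a `SourceStream` over the batches `{1, 5, 9}`, `{2, 3}`, `{7}` produces 5, 9, 7. The batch `{2, 3}` filters to nothing, so `read()` keeps pulling instead of returning an empty batch that would end the stream early.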

IStorage is an abstraction of the storage layer, which defines basic operations such as data writing, reading, DDL operations, and table locks.

DeltaTree engine

Although TiFlash largely reuses CH's vectorized computing engine, its storage layer does not use CH's MergeTree engine. Instead, we developed a new column storage engine better suited to HTAP scenarios, called DeltaTree, which corresponds to "StorageDeltaMerge" in the code.

What problems does the DeltaTree engine solve?

A. Native support for high-frequency writes, which makes it suitable for integrating with TP systems and better supports analytical workloads in HTAP scenarios.

B. Better read performance while supporting real-time updates to the column store. Its design prioritizes Scan performance and may give up some write performance compared with CH's native MergeTree.

C. Compliance with TiDB's transaction model, including support for MVCC filtering.

D. Data is managed in shards, which makes it easier to provide column-store features that benefit analytical scenarios, such as the Rough Set Index.

Why does the DeltaTree engine have the features above 🤔? Before answering, let's review the problems with CH's native MergeTree engine. The MergeTree engine can be understood as a columnar implementation of the classic LSM Tree (Log-Structured Merge Tree), where each "part" folder corresponds to an SSTFile (Sorted String Table File). Originally, the MergeTree engine had no WAL: every write, even of a single row, generated a new part. As a result, if the MergeTree engine is used to absorb high-frequency writes, a large number of fragmented files accumulate on disk, and both its write and read performance fluctuate severely. This problem was only partially alleviated in 2020, when CH added a WAL to the MergeTree engine (ClickHouse/8290).

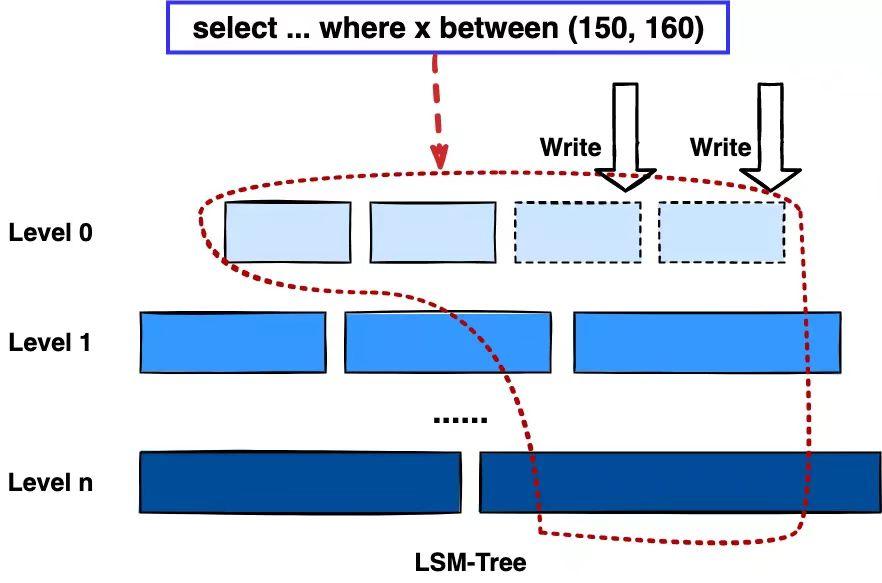

So with a WAL, can the MergeTree engine handle TiDB's data well? Not quite. TiDB is a relational database that implements Snapshot Isolation transactions through MVCC. This means the workload TiFlash carries includes many update operations, and its read requests must filter data by MVCC version to determine which rows to read. If data is organized as an LSM Tree, a Scan must merge and filter, in heap-sort fashion, data from all files in L0 plus all files in other levels whose key ranges overlap the query's key range. Pushing data into and popping it out of the heap causes frequent CPU branch mispredictions and a low cache hit rate. Our tests show that when processing Scan requests, much of the CPU time is spent in this heap-based merge.
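The heap-based merge described above can be sketched as a textbook k-way merge with `std::priority_queue`. This is a generic illustration, not ClickHouse or TiFlash code; `Row`, `Run`, and `kWayMerge` are hypothetical names, and a row is reduced to its (handle, version) key. Every output row pays for one pop and one push, each involving data-dependent comparisons.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <queue>
#include <tuple>
#include <vector>

// One versioned row key: (handle, version). Real rows also carry column data.
struct Row {
    int64_t handle;
    uint64_t version;
};

// A sorted run, e.g. the rows of one file that overlaps the scan's key range.
using Run = std::vector<Row>;

// Heap-based k-way merge of sorted runs into one stream ordered by (handle, version).
// Each output row costs a pop plus a push, each O(log k) with data-dependent branches:
// the branchy, cache-unfriendly work that dominates Scan CPU time on an LSM layout.
inline std::vector<Row> kWayMerge(const std::vector<Run> & runs) {
    auto byKey = [](const Row & x, const Row & y) {
        return x.handle != y.handle ? x.handle < y.handle : x.version < y.version;
    };
    // Heap entry: (handle, version, run index, position in run); smallest on top.
    using Entry = std::tuple<int64_t, uint64_t, size_t, size_t>;
    std::priority_queue<Entry, std::vector<Entry>, std::greater<Entry>> heap;
    for (size_t r = 0; r < runs.size(); ++r) {
        assert(std::is_sorted(runs[r].begin(), runs[r].end(), byKey));  // precondition
        if (!runs[r].empty())
            heap.emplace(runs[r][0].handle, runs[r][0].version, r, 0);
    }

    std::vector<Row> out;
    while (!heap.empty()) {
        auto [handle, version, r, pos] = heap.top();
        heap.pop();
        out.push_back({handle, version});
        if (pos + 1 < runs[r].size()) {
            const Row & next = runs[r][pos + 1];
            heap.emplace(next.handle, next.version, r, pos + 1);
        }
    }
    return out;
}
```

Note that every Scan repeats this work from scratch, which is the cost the Delta Index discussed later tries to avoid.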

In addition, with an LSM Tree, expired data can usually only be cleaned up during level compaction (that is, when overlapping files in levels Lk-1 and Lk are compacted together). Level compaction causes significant write amplification. When background compaction traffic is heavy, it interferes with foreground reads and writes, resulting in unstable performance.

These three problems with the MergeTree engine (write fragmentation, severe CPU cache misses during Scan, and the reliance on compaction to clean up expired data) cause large fluctuations in read and write performance for any transactional storage engine built on MergeTree in HTAP scenarios with data updates.

DeltaTree's solution and module division

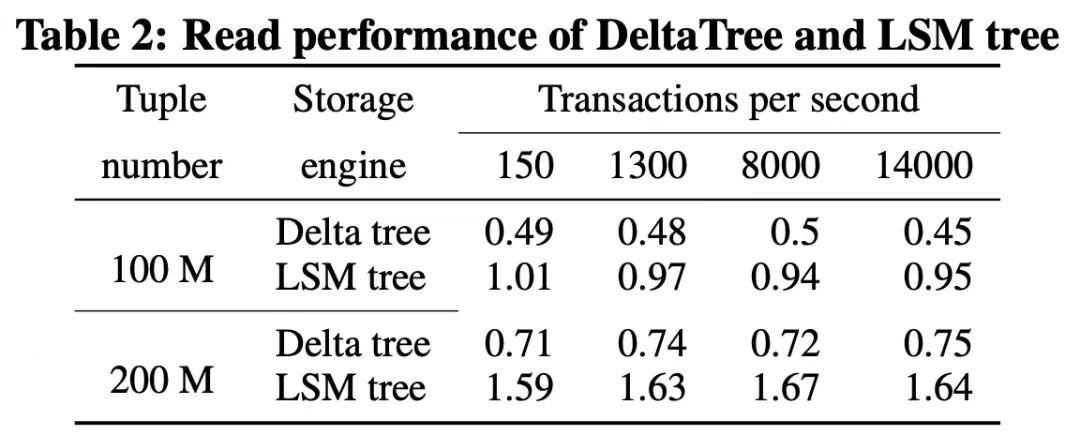

Before looking at the implementation, let's see how well DeltaTree performs. The figure above compares the read (Scan) latency of DeltaTree with that of a MergeTree-based column storage engine with transaction support, under different data volumes (numbers of tuples) and different update rates (TPS, transactions per second). In this scenario, DeltaTree's read performance is roughly twice that of the latter.

So how is DeltaTree designed to address these problems?

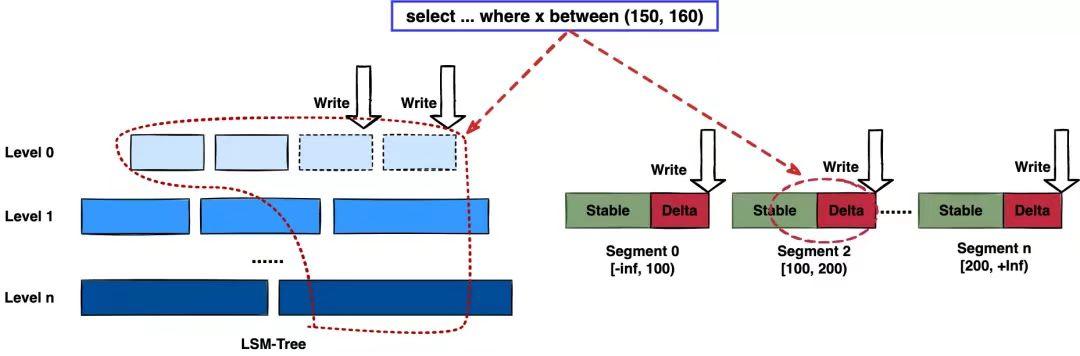

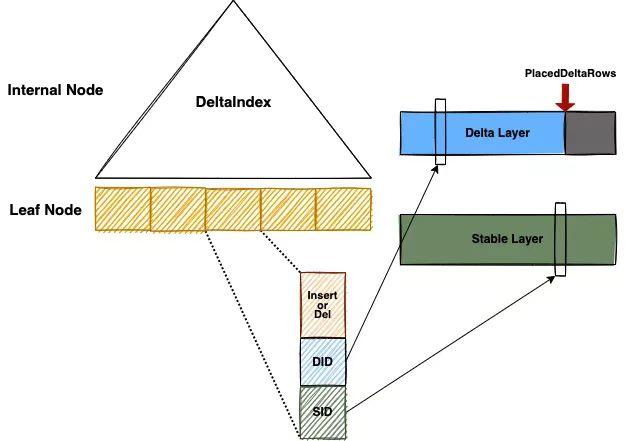

First, within a table, we partition data horizontally by key range of the handle column, and each partition is called a Segment. During compaction, each Segment's data is organized independently of the others, which reduces write amplification. This is somewhat similar to the idea behind PebblesDB [1].
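The horizontal partitioning into Segments can be sketched with an ordered map from each Segment's start key to its id. `SegmentMap`, `segmentFor`, and `segmentsFor` are hypothetical names; the sketch assumes int64 handles and that the first Segment begins at the smallest possible handle, so every handle falls into exactly one Segment.

```cpp
#include <cassert>
#include <cstdint>
#include <iterator>
#include <limits>
#include <map>
#include <vector>

// Hypothetical sketch: a table's handle space split into contiguous,
// non-overlapping key ranges, one per Segment. Segment i covers
// [start_i, start_{i+1}); the first Segment starts at INT64_MIN.
class SegmentMap {
public:
    // `extra_starts` must be strictly increasing; segment ids are 0, 1, 2, ...
    explicit SegmentMap(const std::vector<int64_t> & extra_starts) {
        int id = 0;
        starts_[std::numeric_limits<int64_t>::min()] = id++;
        for (int64_t s : extra_starts) {
            assert(s > std::prev(starts_.end())->first);  // keep ranges ordered
            starts_[s] = id++;
        }
    }

    // The Segment owning `handle`: the last Segment whose start is <= handle.
    int segmentFor(int64_t handle) const {
        auto it = starts_.upper_bound(handle);
        return std::prev(it)->second;
    }

    // Segments overlapping the query range [begin, end); only these need scanning.
    std::vector<int> segmentsFor(int64_t begin, int64_t end) const {
        std::vector<int> ids;
        if (begin >= end)
            return ids;
        for (auto it = std::prev(starts_.upper_bound(begin));
             it != starts_.end() && it->first < end; ++it)
            ids.push_back(it->second);
        return ids;
    }

private:
    std::map<int64_t, int> starts_;  // segment start key -> segment id
};
```

With split points 100 and 200, handle 150 maps to Segment 1, and a scan of [150, 210) only touches Segments 1 and 2. Because each Segment owns a disjoint key range, work such as compaction can likewise stay within one Segment.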

Within each Segment, we use a delta-stable layout: newly modified data is appended to the end of a write-optimized structure (DeltaValueSpace.h), which is periodically merged into a read-optimized structure (StableValueSpace.h). The Stable Layer stores relatively old data, which makes up most of the volume. It cannot be modified in place, only replaced. When the Delta Layer is full, it is merged with the Stable Layer (an operation called Delta Merge) to produce a new Stable Layer and restore read performance. Many column stores that support updates organize data in a similar delta-stable form, such as Apache Kudu [2]. Interested readers can also look at the paper "Fast scans on key-value stores" [3], which analyzes the trade-offs among data organizations, MVCC data layouts, and expired-data GC; its authors ultimately also chose a delta-main layout combined with column storage.
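A single delta-stable Segment can be sketched as an append-only delta log plus an immutable, sorted stable vector that a Delta Merge replaces wholesale once the delta reaches a size limit. This is a hypothetical illustration (`DeltaStableSegment` and `delta_limit` are invented names) that ignores MVCC versions, persistence, and concurrency, and it uses a `std::map` for the merge purely for brevity.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical sketch of a delta-stable Segment, ignoring MVCC versions.
// - Stable: sorted and immutable; never modified in place, only replaced.
// - Delta: append-only log of recent writes, cheap to write.
class DeltaStableSegment {
public:
    struct Entry {
        int64_t handle;
        int64_t value;
        bool is_delete;
    };
    using Stable = std::vector<std::pair<int64_t, int64_t>>;  // sorted by handle

    explicit DeltaStableSegment(size_t delta_limit)
        : stable_(std::make_shared<Stable>()), delta_limit_(delta_limit) {
        assert(delta_limit > 0);
    }

    void upsert(int64_t handle, int64_t value) { append({handle, value, false}); }
    void remove(int64_t handle) { append({handle, 0, true}); }

    // Read view: stable rows overridden by delta entries (later entries win).
    Stable scan() const { return mergeInto(*stable_, delta_); }

    size_t deltaSize() const { return delta_.size(); }
    size_t stableRows() const { return stable_->size(); }

private:
    void append(Entry e) {
        delta_.push_back(e);  // write-optimized: just append
        if (delta_.size() >= delta_limit_)
            deltaMerge();
    }

    // Delta Merge: build a brand-new stable and swap it in instead of editing
    // the old one in place, then start a fresh, empty delta.
    void deltaMerge() {
        stable_ = std::make_shared<Stable>(mergeInto(*stable_, delta_));
        delta_.clear();
    }

    // Applies delta entries in write order on top of the stable rows.
    static Stable mergeInto(const Stable & stable, const std::vector<Entry> & delta) {
        std::map<int64_t, int64_t> rows(stable.begin(), stable.end());
        for (const Entry & e : delta) {
            if (e.is_delete)
                rows.erase(e.handle);
            else
                rows[e.handle] = e.value;
        }
        return Stable(rows.begin(), rows.end());
    }

    std::shared_ptr<const Stable> stable_;
    std::vector<Entry> delta_;
    size_t delta_limit_;
};
```

With `delta_limit = 3`, the third write triggers a Delta Merge, after which the delta is empty and the stable holds the merged rows; later writes go to the delta again until the next merge.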

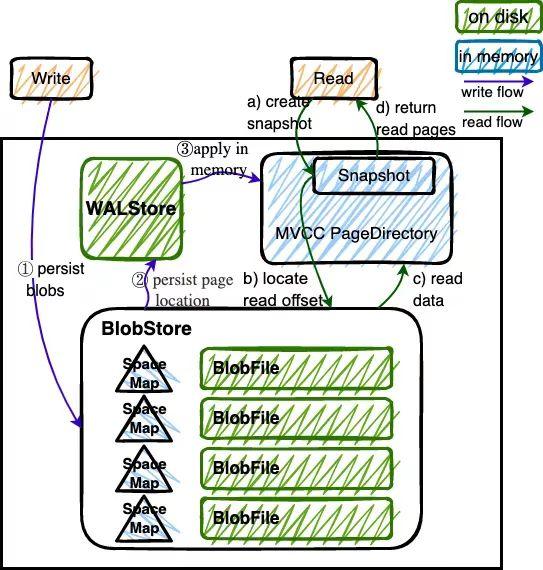

Delta Layer data is stored in PageStorage. Stable Layer data is mainly stored in DTFiles, whose life cycle is managed through PageStorage. The metadata of Segment, DeltaValueSpace, and StableValueSpace is also stored in PageStorage. These three kinds of data correspond, respectively, to the log, data, and meta of the StoragePool structure in DeltaTree.

PageStorage module

As mentioned above, Delta Layer data and some DeltaTree metadata, which are relatively small data blocks, are serialized into byte strings and written to PageStorage as "Pages". PageStorage is a storage abstraction in TiFlash, similar to an object store. It is designed mainly for the Delta Layer's access patterns: high-frequency reads, such as looking up one or more PageIDs on a snapshot, and writes that are relatively frequent compared with the Stable Layer. A typical Page in PageStorage ranges from several KiB to several MiB.

PageStorage is a relatively complex component, and its internals are not covered today. For now, it is enough to know that PageStorage provides at least the following three capabilities:

Provides a WriteBatch interface that guarantees each WriteBatch is written atomically

Provides a Snapshot function that obtains a read-only view without blocking writes

Provides the ability to read part of a Page's data (for example, only the selected columns)
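The three capabilities can be illustrated with a small in-memory model. Everything here is hypothetical (`MiniPageStorage`, `getSnapshot`, `read`, and the `putPage`/`delPage` methods of this sketch's `WriteBatch`) and is not TiFlash's PageStorage API. It copies the whole page map on every write, which is easy to reason about but far too expensive for a real store: atomicity comes from publishing a fully built map with one pointer swap, and a snapshot is just a shared pointer to a map that no later write modifies.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <memory>
#include <mutex>
#include <optional>
#include <string>
#include <utility>
#include <vector>

using PageId = uint64_t;
using PageMap = std::map<PageId, std::string>;

// Collects puts and deletes that must become visible together.
class WriteBatch {
public:
    void putPage(PageId id, std::string data) { ops_.push_back({id, std::move(data), false}); }
    void delPage(PageId id) { ops_.push_back({id, {}, true}); }

private:
    friend class MiniPageStorage;
    struct Op { PageId id; std::string data; bool is_delete; };
    std::vector<Op> ops_;
};

class MiniPageStorage {
public:
    using Snapshot = std::shared_ptr<const PageMap>;

    // 1. Atomic WriteBatch: apply every op to a private copy, then publish the
    //    copy with a single pointer swap, so readers see all ops or none.
    void write(const WriteBatch & wb) {
        std::lock_guard<std::mutex> lock(mu_);
        auto next = std::make_shared<PageMap>(*current_);
        for (const auto & op : wb.ops_) {
            if (op.is_delete)
                next->erase(op.id);
            else
                (*next)[op.id] = op.data;
        }
        current_ = std::move(next);
    }

    // 2. Snapshot: a read-only view; the lock is held only to copy the pointer,
    //    and later writes build new maps instead of touching this one.
    Snapshot getSnapshot() const {
        std::lock_guard<std::mutex> lock(mu_);
        return current_;
    }

    // 3. Partial read: return only bytes [offset, offset + size) of a page,
    //    e.g. the region holding one selected column's data.
    static std::optional<std::string> read(const Snapshot & snap, PageId id,
                                           size_t offset = 0,
                                           size_t size = std::string::npos) {
        assert(snap != nullptr);
        auto it = snap->find(id);
        if (it == snap->end() || offset > it->second.size())
            return std::nullopt;
        return it->second.substr(offset, size);
    }

private:
    mutable std::mutex mu_;
    std::shared_ptr<const PageMap> current_ = std::make_shared<PageMap>();
};
```

A snapshot taken before a second WriteBatch still sees the old pages, while a snapshot taken afterwards sees all of the second batch's changes at once.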

Read Index: DeltaTree Index

As mentioned earlier, multi-way merging on an LSM Tree is CPU-intensive. Can we avoid redoing it on every read? Yes. In fact, some in-memory databases already apply a similar idea: after the first Scan completes, we save the information produced by the multi-way merge so that subsequent Scans can reuse it. This reusable information is called the Delta Index, and it is implemented as a B+ Tree. Using the Delta Index, the Delta Layer and the Stable Layer are combined to output a single sorted stream. In most cases, the Delta Index turns a CPU-bound merge with many cache misses into copies of contiguous memory blocks, which improves Scan performance.
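The idea of reusing merge results can be sketched as follows. This is a simplification, not the real Delta Index: instead of a B+ Tree it stores a flat list of hypothetical `MergeStep`s (`MergePlan`, `buildMergePlan`, and `scanWithPlan` are also invented names), and it assumes the delta contains only inserts of handles that are not already in the stable layer. The first scan builds the plan with key comparisons; later scans replay it as bulk copies of contiguous stable ranges.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <iterator>
#include <vector>

// One step of a recorded merge: copy `count` consecutive stable rows starting
// at `index`, or emit delta row `index` (count is then 1).
struct MergeStep {
    bool from_stable;
    size_t index;
    size_t count;
};
using MergePlan = std::vector<MergeStep>;  // hypothetical stand-in for a Delta Index

// Built once, on the first scan: a comparison-driven merge (the expensive part).
// Both inputs are sorted handles; delta handles are assumed absent from stable.
inline MergePlan buildMergePlan(const std::vector<int64_t> & stable,
                                const std::vector<int64_t> & delta) {
    assert(std::is_sorted(stable.begin(), stable.end()));
    assert(std::is_sorted(delta.begin(), delta.end()));
    MergePlan steps;
    size_t s = 0;
    for (size_t d = 0; d < delta.size(); ++d) {
        // Remaining stable rows smaller than this delta row form one contiguous run.
        auto run_end_it = std::lower_bound(stable.begin() + s, stable.end(), delta[d]);
        size_t run_end = static_cast<size_t>(run_end_it - stable.begin());
        if (run_end > s)
            steps.push_back({true, s, run_end - s});
        steps.push_back({false, d, 1});
        s = run_end;
    }
    if (s < stable.size())
        steps.push_back({true, s, stable.size() - s});
    return steps;
}

// Reused by later scans: no key comparisons, mostly bulk copies of contiguous
// stable ranges.
inline std::vector<int64_t> scanWithPlan(const MergePlan & steps,
                                         const std::vector<int64_t> & stable,
                                         const std::vector<int64_t> & delta) {
    std::vector<int64_t> out;
    out.reserve(stable.size() + delta.size());
    for (const MergeStep & step : steps) {
        if (step.from_stable)
            std::copy_n(stable.begin() + step.index, step.count, std::back_inserter(out));
        else
            out.push_back(delta[step.index]);
    }
    return out;
}
```

For stable `{1, 2, 3, 5, 8, 9}` and delta `{4, 7}`, the recorded plan is: copy stable[0..3), emit delta[0], copy stable[3..4), emit delta[1], copy stable[4..6). When the delta is small relative to the stable, most of the output comes from a few large copies.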

Rough Set Index

Many databases attach statistics to data blocks so that blocks can be skipped during queries, reducing unnecessary IO. Some call this auxiliary structure a KnowledgeNode, others call it ZoneMaps. TiFlash follows the open-source implementation of InfoBright [4] and adopts the name Rough Set Index (referred to as a "coarse-grained index" in Chinese).

TiFlash adds an MvccQueryInfo structure to the SelectQueryInfo structure to carry the query's key ranges. When processing a query, DeltaTree first filters at the Segment level based on these key ranges. In addition, the query's filter conditions are converted from the DAGRequest into an RSFilter structure, which is used while reading to filter data blocks in the ColumnFile.
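A min-max index, the simplest form of Rough Set Index, can be sketched as follows. The names `MinMax`, `buildMinMaxIndex`, and `packsToRead` and the fixed pack size are hypothetical; the real RSFilter is converted from the DAGRequest and is more general than the single equality predicate shown here. A pack whose [min, max] does not contain the value is skipped without any IO, while a pack that passes may still contain no matching row.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Per-pack statistics for one column.
struct MinMax {
    int64_t min;
    int64_t max;
};

// Build one MinMax entry per pack of `pack_rows` consecutive values.
inline std::vector<MinMax> buildMinMaxIndex(const std::vector<int64_t> & column,
                                            size_t pack_rows) {
    assert(pack_rows > 0);
    std::vector<MinMax> index;
    for (size_t start = 0; start < column.size(); start += pack_rows) {
        size_t end = std::min(start + pack_rows, column.size());
        auto [lo, hi] = std::minmax_element(column.begin() + start, column.begin() + end);
        index.push_back({*lo, *hi});
    }
    return index;
}

// Rough filter for the predicate `column = value`: keep a pack only if value lies
// in [min, max]. A kept pack *may* match and must still be read and checked row
// by row; a skipped pack certainly contains no match.
inline std::vector<size_t> packsToRead(const std::vector<MinMax> & index, int64_t value) {
    std::vector<size_t> packs;
    for (size_t i = 0; i < index.size(); ++i)
        if (index[i].min <= value && value <= index[i].max)
            packs.push_back(i);
    return packs;
}
```

For a column `{5, 7, 9, 100, 120, 130, 1, 2, 3}` split into packs of 3 rows, the predicate `= 100` reads only pack 1, and `= 50` reads no pack at all.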

Rough Set filtering in TiFlash differs from that in typical AP databases mainly because we must consider how the coarse-grained index affects MVCC correctness. For example, suppose a table has columns a and b plus the write version tso, where a is the primary key. At time t0, Insert(x, 100, t0) is written and lands in a data block of the Stable VS. At time t1, a delete marker Delete(x, 0, t1) is written and stored in the Delta Layer. Now consider the query select * from T where b = 100. If we applied index filtering to both the Stable Layer and the Delta Layer, the Stable data block would be selected while the Delta data block would be filtered out. The row (x, 100, t0) would then be incorrectly returned to the upper layer, because its delete marker was discarded.

Therefore, TiFlash applies only the handle-column index to Delta Layer data blocks. The Rough Set Index on non-handle columns is mainly used to filter Stable data blocks. Since Stable data generally accounts for more than 90% of the total, the overall filtering effect is still good.
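The MVCC pitfall in the example above can be reproduced in a few lines. The hypothetical `selectWhereB` prunes packs with a min-max check on b, then applies a simplified MVCC filter (keep the newest version with tso <= read_ts, drop delete markers), then evaluates `b = value`. It assumes each handle has at most one version in the Stable Layer, and it uses x = 1, t0 = 10, t1 = 20 as concrete values.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <map>
#include <vector>

// Hypothetical row version of table T(a, b): a is the primary key (handle),
// tso is the write version, is_delete marks a delete.
struct Version {
    int64_t a;
    int64_t b;
    uint64_t tso;
    bool is_delete;
};
using Pack = std::vector<Version>;  // one data block

// Min-max (rough set) check on column b for the predicate `b = value`.
inline bool packMayMatchB(const Pack & pack, int64_t value) {
    auto [lo, hi] = std::minmax_element(pack.begin(), pack.end(),
        [](const Version & x, const Version & y) { return x.b < y.b; });
    return !pack.empty() && lo->b <= value && value <= hi->b;
}

// SELECT * FROM T WHERE b = value, read at `read_ts`.
// prune_delta_by_b = true also applies the b index to Delta packs (incorrect);
// false applies it to Stable packs only.
inline std::vector<Version> selectWhereB(const std::vector<Pack> & stable,
                                         const std::vector<Pack> & delta,
                                         int64_t value, uint64_t read_ts,
                                         bool prune_delta_by_b) {
    // 1. Rough set pruning: gather row versions from the packs that survive.
    std::vector<Version> candidates;
    for (const Pack & p : stable)
        if (packMayMatchB(p, value))
            candidates.insert(candidates.end(), p.begin(), p.end());
    for (const Pack & p : delta)
        if (!prune_delta_by_b || packMayMatchB(p, value))
            candidates.insert(candidates.end(), p.begin(), p.end());

    // 2. Simplified MVCC: per handle, keep the newest version with tso <= read_ts.
    std::map<int64_t, Version> latest;
    for (const Version & v : candidates) {
        if (v.tso > read_ts)
            continue;
        auto it = latest.find(v.a);
        if (it == latest.end() || it->second.tso < v.tso)
            latest[v.a] = v;
    }

    // 3. Drop deleted rows, then evaluate the predicate row by row.
    std::vector<Version> result;
    for (const auto & entry : latest)
        if (!entry.second.is_delete && entry.second.b == value)
            result.push_back(entry.second);
    return result;
}
```

Reading at tso 30, pruning Delta packs by b discards the delete marker and wrongly returns (1, 100, 10), whereas pruning only Stable packs correctly returns nothing. Reading at tso 15, before the delete, the row is correctly visible.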

Below are the code locations of each module in the DeltaTree engine. Readers can try to recall which part of the discussion above each one corresponds to ;)

Code locations of the modules in the DeltaTree engine

dbms/src/Storages/

├── Page # PageStorage

└── DeltaMerge

├── DeltaMergeStore.h # definition of the DeltaTree engine

├── Segment.h # Segment

├── StableValueSpace.h # Stable Layer

├── Delta # Delta Layer

├── DeltaMerge.h # merge process between Stable and Delta

├── File # storage format of the Stable Layer

├── DeltaTree.h, DeltaIndex.h # Delta Index

├── Index, Filter, FilterParser # Rough Set Filter

└── DMVersionFilterBlockInputStream.h # MVCC filtering

Summary

This article introduced TiFlash's overall module layering and how the DeltaTree engine in the storage layer is optimized for TiDB's HTAP scenarios. It briefly covered the composition and role of DeltaTree's components but omitted some details, such as the internal implementation of PageStorage, how the Delta Index is built and updated, and how TiFlash connects to Multi-Raft. More code walkthroughs will follow in later articles, so stay tuned.


References

[1] PebblesDB: Building Key-Value Stores using Fragmented Log-Structured Merge Trees. SOSP 2017.

[2] Kudu: Storage for Fast Analytics on Fast Data.

[3] Fast scans on key-value stores. VLDB 2017.

[4] Brighthouse: an analytic data warehouse for ad-hoc queries.
