Foreword

Amazon Cloud Technology offers a free tier for more than 100 products. Among them, the compute service Amazon EC2 is free for the first 12 months, up to 750 hours per month; the storage service Amazon S3 is free for the first 12 months, with 5 GB of standard storage.

Amazon Nitro System has been secretly developed since 2013, officially released in 2017, and has iterated to the fifth generation by 2021.

As a pioneer of the hardware virtualization technology that has become popular in recent years, the Amazon Nitro System has had many motives and meanings attributed to it by people from all walks of life. But as Swami, vice president of Amazon Cloud Technology, said: "At Amazon Web Services, 90% to 95% of our new projects are based on customer feedback, and the remaining 5% are also innovative attempts from the customer's perspective."

As a long-time user of Amazon Cloud Technology, the author believes that the "cause" behind the Amazon Nitro System comes from first-principles thinking: meeting customers' diverse needs for EC2 instance types. The significant performance gains and cost reductions we now see from the Amazon Nitro System are simply a consequence of that "cause".

Therefore, in this article, I hope to review the evolution of Amazon Nitro System from a technical point of view and return to the original "cause".

Amazon Nitro System Overview

The new architecture of the Nitro System has enabled explosive growth in EC2 instance types since 2017, and there are now more than 400 of them. Starting in 2022, previous-generation EC2 instance types such as M1, M2, M3, C1, C3, R3, I2, and T1 will also run on the Nitro System. For example:

- Amazon EC2 high-memory instances, supporting up to 24TiB of memory, are designed for running large in-memory databases.

- Amazon EC2 compute-optimized instances, supporting up to 192 vCPUs and 384 GiB of memory, are designed for compute-intensive workloads.

- Amazon EC2 accelerated computing instances, supporting up to 8 NVIDIA A100 Tensor Core GPUs, 600 GB/s NVSwitch, and 400 Gbps of ENA and EFA bandwidth, are designed for AI/ML and HPC workloads.

For more Amazon EC2 instance types, please click the link for details.

- https://aws.amazon.com/cn/ec2/instance-types/?trk=24e40fb1-f5cc-4b8c-a6a2-d3f22bc5c5c4&sc_channel=el

In the "post-Moore's Law" era, keeping up with the growth of business needs and continuing to introduce higher-specification instance types is obviously not an easy task. On the one hand, the virtualization technology at the infrastructure layer of the cloud platform must reach near-bare-metal performance, and even provide a more thorough elastic bare metal instance service. On the other hand, purely software-based optimization is unsustainable, and a more revolutionary idea and form is needed. Acceleration through software-hardware integration is one such approach: offload the system components of the cloud platform's infrastructure layer to dedicated hardware as much as possible, freeing up precious host CPU cores so that more of the server's resources can serve customer needs.

As Anthony Liguori, Principal Engineer on the Amazon Nitro System, said: "The Nitro System is used to ensure that EC2 compute instances can fully open up the entire underlying server resources to customers."

Based on this idea, Amazon Nitro System has opened the road to commercialized products accelerated by the integration of software and hardware.

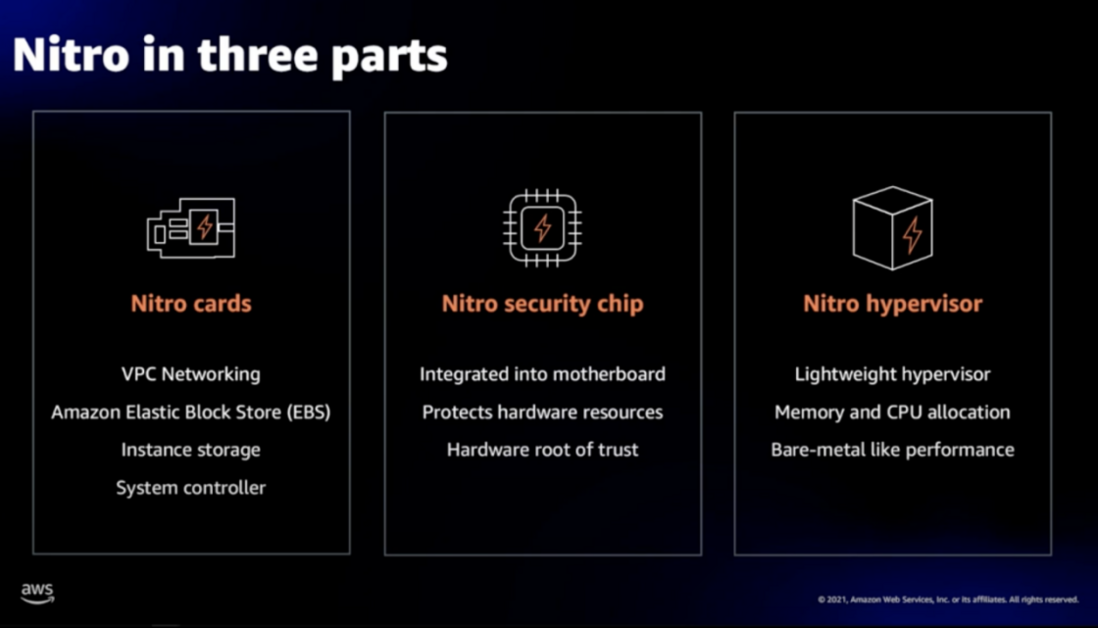

As the name suggests, the Amazon Nitro System is not a single dedicated hardware device but a complete system of cooperating hardware and software. It consists of three major parts: the Nitro Hypervisor, the Nitro I/O Accelerator Cards, and the Nitro Security Chip. Two further parts, Nitro Enclaves and NitroTPM (coming soon in 2022), are joining them this year. The parts cooperate with each other while remaining independent of each other.

- Nitro Hypervisor: A lightweight hypervisor that is only responsible for managing the allocation of CPU and memory. It occupies almost no host resources, so nearly all server resources can be used to run customer workloads.

- Nitro Cards: A series of co-processing peripheral cards for offloading and acceleration. They carry the network, storage, security, and management functions, greatly improving network and storage performance and providing built-in security.

- Nitro Security Chip: As more functions are offloaded to dedicated hardware devices, the Nitro Security Chip protects those devices and their firmware, including by limiting cloud platform maintainers' access to the devices, which rules out human error and malicious tampering.

- Nitro Enclaves: To further protect the personal information and data security of EC2 users, Nitro Enclaves builds on the Nitro Hypervisor to create computing environments with fully isolated CPU and memory, in which highly sensitive data can be protected and processed securely.

- Nitro TPM (Trusted Platform Module): Supporting the TPM 2.0 standard, Nitro TPM allows EC2 instances to generate, store, and use keys, and supports cryptographic verification of instance integrity through the TPM 2.0 attestation mechanism. This effectively protects EC2 instances and prevents unauthorized users from accessing users' private data.
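To make the idea of "cryptographic verification of instance integrity" more concrete, here is a minimal sketch of the TPM 2.0-style PCR "extend" operation on a SHA-256 bank. It is a generic illustration of how a measurement chain works, not Nitro TPM's actual implementation; the component names and the helper `measure_boot_chain` are hypothetical.

```python
import hashlib
from typing import Iterable

PCR_SIZE = 32  # size in bytes of a SHA-256 PCR


def pcr_extend(pcr: bytes, measurement: bytes) -> bytes:
    """Fold one measurement into a PCR: new = SHA256(old || SHA256(measurement)).

    In a real TPM the caller supplies the digest; hashing the raw
    measurement here is a simplification made for this sketch.
    """
    digest = hashlib.sha256(measurement).digest()
    return hashlib.sha256(pcr + digest).digest()


def measure_boot_chain(components: Iterable[bytes]) -> bytes:
    """Start from an all-zero PCR and extend it with each component in order."""
    pcr = bytes(PCR_SIZE)
    for blob in components:
        pcr = pcr_extend(pcr, blob)
    return pcr


# Hypothetical boot components, used only for illustration.
GOOD_CHAIN = [b"bootloader-v1", b"kernel-v1", b"initrd-v1"]
expected = measure_boot_chain(GOOD_CHAIN)
tampered = measure_boot_chain([b"bootloader-v1", b"kernel-EVIL", b"initrd-v1"])

print("chain intact:  ", measure_boot_chain(GOOD_CHAIN) == expected)
print("tamper noticed:", tampered != expected)
```

Because each value depends on everything extended before it, a verifier that knows the expected final value can detect a change to any component or to the order in which they were measured.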

The Evolution of Virtualization Technology of Amazon Cloud Technology EC2

Virtualization technology has always been the cornerstone of cloud computing. Through virtualization technology, inseparable hardware resources are abstracted into logical units that can be reconfigured, so that existing computing, storage and network resources can be allocated and shared on demand.

There is a well-known diagram of the evolution of Amazon Cloud Technology's virtualization technology, drawn in 2017 by Brendan Gregg, who explained the origin and technical architecture of the Nitro System on his blog ( https://www.brendangregg.com/blog/2017-11-29/aws-ec2-virtualization-2017.html ).

As the figure shows, Brendan splits the key virtualization technologies by function and orders them by importance. The most important are CPU and memory, followed by network I/O, then local storage I/O and remote storage I/O, and finally interrupts, the motherboard, and boot.

Amazon EC2 instances started with Xen PV (paravirtualization), later moved to Xen HVM (hardware-assisted full virtualization), then gradually added SR-IOV NIC hardware acceleration, and finally, in 2017, arrived at the Nitro System, which integrates software and hardware.

The context of the entire technological evolution is clear, but we cannot stop here, and we need to continue to explore the reasons behind it.

Nitro Hypervisor

As mentioned earlier, m1.small, the first EC2 instance type, launched by Amazon Cloud Technology in 2006, used Xen virtualization. For 10 cents per hour, the first Amazon Cloud Technology users got a cloud host equivalent to a 1.7 GHz Intel Xeon CPU with 1.75 GiB of memory, 160 GB of disk, and 250 Mb/s of bandwidth.

The origins of Xen virtualization technology can be traced back to the 1990s, when Ian Pratt and Keir Fraser created the initial code project for XenServer. Xen 1.0 was released in 2003, and the company XenSource was founded. At a time when Intel and AMD had not yet introduced hardware virtualization support in their CPUs, Xen chose PV (paravirtualization) as its technical direction in order to improve virtual machine performance.

As Ian Pratt, the father of Xen, said: "This project was done by myself and some students in the Computer Science Laboratory of the University of Cambridge. We realized that to make virtualization work better, we needed help from the hardware: changes to the CPU, the chipset, and some of the I/O setups, so that they could adapt to the needs of virtualization."

In those days, PV virtualization as represented by Xen stood for high-performance virtual machines. Solutions based on Xen were therefore soon integrated by vendors such as RedHat, Novell, and Sun as their default virtualization solution.

- In 2005, Xen 3.0.0 was released. It ran on 32-bit servers and was the first hypervisor to support Intel VT-x, which allows Xen virtual machines to run a completely unmodified guest OS. This was the first truly usable version of Xen.

- In 2006, RedHat made the Xen virtual machine a default feature of enterprise RHEL.

- In 2007, Novell added Xen virtualization software to its enterprise-class SLES (Suse Linux Enterprise Server) 10.

- In June 2007, RedHat included Xen virtualization capabilities in all platforms and management tools.

- In October 2007, Citrix acquired XenSource for $500 million and became the owner of the Xen virtual machine project. It then launched the virtualization product Citrix Delivery Center.

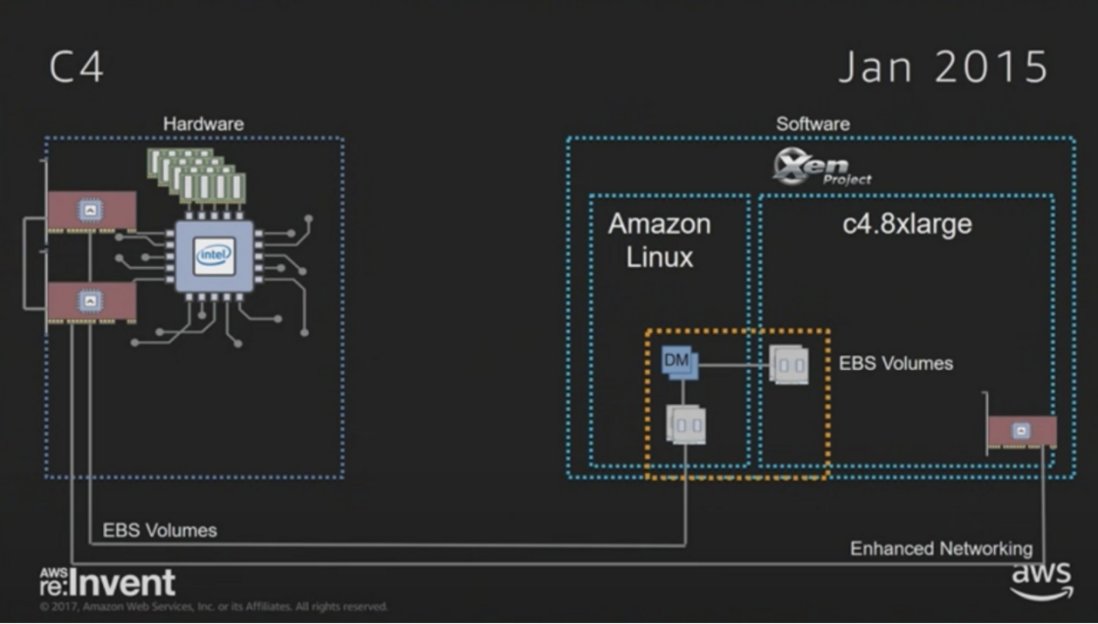

Amazon Cloud Technology, which was born in 2006, naturally chose Xen as the foundation of EC2 virtualization, and over the following ten years it released 27 EC2 instance types based on Xen.

Xen virtualizes the virtual machine's CPU and memory, but the virtual machine's I/O functions, including network and storage, must pass through a front-end module in the virtual machine and a back-end module in Domain 0, and are finally carried out by the device drivers in Domain 0.

Domain 0 is Xen's privileged virtual machine, the only one running on the Xen Hypervisor with access to physical I/O resources, and it runs a modified Linux kernel. Domain 0 must be started before any business virtual machine, and it provides peripheral emulation for the business virtual machines.

The following figure shows the architecture of the cr1.8xlarge EC2 instance, which Amazon Cloud Technology ran on Xen PV virtualization in 2013. Both the user's business virtual machines and Xen's Domain 0 management virtual machine run on the host. The business virtual machines' access to local storage, EBS volumes, and the VPC network all goes through Domain 0.

Obviously, such I/O paths are very long, which inevitably reduces I/O performance. Domain 0 also competes with the business virtual machines for the host's CPU, memory, and I/O resources, which makes it hard to balance the management virtual machine against the business virtual machines and to avoid performance jitter. Moreover, as business requirements grow, the demands on storage and network performance keep rising, which means the host must reserve more CPU cores to emulate these peripherals. Beyond a certain scale, the problem becomes very obvious.
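The resource contention described above can be put into simple arithmetic. The sketch below compares how much of a host remains for customer instances when a management VM reserves cores and memory versus when that work is offloaded. All numbers (48 cores, 384 GiB, 4 reserved cores, and so on) are hypothetical values chosen for illustration, not measurements from any real EC2 host.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Host:
    total_cores: int
    total_mem_gib: float


def guest_share(host: Host, reserved_cores: int, reserved_mem_gib: float) -> Tuple[float, float]:
    """Return the fractions of host cores and memory left for guest instances."""
    if not 0 <= reserved_cores <= host.total_cores:
        raise ValueError("reserved_cores out of range")
    if not 0 <= reserved_mem_gib <= host.total_mem_gib:
        raise ValueError("reserved_mem_gib out of range")
    core_share = (host.total_cores - reserved_cores) / host.total_cores
    mem_share = (host.total_mem_gib - reserved_mem_gib) / host.total_mem_gib
    return core_share, mem_share


# Hypothetical host and reservations, for illustration only.
host = Host(total_cores=48, total_mem_gib=384.0)
software_io = guest_share(host, reserved_cores=4, reserved_mem_gib=16.0)  # I/O emulated on the host
offloaded = guest_share(host, reserved_cores=0, reserved_mem_gib=1.0)     # I/O moved to dedicated cards

for label, (cores, mem) in (("software I/O", software_io), ("offloaded", offloaded)):
    print(f"{label:>12}: {cores:.1%} of cores, {mem:.1%} of memory for guests")
```

The gap widens as I/O performance targets rise, because more host cores have to be set aside for emulation in the software-only case.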

These problems prompted Amazon Cloud Technology to look for new ways to improve on a hypervisor architecture implemented purely in software, and to explore more flexible and feasible means of virtual machine management. The result of that exploration is the Amazon Nitro System.

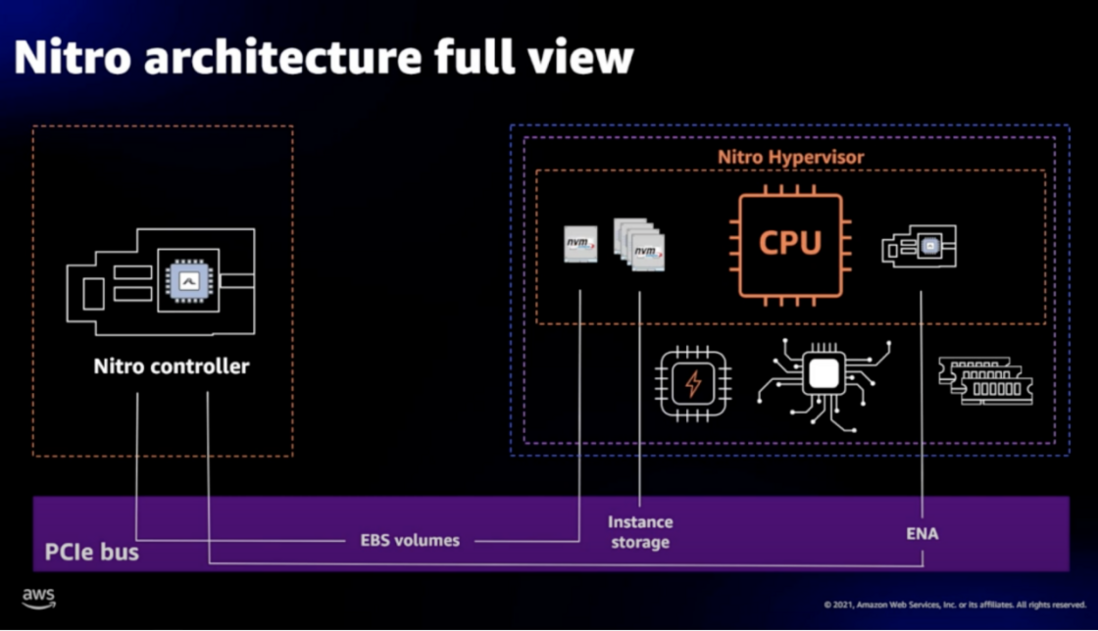

The Nitro System replaces the Xen virtualization that Amazon Cloud Technology adopted in its early days with the new Nitro Hypervisor, which is based on KVM and runs in a heavily stripped-down, customized Linux kernel. As a lightweight hypervisor, it is only responsible for managing the allocation of CPU and memory and for assigning Nitro I/O accelerator cards to instances; it does not implement any I/O virtualization itself. The Nitro Hypervisor can therefore deliver bare-metal-like performance.

Anthony Liguori, chief engineer of Amazon EC2, said: "Nitro is based on Linux KVM technology, but it does not contain the I/O components of a general-purpose operating system. Nitro Hypervisor is mainly responsible for providing CPU and memory isolation for EC2 instances. Nitro Hypervisor eliminates the software components of the host system, providing better performance consistency for EC2 instances, while increasing the compute and memory resources available on the host, making almost all server resources directly available to customers."

KVM (Kernel-based Virtual Machine) was integrated into Linux kernel 2.6.20 on February 5, 2007, and is one of the most popular virtualization technologies today. In essence, KVM is a virtualization module, kvm.ko (together with kvm-intel.ko or kvm-amd.ko), embedded in the Linux kernel. Building on operating system capabilities that already exist (e.g. task scheduling, memory management, and hardware device interaction), it adds CPU and memory virtualization, so that the Linux kernel can act as a hypervisor (VMM).

The most notable feature of KVM is that it only virtualizes CPU and memory and cannot emulate any device. A typical KVM deployment therefore also needs a user-space VMM to emulate the virtual devices the virtual machine requires (e.g. network card, graphics card, storage controller, and hard disk) and to provide an operation entry point for the guest OS. The most common choice is QEMU (Quick Emulator), so the KVM community launched the QEMU-KVM branch after making slight modifications to QEMU.
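As a small illustration of the kvm-intel/kvm-amd split mentioned above, the following sketch inspects the CPU feature flags that Linux exposes in `/proc/cpuinfo` on x86 and reports which vendor module would apply (`vmx` for Intel VT-x, `svm` for AMD-V). The function names are hypothetical helpers; the sketch only reads text and does not load or query KVM itself.

```python
import os
from typing import Optional, Set


def cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Collect the feature flags listed on 'flags' lines of /proc/cpuinfo (x86 layout)."""
    flags: Set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def kvm_module_for(cpuinfo_text: str) -> Optional[str]:
    """Return the vendor-specific KVM module name suggested by the CPU flags, if any."""
    flags = cpu_flags(cpuinfo_text)
    if "vmx" in flags:   # Intel VT-x
        return "kvm_intel"
    if "svm" in flags:   # AMD-V
        return "kvm_amd"
    return None          # no hardware virtualization flag visible


if __name__ == "__main__" and os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as f:
        print("suggested KVM module:", kvm_module_for(f.read()))
```

Inside an ordinary virtual machine the flag is often hidden from the guest, so a `None` result does not necessarily mean the physical CPU lacks the feature.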

QEMU is a free, open-source, powerful VMM implemented purely in software. By the time QEMU 4.0.0 was released in 2019, it could emulate almost all devices. However, because this emulation is done purely in software, its performance is low.

One of the biggest differences between the Nitro System and the QEMU-KVM solution commonly used in the industry is that it does not use QEMU. Instead, it offloads the device emulation that QEMU would provide to the Nitro I/O accelerator cards, which in turn provide storage, networking, management, monitoring, and security capabilities to Nitro EC2 instances.

This does not mean that native KVM ships with a hardware offloading framework that can be adopted directly. As far as I know, it inevitably involves a lot of custom adaptation work. That is why the Nitro System team did not start from the hypervisor, but tackled it only after solving offload acceleration for network and storage. Of course, Amazon Cloud Technology has no shortage of technical heavyweights in the field of virtualization, such as James Hamilton, vice president and distinguished engineer at Amazon Cloud Technology and one of the few engineers allowed to publish major technical ideas on a personal blog. On James Hamilton's blog ( https://perspectives.mvdirona.com/ ), we can read a lot of inside technical detail related to the Nitro System.

Back on topic, it wasn't until November 2017 that Amazon Cloud Technologies released the C5 instance type, which replaced Xen for the first time with the KVM-based Nitro hypervisor. It can be seen that the Xen Domain 0 management virtual machine has been completely removed in the new virtualization architecture, which greatly frees up Host resources.

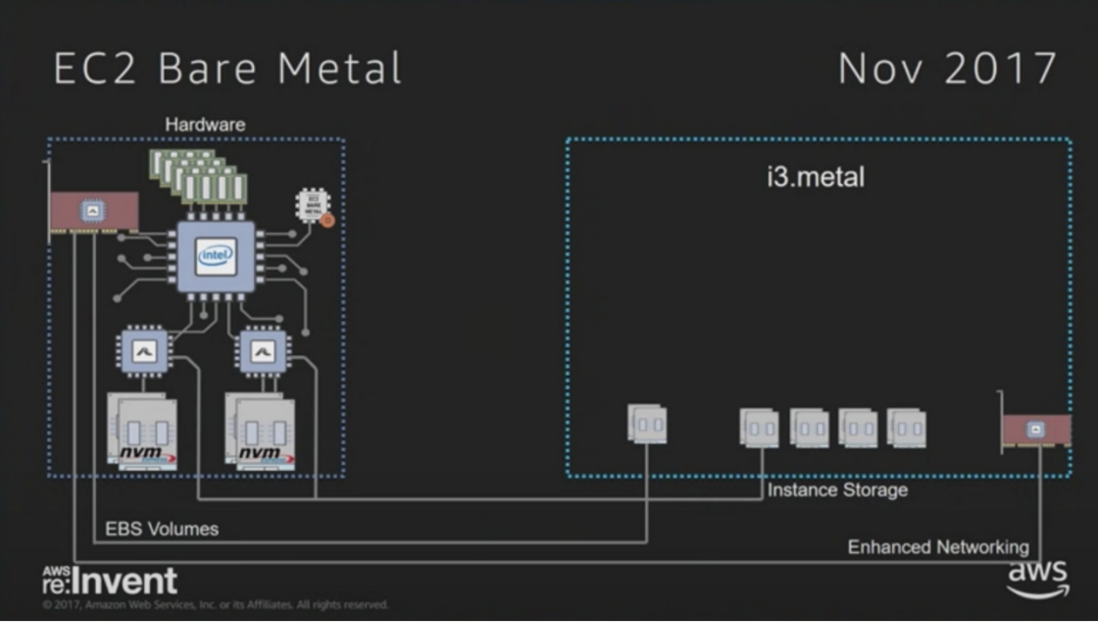

It is also worth noting that although cloud services such as IaaS and PaaS have matured in recent years, in some specific scenarios (such as complete, exclusive isolation at the physical level) users still ask for elastic bare metal cloud services.

Different from traditional data center computer room leasing, elastic bare metal cloud services require the same agile provisioning, pay-as-you-go, and cost-effective features as conventional cloud services. Therefore, the technical difficulty in delivering elastic bare metal cloud services is: how to efficiently manage the resource pooling of bare metal server clusters without installing any intrusive cloud platform infrastructure management components on bare metal servers?

At present, offloading the cloud platform's infrastructure management components to dedicated hardware devices is the only reasonably feasible solution. Users of elastic bare metal services can continue to use basic cloud services such as EBS, ELB, and VPC. When a bare metal instance is delivered, its network and network-based shared storage are set up automatically, and cloud host features such as migrating bare metal instances are supported.

Therefore, also in 2017, Amazon Cloud Technology released i3.metal, the first elastic bare metal instance type based on the Nitro System. i3.metal instances have virtually no host performance overhead, and users can run whatever they want directly on the server, such as Xen, KVM, ESXi, containers, Firecracker microVMs, and more.
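The three generations discussed so far (Xen, Nitro Hypervisor, bare metal) can be told apart programmatically. The sketch below groups instance types using the `Hypervisor` and `BareMetal` fields that, to my understanding, the EC2 `DescribeInstanceTypes` API returns for each entry; treat the field names as an assumption to verify against the API reference. The `SAMPLE` list is hand-written for illustration and is not real API output.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def virtualization_kind(info: dict) -> str:
    """Classify one instance-type record as 'bare-metal', 'nitro', 'xen' or 'unknown'."""
    if info.get("BareMetal"):
        return "bare-metal"
    return info.get("Hypervisor", "unknown")


def group_by_kind(instance_types: Iterable[dict]) -> Dict[str, List[str]]:
    """Group instance-type names by how they are virtualized."""
    groups = defaultdict(list)
    for info in instance_types:
        groups[virtualization_kind(info)].append(info["InstanceType"])
    return {kind: sorted(names) for kind, names in groups.items()}


# Hand-written records shaped like the API response entries (assumed shape).
SAMPLE = [
    {"InstanceType": "m3.medium", "Hypervisor": "xen", "BareMetal": False},
    {"InstanceType": "c5.large", "Hypervisor": "nitro", "BareMetal": False},
    {"InstanceType": "i3.metal", "BareMetal": True},
]

print(group_by_kind(SAMPLE))
```

With credentials configured, the same function could be fed the `InstanceTypes` entries returned by boto3's `describe_instance_types` call instead of the sample list.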

In summary, by introducing the Nitro Hypervisor and offloading more I/O peripherals to Nitro I/O accelerator cards, the Nitro System rebuilt the infrastructure layer of the Amazon Cloud Platform. The main and most intuitive benefit of offloading storage, network, and security functions to dedicated hardware is that EC2 instances can give the guest OS almost all of the host's CPU and memory. Performance also improves greatly because redundant kernel I/O paths are bypassed, and the delivery of elastic bare metal services becomes possible.

As Jeff Barr, chief technical evangelist at Amazon Cloud Technology, wrote in a blog post: "EC2 instances with the Nitro Hypervisor are only about 1% worse than bare metal servers, and the difference is hard to detect."

As can be seen from the test results in the figure below, the C5 instance using the Nitro Hypervisor has only a small additional overhead compared to the i3.metal bare metal instance, and the performance is very stable, which can fully meet the SLA requirements of the application. The virtualization performance provided by the Nitro Hypervisor is very close to raw devices.

Nitro Cards

Nitro Cards come in the following types. They are still essentially PCIe peripheral cards, attached to Nitro instances using various passthrough technologies. As part of the Amazon Cloud Technology cloud platform infrastructure, Nitro Cards are transparent to users.

- Nitro Controller Card (Management)

- Nitro VPC Card (Network)

- Nitro EBS Card (remote storage)

- Nitro Instance Storage Card (local storage)

- Nitro Security Chip Card (Security)

In this way, the combination of the Nitro Hypervisor and Nitro Cards can "assemble" all the components a virtual machine needs. More of the server's hardware resources go to production workloads, and customers get more instance types to choose from, without the host having to reserve computing resources for the cloud platform infrastructure (management, monitoring, security, and network or storage I/O).

Nitro Controller Card

As a system, the Nitro System has a logically centralized Nitro Controller in the central control role. The Nitro Controller Card coordinates the accelerator cards and loads the corresponding device drivers and control-plane controllers for each type of Nitro EC2 instance. For example:

- Provide ENA Controller through Nitro VPC Card;

- Provide NVMe Controller through Nitro EBS Card;

- Provide Hardware Root Of Trust through Nitro Security Chip to support instance monitoring, metering and authentication;

- Provide data security protection capabilities and more through the Nitro Enclave.

Nitro VPC Card

Network-enhanced EC2 instances were the first entry point chosen by the Amazon Nitro System technical team, and C3, released in 2013, first adopted SR-IOV Passthrough technology.

SR-IOV (Single-Root I/O Virtualization) is a PCIe device virtualization standard published by PCI-SIG, a virtualization solution based on physical hardware. SR-IOV allows a PCIe device to be shared between virtual machines: through SR-IOV passthrough, a VF or PF can be attached directly to a virtual machine, bypassing the hypervisor, which effectively improves the performance and scalability of physical I/O devices. Because SR-IOV is implemented in hardware, the virtual machine gets I/O performance comparable to that of the host.

As shown in the figure below, an SR-IOV NIC is added to the host, and the EC2 instance's VPC virtual interface is no longer provided by Xen Domain 0 but is connected directly to the SR-IOV PF/VF. With an Intel 82599 NIC, you only need to install the ixgbevf driver in the EC2 instance to get up to 10 Gbps of network throughput.

However, SR-IOV has its limitations, and there are three main problems: 1) limited scalability, since it only supports a certain number of VFs; 2) limited flexibility, since it does not support live migration; 3) limited computing power, since it cannot provide more advanced network virtualization capabilities.
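The first limitation, a fixed number of VFs per device, is visible on any Linux host with an SR-IOV capable NIC: the kernel exposes `sriov_totalvfs` (the hardware maximum) and `sriov_numvfs` (currently enabled) under the device's sysfs directory. The sketch below reads both. The helper name `sriov_vf_counts` and the `sysfs_root` parameter are introduced here for illustration and to make the function testable against a fake directory tree.

```python
from pathlib import Path
from typing import Optional, Tuple


def sriov_vf_counts(iface: str, sysfs_root: str = "/sys/class/net") -> Optional[Tuple[int, int]]:
    """Return (enabled_vfs, max_vfs) for a network interface, or None if it has no SR-IOV attributes."""
    device_dir = Path(sysfs_root) / iface / "device"
    try:
        total = int((device_dir / "sriov_totalvfs").read_text().strip())
        enabled = int((device_dir / "sriov_numvfs").read_text().strip())
    except (OSError, ValueError):
        # Interface missing, not SR-IOV capable, or attribute unreadable.
        return None
    return enabled, total


if __name__ == "__main__":
    # "eth0" is only an example interface name.
    counts = sriov_vf_counts("eth0")
    if counts is None:
        print("eth0: no SR-IOV information available")
    else:
        print(f"eth0: {counts[0]} of {counts[1]} virtual functions enabled")
```

On the physical function, `max_vfs` is the hard ceiling on how many guests can share the card through passthrough, which is the scalability limit the text refers to.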

Therefore, building on the concept of SR-IOV, the Nitro System team released ENA (Elastic Network Adapter) in 2016 and applied it to the X1 instance type, with a maximum throughput of 25 Gbps. ENA is part of the Nitro System project. The ENA-based Nitro VPC Card was originally backed by an ASIC accelerator card developed by Annapurna Labs, an Israeli company acquired by Amazon.

Now the latest Nitro VPC Card fully offloads the VPC data plane. Through the ENA driver, Nitro instances transfer data directly to the Nitro VPC Card, bypassing the host kernel as with SR-IOV passthrough, which greatly improves I/O efficiency. At the same time, the ASIC on the Nitro VPC Card implements functions such as multi-tenant network isolation, packet encapsulation/decapsulation, Security Groups, QoS, and routing.

In addition, the Nitro VPC Card implements richer network acceleration features. For example, EFA (Elastic Fabric Adapter) provides user-space networking, which customers can use through the OpenFabrics Alliance Libfabric SDK/API from libraries such as MPI (Message Passing Interface) or NCCL (NVIDIA Collective Communication Library).

Using EFA and Libfabric, user-space applications can perform RDMA/RoCE communication while bypassing the Linux kernel. Combined with the NVMe-oF capability provided by the Nitro EBS Card, this achieves higher performance with lower CPU usage.

Network technology has also moved from the 1 Gbps era in 2006, to the 10 Gbps era in 2012, to the 25 Gbps era in 2016, and on to today's 100 Gbps era. If widely used network virtualization technologies (e.g. VxLAN / GRE overlays) cannot be offloaded to dedicated hardware to free up host CPU cores, those precious cores will be able to handle nothing but network traffic.
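To show what kind of per-packet work overlay networking involves, here is a minimal sketch of VXLAN encapsulation and decapsulation following the 8-byte header layout in RFC 7348 (flags, reserved bits, 24-bit VNI). It is a generic illustration of overlay encapsulation only; the wire format used inside Amazon VPC is not described in this article, and the outer UDP/IP headers are deliberately left out.

```python
import struct
from typing import Tuple

VXLAN_FLAG_VNI_VALID = 0x08      # the "I" flag: VNI field is valid
_HEADER = struct.Struct("!II")   # two big-endian 32-bit words, 8 bytes total


def vxlan_encap(vni: int, inner_frame: bytes) -> bytes:
    """Prefix an inner Ethernet frame with a VXLAN header carrying the given VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    word0 = VXLAN_FLAG_VNI_VALID << 24   # flags byte followed by 24 reserved bits
    word1 = vni << 8                     # 24-bit VNI followed by 8 reserved bits
    return _HEADER.pack(word0, word1) + inner_frame


def vxlan_decap(packet: bytes) -> Tuple[int, bytes]:
    """Split a VXLAN payload into (vni, inner_frame), validating the header."""
    if len(packet) < _HEADER.size:
        raise ValueError("packet shorter than VXLAN header")
    word0, word1 = _HEADER.unpack_from(packet)
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return word1 >> 8, packet[_HEADER.size:]


frame = b"\x00" * 14 + b"payload"        # placeholder inner frame
packet = vxlan_encap(0x123456, frame)
vni, inner = vxlan_decap(packet)
print(hex(vni), inner == frame)
```

Doing this for every packet at 100 Gbps on host CPU cores is exactly the cost that offloading to a dedicated card is meant to remove.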

As introduced by Amazon Cloud Technology: "The new C5n EC2 instance is able to achieve 100Gbps network throughput because of the adoption of the fourth-generation Nitro VPC Card."

Nitro EBS Card

Storage-enhanced EC2 was the second attempt by the Amazon Nitro System team, introducing EBS Volumes based on NVMe SSDs with the C4 instance type introduced in 2015.

AI technology has entered the fast lane. Deep learning relies on massive sample data and powerful computing power, and it is also driving the development of HPC (High Performance Computing). Efficient AI training requires very high network throughput and high-speed storage to process the large amounts of data transmitted between computing nodes and storage nodes.

Typically, even in a lightly loaded environment with link bandwidth utilization below 10%, burst traffic can push the network packet loss rate close to 1%, and a 1% packet loss rate can cost an AI computing system nearly 50% of its computing power. In distributed AI training, retransmissions caused by network jitter and transmission delay further reduce network throughput, greatly reducing the efficiency of model training or even causing it to fail.

Therefore, we can see that in recent years, high-performance computing power centers have been continuously introducing new technologies such as RDMA/RoCE, NVMe/NVMe-oF, and NVMe over RoCE.

In terms of storage, compared with traditional HDD (Hard Disk Drive) media, SSD (Solid-State Drive) semiconductor media bring a nearly 100-fold improvement in storage performance. SSDs based on the NVMe (Non-Volatile Memory Express) standard can significantly reduce latency while improving performance.

So after the NVMe SSD standard was released in 2015, the Nitro System team immediately set its sights in this direction. Today, a single NVMe SSD can offer more than ten TB of capacity and 1 million IOPS, with microsecond-level latency.

Although Xen's traditional front-end/back-end I/O architecture was retained at the time for compatibility with NVMe (until the Nitro Hypervisor launched in 2017), the team still followed the idea of offloading to dedicated hardware and added a storage network accelerator card, also developed by Annapurna Labs, which presents remote storage to EC2 instances using the NVMe protocol.

The benefits of this improvement are the further release of the Host CPU core and the practice of advanced NVMe SSD technology.

Now, the latest Nitro EBS Card fully offloads the EBS data plane. EC2 instances communicate with it through standard NVMe drivers, and data is transmitted using the NVMe-oF protocol, with support for EBS volume encryption/decryption, QoS, storage I/O acceleration, and more. In addition, the Nitro EBS Card isolates EC2 instances from storage I/O resource consumption at the hardware layer, preventing performance interference between tenants.

Nitro Instance Storage Card

The i3 storage-optimized EC2 instance, released in 2017, specifically addresses the hardware acceleration requirements for local storage of EC2 instances. The i3 instance type combines SR-IOV technology and Local NVMe SSD drivers based on past technology accumulation to realize Instance Storage Data Plane Offloading.

The performance benefits of local NVMe disks are self-evident, with significant improvements in IOPS and latency. Combined with SR-IOV, NVMe SSD can be directly used by multiple Local EC2 instances. Virtual machines only need to install the corresponding NVMe drivers to access these SSD disks, which can then provide more than 3 million IOPS performance. The Nitro Instance Storage Card can also monitor the wear of the Local SSD.
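Because both EBS volumes and local instance storage appear to a Nitro instance as standard NVMe controllers, a guest can tell them apart by the controller's model string in sysfs. The sketch below walks `/sys/class/nvme` and classifies each controller. The two model strings are what I understand AWS to report on Nitro instances and should be treated as an assumption; the helper name and the `sysfs_root` parameter are hypothetical.

```python
from pathlib import Path
from typing import Dict

# Model strings assumed from AWS documentation for Nitro-based instances.
EBS_MODEL = "Amazon Elastic Block Store"
INSTANCE_STORE_MODEL = "Amazon EC2 NVMe Instance Storage"


def classify_nvme_controllers(sysfs_root: str = "/sys/class/nvme") -> Dict[str, str]:
    """Map each NVMe controller name (e.g. 'nvme0') to 'ebs', 'instance-store' or 'other'."""
    result: Dict[str, str] = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return result  # no NVMe controllers visible on this machine
    for ctrl in sorted(root.iterdir()):
        try:
            model = (ctrl / "model").read_text().strip()
        except OSError:
            continue  # skip entries without a readable model attribute
        if model == EBS_MODEL:
            result[ctrl.name] = "ebs"
        elif model == INSTANCE_STORE_MODEL:
            result[ctrl.name] = "instance-store"
        else:
            result[ctrl.name] = "other"
    return result


if __name__ == "__main__":
    for name, kind in classify_nvme_controllers().items():
        print(f"{name}: {kind}")
```

On a machine without NVMe devices the function simply returns an empty mapping.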

Nitro Security Chip Card

Unlike the Nitro Cards mentioned above, the Nitro Security Chip Card integrates the Nitro Security Chip, which is mainly used to continuously monitor and protect the server's hardware resources and to independently verify the firmware each time the host OS starts, ensuring that it has not been modified or altered in any unauthorized way. The functions provided by the Nitro Security Chip Card are therefore not visible to the EC2 instance.

In production, the firmware of the various devices is critical to the safe operation of the entire system, so it must be managed correctly. On the one hand, the Nitro Security Chip tracks the I/O operations the host performs on the various firmware, and each firmware checksum (device measurement) is checked against the verification value stored in the Security Chip. On the other hand, it can also manage updates to this firmware, which is difficult to achieve on common standard servers.
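The checksum comparison described above can be sketched as follows: compute a digest for each firmware image and compare it against a stored list of trusted values. This is a simplified software illustration of the idea only, not the Nitro Security Chip's actual mechanism; SHA-256 is my choice for the sketch, and the device names and image bytes are hypothetical.

```python
import hashlib
import hmac
from typing import Dict, List


def firmware_digest(image: bytes) -> str:
    """Return the SHA-256 hex digest used here as the firmware 'measurement'."""
    return hashlib.sha256(image).hexdigest()


def verify_firmware(images: Dict[str, bytes], trusted: Dict[str, str]) -> List[str]:
    """Return the names of firmware images that are unknown or do not match their trusted digest."""
    failed = []
    for name, image in images.items():
        expected = trusted.get(name)
        # compare_digest avoids leaking how many leading characters matched.
        if expected is None or not hmac.compare_digest(firmware_digest(image), expected):
            failed.append(name)
    return sorted(failed)


# Hypothetical firmware images and the trusted values recorded for them.
released = {"bmc": b"bmc-fw-1.0", "nic": b"nic-fw-2.3"}
trusted_digests = {name: firmware_digest(blob) for name, blob in released.items()}

on_disk = {"bmc": b"bmc-fw-1.0", "nic": b"nic-fw-2.3-modified", "ssd": b"ssd-fw-0.9"}
print("failed verification:", verify_firmware(on_disk, trusted_digests))
```

Here the modified NIC image and the unrecognized SSD image are both rejected, while the unchanged BMC image passes.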

Conclusion

Over the past ten years of cloud computing, we have seen more and more enterprise CIOs account for costs more rationally and scientifically when adopting cloud services. Delivering cost-effective cloud products has become, more than ever before, a competitive barrier that must be built.

What is admirable is that Amazon Cloud Technology has always adhered to a cost-effective strategy. According to current statistics, it has cut prices more than 70 times. Looking back at the evolution of the Nitro System, Amazon Cloud Technology's approach of improving cost performance through revolutionary innovation in hardware virtualization is fundamentally different from a market-driven price war. It is a more straightforward cost reduction and efficiency gain brought about by a technological revolution, and it is undoubtedly the most solid brick in its competitive barrier.

Finally, let me introduce the learning platforms that Amazon Cloud Technology has created for cloud computing developers. Interested readers can find a lot of high-quality, first-hand official tutorial content there:

- Getting Started Resource Center: From 0 to 1, it is easy to get started with cloud services, covering: cost management, start-up training, and development resources. https://aws.amazon.com/cn/getting-started/?nc1=h_ls&trk=32540c74-46f0-46dc-940d-621a1efeedd0&sc_channel=el

- Architecture Center: The Amazon Cloud Technology Architecture Center provides cloud platform reference architecture diagrams, vetted architecture solutions, Well-Architected best practices, patterns, icons, and more. https://aws.amazon.com/cn/architecture/?intClick=dev-center-2021_main&trk=3fa608de-d954-4355-a20a-324daa58bbeb&sc_channel=el

- Builder's Library: Learn how Amazon Cloud Technologies builds and operates software. https://aws.amazon.com/en/builders-library/?cards-body.sort-by=item.additionalFields.sortDate&cards-body.sort-order=desc&awsf.filter-content-category=all&awsf.filter-content _ -type= all&awsf.filter-content-level=*all&trk=835e6894-d909-4691-aee1-3831428c04bd&sc_channel=el

- Toolkit for developing and managing applications on the Amazon Cloud Platform: https://aws.amazon.com/cn/tools/?intClick=dev-center-2021_main&trk=972c69e1-55ec-43af-a503-d458708bb645&sc_channel=el

【 Exclusive benefits 】

Benefit 1: Free packages for more than 100 products. Among them, the computing resource Amazon EC2 is free for 12 months for the first year, 750 hours/month; the storage resource Amazon S3 is free for the first year for 12 months, with a standard storage capacity of 5GB.

https://aws.amazon.com/cn/free/?nc2=h_ql_pr_ft&all-free-tier.sort-by=item.additionalFields.SortRank&all-free-tier.sort-order=asc&awsf.Free%20Tier%20Types=all&awsf.Free%20Tier%20Categories=all&trk=e0213267-9c8c-4534-bf9b-ecb1c06e4ac6&sc_channel=el

Benefit 2: The latest discount package, 200$ data and analysis coupons, 200$ machine learning coupons, 200$ microservices and application development coupons. https://www.amazonaws.cn/campaign/?sc_channel=el&sc_campaign=credit-acts-ldr&sc_country=cn&sc_geo=chna&sc_category=mult&sc_outcome=field&trkCampaign=request-credit-glb-ldr&trk=f45email&trk=02faebcb-3f61-4bcb-b68e-c63f3ae33c =el

Benefit 3: Solution CloudFormation one-click deployment template library

https://aws.amazon.com/cn/quickstart/?solutions-all.sort-by=item.additionalFields.sortDate&solutions-all.sort-order=desc&awsf.filter-tech-category=all&awsf.filter-industry=all&awsf.filter-content-type=all&trk=afdbbdf0-610b-4421-ac0c-a6b31f902e4b&sc_channel=el