author:

Shimian|Microservice Engine MSE R&D Engineer

Yang Shao|Microservice Engine MSE R&D Engineer

This article is excerpted from the "Microservice Governance Technology White Paper", a 379-page book that took more than half a year to prepare. We hope it helps, in some small way, to solve the problems of microservice governance under cloud native architectures. The electronic version can be downloaded for free at:

https://developer.aliyun.com/ebook/7565


Service Publishing under Monolithic Architecture

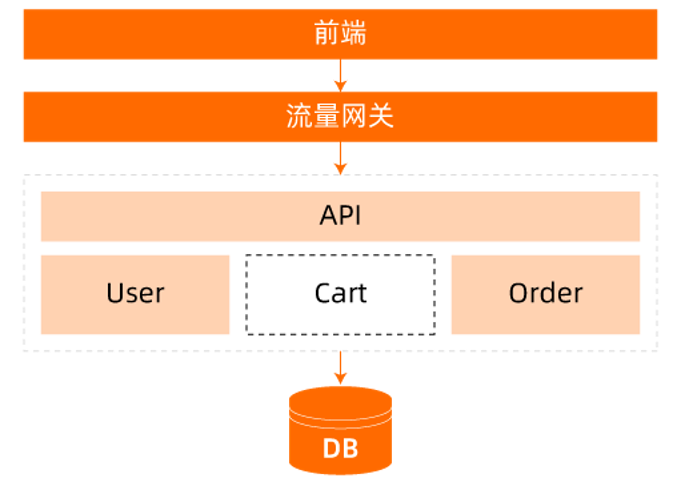

First, let's take a look at how to release a new version of a service module in an application in a monolithic architecture. As shown in the figure below, the Cart service module in the application has a new version iteration:

Since the Cart service is a part of the application, the entire application needs to be compiled, packaged, and deployed when the new version goes online. Service-level release issues become application-level release issues, and we need to implement effective release strategies for new versions of applications, not services.

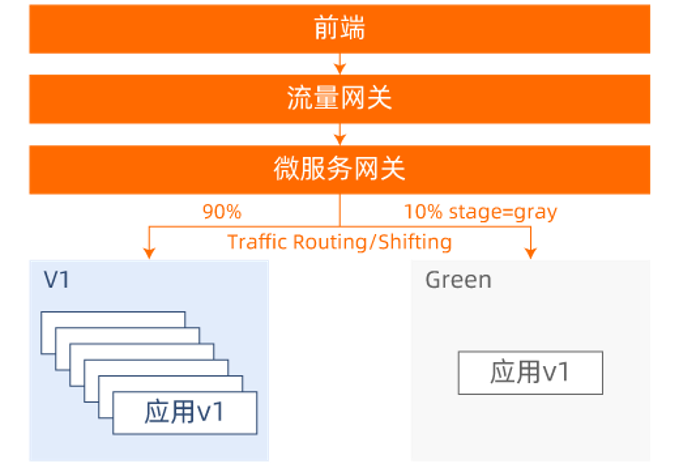

The industry already has mature service release strategies, such as blue-green release and grayscale release. Blue-green release requires a redundant deployment of the new version, generally with the same machine specifications and instance count as the old version. The service therefore has two identical deployment environments, but at this point only the old version serves external traffic while the new version stands by as a hot backup. To upgrade, we simply switch all traffic to the new version, and the old version becomes the hot backup. The schematic diagram of our example using blue-green release is as follows; the traffic switch can be performed by a traffic gateway based on a Layer 4 proxy.

Because blue-green release switches traffic as a whole, a full environment must be cloned for the new version at the same scale as the original service, which requires twice the original machine resources. Grayscale release instead forwards a small portion of online traffic to the new version, selected either by request content or by a proportion of request traffic; once grayscale verification passes, the share of traffic sent to the new version is increased gradually. The schematic diagram of our example using grayscale release is as follows. Content-based or proportion-based traffic control requires a microservice gateway acting as a Layer 7 proxy.

Here, Traffic Routing is the content-based grayscale method: for example, requests carrying the header stag=gray are routed to the v2 version of the application. Traffic Shifting is the proportion-based grayscale method: online traffic is split by weight without regard to request content. Compared with blue-green release, grayscale release is better in machine resource cost and traffic control capability, but its release cycle is longer and it places higher demands on operation and maintenance infrastructure.
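The two grayscale methods can be sketched in a few lines of Python. This is only an illustration of the idea, not the gateway's actual implementation: the function names and the version labels "v1" and "v2" are assumptions, and the header name stag comes from the example above.

```python
import random


def route_by_content(headers, header_name="stag", gray_value="gray"):
    """Traffic Routing: choose a version from the request content."""
    # Requests carrying stag=gray go to the new version, all others to the old one.
    return "v2" if headers.get(header_name) == gray_value else "v1"


def route_by_weight(weights, rng=random):
    """Traffic Shifting: choose a version at random, in proportion to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


if __name__ == "__main__":
    print(route_by_content({"stag": "gray"}))
    # Send roughly 5% of requests to v2, regardless of their content.
    print(route_by_weight({"v1": 95, "v2": 5}))
```

Raising the weight of v2 step by step corresponds to the gradual increase of traffic after grayscale verification passes.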

Service Publishing under Microservice Architecture

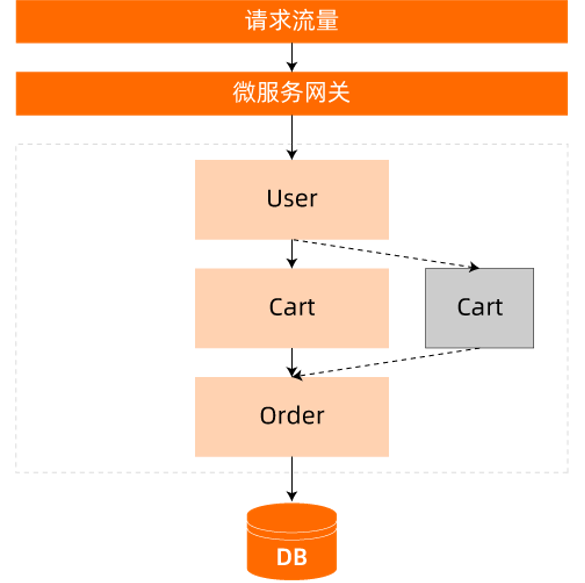

In a distributed microservice architecture, the subservices that are split out of an application are deployed, run, and iterated independently. When a new version of a single service goes online, we no longer need to release the entire application, but only need to pay attention to the release process of each microservice, as follows:

To verify the new version of the Cart service, traffic can be selectively routed to the grayscale version of Cart somewhere on the call link. This is a traffic governance problem within the field of microservice governance. Common governance strategies include provider-based and consumer-based approaches.

- Provider-based governance strategy. Configure Cart's traffic inflow rules. When User routes to Cart, Cart's traffic inflow rules are used.

- Consumer-based governance strategy. Configure User's traffic outflow rules. User's traffic outflow rules are used when User is routed to Cart.

These governance strategies can be combined with the blue-green and grayscale release schemes described above to implement true service-level releases.
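The difference between the two strategies is mainly where the rule is attached. A minimal sketch, assuming hypothetical rule tables keyed by service name (the table layout and function names are illustrative, not any product's rule format):

```python
def pick_version(rule, headers, default="base"):
    """Return the version of the first header match in a rule, else the default."""
    for (name, value), version in rule.items():
        if headers.get(name) == value:
            return version
    return default


# Provider-based: the rule is attached to the callee (Cart's inflow rules).
inflow_rules = {"Cart": {("stag", "gray"): "gray"}}

# Consumer-based: the rule is attached to the caller (User's outflow rules),
# with one entry per service the caller invokes.
outflow_rules = {"User": {"Cart": {("stag", "gray"): "gray"}}}


def provider_based(callee, headers):
    return pick_version(inflow_rules.get(callee, {}), headers)


def consumer_based(caller, callee, headers):
    return pick_version(outflow_rules.get(caller, {}).get(callee, {}), headers)
```

With provider-based rules every caller of Cart is covered by one rule, while consumer-based rules apply only to the callers that configure them.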

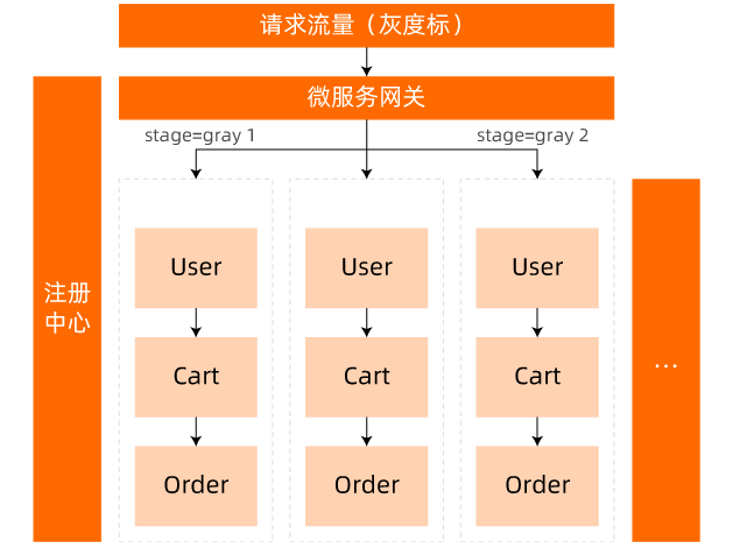

What is full link grayscale

Continue with the scenario of releasing the Cart service in the microservice system above. Suppose the Order service also needs to release a new version at the same time. Because the new feature involves changes to both Cart and Order, the two must be verified together in grayscale, so grayscale traffic has to pass through the grayscale versions of both Cart and Order. As shown below:

Following the two governance strategies from the previous section, we would need to additionally configure governance rules for the Order service to ensure that traffic coming from the grayscale Cart is forwarded to the grayscale version of Order. This seems reasonable, but in real business scenarios the number and scale of microservices far exceed our example. A single request link may pass through dozens of microservices, and a new feature may change several of them at once. Intricate dependencies between services, frequent releases, and parallel development of multiple service versions cause traffic governance rules to proliferate, which hurts the maintainability and stability of the whole system.

To address these problems, developers have proposed an end-to-end grayscale release scheme, full-link grayscale, based on actual business scenarios and production experience. The full-link grayscale governance strategy focuses on the entire call chain and does not care which microservices the link passes through. The traffic control perspective shifts from the service to the request link, so only a small number of governance rules are needed to build multiple traffic isolation environments from the gateway through all back-end services. This ensures the smooth and safe release of multiple closely related services and supports parallel development of multiple service versions, which in turn speeds up business development.

Full link grayscale solution

How to quickly implement full-link grayscale in actual business scenarios? At present, there are two main solutions, isolation based on physical environment and isolation based on logical environment.

Physical environment isolation

Physical environment isolation, as the name implies, builds real traffic isolation by adding machines.

This solution builds a network-isolated environment with independent resources for the grayscale services and deploys their grayscale versions in it. Because this environment is isolated from the production environment, other services in production cannot reach the services under grayscale, so those online services must also be redundantly deployed in the grayscale environment for the call link to forward traffic normally. Dependent middleware components such as the registry must be redundantly deployed there as well, so that microservices can discover one another and the node IP addresses they obtain belong only to the current network environment.

This solution is generally used to build enterprise test and pre-release environments. It is not flexible enough for online grayscale release and traffic diversion. Moreover, multiple coexisting versions of a microservice are commonplace in a microservice architecture, and supporting them this way means maintaining several grayscale environments by stacking machines. With many applications, the operation and maintenance and machine costs become too high and far exceed the benefits. With only two or three applications, this approach remains convenient and acceptable.

Logical environment isolation

The other solution is to build logical environment isolation. We only need to deploy the grayscale versions of the services. As traffic flows along the call link, the gateway, middleware, and microservices it passes through identify grayscale traffic and dynamically forward it to the grayscale version of the corresponding service. As shown below:

The figure above shows the effect of this scheme. Different colors represent grayscale traffic for different versions. Both the microservice gateway and the microservices themselves need to identify the traffic and make dynamic decisions according to the governance rules. When a service version changes, forwarding on the call link changes in real time. Compared with a grayscale environment built by adding machines, this solution saves substantial machine cost and O&M effort, and lets developers apply fine-grained, full-link control to online traffic quickly and in real time.

So how is the full-link grayscale implemented? Through the above discussion, we need to solve the following problems:

1. Each component and service on the link can be dynamically routed according to the request traffic characteristics.

2. All nodes under the service need to be grouped to be able to distinguish versions.

3. It is necessary to carry out grayscale identification and version identification for the traffic.

4. Different versions of grayscale traffic need to be identified.

Next, the techniques needed to solve the above problems will be introduced.

Label routing

Label routing groups all nodes under the service according to different label names and label values, so that service consumers who subscribe to the service node information can access a certain group of the service on demand, that is, a subset of all nodes. The service consumer can use any label information on the service provider node. According to the actual meaning of the selected label, the consumer can apply label routing to more business scenarios.
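As a rough sketch of label routing, the consumer below filters the node list it has subscribed to by label name and value. The Node type, the addresses, and the label names version and zone are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    address: str
    labels: dict = field(default_factory=dict)


def select_nodes(nodes, **wanted):
    """Return the subset of nodes whose labels match every requested name/value pair."""
    return [
        node for node in nodes
        if all(node.labels.get(name) == value for name, value in wanted.items())
    ]


# Hypothetical node list a consumer received from service discovery.
cart_nodes = [
    Node("10.0.0.1:8080", {"version": "base", "zone": "a"}),
    Node("10.0.0.2:8080", {"version": "gray", "zone": "a"}),
    Node("10.0.0.3:8080", {"version": "base", "zone": "b"}),
]

gray_group = select_nodes(cart_nodes, version="gray")
zone_a_base = select_nodes(cart_nodes, version="base", zone="a")
```

Because any label can be used as the selector, the same function covers version-based grouping as well as other groupings such as zone.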

Node marking

So how to add different labels to service nodes? Driven by today's hot cloud-native technologies, most businesses are actively embarking on a containerization transformation journey. Here, I will take containerized applications as an example to introduce how to mark service workload nodes in two scenarios: using Kubernetes Service as service discovery and using the popular Nacos registry.

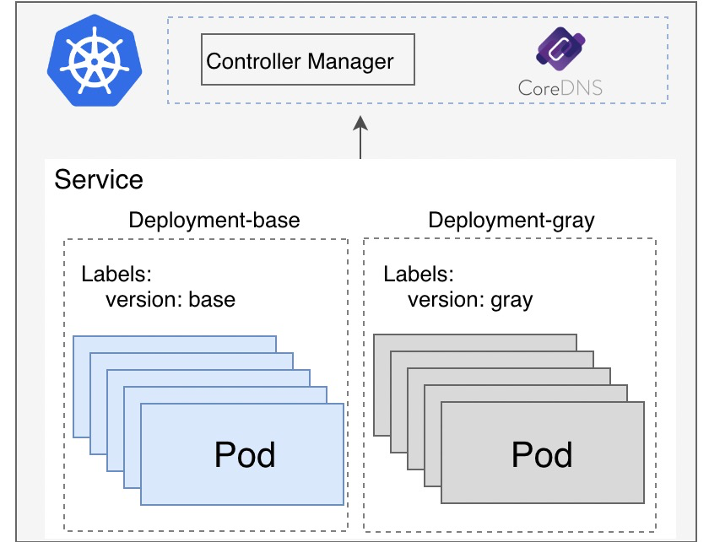

In a business system that uses Kubernetes Service for service discovery, the service provider exposes itself by submitting a Service resource to the API server. The service consumer watches the Endpoints resource associated with that Service, obtains the associated business Pods from it, and reads their Labels as node metadata. Therefore, we only need to add labels to the Pod template in the Deployment that describes the business application.
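To show where the label goes, here is a Deployment manifest written as a Python dictionary, with a helper that reads the labels a Pod created from it would carry. The names cart-gray and example/cart:v2 and the label key version are assumptions for illustration; only the field path spec.template.metadata.labels is standard Kubernetes.

```python
# A Deployment manifest expressed as a Python dict (equivalent to the YAML form).
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "cart-gray"},  # hypothetical name
    "spec": {
        "replicas": 1,
        "selector": {"matchLabels": {"app": "cart", "version": "gray"}},
        "template": {
            # Labels set here are copied onto every Pod of this Deployment,
            # which is where a consumer reads them as node metadata.
            "metadata": {"labels": {"app": "cart", "version": "gray"}},
            "spec": {
                "containers": [{"name": "cart", "image": "example/cart:v2"}]
            },
        },
    },
}


def pod_labels(manifest):
    """Return the labels that Pods created from this Deployment will carry."""
    return dict(manifest["spec"]["template"]["metadata"]["labels"])
```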

In a business system that uses Nacos for service discovery, the marking method generally depends on the microservice framework in use. If a Java application uses the Spring Cloud microservice framework, we can add the corresponding environment variable to the business container. For example, to add a grayscale version label to a node, add spring.cloud.nacos.discovery.metadata.version=gray to the business container, and the framework will attach the label version=gray to the node when it registers with Nacos.
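The mapping from that property to node metadata can be pictured as follows. This is a conceptual sketch only, not the framework's code: the function name is hypothetical, and it simply strips the property prefix to obtain the label name.

```python
METADATA_PREFIX = "spring.cloud.nacos.discovery.metadata."


def metadata_from_properties(properties):
    """Collect every property under the metadata prefix as a node label."""
    return {
        key[len(METADATA_PREFIX):]: value
        for key, value in properties.items()
        if key.startswith(METADATA_PREFIX)
    }


# Hypothetical configuration of a grayscale Cart instance.
props = {
    "spring.application.name": "cart",
    "spring.cloud.nacos.discovery.metadata.version": "gray",
}
node_metadata = metadata_from_properties(props)
```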

Traffic coloring

How does each component on the request link identify different grayscale traffic? The answer is traffic coloring: adding a grayscale mark to request traffic so that it can be distinguished. We can color traffic at the source of the request, where the front end marks it according to user or platform information when initiating the request. If the front end cannot do this, the microservice gateway can dynamically add a traffic identifier to requests that match specific routing rules. In addition, when traffic without a grayscale mark passes through a grayscale node on the link, it needs to be colored automatically so that it preferentially accesses grayscale versions of services for the rest of the link.
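A minimal sketch of the two coloring points, under stated assumptions: the header name stag is borrowed from the earlier example, the gateway rule is a simple set of hypothetical gray user IDs, and "base" stands for the non-grayscale version.

```python
GRAY_HEADER = "stag"  # header name borrowed from the earlier example


def color_at_gateway(headers, user_id, gray_users):
    """Gateway coloring: mark requests that match a rule (here, a user allow-list)."""
    colored = dict(headers)
    if GRAY_HEADER not in colored and user_id in gray_users:
        colored[GRAY_HEADER] = "gray"
    return colored


def color_at_node(headers, node_version, base_version="base"):
    """Automatic coloring: an unmarked request passing through a grayscale node
    takes on that node's version as its mark."""
    colored = dict(headers)
    if GRAY_HEADER not in colored and node_version != base_version:
        colored[GRAY_HEADER] = node_version
    return colored
```

Both functions leave an existing mark untouched, so a request colored at the source keeps its original mark along the link.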

Distributed Link Tracing

Another important question is how to ensure that the grayscale mark is carried along the entire link. If the request is colored at its source, the gateway, acting as a proxy, forwards it unchanged to the entry service, unless the developer has configured a policy in the gateway's routing rules that modifies the request content. The entry service then calls the next microservice, and business code constructs a new request for that call. How do we add the grayscale mark to this new request so that it continues down the link?

In the move from a monolithic architecture to a distributed microservice architecture, calls between services changed from method calls within the same thread to calls from a local process to services in remote processes. Remote services may be deployed as multiple replicas, so the nodes a request passes through are unpredictable, and every hop may fail because of a network or service fault. Distributed tracing records request call links in large-scale distributed systems in detail. Its core idea is to record the nodes a request passes through and the time spent at each, using a globally unique traceid and per-hop spanids; the traceid must be transmitted along the entire link.

Borrowing the idea of distributed tracing, we can also pass custom information such as the grayscale mark. Common distributed tracing products in the industry support transmitting user-defined data along the link. The data processing flow is shown in the following figure:

Logical environment isolation

First, dynamic routing must be supported. For the Spring Cloud and Dubbo frameworks, a custom filter can be implemented for outbound traffic, and traffic identification and label routing can be done in that filter. Distributed tracing is also needed to carry the traffic identifier along the link and to color traffic automatically. In addition, a centralized traffic management platform should be introduced so that developers in each business line can define their own full-link grayscale rules. As shown below:
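The decision made inside such an outbound filter might look like the sketch below. It is written in Python for brevity rather than as a real Spring Cloud or Dubbo filter, and the fallback to the base version when no matching grayscale node exists is an assumption about the desired behavior, as are the header name and the node layout.

```python
def choose_targets(nodes, headers, header_name="stag", base_version="base"):
    """Pick candidate nodes for an outbound call.

    Grayscale requests prefer nodes whose version label equals the mark.
    If the target service has no such nodes, or the request carries no mark,
    the call goes to the base version (assumed fallback behavior).
    """
    tag = headers.get(header_name)
    if tag:
        tagged = [n for n in nodes if n["labels"].get("version") == tag]
        if tagged:
            return tagged
    return [
        n for n in nodes
        if n["labels"].get("version", base_version) == base_version
    ]


# Hypothetical node lists: Cart has a grayscale version, Inventory does not.
cart = [
    {"address": "10.0.0.1", "labels": {"version": "base"}},
    {"address": "10.0.0.2", "labels": {"version": "gray"}},
]
inventory = [{"address": "10.0.1.1", "labels": {}}]

gray_cart = choose_targets(cart, {"stag": "gray"})
gray_inventory = choose_targets(inventory, {"stag": "gray"})
```

With the fallback, only the services that actually changed need a grayscale deployment; every other service on the link is served by its base version.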

In general, building full-link grayscale capability yourself is relatively expensive and technically complex, and later maintenance and extension are costly, but it does improve application stability during releases in a more fine-grained way.

