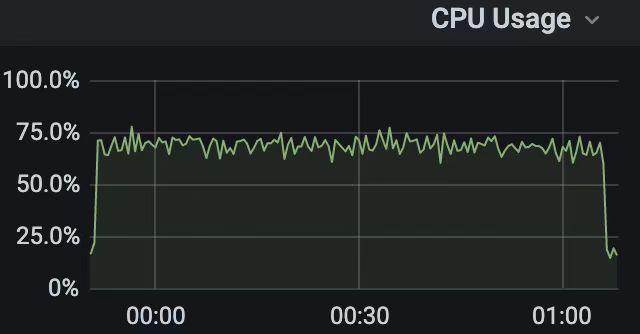

In its early days, TiFlash had a thorny problem: for complex small queries, no matter how much concurrency we added, TiFlash's CPU usage stayed far from saturated, as shown below:

After spending some time getting to know TiFlash and the problem itself, I concluded that the investigation should focus on "common components" (global locks, the underlying storage, upper-layer services, and so on). After a "carpet-style" sweep of this area, we finally located an important cause of the problem: under high concurrency, threads are created and released so frequently that they queue up and block during creation/release.

TiFlash's working model relies on starting a large number of temporary threads to do local computation and other work, so when many thread creations/releases queue up and block, the application's computation is blocked as well. The higher the concurrency, the worse the problem, which is why CPU usage stops rising with concurrency.

For reasons of space, this article does not retell the full investigation. Instead, let's first construct a simple experiment that reproduces the problem:

Reproducing and verifying with an experiment

Definitions

First, define three working modes: wait, work, and workOnNewThread.

wait: in a while loop, wait on a condition_variable.

work: in a while loop, call memcpy 20 times per iteration (each memcpy copies 1,000,000 bytes).

workOnNewThread: in a while loop, create a new thread on each iteration, call memcpy 20 times in that thread, join to wait for it to finish, and repeat.

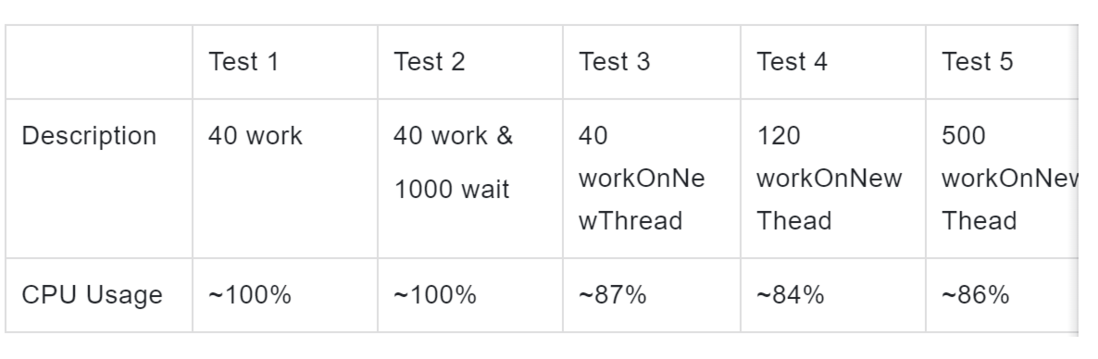

Next, run experiments with different combinations of these working modes.

The experiments

Experiment 1: 40 work threads

Experiment 2: 1000 wait threads, 40 work threads

Experiment 3: 40 workOnNewThread threads

Experiment 4: 120 workOnNewThread threads

Experiment 5: 500 workOnNewThread threads
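The three working modes and the experiment mixes above can be sketched in C++ roughly as follows. This is a minimal sketch under our own assumptions, not the original test program: the article's loops run forever, whereas here each loop runs a fixed number of `rounds` so the program terminates, and the helper names (`copyRound`, `runExperiment`, `Result`) are hypothetical. Process CPU time is read with `std::clock()`, which on Linux/glibc covers all threads of the process.

```cpp
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <cstring>
#include <ctime>
#include <mutex>
#include <thread>
#include <vector>

constexpr std::size_t kCopyBytes = 1000000;  // bytes copied by each memcpy
constexpr int kCopiesPerRound = 20;          // memcpy calls per round

// Source and destination buffers for one copying thread.
struct Buffers {
    std::vector<char> src = std::vector<char>(kCopyBytes, 'x');
    std::vector<char> dst = std::vector<char>(kCopyBytes);
};

// One round of computation: 20 memcpy calls of 1,000,000 bytes each.
void copyRound(Buffers& b) {
    for (int i = 0; i < kCopiesPerRound; ++i)
        std::memcpy(b.dst.data(), b.src.data(), kCopyBytes);
}

// work: copy on the calling thread itself.
void work(int rounds) {
    Buffers b;
    for (int r = 0; r < rounds; ++r) copyRound(b);
}

// workOnNewThread: every round creates a thread, copies there, and joins it.
void workOnNewThread(int rounds) {
    Buffers b;  // safe to share: the creator is blocked in join() meanwhile
    for (int r = 0; r < rounds; ++r) {
        std::thread t([&b] { copyRound(b); });
        t.join();
    }
}

struct Result {
    double wallSec;  // elapsed wall-clock time
    double cpuSec;   // CPU time of the whole process (all threads)
};

// Starts the requested mix of threads, waits for the working ones,
// then wakes and joins the wait threads.
Result runExperiment(int waitThreads, int workThreads, int newThreadWorkers, int rounds) {
    std::mutex m;
    std::condition_variable cv;
    bool stop = false;  // guarded by m

    auto wallStart = std::chrono::steady_clock::now();
    std::clock_t cpuStart = std::clock();

    std::vector<std::thread> waiters, workers;
    for (int i = 0; i < waitThreads; ++i)
        waiters.emplace_back([&] {
            // wait: sleeps on the condition_variable and uses no CPU.
            std::unique_lock<std::mutex> lock(m);
            cv.wait(lock, [&] { return stop; });
        });
    for (int i = 0; i < workThreads; ++i) workers.emplace_back(work, rounds);
    for (int i = 0; i < newThreadWorkers; ++i) workers.emplace_back(workOnNewThread, rounds);
    for (auto& t : workers) t.join();

    {
        std::lock_guard<std::mutex> guard(m);
        stop = true;
    }
    cv.notify_all();
    for (auto& t : waiters) t.join();

    double cpuSec = static_cast<double>(std::clock() - cpuStart) / CLOCKS_PER_SEC;
    double wallSec =
        std::chrono::duration<double>(std::chrono::steady_clock::now() - wallStart).count();
    return {wallSec, cpuSec};
}
```

For instance, `runExperiment(0, 40, 0, rounds)` corresponds to Experiment 1, `runExperiment(1000, 40, 0, rounds)` to Experiment 2, and `runExperiment(0, 0, 500, rounds)` to Experiment 5. Dividing `cpuSec` by `wallSec` times the number of logical cores gives an approximate CPU utilization.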

Experimental results

The CPU usage of each experiment is as follows:

Result analysis

Experiments 1 and 2 show that even though Experiment 2 has 1000 more wait threads than Experiment 1, the CPU still reaches full utilization: a large number of wait threads does not keep the CPU from being saturated. The reason is that threads in the wait state do not take part in scheduling, as discussed later. In addition, Linux uses the CFS scheduler, whose scheduling operations cost O(log n), so in theory even a large number of schedulable threads does not add significant scheduling pressure.

Experiments 3, 4, and 5 show that when a large number of threads work by frequently creating and releasing threads, the CPU cannot be saturated.

Next, let's analyze why frequent thread creation and release causes queuing and blocking, and why the cost is so high.

What happens to thread creation and release under high concurrency?

Blocking seen in GDB

Inspecting the program with GDB while threads are frequently created and released shows that creation and release are blocked on the lock in __lll_lock_wait_private, as shown in the figures:

```text
#0 __lll_lock_wait_private () at ../nptl/sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:95
#1 0x00007fbc55f60d80 in _L_lock_3443 () from /lib64/libpthread.so.0
#2 0x00007fbc55f60200 in get_cached_stack (memp=<synthetic pointer>, sizep=<synthetic pointer>)
    at allocatestack.c:175
#3 allocate_stack (stack=<synthetic pointer>, pdp=<synthetic pointer>,
    attr=0x7fbc56173400 <__default_pthread_attr>) at allocatestack.c:474
#4 __pthread_create_2_1 (newthread=0x7fb8f6c234a8, attr=0x0,
    start_routine=0x88835a0 <std::execute_native_thread_routine(void*)>, arg=0x7fbb8bd10cc0)
    at pthread_create.c:447
#5 0x0000000008883865 in __gthread_create (__args=<optimized out>,
    __func=0x88835a0 <std::execute_native_thread_routine(void*)>,
    __threadid=__threadid@entry=0x7fb8f6c234a8)
    at /root/XXX/gcc-7.3.0/x86_64-pc-linux-gnu/libstdc++-v3/include/x86_64-pc-linux-gnu/b...
#6 std::thread::_M_start_thread (this=this@entry=0x7fb8f6c234a8, state=...)
    at ../../../.././libstdc++-v3/src/c++11/thread.cc:163
```

<center>Figure 1: Stack when thread creation is blocked</center>

```text
#0 __lll_lock_wait_private () at ../nptl/sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:95
#1 0x00007fbc55f60e59 in _L_lock_4600 () from /lib64/libpthread.so.0
#2 0x00007fbc55f6089f in allocate_stack (stack=<synthetic pointer>, pdp=<synthetic pointer>,
    attr=0x7fbc56173400 <__default_pthread_attr>) at allocatestack.c:552
#3 __pthread_create_2_1 (newthread=0x7fb5f1a5e8b0, attr=0x0,
    start_routine=0x88835a0 <std::execute_native_thread_routine(void*)>, arg=0x7fbb8bcd6500)
    at pthread_create.c:447
#4 0x0000000008883865 in __gthread_create (__args=<optimized out>,
    __func=0x88835a0 <std::execute_native_thread_routine(void*)>,
    __threadid=__threadid@entry=0x7fb5f1a5e8b0)
    at /root/XXX/gcc-7.3.0/x86_64-pc-linux-gnu/libstdc++-v3/include/...
#5 std::thread::_M_start_thread (this=this@entry=0x7fb5f1a5e8b0, state=...)
    at ../../../.././libstdc++-v3/src/c++11/thread.cc:163
```

<center>Figure 2: Stack when thread creation is blocked</center>

```text
#0 __lll_lock_wait_private () at ../nptl/sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:95
#1 0x00007fbc55f60b71 in _L_lock_244 () from /lib64/libpthread.so.0
#2 0x00007fbc55f5ef3c in __deallocate_stack (pd=0x7fbc56173320 <stack_cache_lock>, pd@entry=0x7fb378912700) at allocatestack.c:704
#3 0x00007fbc55f60109 in __free_tcb (pd=pd@entry=0x7fb378912700) at pthread_create.c:223
#4 0x00007fbc55f61053 in pthread_join (threadid=140408798652160, thread_return=0x0) at pthread_join.c:111
#5 0x0000000008883803 in __gthread_join (__value_ptr=0x0, __threadid=<optimized out>)
    at /root/XXX/gcc-7.3.0/x86_64-pc-linux-gnu/libstdc++-v3/include/x86_64-pc-linux-gnu/bits/gthr-default.h:668
#6 std::thread::join (this=this@entry=0x7fbbc2005668) at ../../../.././libstdc++-v3/src/c++11/thread.cc:136
```

<center>Figure 3: Stack when thread release is blocked</center>

As the stacks show, thread creation calls allocate_stack (and, inside it, get_cached_stack), while thread release calls __deallocate_stack. All of them are blocked by contention on the same lock, visible as __lll_lock_wait_private.

To explain this, we need to understand how threads are created and released.

How thread creation and release work

The threads we use every day are pthreads implemented by NPTL (Native POSIX Thread Library), which is part of glibc. By analyzing the relevant glibc source code, we can understand how pthreads are created and released.

Thread creation allocates a stack for the thread, and thread destruction releases it. Along the way, glibc maintains two linked lists: stack_used, which holds the stacks currently in use by threads, and stack_cache, which holds reusable stacks reclaimed from threads that have exited. When a thread needs a stack, glibc does not allocate a new one directly; it first tries to take one from stack_cache.

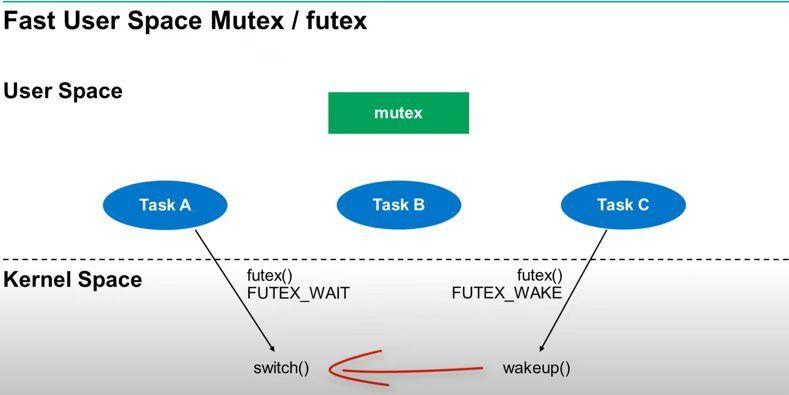

__lll_lock_wait_private is the private form of __lll_lock_wait, which is in fact a futex-based mutex. Here, "private" means that the lock is used only within the process, not across processes.

The contention occurs when threads call allocate_stack (on thread creation) and __deallocate_stack (on thread release), because both operate on these two linked lists.
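To make this bookkeeping concrete, here is a toy C++ model of the two lists and the single lock that guards them. It is our own simplification, not glibc code: stacks are reduced to a size and an id, mmap/munmap are replaced by counters, the cache lookup takes the first fit rather than glibc's smallest fit, and `StackCacheModel` and its methods are hypothetical names. What it keeps is the point of this section: every allocation and every release, from any thread, goes through the same mutex.

```cpp
#include <cstddef>
#include <list>
#include <mutex>

// One cached or in-use stack, reduced to what the model needs.
struct Stack {
    std::size_t size;
    int id;
};

// Toy model of NPTL's stack_used / stack_cache lists (not glibc code).
class StackCacheModel {
public:
    explicit StackCacheModel(std::size_t maxCacheBytes) : maxCacheBytes_(maxCacheBytes) {}

    // Like allocate_stack: reuse a cached stack if one fits, else "mmap".
    int allocate(std::size_t size) {
        {
            std::lock_guard<std::mutex> g(lock_);  // the single global lock
            for (auto it = cache_.begin(); it != cache_.end(); ++it) {
                if (it->size >= size) {  // first fit (glibc picks the smallest fit)
                    Stack s = *it;
                    cache_.erase(it);
                    cacheBytes_ -= s.size;
                    used_.push_front(s);  // move to stack_used
                    return s.id;
                }
            }
        }
        // Cache miss: glibc calls mmap without the lock; the model only counts
        // it, then links the new stack into stack_used under the lock.
        std::lock_guard<std::mutex> g(lock_);
        ++mmaps_;
        Stack s{size, nextId_++};
        used_.push_front(s);
        return s.id;
    }

    // Like __deallocate_stack + queue_stack + __free_stacks, all under the lock.
    void release(int id) {
        std::lock_guard<std::mutex> g(lock_);
        for (auto it = used_.begin(); it != used_.end(); ++it) {
            if (it->id == id) {
                cache_.push_front(*it);  // move to stack_cache
                cacheBytes_ += it->size;
                used_.erase(it);
                break;
            }
        }
        // Evict from the tail (oldest entries) while over the limit; this is
        // where glibc calls munmap, still holding the lock.
        while (cacheBytes_ > maxCacheBytes_ && !cache_.empty()) {
            cacheBytes_ -= cache_.back().size;
            cache_.pop_back();
            ++munmaps_;
        }
    }

    std::size_t mmaps() const { std::lock_guard<std::mutex> g(lock_); return mmaps_; }
    std::size_t munmaps() const { std::lock_guard<std::mutex> g(lock_); return munmaps_; }
    std::size_t cachedStacks() const { std::lock_guard<std::mutex> g(lock_); return cache_.size(); }

private:
    mutable std::mutex lock_;
    std::list<Stack> used_, cache_;
    std::size_t cacheBytes_ = 0;
    std::size_t maxCacheBytes_;
    std::size_t mmaps_ = 0, munmaps_ = 0;
    int nextId_ = 0;
};
```

In this model, with a 40 MiB limit and 8 MiB stacks, allocating six stacks and then releasing all of them leaves five in the cache and evicts one; in glibc that eviction is a munmap performed while the lock is held.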

allocate_stack process:

```c
/* Returns a usable stack for a new thread either by allocating a
   new stack or reusing a cached stack of sufficient size.
   ATTR must be non-NULL and point to a valid pthread_attr.
   PDP must be non-NULL.  */
static int
allocate_stack (const struct pthread_attr *attr, struct pthread **pdp,
                ALLOCATE_STACK_PARMS)
{
  ... // do something

  /* Get memory for the stack.  */
  if (__glibc_unlikely (attr->flags & ATTR_FLAG_STACKADDR))
    {
      ... // do something
    }
  else
    {
      // main branch
      /* Allocate some anonymous memory.  If possible use the cache.  */
      ... // do something

      /* Try to get a stack from the cache.  */
      reqsize = size;
      pd = get_cached_stack (&size, &mem);

      /*
        If get_cached_stack() succeeds, the cached stack is used
        for the rest of the work. Otherwise, mmap() is called to allocate a stack.
      */
      if (pd == NULL) // if pd == NULL, get_cached_stack() failed
        {
          ... // do something
          mem = mmap (NULL, size, prot,
                      MAP_PRIVATE | MAP_ANONYMOUS | MAP_STACK, -1, 0);
          ... // do something

          /* Prepare to modify global data.  */
          lll_lock (stack_cache_lock, LLL_PRIVATE); // global lock

          /* And add to the list of stacks in use.  */
          stack_list_add (&pd->list, &stack_used);

          lll_unlock (stack_cache_lock, LLL_PRIVATE);
          ... // do something
        }
      ... // do something
    }
  ... // do something
  return 0;
}

/* Get a stack frame from the cache.  We have to match by size since
   some blocks might be too small or far too large.  */
static struct pthread *
get_cached_stack (size_t *sizep, void **memp)
{
  size_t size = *sizep;
  struct pthread *result = NULL;
  list_t *entry;

  lll_lock (stack_cache_lock, LLL_PRIVATE); // global lock

  /* Search the cache for a matching entry.  We search for the
     smallest stack which has at least the required size.  Note that
     in normal situations the size of all allocated stacks is the
     same.  As the very least there are only a few different sizes.
     Therefore this loop will exit early most of the time with an
     exact match.  */
  list_for_each (entry, &stack_cache)
    {
      ... // do something
    }
  ... // do something

  /* Dequeue the entry.  */
  stack_list_del (&result->list);

  /* And add to the list of stacks in use.  */
  stack_list_add (&result->list, &stack_used);

  /* And decrease the cache size.  */
  stack_cache_actsize -= result->stackblock_size;

  /* Release the lock early.  */
  lll_unlock (stack_cache_lock, LLL_PRIVATE);

  ... // do something
  return result;
}
```

<center>Figure 4: allocate_stack code analysis</center>

Putting the stacks and the source code together, we can see that pthread_create first calls allocate_stack to allocate the thread's stack. The flow is shown in Figure 4. First, allocate_stack checks whether the user supplied stack space; if so, that space is used directly. This is rare: by default the user does not provide a stack and the system allocates one itself. In that case get_cached_stack is called first, to try to reuse a stack from the list of already-allocated stacks. If that fails, the mmap syscall is called to allocate a new stack, after which allocate_stack acquires the global lock (via lll_lock) and adds the stack to the stack_used list. get_cached_stack acquires the same global lock internally: it scans the stack_cache list and, when it finds a suitable stack, removes it from stack_cache and adds it to stack_used.

deallocate_stack process:

```c
void
internal_function
__deallocate_stack (struct pthread *pd)
{
  lll_lock (stack_cache_lock, LLL_PRIVATE); // global lock

  /* Remove the thread from the list of threads with user defined
     stacks.  */
  stack_list_del (&pd->list);

  /* Not much to do.  Just free the mmap()ed memory.  Note that we do
     not reset the 'used' flag in the 'tid' field.  This is done by
     the kernel.  If no thread has been created yet this field is
     still zero.  */
  if (__glibc_likely (! pd->user_stack))
    (void) queue_stack (pd);
  else
    /* Free the memory associated with the ELF TLS.  */
    _dl_deallocate_tls (TLS_TPADJ (pd), false);

  lll_unlock (stack_cache_lock, LLL_PRIVATE);
}

/* Add a stack frame which is not used anymore to the stack.  Must be
   called with the cache lock held.  */
static inline void
__attribute ((always_inline))
queue_stack (struct pthread *stack)
{
  /* We unconditionally add the stack to the list.  The memory may
     still be in use but it will not be reused until the kernel marks
     the stack as not used anymore.  */
  stack_list_add (&stack->list, &stack_cache);

  stack_cache_actsize += stack->stackblock_size;
  if (__glibc_unlikely (stack_cache_actsize > stack_cache_maxsize))
    // if stack_cache is full, release some stacks
    __free_stacks (stack_cache_maxsize);
}

/* Free stacks until cache size is lower than LIMIT.  */
void
__free_stacks (size_t limit)
{
  /* We reduce the size of the cache.  Remove the last entries until
     the size is below the limit.  */
  list_t *entry;
  list_t *prev;

  /* Search from the end of the list.  */
  list_for_each_prev_safe (entry, prev, &stack_cache)
    {
      struct pthread *curr;

      curr = list_entry (entry, struct pthread, list);
      if (FREE_P (curr))
        {
          ... // do something

          /* Remove this block.  This should never fail.  If it does
             something is really wrong.  */
          if (munmap (curr->stackblock, curr->stackblock_size) != 0)
            abort ();

          /* Maybe we have freed enough.  */
          if (stack_cache_actsize <= limit)
            break;
        }
    }
}
```

<center>Figure 5: deallocate_stack code analysis</center>

```c
// file path: nptl/allocatestack.c

/* Maximum size in kB of cache.  */
static size_t stack_cache_maxsize = 40 * 1024 * 1024; /* 40MiBi by default.  */
static size_t stack_cache_actsize;
```

<center>Figure 6: Default value of stack_cache_maxsize of stack_cache list capacity</center>

Putting the stacks and the source code together, we can see that when a thread ends, __free_tcb is called to release the thread's TCB (Thread Control Block, the thread's metadata), and then __deallocate_stack is called to reclaim the stack. The main bottleneck in this process is __deallocate_stack. It acquires the same global lock (via lll_lock) as allocate_stack and removes the stack from the stack_used list. It then checks whether the stack was allocated by the system rather than supplied by the user; if so, it adds the stack to the stack_cache list. After that, it checks whether the total size of stack_cache exceeds stack_cache_maxsize; if it does, __free_stacks releases stacks until the total is no longer above stack_cache_maxsize. Notably, __free_stacks calls the munmap syscall to release the memory. As for the threshold stack_cache_maxsize, Figure 6 shows that its default value in the source is 40 * 1024 * 1024. Going by the comment in the code, the unit appears to be kB. However, actual measurement showed that this comment is wrong: stack_cache_maxsize is measured in bytes, so the default is 40 MiB. The default thread stack size is generally 8 to 10 MB, which means that by default glibc can cache only 4 to 5 thread stacks for the user.
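The capacity arithmetic can be checked with a small helper. `cachedStackSlots` and `stackSoftLimitBytes` are hypothetical names; the second one reads `RLIMIT_STACK` with `getrlimit`, on the assumption (which matches our understanding of NPTL) that glibc derives its default thread stack size from that soft limit when it is finite.

```cpp
#include <cstddef>
#include <sys/resource.h>

// How many stacks of `stackBytes` fit in a cache of `cacheMaxBytes` before
// __free_stacks has to start calling munmap.
std::size_t cachedStackSlots(std::size_t cacheMaxBytes, std::size_t stackBytes) {
    return stackBytes == 0 ? 0 : cacheMaxBytes / stackBytes;
}

// Soft RLIMIT_STACK in bytes, or 0 if it is unlimited or cannot be read.
// Assumption: glibc uses this limit as the default pthread stack size when it
// is finite, so it approximates the size of each cached stack.
std::size_t stackSoftLimitBytes() {
    struct rlimit rl;
    if (getrlimit(RLIMIT_STACK, &rl) != 0 || rl.rlim_cur == RLIM_INFINITY) return 0;
    return static_cast<std::size_t>(rl.rlim_cur);
}
```

With 8 MiB stacks the ratio is 5, and with 10 MiB stacks it is 4. In practice each cached stack block may be slightly larger than the raw stack size (for example because of a guard page), which can lower the count by one.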

So during creation and release, threads compete for the same global mutex (via lll_lock), and when many threads are created/released concurrently, they queue up and block. Since only one thread can be in this section at a time, if one creation/release costs $c$, the average latency of $n$ concurrent creations/releases is roughly

$$\text{avg\_rt} = \frac{(1+2+\cdots+n)\,c}{n} = \frac{n(n+1)}{2}\cdot\frac{c}{n} = \frac{(n+1)\,c}{2}$$

That is, the average latency of creation/release grows linearly with concurrency. Monitoring thread creation in TiFlash showed that with 40 nested queries (max_threads = 4; note: max_threads is TiFlash's concurrency parameter), around 3,500 threads were being created/released, and the average thread creation latency reached 30 ms! This is an alarming latency: thread creation/release is not as "lightweight" as one might imagine. When a single operation is already this slow, the impact is even more serious in a scenario like TiFlash, where thread creation is nested.
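The average-latency formula can be sanity-checked with an idealized simulation: n threads arrive at the same moment, the critical section costs c, and they are served strictly one after another. `averageSerializedLatency` is a hypothetical name, and the model deliberately ignores wake-up overhead and the user/kernel mode switches discussed below.

```cpp
// Idealized model: n threads hit the lock at the same moment and each holds it
// for cost c. They are served one at a time, so the k-th one finishes at k*c.
double averageSerializedLatency(int n, double c) {
    if (n <= 0) return 0.0;
    double total = 0.0;
    for (int k = 1; k <= n; ++k) total += k * c;  // waited (k-1)*c, then ran for c
    return total / n;                              // equals (n + 1) * c / 2
}
```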

At this point you know that thread creation and release try to acquire a global mutex and queue up on it, but you may still wonder what lll_lock actually is.

lll_lock and Futex

<center>Figure 7: futex</center>

lll stands for low-level lock, a mutex built on futex. Futex, short for fast userspace mutex, is a non-vDSO system call; on newer versions of Linux, mutexes are also implemented on top of futex. The design of futex assumes that in most cases there is no lock contention, so the lock operation can be completed entirely in user mode. When contention does occur, lll_lock enters kernel mode through the non-vDSO system call sys_futex(FUTEX_WAIT) and waits to be woken up. After the thread that won the lock finishes its work, it wakes up val waiting threads (val is usually 1) through lll_unlock, which performs the wake-up via the non-vDSO system call sys_futex(FUTEX_WAKE).
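The fast-path/slow-path idea can be illustrated with a classic three-state futex mutex (0 = unlocked, 1 = locked, 2 = locked with possible waiters), following the design described in Ulrich Drepper's "Futexes Are Tricky". This is a Linux-only sketch, not glibc's lll_lock code; `FutexLock` and `contendedCount` are hypothetical names. The `_PRIVATE` futex operations correspond to process-private locks such as the LLL_PRIVATE ones in the glibc code above.

```cpp
#include <atomic>
#include <linux/futex.h>
#include <sys/syscall.h>
#include <thread>
#include <unistd.h>
#include <vector>

class FutexLock {
public:
    void lock() {
        int c = 0;
        // Fast path: 0 -> 1 with a single CAS, no system call.
        if (state_.compare_exchange_strong(c, 1, std::memory_order_acquire)) return;
        // Slow path: advertise contention (state 2) and sleep in the kernel.
        if (c != 2) c = state_.exchange(2, std::memory_order_acquire);
        while (c != 0) {
            futex(FUTEX_WAIT_PRIVATE, 2);  // sleeps only while state_ is still 2
            c = state_.exchange(2, std::memory_order_acquire);
        }
    }

    void unlock() {
        // Only pay for a FUTEX_WAKE system call when someone may be waiting.
        if (state_.exchange(0, std::memory_order_release) == 2) futex(FUTEX_WAKE_PRIVATE, 1);
    }

private:
    long futex(int op, int val) {
        // Assumes std::atomic<int> has the same layout as int (true on Linux ABIs).
        return syscall(SYS_futex, reinterpret_cast<int*>(&state_), op, val, nullptr, nullptr, 0);
    }

    std::atomic<int> state_{0};  // 0 unlocked, 1 locked, 2 locked + waiters
};

// Demo: several threads increment one counter under FutexLock.
long contendedCount(int threads, int itersPerThread) {
    FutexLock lk;
    long counter = 0;  // protected by lk
    std::vector<std::thread> ts;
    for (int t = 0; t < threads; ++t)
        ts.emplace_back([&] {
            for (int i = 0; i < itersPerThread; ++i) {
                lk.lock();
                ++counter;
                lk.unlock();
            }
        });
    for (auto& th : ts) th.join();
    return counter;
}
```

Without contention, lock() and unlock() are a single atomic instruction each; only contended acquisitions and releases with waiters enter the kernel.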

From these principles of lll_lock and futex, we can see that without contention the operation is relatively lightweight and does not enter kernel mode. Under contention, however, threads not only queue up and block but also switch between user mode and kernel mode, which makes thread creation/release even less efficient. The switch between kernel mode and user mode is slow mainly because it goes through non-vDSO system calls. Let's look at the cost of system calls.

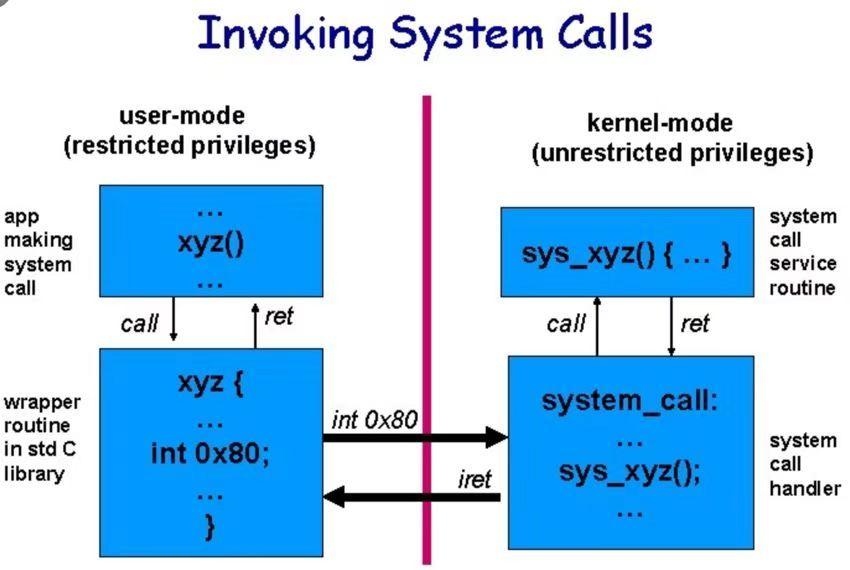

System call cost

On modern Linux systems, part of the syscall set is usually exposed to processes through the vDSO. Calling these syscalls via the vDSO is very efficient because no switch between user mode and kernel mode is involved. Non-vDSO syscalls are not so lucky, and unfortunately futex is one of them.

<center>Figure 8: How system calls work</center>

Traditionally, a syscall is made through the int 0x80 interrupt. The CPU hands control to the OS, which checks the arguments passed in, uses the system call number in a register (for example SYS_gettimeofday) to look up the system call table, and executes the corresponding service function (for example gettimeofday). An interrupt forces the CPU to save its execution state so that the state can be restored afterwards. But the kernel does much more than handle the interrupt itself. Linux is divided into user space and kernel space. Kernel space has the highest privilege level and can operate the hardware directly. To prevent malicious behavior, user programs cannot access kernel space directly; work that cannot be done in user mode has to be done indirectly through a syscall. To accomplish this, the kernel must switch between the user and kernel memory segments. This switch requires a "big change" of the CPU register contents, because the previous register state must be saved and later restored. In addition, the kernel performs extra permission checks on the data passed in from user space, so the impact on performance is relatively large. Modern CPUs provide the syscall instruction, and newer versions of Linux use it instead of the int 0x80 interrupt, but the cost is still high.
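One rough way to feel this difference on your own machine is to time a call that is normally served by the vDSO against one that must take the real syscall path. This is an illustrative micro-benchmark under stated assumptions: on x86-64 Linux `clock_gettime(CLOCK_MONOTONIC, ...)` is usually handled by the vDSO (it can fall back to a real syscall, for example with some clock sources in VMs), while `syscall(SYS_getpid)` always enters the kernel. `nsPerCall`, `SyscallCost`, and `measureSyscallCost` are hypothetical names.

```cpp
#include <chrono>
#include <sys/syscall.h>
#include <time.h>
#include <unistd.h>

// Average nanoseconds per invocation of f over `iters` calls.
template <typename F>
double nsPerCall(F f, int iters) {
    if (iters <= 0) return 0.0;
    auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < iters; ++i) f();
    auto end = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::nano>(end - start).count() / iters;
}

struct SyscallCost {
    double vdsoNs;     // clock_gettime: usually served by the vDSO
    double syscallNs;  // syscall(SYS_getpid): always enters the kernel
};

SyscallCost measureSyscallCost(int iters) {
    timespec ts{};
    double vdso = nsPerCall([&ts] { clock_gettime(CLOCK_MONOTONIC, &ts); }, iters);
    double sys = nsPerCall([] { syscall(SYS_getpid); }, iters);
    return {vdso, sys};
}
```

On typical hardware one would expect `syscallNs` to be noticeably larger than `vdsoNs`, but absolute numbers depend heavily on the CPU, kernel version, and security mitigations, so we do not quote specific figures.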

Mutex is also built on futex, so why are thread creation and destruction slow?

As mentioned in the introduction to futex above, mutexes are also implemented on top of futex. Since both are based on futex, why are thread creation and destruction so much slower?

By modifying the glibc and kernel source code to add tracing, we found that the time thread creation/destruction spends inside the futex critical section is contributed mainly by one syscall: munmap. As the source analysis above showed, when a thread is released and the stack_cache list is full, munmap is called to release stacks.

A single munmap takes several microseconds, sometimes tens of microseconds, accounting for more than 90% of the time of the whole process. And because munmap runs while the futex-based global lock is held, all other thread creation/destruction work is blocked in the meantime, which causes severe performance degradation.

Therefore, the deeper reason for slow thread creation/destruction is this: when a thread is destroyed and stack_cache is full, munmap must be called to release stacks, which makes the futex critical section far too long. Whenever creation and destruction compete for the same futex lock, they block.

Yet mmap, which also manipulates memory and is used to allocate it, is very fast and completes in under 1 µs. Why is the contrast between the two so large? Next, let's analyze why munmap is so slow.

munmap, TLB shootdown, and the inter-core interrupt (IPI)

First, a brief look at how munmap works: it looks up the virtual memory area (VMA) that covers the range being released. If the range falls in the middle of a VMA, that VMA has to be split. munmap then unmaps the range, releases the corresponding VMA and page tables, and invalidates the related TLB entries.

Tracing added to the kernel showed that most of the time is spent in tlb_flush_mmu, which flushes the TLB. This function is called because, after munmap releases the memory, stale TLB entries must be invalidated.

Going deeper, if the affected TLB entries exist on multiple CPU cores, tlb_flush_mmu calls smp_call_function_many to flush the TLBs on all of those cores and waits synchronously for the process to complete. A TLB flush on a single core is handled locally on that core, whereas a multi-core TLB flush requires inter-core interrupts (IPIs).

Tracing further showed that the time is mainly spent on IPI: IPI communication alone takes several to tens of microseconds, while the TLB flush itself takes less than 1 µs.

<center>Figure 9: How Inter-Core Interrupt IPI Works</center>

Figure 9 shows how IPI works. CPU cores exchange IPI messages over the system bus. When a core needs several other cores to perform IPI work, it sends an IPI request on the system bus and waits for all the other cores to finish. Each target core handles its interrupt task after receiving the request and notifies the initiator over the system bus when done. Because this goes through the system bus outside the CPU, and the initiator can only sit and wait for the other cores to finish handling the interrupt, the overhead is high (a few microseconds or more).
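The mmap/munmap asymmetry and the role of other cores can be explored with a small experiment. This is a sketch, not the trace instrumentation used in the investigation: the 8 MiB size is an assumed stack size, `timeMapUnmap` and `timeWithBusySiblings` are hypothetical helpers, and the second helper rests on the assumption that keeping sibling threads of the same process running on other cores makes the kernel send TLB-shootdown IPIs to those cores when munmap runs. A single-threaded run mostly measures page-table teardown, so comparing the two runs is more informative than either number alone.

```cpp
#include <atomic>
#include <chrono>
#include <cstddef>
#include <sys/mman.h>
#include <thread>
#include <unistd.h>
#include <vector>

struct MapTiming {
    double mmapUs;    // time spent in mmap
    double munmapUs;  // time spent in munmap
    bool ok;          // both calls succeeded
};

MapTiming timeMapUnmap(std::size_t bytes) {
    using Clock = std::chrono::steady_clock;
    auto t0 = Clock::now();
    void* p = mmap(nullptr, bytes, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS | MAP_STACK, -1, 0);
    auto t1 = Clock::now();
    if (p == MAP_FAILED) return {0.0, 0.0, false};

    // Fault every page in so munmap has real mappings (and TLB entries) to drop.
    const std::size_t page = static_cast<std::size_t>(sysconf(_SC_PAGESIZE));
    for (std::size_t off = 0; off < bytes; off += page) static_cast<volatile char*>(p)[off] = 1;

    auto t2 = Clock::now();
    int rc = munmap(p, bytes);
    auto t3 = Clock::now();
    std::chrono::duration<double, std::micro> mapTime = t1 - t0, unmapTime = t3 - t2;
    return {mapTime.count(), unmapTime.count(), rc == 0};
}

// Same measurement while `siblings` threads of this process spin on other cores.
MapTiming timeWithBusySiblings(std::size_t bytes, int siblings) {
    std::atomic<bool> stop{false};
    std::atomic<int> running{0};
    std::vector<std::thread> spinners;
    for (int i = 0; i < siblings; ++i)
        spinners.emplace_back([&] {
            running.fetch_add(1);
            while (!stop.load(std::memory_order_relaxed)) {
            }  // stay on-CPU inside this process's address space
        });
    while (running.load() < siblings) std::this_thread::yield();
    MapTiming t = timeMapUnmap(bytes);
    stop.store(true, std::memory_order_relaxed);
    for (auto& th : spinners) th.join();
    return t;
}
```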

Research by others further confirms that IPI is a very expensive operation. According to the 2018 paper "LATR: Lazy Translation Coherence",

"an IPI takes up to 6.6 µs for 120 cores with 8 sockets and 2.7 µs for 16 cores with 2 sockets."

In other words, a single IPI costs on the order of microseconds.

Context switch and CFS

Besides thread creation and release, the number of threads also deserves attention: when many threads are running, the cost of context switching and scheduling can hurt performance. Why emphasize running threads in particular? Because threads in a blocked state (waiting on a lock, in nanosleep, and so on) do not actually take part in scheduling, and no context switches happen for them. A common misconception is that a large number of threads, blocked or not, must mean high context-switch and scheduling costs. That is not entirely correct: the scheduler assigns no CPU time to a blocked thread until it is woken up. The Linux scheduler, CFS (Completely Fair Scheduler), keeps on each CPU core a red-black-tree-based queue of runnable threads, called the runqueue. Scheduling on this runqueue costs O(log n), where n is the number of runnable threads in the queue. Since CFS only schedules runnable threads, scheduling and context switches happen mainly among running threads. Having covered the cost of CFS scheduling, let's move on to context switches.
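The runqueue idea can be sketched with an ordered tree keyed by virtual runtime. This is a toy model, not kernel code: `ToyRunqueue` and `simulateFairShare` are hypothetical names, `std::multimap` stands in for the kernel's red-black tree (it is typically implemented as one), and real CFS weights vruntime by task priority, which is omitted here. Blocked tasks are simply absent from the tree, so they add nothing to the O(log n) cost of picking the next task.

```cpp
#include <cstddef>
#include <cstdint>
#include <map>
#include <vector>

class ToyRunqueue {
public:
    // A task that becomes runnable is inserted in O(log n).
    void enqueue(int taskId, std::uint64_t vruntime) { tree_.emplace(vruntime, taskId); }

    // Pick the leftmost task (smallest vruntime), charge it one time slice,
    // and re-insert it. Returns the task id, or -1 if nothing is runnable.
    int runOnce(std::uint64_t sliceNs) {
        if (tree_.empty()) return -1;
        auto it = tree_.begin();
        std::uint64_t vruntime = it->first;
        int task = it->second;
        tree_.erase(it);
        tree_.emplace(vruntime + sliceNs, task);  // equal keys go after existing ones
        return task;
    }

    std::size_t runnable() const { return tree_.size(); }

private:
    std::multimap<std::uint64_t, int> tree_;  // vruntime -> task id
};

// Runs `steps` time slices over `tasks` equally weighted runnable tasks and
// returns how many slices each task received.
std::vector<int> simulateFairShare(int tasks, int steps) {
    std::vector<int> runs(static_cast<std::size_t>(tasks > 0 ? tasks : 0), 0);
    if (tasks <= 0) return runs;
    ToyRunqueue rq;
    for (int t = 0; t < tasks; ++t) rq.enqueue(t, 0);
    for (int s = 0; s < steps; ++s) ++runs[rq.runOnce(1000000)];  // 1 ms slices
    return runs;
}
```

With equal weights the tasks take turns, so each one receives the same number of slices. A balanced tree holding 100 runnable entries is only a handful of levels deep (log2 100 is about 6.6), which is why the scheduling cost discussed below stays small.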

Context switches can happen within a process or across processes. Since we usually develop multi-threaded programs within a single process, context switch here mainly means switching between threads of the same process. Such switches are relatively cheap compared with cross-process switches because no TLB (translation lookaside buffer) flush occurs. Still, they are not free: register state and the TCB (thread control block) must be saved and restored, and part of the CPU cache becomes invalid.

In earlier versions of TiFlash, the total number of threads could exceed 5,000 under high-concurrency queries, which is indeed a frightening number. But the number of running threads was generally no more than 100. On a machine with 40 logical cores, the scheduling cost is then at most on the order of log(100) in the worst case (all runnable threads on one core's runqueue), and ideally about log(100/40) when they are spread evenly across cores; either way it is small. Likewise, context switches happen among only a few dozen running threads, which is also manageable.

I also ran an experiment comparing the time taken with 5000 running threads and with 5000 blocked threads. First, three thread types are defined. work counts from 0 to 50,000,000. yield calls sched_yield in a loop, giving up the CPU while staying runnable and doing no computation; its purpose is to increase the number of running threads without adding computational work. wait calls condition_variable.wait(lock, pred) with a predicate that always returns false. The timing results are as follows:

Because threads waiting on a lock are not running, Experiments 1 and 2 take about the same time, which shows that 5000 blocked threads do not significantly affect performance. In Experiment 3, 500 threads do nothing but context switch (that is, they are running threads that do no computation), far fewer than the wait threads in Experiment 2. Even though they do no computation at all, they have a huge impact on performance: the resulting scheduling and context-switch overhead increases the elapsed time by nearly 10x. This shows that the cost of scheduling and context switching depends mainly on the number of running (non-blocked) threads, which helps us judge more accurately when analyzing performance problems in the future.
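The three thread types can be sketched as follows. Thread counts and the counting target are parameters (the article counts to 50,000,000, but the number of work threads is not given, so it is left to the caller), `timeWork` is a hypothetical name, and the wait threads block in `condition_variable::wait` with a predicate that stays false until shutdown. Only the time taken by the work threads is reported.

```cpp
#include <atomic>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <sched.h>
#include <thread>
#include <vector>

// Returns the seconds the work threads need to finish while `yieldThreads`
// runnable-but-idle threads and `waitThreads` blocked threads also exist.
double timeWork(int workThreads, int yieldThreads, int waitThreads, long target) {
    std::mutex m;
    std::condition_variable cv;
    bool stop = false;  // guarded by m; releases the wait threads
    std::atomic<bool> stopYield{false};

    std::vector<std::thread> waiters, yielders, workers;
    for (int i = 0; i < waitThreads; ++i)
        waiters.emplace_back([&] {
            // wait: blocked, not on any runqueue.
            std::unique_lock<std::mutex> lock(m);
            cv.wait(lock, [&] { return stop; });
        });
    for (int i = 0; i < yieldThreads; ++i)
        yielders.emplace_back([&] {
            // yield: always runnable, does no computation.
            while (!stopYield.load(std::memory_order_relaxed)) sched_yield();
        });

    auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < workThreads; ++i)
        workers.emplace_back([target] {
            // work: count to `target`; volatile keeps the loop from being elided.
            volatile long counter = 0;
            for (long n = 0; n < target; ++n) counter = counter + 1;
        });
    for (auto& t : workers) t.join();
    double elapsed =
        std::chrono::duration<double>(std::chrono::steady_clock::now() - start).count();

    stopYield.store(true, std::memory_order_relaxed);
    {
        std::lock_guard<std::mutex> guard(m);
        stop = true;
    }
    cv.notify_all();
    for (auto& t : yielders) t.join();
    for (auto& t : waiters) t.join();
    return elapsed;
}
```

Assuming W work threads, the three experiments correspond roughly to `timeWork(W, 0, 0, 50000000)`, `timeWork(W, 0, 5000, 50000000)`, and `timeWork(W, 500, 0, 50000000)`.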

Beware of misleading system monitoring

While troubleshooting, we fell into quite a few monitoring pitfalls, one of which was dug by the system monitoring tool top. In top, the number of running threads was lower than expected, often hovering in the single digits, which misled our judgment of the problem. Yet host CPU usage could reach 80%. On reflection, this did not add up: if only a few to a dozen threads were working most of the time, a host with 40 logical cores could not reach such high CPU utilization. So what was going on?

```c
// Entry point
static void procs_refresh (void) {
   ...
   read_something = Thread_mode ? readeither : readproc;

   for (;;) {
      ...
      // on the way to n_alloc, the library will allocate the underlying
      // proc_t storage whenever our private_ppt[] pointer is NULL...
      // read_something() is function readeither() in Thread_mode!
      if (!(ptask = read_something(PT, private_ppt[n_used]))) break;
      procs_hlp((private_ppt[n_used] = ptask));  // tally this proc_t
   }

   closeproc(PT);
   ...
} // end: procs_refresh

// readeither() is what read_something() points to in Thread_mode.
// readeither: return a pointer to a proc_t filled with requested info about
// the next unique process or task available.  If no more are available,
// return a null pointer (boolean false).  Use the passed buffer instead
// of allocating space if it is non-NULL.
proc_t* readeither (PROCTAB *restrict const PT, proc_t *restrict x) {
   ...
next_proc:
   ...
next_task:
   // fills in our path, plus x->tid and x->tgid
   // find next thread
   if ((!(PT->taskfinder(PT,&skel_p,x,path)))          // simple_nexttid()
   || (!(ret = PT->taskreader(PT,new_p,x,path)))) {    // simple_readtask
      goto next_proc;
   }
   if (!new_p) {
      new_p = ret;
      canary = new_p->tid;
   }
   return ret;

end_procs:
   if (!saved_x) free(x);
   return NULL;
}

// PT->taskfinder is actually simple_nexttid().
// This finds tasks in /proc/*/task/ in the traditional way.
// Return non-zero on success.
static int simple_nexttid(PROCTAB *restrict const PT, const proc_t *restrict const p, proc_t *restrict const t, char *restrict const path) {
   static struct dirent *ent;        /* dirent handle */
   if(PT->taskdir_user != p->tgid){  // init
      if(PT->taskdir){
         closedir(PT->taskdir);
      }
      // use "path" as some tmp space
      // get iterator of directory /proc/[PID]/task
      snprintf(path, PROCPATHLEN, "/proc/%d/task", p->tgid);
      PT->taskdir = opendir(path);
      if(!PT->taskdir) return 0;
      PT->taskdir_user = p->tgid;
   }
   for (;;) {  // iterate entries in the current directory
      ent = readdir(PT->taskdir);  // next entry: one per thread
      if(unlikely(unlikely(!ent) || unlikely(!ent->d_name[0]))) return 0;
      if(likely(likely(*ent->d_name > '0') && likely(*ent->d_name <= '9'))) break;
   }
   ...
   return 1;
}

// How top counts thread states
switch (this->state) {
   case 'R':
      Frame_running++;
      break;
   case 't':     // 't' (tracing stop)
   case 'T':
      Frame_stopped++;
      break;
   case 'Z':
      Frame_zombied++;
      break;
   default:
      /* the following states are counted as sleeping state
         currently: 'D' (disk sleep),
                    'I' (idle),
                    'P' (parked),
                    'S' (sleeping),
                    'X' (dead - actually 'dying' & probably never seen)
      */
      Frame_sleepin++;
      break;
}
```

<center>Figure 10: top source code analysis</center>

After analyzing top's source code, I finally understood the reason. What top displays is not an instantaneous snapshot, because top does not pause the program. As Figure 10 shows, top first scans the current thread list and then reads each thread's state one by one. Meanwhile the program keeps running, so threads started after the scan are not recorded, and some of the old threads have already ended by the time they are read; threads that have ended are counted as sleeping. Therefore, when many threads are frequently created and released under high concurrency, top shows relatively few running threads.

Therefore, for programs that frequently create and release threads, the number of running threads shown by top is inaccurate.

In addition, TiFlash runs as a pipeline, and as data flows through it, a given piece of data is present in only one stage of the pipeline at a time. An operator processes data when it has some and waits when it has none (most threads seen in GDB are in this state). Most stages of the pipeline spend most of their time waiting for data, and when data does arrive, they finish quickly. Since top does not pause TiFlash while collecting, for each thread there is a high probability of catching it in the waiting state.
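The same sampling approach can be reproduced in a few lines of C++ against /proc (Linux only). This is a simplified sketch of what top does, not procps code: `sampleThreadStates` is a hypothetical name, and it counts raw state letters rather than applying top's grouping into running, stopped, zombie, and sleeping. Because each thread's stat file is read at a slightly different moment while the process keeps running, threads created after the directory scan are missed and threads that exit mid-scan are skipped.

```cpp
#include <dirent.h>
#include <fstream>
#include <map>
#include <string>

// List /proc/self/task, then read each thread's state letter one at a time,
// the way top does: the result is not an atomic snapshot.
std::map<char, int> sampleThreadStates() {
    std::map<char, int> counts;
    DIR* dir = opendir("/proc/self/task");
    if (!dir) return counts;
    while (dirent* ent = readdir(dir)) {
        if (ent->d_name[0] < '0' || ent->d_name[0] > '9') continue;  // skip "." and ".."
        std::ifstream stat(std::string("/proc/self/task/") + ent->d_name + "/stat");
        std::string line;
        if (!std::getline(stat, line)) continue;  // thread exited after readdir
        // Format: "tid (comm) S ...". comm may contain spaces, so use the last ')'.
        std::string::size_type pos = line.rfind(')');
        if (pos == std::string::npos || pos + 2 >= line.size()) continue;
        counts[line[pos + 2]]++;
    }
    closedir(dir);
    return counts;
}
```

The calling thread always sees itself in state 'R'; calling this from a process that constantly creates and destroys short-lived threads is one way to observe how few running threads each sample catches.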

Experience summary

Throughout the troubleshooting process, we accumulated some practices that remain useful for future development and troubleshooting:

- In multi-threaded development, use thread pools, coroutines, and similar techniques as much as possible to avoid frequently creating and releasing threads.

- Try to reproduce the problem in a simple environment to reduce the factors that interfere with troubleshooting.

- Control the number of running threads. When it exceeds the number of CPU cores, extra context-switch costs are incurred.

- When threads need to wait for resources, prefer locks, condition variables, and similar mechanisms. If sleep must be used, make the sleep interval as long as possible to reduce unnecessary wake-ups.

- Treat monitoring tools critically: when analysis results conflict with monitoring data, do not rule out a problem with the monitoring tool itself. Also read the tool's documentation and metric descriptions carefully to avoid misinterpreting metrics.

- Troubleshooting multi-threaded hangs, slowness, and contention: use pstack and GDB to inspect the state of each thread.

- Performance hotspot tools: perf, flamegraph.
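The first recommendation can be made concrete with a minimal fixed-size thread pool. This is a generic sketch, not TiFlash's actual pool (whose design is not described here): `ThreadPool` and `submit` are hypothetical names. Worker threads are created once and reused, so submitting a task never calls pthread_create or pthread_join, and idle workers block on a condition variable instead of sleeping.

```cpp
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

class ThreadPool {
public:
    explicit ThreadPool(std::size_t n) {
        for (std::size_t i = 0; i < n; ++i) workers_.emplace_back([this] { loop(); });
    }

    // Drains queued tasks, then joins the workers.
    ~ThreadPool() {
        {
            std::lock_guard<std::mutex> g(m_);
            stopping_ = true;
        }
        cv_.notify_all();
        for (auto& t : workers_) t.join();
    }

    ThreadPool(const ThreadPool&) = delete;
    ThreadPool& operator=(const ThreadPool&) = delete;

    void submit(std::function<void()> task) {
        {
            std::lock_guard<std::mutex> g(m_);
            tasks_.push(std::move(task));
        }
        cv_.notify_one();
    }

private:
    void loop() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(m_);
                // Idle workers block here instead of polling with sleep.
                cv_.wait(lock, [this] { return stopping_ || !tasks_.empty(); });
                if (tasks_.empty()) return;  // stopping and nothing left to run
                task = std::move(tasks_.front());
                tasks_.pop();
            }
            task();  // run outside the lock
        }
    }

    std::mutex m_;
    std::condition_variable cv_;
    std::queue<std::function<void()>> tasks_;
    bool stopping_ = false;
    std::vector<std::thread> workers_;  // declared last: started after the rest is ready
};
```

Because the destructor drains the queue before joining, a scope such as `{ ThreadPool pool(4); pool.submit(task); }` waits for `task` to run before it exits.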
