This article is the final article in the TiDB query optimization and tuning series. It mainly collects some common SQL optimization cases for users, and analyzes them from the perspectives of background, analysis, impact, advice, and practical operation. See the previous chapters for an introduction to the principles of SQL tuning.

Related Reading:

TiDB Query Optimization and Tuning Series (1) Introduction to TiDB Optimizer

TiDB Query Optimization and Tuning Series (2) Introduction to TiDB Query Plan

TiDB Query Optimization and Tuning Series (3) Slow Query Diagnosis, Monitoring and Troubleshooting

Note: The following statements and results are basically recorded in the actual environment at that time. Due to the version update, they may be slightly different from the existing format. For example, count is equivalent to the current estRows.

Case 1: Delete involves excessive data volume and causes OOM

MySQL [db_stat]> explain delete from t_stat where imp_date<='20200202';

+---------------------+--------------+------+------------------------------------------------------+

| id | count | task | operator info |

+---------------------+--------------+------+------------------------------------------------------+

| TableReader_6 | 220895815.00 | root | data:Selection_5 |

| └─Selection_5 | 220895815.00 | cop | le(db_stat.t_stat.imp_date, "20200202") |

| └─TableScan_4 | 220895815.00 | cop | table:t_stat, range:[-inf,+inf], keep order:false |

+---------------------+--------------+------+------------------------------------------------------+

3 rows in set (0.00 sec)

MySQL [db_stat]> select count(*) from t_stat where imp_date<='20200202';

+-----------+

| count(*) |

+-----------+

| 184340473 |

+-----------+

1 row in set (17.88 sec)background

System resource consumption is high when cleaning data in large batches, which may lead to OOM when the memory of the TiDB node is insufficient.

analyze

imp_date Although there is an index on the field, the scan time range is too large. No matter whether the optimizer chooses IndexScan or Table Scan, TiDB must request TiKV Coprocessor to read a large amount of data

influences

- Coprocessor CPU usage of TiKV nodes rises rapidly

- The memory usage of TiDB nodes performing Delete operations increases rapidly because large batches of data are loaded into TiDB memory

Suggest

- When deleting data, narrow the scope of data filtering, or add limit N to delete a batch of data each time

- It is recommended to use the Range partition table to quickly delete according to the partition

Case 2 Unstable execution plan leads to increased query latency

MySQL [db_stat]> explain SELECT * FROM `tbl_article_check_result` `t` WHERE (articleid = '20190925A0PYT800') ORDER BY checkTime desc LIMIT 100 ;

+--------------------------+----------+------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| id | count | task | operator info |

+--------------------------+----------+------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Projection_7 | 100.00 | root | db_stat.t.type, db_stat.t.articleid, db_stat.t.docid, db_stat.t.version, db_stat.t.checkid, db_stat.t.checkstatus, db_stat.t.seclevel, db_stat.t.t1checkstatus, db_stat.t.t2checkstatus, db_stat.t.mdaichannel, db_stat.t.mdaisubchannel, db_stat.t.checkuser, db_stat.t.checktime, db_stat.t.addtime, db_stat.t.havegot, db_stat.t.checkcode |

| └─Limit_12 | 100.00 | root | offset:0, count:100 |

| └─IndexLookUp_34 | 100.00 | root | |

| ├─IndexScan_31 | 30755.49 | cop | table:t, index:checkTime, range:[NULL,+inf], keep order:true, desc |

| └─Selection_33 | 100.00 | cop | eq(db_dayu_1.t.articleid, "20190925A0PYT800") |

| └─TableScan_32 | 30755.49 | cop | table:tbl_article_check_result, keep order:false |

+--------------------------+----------+------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

6 rows in set (0.00 sec)background

articleid and checkTime have a single-column index on the field respectively. Under normal circumstances, the index on articleid is relatively fast, and occasionally when the execution plan is unstable checkTime The index on checkTime causes query latency to reach the minute level

analyze

LIMIT 100 limited to get 100 records, if the correlation between checkTime and articleid column is not high, when the independence assumption fails, the optimizer estimates The number of rows scanned when the index on checkTime 532cc8564afa2311666f3f280923e078--- and the condition articleid is satisfied, may be less than the number of rows scanned by the index on articleid

influences

The service response delay is unstable, and the monitoring Duration occasionally jitters

Suggest

- Manually analyze the table, and regularly analyze with crontab to maintain the accuracy of statistical information

Automatic auto analyze, lower analyze ratio threshold, increase collection frequency, and set running time window

- set global tidb_auto_analyze_ratio=0.2;

- set global tidb_auto_analyze_start_time='00:00 +0800';

- set global tidb_auto_analyze_end_time='06:00 +0800';

- Business modification SQL, use force index to fix the index on the articleid column

- Businesses can use the create binding of SPM (see the above section) to create the binding SQL of the force index without modifying the SQL, which can avoid the performance degradation caused by the unstable execution plan.

Case 3 The query field does not match the data type of the value

MySQL [db_stat]> explain select * from t_like_list where person_id=1535538061143263;

+---------------------+------------+------+-----------------------------------------------------------------------------------+

| id | count | task | operator info |

+---------------------+------------+------+-----------------------------------------------------------------------------------+

| Selection_5 | 1430690.40 | root | eq(cast(db_stat.t_like_list.person_id), 1.535538061143263e+15) |

| └─TableReader_7 | 1788363.00 | root | data:TableScan_6 |

| └─TableScan_6 | 1788363.00 | cop | table:t_like_list, range:[-inf,+inf], keep order:false |

+---------------------+------------+------+-----------------------------------------------------------------------------------+

3 rows in set (0.00 sec)background

person_id There is an index on the column and the selectivity is good, but the execution plan does not go as expected IndexScan

analyze

person_id is a string type, but the stored values are all numbers, and the business thinks that they can be assigned directly; and the optimizer needs to do cast type conversion on the field, so that the index cannot be used

Suggest

where The value of the condition is quoted, and then the execution plan uses the index:

MySQL [db_stat]> explain select * from table:t_like_list where person_id='1535538061143263';

+-------------------+-------+------+----------------------------------------------------------------------------------------------------------+

| id | count | task | operator info |

+-------------------+-------+------+----------------------------------------------------------------------------------------------------------+

| IndexLookUp_10 | 0.00 | root | |

| ├─IndexScan_8 | 0.00 | cop | table:t_like_list, index:person_id, range:["1535538061143263","1535538061143263"], keep order:false |

| └─TableScan_9 | 0.00 | cop | table:t_like_list, keep order:false |

+-------------------+-------+------+----------------------------------------------------------------------------------------------------------+

3 rows in set (0.00 sec)Case 4 Read hotspots lead to increased SQL latency

background

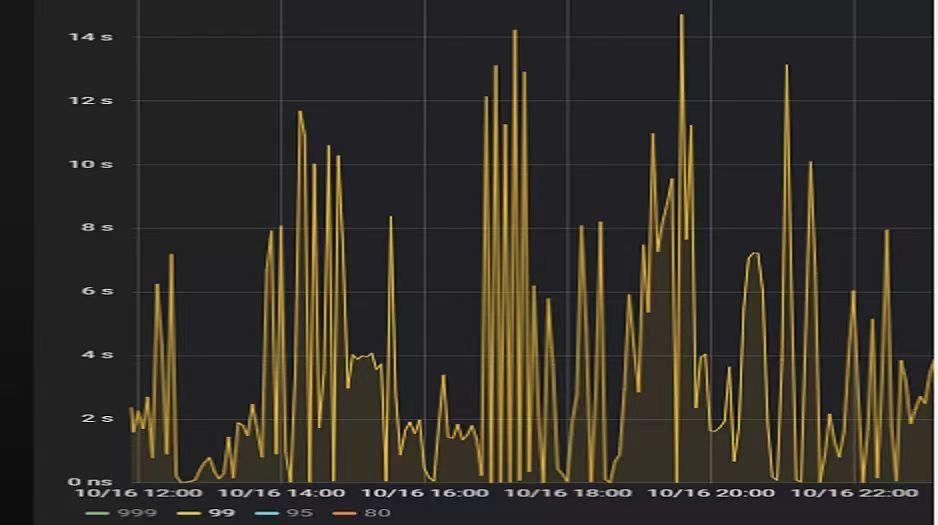

In a TiDB cluster with a data volume of about 600G and more reads and fewer writes, it was found that the Query Summary - Duration indicators monitored by TiDB increased significantly during a certain period of time. The p99 is shown in the figure below.

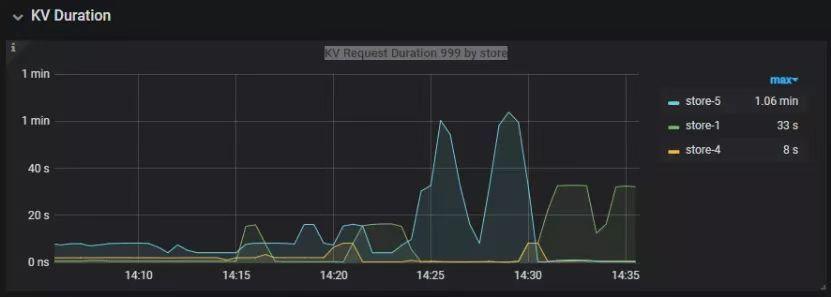

Check that the KV Duration under the monitoring of TiDB has increased significantly. Among them, the KV Request Duration 999 by store monitoring shows that the Duration of multiple TiKV nodes has increased.

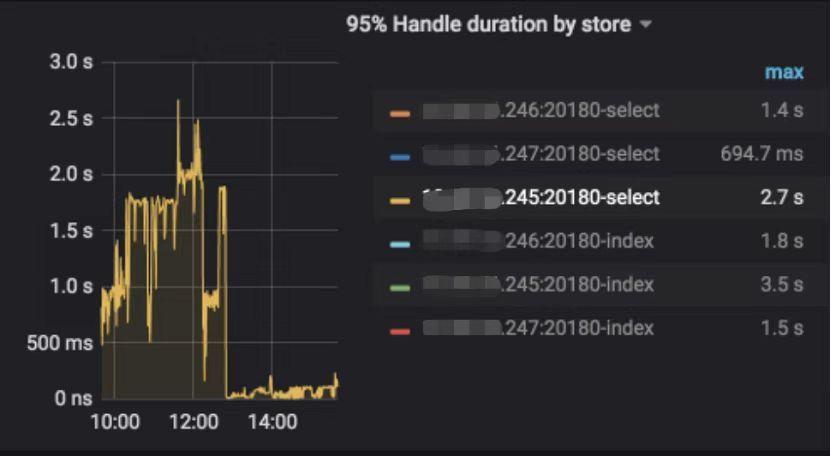

Check out TiKV Monitoring Coprocessor Overview:

Check out Monitoring Coprocessor CPUs:

It was found that the Coprocessor CPU thread pool was almost full. Let's start to analyze the logs and investigate the reason for the increase in Duration and Coprocessor CPU.

Slow query log analysis

Use the pt-query-digest tool to analyze the TiDB slow query log:

./pt-query-digest tidb_slow_query.log > result分析慢日志解析出来的TopSQL 发现Process keys和---7c3195da88aea405ef96b0ebcf234beb Process time线性相关, Process keys数量多的SQL 的Process time时间不It must be longer, such as the following SQL Process keys is 22.09M, Process time is 51s.

The SQL below Process keys is 12.68M, but Process time is as high as 142353s.

Filter Process time more SQL, find 3 typical ones slow query , and analyze the specific execution plan.

SQL1

select a.a_id, a.b_id,uqm.p_id from a join hsq on a.b_id=hsq.id join uqm on a.a_id=uqm.id; SQL2

select distinct g.abc, g.def, g.ghi, h.abcd, hi.jq from ggg g left join ggg_host gh on g.id = gh.ggg_id left join host h on gh.a_id = h.id left join a_jq hi on h.id = hi.hid where h.abcd is not null and h.abcd <> '' and hi.jq is not null and hi.jq <> ''; SQL3

select tb1.mt, tb2.name from tb2 left join tb1 on tb2.mtId=tb1.id where tb2.type=0 and (tb1.mt is not null and tb1.mt != '') and (tb2.name is not null or tb2.name != ''); No abnormality was found in the analysis of the execution plan, and the statistics of the related tables were not expired. Continue to analyze the TiDB and TiKV logs.

General log analysis

View the region distribution in the log line marked [slow-query] in the TiKV log.

more tikv.log.2019-10-16-06\:28\:13 |grep slow-query |awk -F ']' '{print $1}' | awk '{print $6}' | sort | uniq -c | sort –nFind the 3 most frequently accessed regions:

73 29452

140 33324

757 66625The number of visits to these regions is much higher than that of other regions, and then the table names to which these regions belong are located. First check the table_id and start_ts of the row where [slow-query] is located, and then query the TiDB log to get the table name. For example, table_id is 1318 and start_ts is 411837294180565013. Use the following command to filter and find that it is the table involved in the above slow query SQL.

more tidb-2019-10-14T16-40-51.728.log | grep '"/[1318/]"' |grep 411837294180565013solve

Do a split operation on these regions, taking region 66625 as an example, the command is as follows (replace xxxx with the actual pd address).

pd-ctl –u http://x.x.x.x:2379 operator add split-region 66625View PD log after operation

[2019/10/16 18:22:56.223 +08:00] [INFO] [operator_controller.go:99] ["operator finish"] [region-id=30796] [operator="\"admin-split-region (kind:admin, region:66625(1668,3), createAt:2019-10-16 18:22:55.888064898 +0800 CST m=+110918.823762963, startAt:2019-10-16 18:22:55.888223469 +0800 CST m=+110918.823921524, currentStep:1, steps:[split region with policy SCAN]) finished\""]The log shows that the region has been split, and then check the slow-query related to the region:

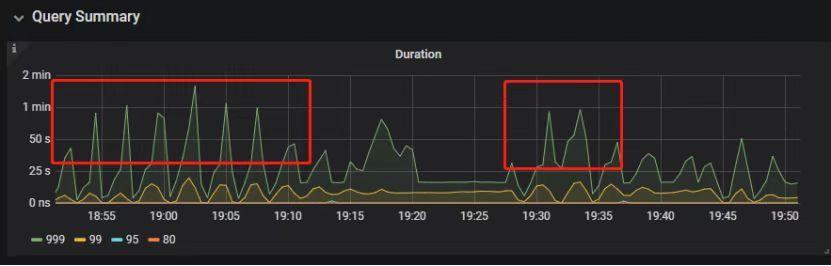

more tikv.log.2019-10-16-06\:28\:13 |grep slow-query | grep 66625After observing for a period of time, confirm that 66625 is no longer a hotspot region, and continue to process other hotspot regions. After all hot regions are processed, the monitoring Query Summary - Duration is significantly reduced.

Duration is stable for a period of time, and there is still a higher Duration after 18:55:

Observe the stressful tikv and remove the leader of the hot region:

pd-ctl –u http://x.x.x.x:2379 operator add transfer-leader 1 2 //把 region1 的 leader 调度到 store2After the leader is removed, the Duration of the original TiKV node decreases immediately, but the Duration of the new TiKV node increases accordingly.

After several split operations were performed on the hotspot region, the Duration dropped significantly and returned to stability.

Summary

For the read hotspot problem of distributed databases, it is sometimes difficult to solve the problem by optimizing SQL. It is necessary to analyze the monitoring and logs of the entire TiDB cluster to locate the cause. Severe read hotspots may cause some TiKVs to reach resource bottlenecks. This short-board effect limits the full performance of the entire cluster. By splitting regions, the hotspot regions can be distributed to more TiKV nodes, so that the load of each TiKV can be reduced. Balance as much as possible to mitigate the impact of read hotspots on SQL query performance. For more solutions to hot issues, please refer to TiDB Query Optimization and Tuning Series (4) Adjustment and Optimization Principles of Query Execution Plans .

Case 5 SQL execution plan is not allowed

background

SQL execution time suddenly increases

analyze

- SQL statement

select count(*)

from tods.bus_jijin_trade_record a, tods.bus_jijin_info b

where a.fund_code=b.fund_code and a.type in ('PURCHASE','APPLY')

and a.status='CANCEL_SUCCESS' and a.pay_confirm_status = 1

and a.cancel_app_no is not null and a.id >= 177045000

and a.updated_at > date_sub(now(), interval 48 hour) ;Execution result, it takes 1 minute 3.7s:

mysql> select count(*)

-> from tods.bus_jijin_trade_record a, tods.bus_jijin_info b

-> where a.fund_code=b.fund_code and a.type in ('PURCHASE','APPLY')

-> and a.status='CANCEL_SUCCESS' and a.pay_confirm_status = 1

-> and a.cancel_app_no is not null and a.id >= 177045000

-> and a.updated_at > date_sub(now(), interval 48 hour) ;

+----------+

| count(*) |

+----------+

| 708 |

+----------+

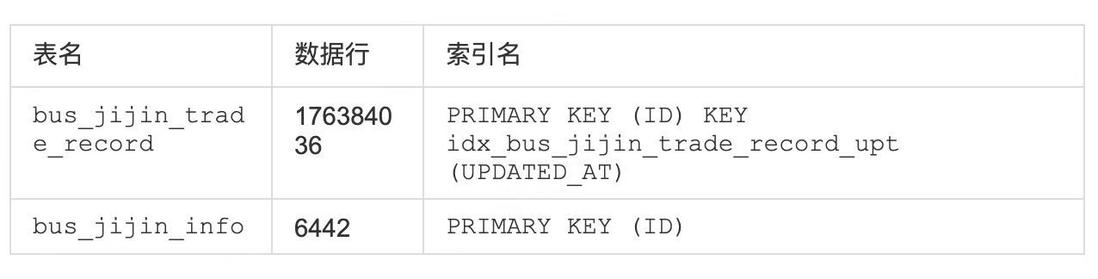

1 row in set (1 min 3.77 sec)- index information

- View execution plan

mysql> explain

-> select count(*)

-> from tods.bus_jijin_trade_record a, tods.bus_jijin_info b

-> where a.fund_code=b.fund_code and a.type in ('PURCHASE','APPLY')

-> and a.status='CANCEL_SUCCESS' and a.pay_confirm_status = 1

-> and a.cancel_app_no is not null and a.id >= 177045000

-> and a.updated_at > date_sub(now(), interval 48 hour) ;

+----------------------------+--------------+------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| id | count | task | operator info |

+----------------------------+--------------+------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| StreamAgg_13 | 1.00 | root | funcs:count(1) |

| └─HashRightJoin_27 | 421.12 | root | inner join, inner:TableReader_18, equal:[eq(a.fund_code, b.fund_code)] |

| ├─TableReader_18 | 421.12 | root | data:Selection_17 |

| │ └─Selection_17 | 421.12 | cop | eq(a.pay_confirm_status, 1), eq(a.status, "CANCEL_SUCCESS"), gt(a.updated_at, 2020-03-03 22:31:08), in(a.type, "PURCHASE", "APPLY"), not(isnull(a.cancel_app_no)) |

| │ └─TableScan_16 | 145920790.55 | cop | table:a, range:[177045000,+inf], keep order:false |

| └─TableReader_37 | 6442.00 | root | data:TableScan_36 |

| └─TableScan_36 | 6442.00 | cop | table:b, range:[-inf,+inf], keep order:false |

+----------------------------+--------------+------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+

TableScan_16,TableScan_36:表示在 TiKV 端分别对表 a 和 b 的数据进行扫描,其中 TableScan_16 扫描了 1.46 亿的行数;

Selection_17:表示满足表 a 后面 where 条件的数据;

TableReader_37: 由于表 b 没有独立的附加条件,所以直接将这部分数据返回给 TiDB;

TableReader_18:将各个 coprocessor 满足 a 表条件的结果返回给 TiDB;

HashRightJoin_27:将 TableReader_37 和 TableReader_18 上的结果进行 hash join;

StreamAgg_13:进一步统计所有行数,将数据返回给客户端;You can see that the condition id of table a (bus_jijin_trade_record) in the statement >= 177045000, and updated_at > date_sub(now(), interval 48 hour), these two columns have indexes respectively, but TiDB still chooses full table scan.

Query data partitions according to the above two conditions

mysql> SELECT COUNT(*) FROM tods.bus_jijin_trade_record WHERE id >= 177045000 ;

+-----------+

| COUNT(*) |

+-----------+

| 145917327 |

+-----------+

1 row in set (16.86 sec)

mysql> SELECT COUNT(*) FROM tods.bus_jijin_trade_record WHERE updated_at > date_sub(now(), interval 48 hour) ;

+-----------+

| COUNT(*) |

+-----------+

| 713682 |

+-----------+ It can be seen that the table bus_jijin_trade_record has a data volume of 170 million, and the index on the updated_at field should be used.

Using the forced hint to execute, the execution is completed in 6.27 seconds, and the speed is increased by 10 times from the previous 63s to the current 6.3s.

mysql> select count(*)

-> from tods.bus_jijin_trade_record a use index(idx_bus_jijin_trade_record_upt), tods.bus_jijin_info b

-> where a.fund_code=b.fund_code and a.type in ('PURCHASE','APPLY')

-> and a.status='CANCEL_SUCCESS' and a.pay_confirm_status = 1

-> and a.cancel_app_no is not null and a.id >= 177045000

-> and a.updated_at > date_sub(now(), interval 48 hour) ;

+----------+

| count(*) |

+----------+

| 709 |

+----------+

1 row in set (6.27 sec)Execution plan after forcing hint:

mysql> explain

-> select count(*)

-> from tods.bus_jijin_trade_record a use index(idx_bus_jijin_trade_record_upt), tods.bus_jijin_info b

-> where a.fund_code=b.fund_code and a.type in ('PURCHASE','APPLY')

-> and a.status='CANCEL_SUCCESS' and a.pay_confirm_status = 1

-> and a.cancel_app_no is not null and a.id >= 177045000

-> and a.updated_at > date_sub(now(), interval 48 hour) ;

+------------------------------+--------------+------+----------------------------------------------------------------------------------------------------------------------------+

| id | count | task | operator info |

+------------------------------+--------------+------+----------------------------------------------------------------------------------------------------------------------------+

| StreamAgg_13 | 1.00 | root | funcs:count(1) |

| └─HashRightJoin_24 | 421.12 | root | inner join, inner:IndexLookUp_20, equal:[eq(a.fund_code, b.fund_code)] |

| ├─IndexLookUp_20 | 421.12 | root | |

| │ ├─Selection_18 | 146027634.83 | cop | ge(a.id, 177045000) |

| │ │ └─IndexScan_16 | 176388219.00 | cop | table:a, index:UPDATED_AT, range:(2020-03-03 23:05:30,+inf], keep order:false |

| │ └─Selection_19 | 421.12 | cop | eq(a.pay_confirm_status, 1), eq(a.status, "CANCEL_SUCCESS"), in(a.type, "PURCHASE", "APPLY"), not(isnull(a.cancel_app_no)) |

| │ └─TableScan_17 | 146027634.83 | cop | table:bus_jijin_trade_record, keep order:false |

| └─TableReader_31 | 6442.00 | root | data:TableScan_30 |

| └─TableScan_30 | 6442.00 | cop | table:b, range:[-inf,+inf], keep order:false |

+------------------------------+--------------+------+----------------------------------------------------------------------------------------------------------------------------+Using the execution plan after hint, it is estimated that the index on updated_at will be scanned 176388219, and the full table scan is selected without selecting the index. It can be determined that the execution plan is faulty due to incorrect statistics.

Check out the statistics on table bus_jijin_trade_record .

mysql> show stats_meta where table_name like 'bus_jijin_trade_record' and db_name like 'tods';

+---------+------------------------+---------------------+--------------+-----------+

| Db_name | Table_name | Update_time | Modify_count | Row_count |

+---------+------------------------+---------------------+--------------+-----------+

| tods | bus_jijin_trade_record | 2020-03-05 22:04:21 | 10652939 | 176381997 |

+---------+------------------------+---------------------+--------------+-----------+

mysql> show stats_healthy where table_name like 'bus_jijin_trade_record' and db_name like 'tods';

+---------+------------------------+---------+

| Db_name | Table_name | Healthy |

+---------+------------------------+---------+

| tods | bus_jijin_trade_record | 93 |

+---------+------------------------+---------+ According to the statistics, the table bus_jijin_trade_record has 176381997, and the number of modified rows is 10652939. The health of the table is: (176381997-10652939)/176381997 *100=93.

solve

Regather statistics

mysql> set tidb_build_stats_concurrency=10;

Query OK, 0 rows affected (0.00 sec)

#调整收集统计信息的并发度,以便快速对统计信息进行收集

mysql> analyze table tods.bus_jijin_trade_record;

Query OK, 0 rows affected (3 min 48.74 sec)View execution plan without hint statement

mysql> explain select count(*)

-> from tods.bus_jijin_trade_record a, tods.bus_jijin_info b

-> where a.fund_code=b.fund_code and a.type in ('PURCHASE','APPLY')

-> and a.status='CANCEL_SUCCESS' and a.pay_confirm_status = 1

-> and a.cancel_app_no is not null and a.id >= 177045000

-> and a.updated_at > date_sub(now(), interval 48 hour) ;;

+------------------------------+-----------+------+----------------------------------------------------------------------------------------------------------------------------+

| id | count | task | operator info |

+------------------------------+-----------+------+----------------------------------------------------------------------------------------------------------------------------+

| StreamAgg_13 | 1.00 | root | funcs:count(1) |

| └─HashRightJoin_27 | 1.99 | root | inner join, inner:IndexLookUp_23, equal:[eq(a.fund_code, b.fund_code)] |

| ├─IndexLookUp_23 | 1.99 | root | |

| │ ├─Selection_21 | 626859.65 | cop | ge(a.id, 177045000) |

| │ │ └─IndexScan_19 | 757743.08 | cop | table:a, index:UPDATED_AT, range:(2020-03-03 23:28:14,+inf], keep order:false |

| │ └─Selection_22 | 1.99 | cop | eq(a.pay_confirm_status, 1), eq(a.status, "CANCEL_SUCCESS"), in(a.type, "PURCHASE", "APPLY"), not(isnull(a.cancel_app_no)) |

| │ └─TableScan_20 | 626859.65 | cop | table:bus_jijin_trade_record, keep order:false |

| └─TableReader_37 | 6442.00 | root | data:TableScan_36 |

| └─TableScan_36 | 6442.00 | cop | table:b, range:[-inf,+inf], keep order:false |

+------------------------------+-----------+------+----------------------------------------------------------------------------------------------------------------------------+

9 rows in set (0.00 sec)It can be seen that after the statistics are collected, the current execution plan has gone through the index scan, which is consistent with the behavior of manually adding hints, and the number of rows scanned is 757743 as expected.

At this time, the execution time becomes 1.69s. If the execution plan does not change, it should be due to the increase in the cache hit rate.

mysql> select count(*)

-> from tods.bus_jijin_trade_record a, tods.bus_jijin_info b

-> where a.fund_code=b.fund_code and a.type in ('PURCHASE','APPLY')

-> and a.status='CANCEL_SUCCESS' and a.pay_confirm_status = 1

-> and a.cancel_app_no is not null and a.id >= 177045000

-> and a.updated_at > date_sub(now(), interval 48 hour) ;

+----------+

| count(*) |

+----------+

| 712 |

+----------+

1 row in set (1.69 sec)Summary

It can be seen that the deterioration of the SQL execution efficiency is caused by inaccurate statistical information, and a correct execution plan is obtained after collecting the statistical information.

From the final result of 712 rows of records, creating a joint index can greatly reduce the amount of scanned data and further improve performance. In the case that the performance has already met the business requirements, the joint index will have additional costs, which are reserved for later attempts.

This article is the fifth and final article in the "TiDB Query Optimization and Tuning" series. Through this series of articles, we introduced the theoretical knowledge of TiDB optimizer, query plan, slow query and tuning in detail, and shared the actual combat in this chapter. I hope that through this series of articles, you can have a deeper understanding of the TiDB optimizer and better system performance through these tuning techniques.

If you have any suggestions for TiDB products, welcome to communicate with us at internals.tidb.io .

Click to see more TiDB query optimization and tuning articles

**粗体** _斜体_ [链接](http://example.com) `代码` - 列表 > 引用。你还可以使用@来通知其他用户。