Author: Pan Shengwei (Ten Mian)

How to use cloud products effectively to prepare for a big business promotion is a question many people care about. With the 618 sale about to begin, let's talk about the best practices we have accumulated.

Click the link below to view the video explanation now!

https://yqh.aliyun.com/live/detail/28697

The challenge of uncertainty in the big promotion

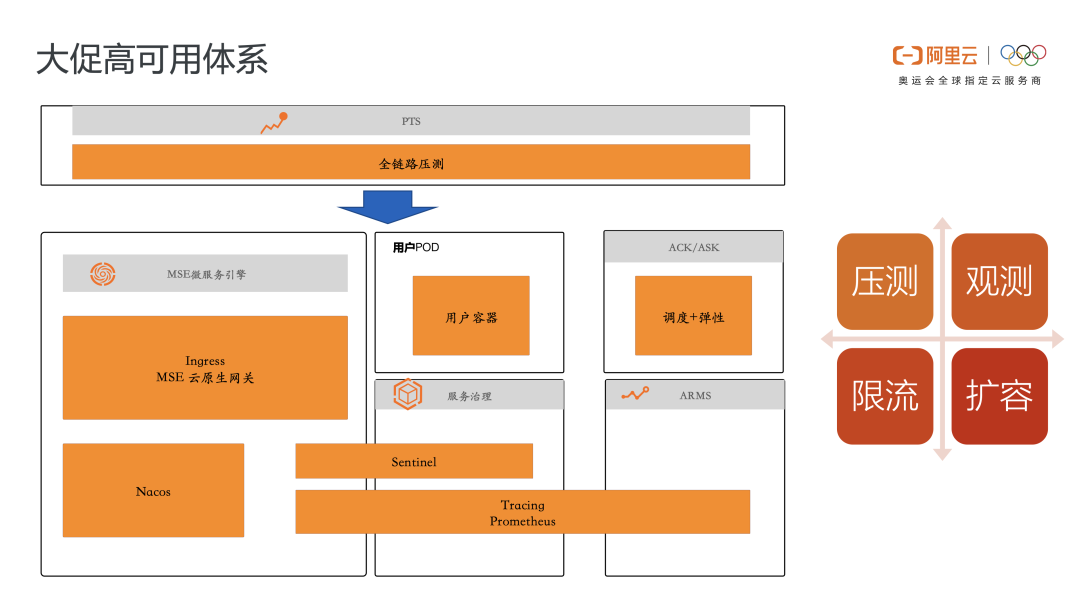

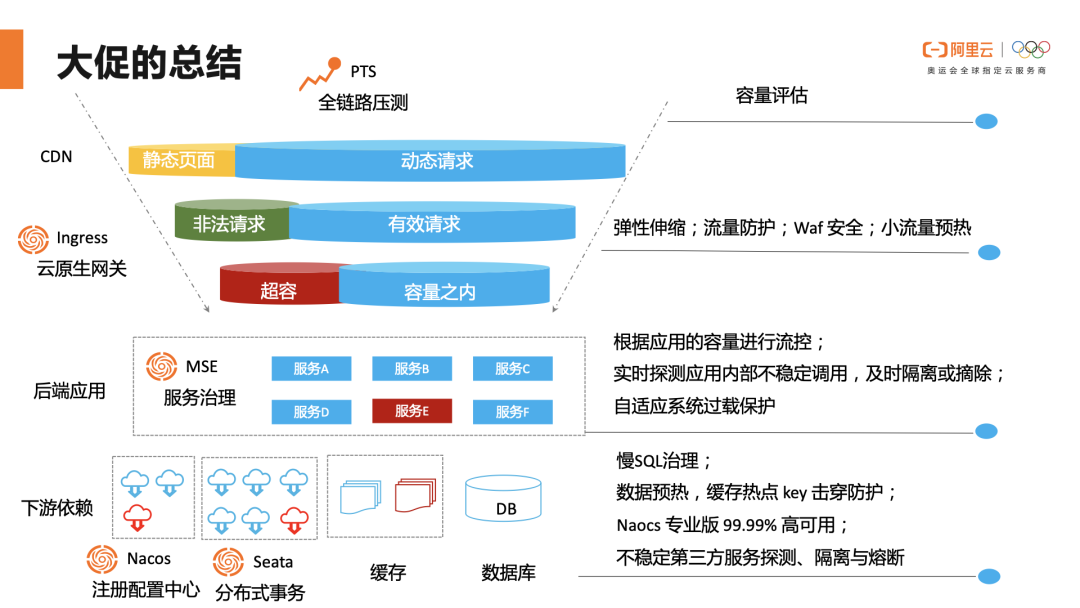

The picture above gives the big picture of our business. During a big promotion we face many uncertain factors: uncertain traffic, uncertain user behavior, uncertain security attacks, uncertain R&D change risk, and uncertain failure impact. The best approach is to simulate and protect from the entrance, but in practice that cannot solve every problem, so further fault drills and traffic protection are required inside the IDC.

Each kind of uncertainty calls for its own countermeasure:

- Traffic is uncertain, so we use capacity assessment and business assessment to determine the traffic peak, and then turn traffic into a deterministic condition through rate limiting.

- User behavior is uncertain, so we simulate user behavior across multiple scenarios in stress tests and drills, make the simulation as realistic as possible, and find and fix system bottlenecks and optimization points in time.

- Security attacks are uncertain, so the gateway needs WAF protection: it should identify black-market (malicious) traffic and restrict its access through flow control, protecting the traffic of normal users.

- R&D change risk is uncertain, so we restrict unnecessary changes during the big promotion through change control.

- The impact of a failure is uncertain, so we temper the system through continuous failure drills, learn the impact of each failure, and contain it as much as possible to avoid a system avalanche.

The big promotion is a project, and the methodology of preparing for it is to turn its uncertainty into certainty. I hope to share some of that methodology here, together with best practices for our products.

Target determination

A big promotion can have many goals, such as supporting peak traffic of XX, cutting costs by 30%, and keeping the user experience smooth. From an engineer's point of view, the goals of big promotion preparation generally revolve around cost, efficiency, and stability. For cost, we consider the resource cost of Nacos and the cloud native gateway and the number of machines to request, and make good use of the elasticity of K8s to balance stability against cost. For efficiency, PTS provides an out-of-the-box full-link stress testing tool that generates stress test traffic closest to real user behavior, which greatly improves efficiency; in addition, the MSE Switch capability provides dynamic configuration that helps with executing plans. For stability, MSE service governance provides end-to-end flow control, and its lossless online and offline capability keeps traffic smooth and lossless during elastic scaling throughout the big promotion. We can also use the full-link grayscale capability of MSE service governance to run a full-link functional rehearsal with test traffic and expose problems as early as possible.

Preparation process

Put simply, the big promotion preparation process focuses on four points: capacity assessment, plan preparation, warm-up, and rate limiting. Doing all four turns the uncertain factors into deterministic conditions, so that the whole system stays stable even at the traffic peak of the big promotion.

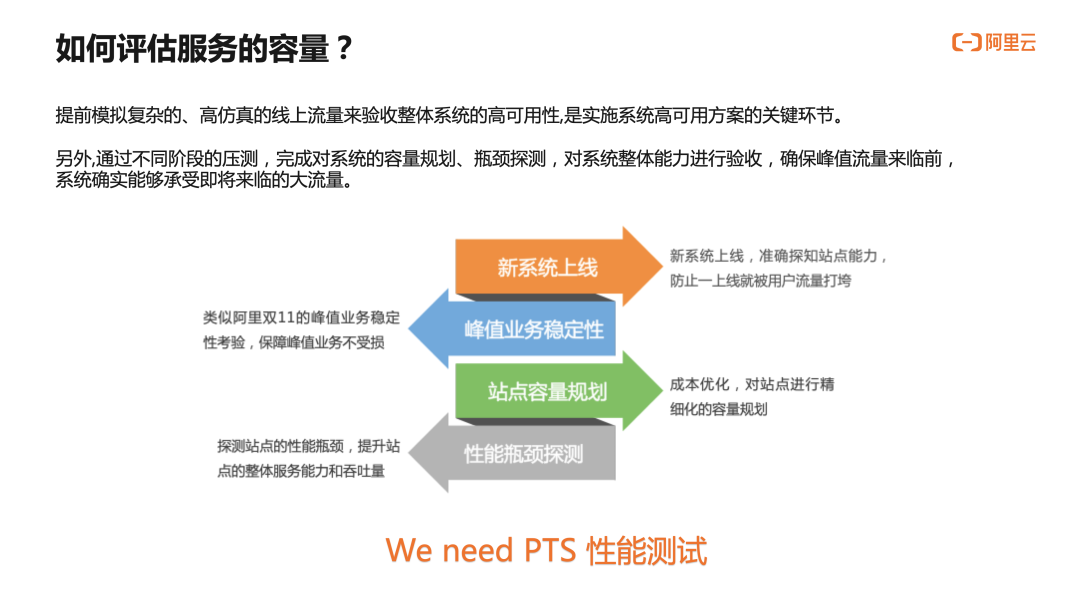

Capacity assessment

The purpose of capacity assessment is to strike the best balance between cost and stability. On the one hand, we need to determine the performance baseline of our applications under the business conditions expected for the big promotion; on the other hand, to meet the cost optimization goal, we need to determine the budget for machines.

- Ensure application performance through performance evaluation and performance optimization

- Assess business changes as well as system changes

- Determine the full-link stress test model based on the service model evaluation, and conduct the full-link stress test acceptance

- Evaluate the limit water level of the capacity, keep the water level within 60% of capacity, and enable elastic scale-out and scale-in.
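As a rough illustration of the 60% water level rule above, the sketch below estimates how many instances are needed so that the expected peak stays at or below a target water level. The class name, the method names, and any numbers passed in are hypothetical; in practice the per-instance capacity would come from a stress test.

```java
// Hypothetical helper: sizes a service so that peak traffic stays within a target water level.
final class CapacityPlan {
    private CapacityPlan() {}

    /**
     * @param peakQps          expected peak traffic for the whole service
     * @param perInstanceQps   capacity limit of one instance, measured by stress testing
     * @param targetWaterLevel fraction of capacity to use at peak, for example 0.6
     * @return the smallest instance count that keeps utilization at or below the target
     */
    static int instancesNeeded(double peakQps, double perInstanceQps, double targetWaterLevel) {
        if (peakQps < 0 || perInstanceQps <= 0 || targetWaterLevel <= 0 || targetWaterLevel > 1) {
            throw new IllegalArgumentException("invalid capacity inputs");
        }
        double usablePerInstance = perInstanceQps * targetWaterLevel;
        return (int) Math.ceil(peakQps / usablePerInstance);
    }

    /** Water level (utilization) of the cluster at the given traffic. */
    static double waterLevel(double qps, double perInstanceQps, int instances) {
        return qps / (perInstanceQps * instances);
    }
}
```

For example, `CapacityPlan.instancesNeeded(10000, 500, 0.6)` sizes a service whose instances each sustain 500 QPS for a 10,000 QPS peak. Elastic scaling can then add or remove instances as the measured water level drifts away from the target.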

- Performance evaluation

First, stress-test the service through the PTS platform to find out the service capability of the system's externally exposed core interfaces and the corresponding system water level. On Alibaba Cloud, PTS is the first recommendation for stress testing and full-link stress testing.

Compared with general stress testing tools, PTS has the following advantages:

Advantage 1: Powerful functions

- Fully SaaS-based; no additional installation or deployment is required.

- A zero-install cloud recorder that is well suited to mobile app scenarios.

- A data factory function that formats API/URL request parameters for stress testing with zero coding.

- Fully visual orchestration of complex scenarios, with support for login state sharing, parameter passing, and business assertions. Extensible instructions also support multiple forms of think time, traffic flood storage, and more.

- Original RPS and concurrency stress testing modes.

- Traffic can be adjusted dynamically within seconds, and even millions of QPS can be delivered as an instantaneous pulse.

- A powerful reporting function that displays and aggregates real-time data from the stress test clients across multiple dimensions, and automatically generates reports for viewing and export.

- Stress test APIs and scenarios can be debugged, and detailed logs can be queried during the stress test.

Advantage 2: Realistic traffic

- Traffic originates from hundreds of cities across the country and covers the various operators (and can be extended overseas), so it realistically simulates where end-user traffic comes from, and the resulting reports and data are closer to the real user experience.

- Load generation capacity has no fixed upper limit; stress test traffic of up to 10 million RPS is supported.

When the performance stress test reveals a system bottleneck, we need to optimize the system layer by layer.

- Business and system changes

Compare each big promotion with the previous one (if there is one for reference) in terms of stability, and assess the business trend: whether the business has grown by an order of magnitude, and whether the business gameplay has changed. For example, assess whether gameplay such as the 618 pre-sale, red envelope grabs, and full-reduction discounts introduces stability risks to the system. Analyze user trends, look at the key paths of the system from a global perspective, safeguard the core business, and sort out strong and weak dependencies.

- Based on business model evaluation, determine the full-link stress test model, and conduct full-link stress test acceptance

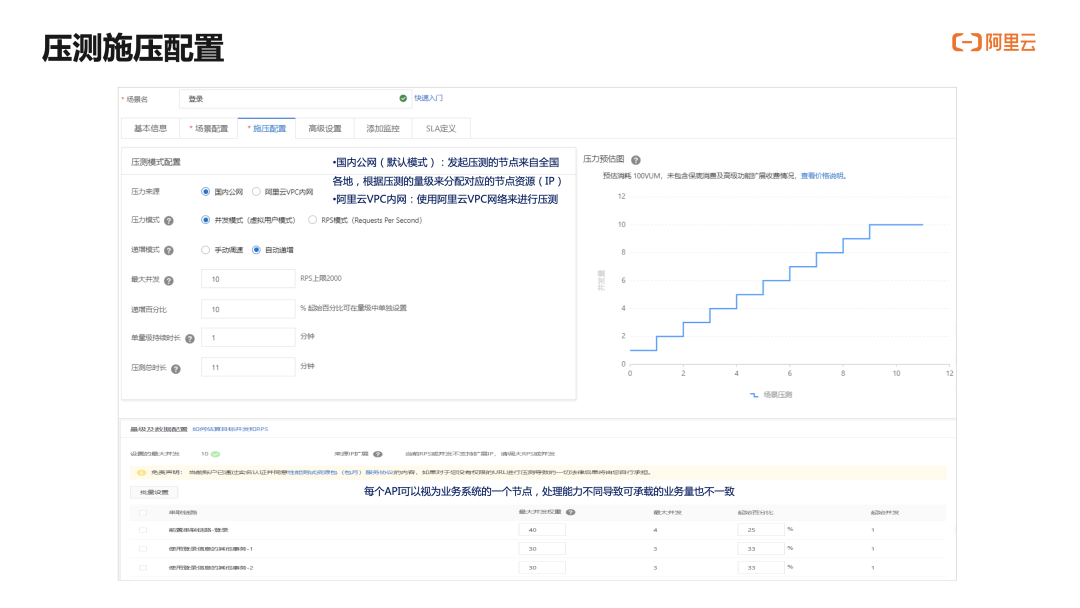

To initiate a performance stress test, you first need to create a stress test scenario. A stress test scenario contains one or more parallel services (i.e., serial links), and each service contains one or more serial requests (i.e., APIs).

Stress testing lets us do a good job of risk identification and performance management for the system. Through full-link stress testing, we can discover system bottlenecks in advance, identify performance risks, and prevent system performance from degrading.

- Resource capacity evaluation and reference for cloud products

- MSE Nacos Capacity Reference

The water level of the Nacos registration and configuration center can be evaluated from the number of connections and the number of services. Some applications may expose a particularly large number of services. In addition, the traffic increase during the big promotion causes applications to scale out dynamically, and as the number of Pods grows, the number of connections and the number of service providers also grow linearly. It is therefore necessary to keep the water level within 60% before the big promotion.

- MSE Gateway Capacity Reference

The most intuitive way to evaluate the gateway water level is by CPU usage. We recommend about 30%, and no more than 60% at most. Why so low? The reference water level comes from the group's internal experience in operating gateways. Gateways often see burst traffic, especially in e-commerce scenarios such as big promotions and flash sales, and traffic attacks such as DDoS must also be considered. Capacity cannot be planned on the assumption that the CPU runs at 80% or 90%: once an emergency occurs, the gateway is easily saturated. Alibaba's internal gateways are a rather extreme case, with gateway CPU generally kept below 10%. Another factor is that gateway burst traffic is usually instantaneous, so the instantaneous CPU may spike very high while the averaged monitoring value stays moderate. Second-level monitoring of the gateway is therefore also essential.

Plan

In one sentence: deliberately prepare a response plan for each potential or possible risk.

For example, many switches affect user experience and performance, and some functions are unrelated to the core business, such as the observation and analysis of user behavior; we need to turn these functions off through the plan platform during the big promotion. There are also plans related to business traffic, involving flow control, rate limiting, and degradation, which are described in detail later.

Warm-up

Why warm up? It has to do with our traffic model. In many cases traffic naturally rises and falls slowly. That is not the case during a big promotion: traffic may suddenly surge to dozens of times its normal level, stay there for a while, and then slowly fall. What should be warmed up? We did a lot of warm-up at the time: data warm-up, application warm-up, and connection warm-up.

- Data warm-up

Let's start with data warm-up. When we access a piece of data, the access path may be long; the closer the data sits to the application, the better. So which data should be warmed up, and how? Start with data that is especially large in volume and tied to users, such as shopping carts, red envelopes, and coupons, and warm up the database for it.

- Simulate user queries against the database so that the data is loaded into memory.

- Many applications are shielded by a cache. Once the cache fails, the database goes down because it cannot withstand the traffic. In this case, the data should be warmed into the cache in advance.

The purpose of data warm-up is to shorten the access path of key data: do not read from the cache if you can read from memory, and do not access the database if you can read from the cache.
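The read order described above (memory first, then cache, then database) can be sketched as follows. This is a minimal illustration under stated assumptions: `TieredReader` is a hypothetical class, the cache is modeled as a plain `Map` standing in for a remote cache such as Redis, and the database is modeled as a lookup function.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical three-tier reader: local memory, then a cache, then the database.
final class TieredReader<K, V> {
    private final Map<K, V> localMemory = new ConcurrentHashMap<>();
    private final Map<K, V> cache;          // stand-in for a remote cache
    private final Function<K, V> database;  // stand-in for a database query

    TieredReader(Map<K, V> cache, Function<K, V> database) {
        this.cache = cache;
        this.database = database;
    }

    /** Reads from the nearest tier that has the value and fills the tiers above it. */
    V get(K key) {
        V value = localMemory.get(key);
        if (value != null) {
            return value;                   // shortest path: no cache or database access
        }
        value = cache.get(key);
        if (value == null) {
            value = database.apply(key);    // longest path: only when both tiers miss
            if (value == null) {
                return null;
            }
            cache.put(key, value);
        }
        localMemory.put(key, value);
        return value;
    }

    /** Warm-up: touch the hot keys before the promotion so later reads stay in memory. */
    void warmUp(Iterable<K> hotKeys) {
        for (K key : hotKeys) {
            get(key);
        }
    }
}
```

Calling `warmUp` with the keys of hot shopping carts, red envelopes, and coupons before traffic arrives means that peak-time reads of those keys no longer reach the database. Expiry and invalidation are deliberately left out of this sketch.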

- Application warm-up

1. Small-traffic service warm-up

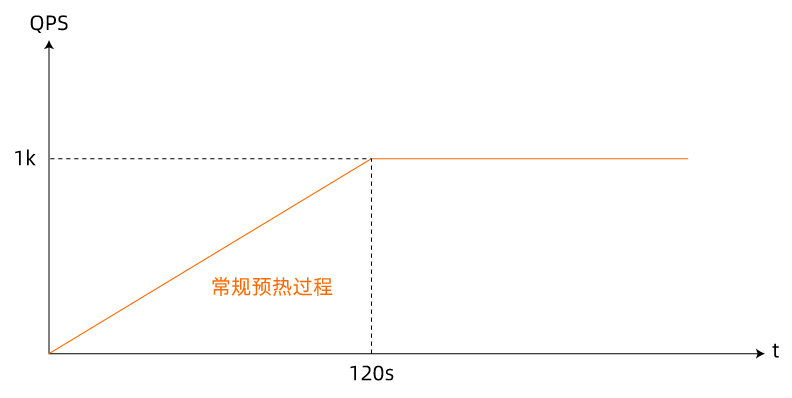

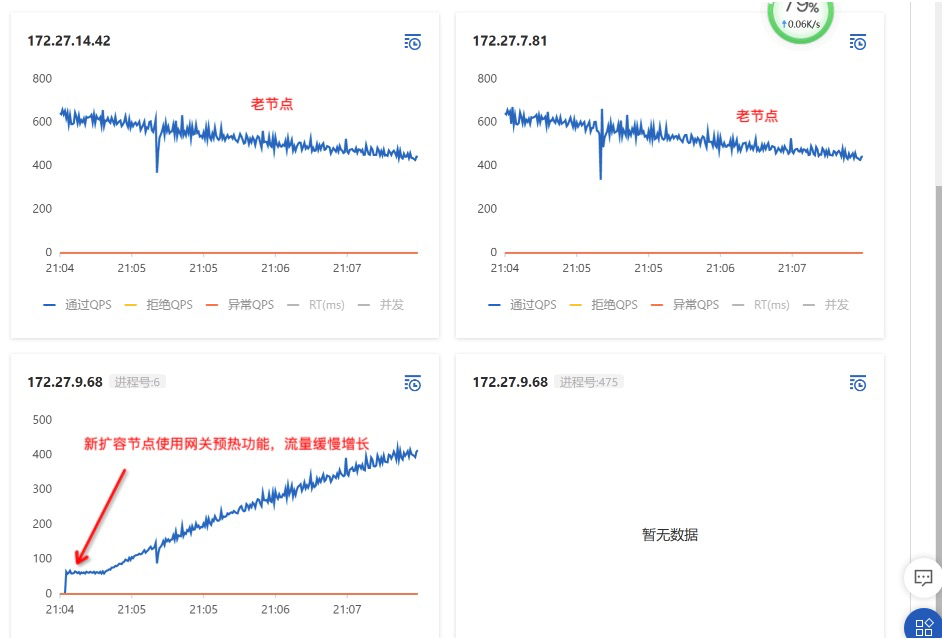

In the general scenario, a newly released microservice application instance immediately takes an equal share of the total online QPS with the other, already running instances. With small-traffic warm-up, the service consumer computes a weight for each service provider instance based on its start-up time and, combined with the load balancing algorithm, lets the traffic to a newly started instance increase gradually to the normal level as its uptime grows. This helps the newly started instance warm up. The QPS curve over time is shown in the figure:

QPS curve of an application during small-traffic warm-up

We can also adjust the small-traffic warm-up curve, so that traffic shifts onto newly scaled-out machines smoothly and losslessly during the big promotion.
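To make the weight calculation concrete, here is a minimal sketch of how a consumer might derive a provider's effective weight from its uptime. It is not the MSE implementation: the class, the method, and the `curve` exponent used to shape the ramp are assumptions introduced for illustration.

```java
// Hypothetical consumer-side weight calculation for small-traffic warm-up.
final class WarmupWeight {
    private WarmupWeight() {}

    /**
     * @param uptimeMillis time since the provider instance started
     * @param warmupMillis length of the warm-up period
     * @param fullWeight   weight of a fully warmed instance
     * @param curve        1.0 gives a linear ramp; larger values keep traffic lower for longer
     * @return the weight the load balancer should use for this instance right now
     */
    static int effectiveWeight(long uptimeMillis, long warmupMillis, int fullWeight, double curve) {
        if (fullWeight <= 0) {
            return 0;
        }
        if (warmupMillis <= 0 || uptimeMillis >= warmupMillis) {
            return fullWeight;              // warm-up finished: take a normal share
        }
        double progress = Math.max(0.0, (double) uptimeMillis / warmupMillis);
        int weight = (int) (fullWeight * Math.pow(progress, curve));
        // Never drop to zero, so a new instance still receives a trickle of traffic.
        return Math.max(1, Math.min(weight, fullWeight));
    }
}
```

A weighted load balancer on the consumer side would call `effectiveWeight` for each provider when choosing a target, so that a freshly started instance receives only a small share of requests at first.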

Schematic diagram of the small-traffic warm-up process

Small-traffic warm-up effectively prevents a class of incidents in which a Pod goes down right after starting: under high concurrency and heavy traffic, slow resource initialization causes large numbers of requests to respond slowly, block, and exhaust resources. It is worth mentioning that the MSE cloud native gateway also supports small-traffic warm-up. Let's look at the effect in practice. The 68 nodes are the instances that have just been scaled out.

2. Parallel class loading

Since JDK 7, if the ClassLoader.registerAsParallelCapable method is called, parallel class loading is enabled, and the lock granularity is reduced from the ClassLoader object itself to the name of the class being loaded. In other words, as long as multiple threads are not loading the same class, they do not contend for the same lock in the loadClass method.

The documentation of the ClassLoader.registerAsParallelCapable method reads:

protected static boolean registerAsParallelCapable()

Registers the caller as parallel capable. The registration succeeds if and only if all of the following conditions are met:

1. no instance of the caller has been created

2. all of the super classes (except class Object) of the caller are registered as parallel capable

That is, at registration time the class loader being registered must have no instances, and every class loader on its inheritance chain must have called registerAsParallelCapable. Lower versions of the Tomcat/Jetty webAppClassLoader and fastjson's ASMClassLoader do not enable parallel class loading, so if multiple threads in the application call loadClass at the same time, lock contention becomes very fierce.
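A minimal sketch of what opting in looks like for a custom class loader. The two loader classes and the `lockFor` helper are hypothetical names introduced for illustration; `registerAsParallelCapable` and `getClassLoadingLock` are the standard `java.lang.ClassLoader` methods.

```java
// Hypothetical class loader that opts in to parallel class loading.
class ParallelCapableLoader extends ClassLoader {
    static {
        // Must run in the static initializer, before any instance of this class exists.
        ClassLoader.registerAsParallelCapable();
    }

    ParallelCapableLoader(ClassLoader parent) {
        super(parent);
    }

    // Exposes the lock that loadClass would synchronize on for this class name.
    Object lockFor(String className) {
        return getClassLoadingLock(className);
    }
}

// Hypothetical class loader that never registers, like the older loaders mentioned above.
class LegacyLoader extends ClassLoader {
    LegacyLoader(ClassLoader parent) {
        super(parent);
    }

    Object lockFor(String className) {
        return getClassLoadingLock(className);
    }
}
```

For the registered loader, `lockFor` returns a dedicated lock object per class name, so threads loading different classes do not block each other. For the unregistered loader it returns the loader itself, which is the single coarse lock that causes the contention described above.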

MSE Agent non-invasively enables parallel class loading for such a class loader before it is loaded, without requiring users to upgrade Tomcat/Jetty, and lets parallel class loading be switched on dynamically through configuration.

Reference material: http://140.205.61.252/2016/01/29/3721/

- Connection warm-up

Take pre-establishing JedisPool connections as an example: connections in pools such as the Redis connection pool should be established in advance, rather than when traffic arrives, which would leave a large number of business threads waiting for connections to be established.

org.apache.commons.pool2.impl.GenericObjectPool#startEvictor

    protected synchronized void startEvictor(long delay) {
        // Cancel any evictor that is already scheduled.
        if (null != _evictor) {
            EvictionTimer.cancel(_evictor);
            _evictor = null;
        }
        // A positive delay schedules a new periodic evictor task.
        if (delay > 0) {
            _evictor = new Evictor();
            EvictionTimer.schedule(_evictor, delay, delay);
        }
    }

JedisPool relies on this timed task to asynchronously establish the minimum number of connections, which means the Redis connections may not yet be established when the application starts.

Active pre-connection: use the GenericObjectPool#preparePool method to prepare the connections manually before they are used.

When a microservice goes online, create min-idle Redis connections in advance while Redis is being initialized, so that the connections are established before the service is published.

The JedisPool internal pool warm-up is one case of a more general problem. Other asynchronous connection logic, such as pre-built database connections, is affected in the same way, so make sure all asynchronously established connection resources are ready before business traffic comes in.
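The difference between lazy and eager connection creation can be sketched without any library. `WarmablePool` below is a hypothetical, deliberately tiny pool written only for this illustration; it is not the commons-pool2 or Jedis API, and its `prepare` method merely plays the role that the text assigns to `GenericObjectPool#preparePool`.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Supplier;

// Hypothetical minimal pool used only to illustrate eager connection warm-up.
final class WarmablePool<C> {
    private final BlockingQueue<C> idle = new LinkedBlockingQueue<>();
    private final Supplier<C> connector;  // opens one connection (the expensive step)
    private final int minIdle;

    WarmablePool(Supplier<C> connector, int minIdle) {
        this.connector = connector;
        this.minIdle = minIdle;
    }

    /** Opens connections until minIdle are ready; call this before the service is published. */
    void prepare() {
        while (idle.size() < minIdle) {
            idle.add(connector.get());
        }
    }

    /** Reuses an idle connection, or pays the connection cost on the request path. */
    C borrow() {
        C connection = idle.poll();
        return connection != null ? connection : connector.get();
    }

    void giveBack(C connection) {
        idle.add(connection);
    }

    int idleCount() {
        return idle.size();
    }
}
```

If `prepare()` runs during application start-up, the first business requests borrow connections that already exist. Without it, each early request opens its own connection while the caller waits.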

- A few more words on warm-up

Why warm up? Alibaba Cloud even provides systematic product features for it, such as lossless online and the warm-up mode of traffic protection, because warm-up is a very important part of keeping the system stable during a big promotion. Warm-up helps the system get into big promotion condition. The process is like a 100-meter sprint: other businesses follow their natural traffic, like a long-distance run, but a sprint requires a warm-up. Without one, serious problems such as cramps and strains can occur right at the start.

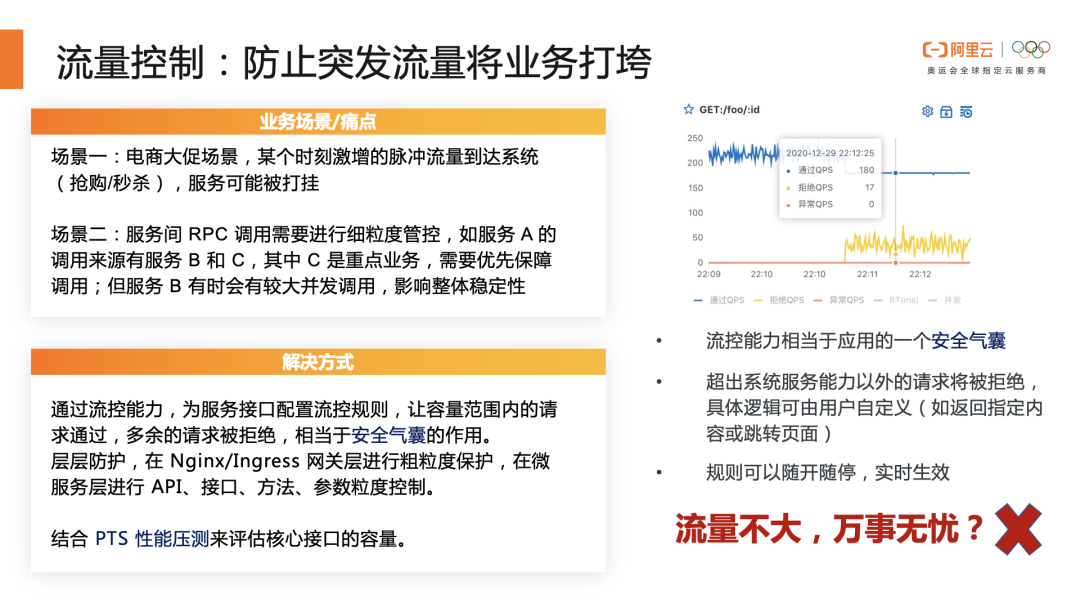

Flow control and rate limiting

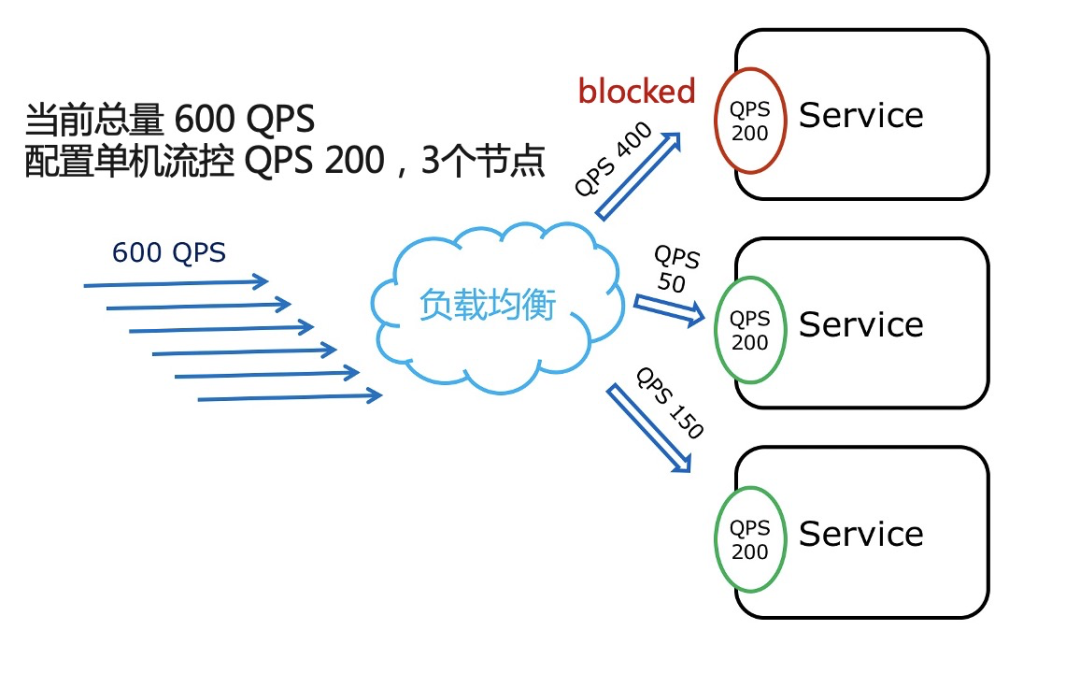

Flow control is the most common and most direct way to keep microservices stable. Every system and service has an upper limit on the load it can carry. The idea of flow control is simple: when the QPS of requests to an interface exceeds a certain limit, the excess requests are rejected so that the system is not overwhelmed by sudden traffic. The most common solution on the market is flow control in the single-machine dimension. For example, if a PTS performance test estimates the capacity limit of an interface at 100 QPS and the service has 10 instances, each instance is configured with a single-machine limit of 10 QPS. In many cases, however, traffic distribution is uncertain, and single-machine flow control then performs poorly.
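As an illustration of single-machine flow control, the sketch below rejects requests once a per-second limit is reached. It uses a simple fixed one-second window, which is only one of several possible algorithms and is not claimed to be what MSE uses; the class name and the injectable clock are assumptions made so the behavior is easy to follow and test.

```java
import java.util.function.LongSupplier;

// Hypothetical single-machine limiter based on a fixed one-second window.
final class FixedWindowLimiter {
    private final int limitPerSecond;
    private final LongSupplier clockMillis;  // injectable clock, e.g. System::currentTimeMillis
    private long windowStart;
    private int count;

    FixedWindowLimiter(int limitPerSecond, LongSupplier clockMillis) {
        this.limitPerSecond = limitPerSecond;
        this.clockMillis = clockMillis;
        this.windowStart = clockMillis.getAsLong();
    }

    /** Returns true if the request may proceed, false if it exceeds the limit. */
    synchronized boolean tryAcquire() {
        long now = clockMillis.getAsLong();
        if (now - windowStart >= 1000) {
            windowStart = now;   // a new one-second window begins
            count = 0;
        }
        if (count >= limitPerSecond) {
            return false;        // over the limit: reject the excess request
        }
        count++;
        return true;
    }
}
```

With the numbers from the text, each of the 10 instances would create `new FixedWindowLimiter(10, System::currentTimeMillis)` and reject a request whenever `tryAcquire()` returns false.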

Typical Scenario 1: Precisely control the total number of downstream calls

Scenario: Service A needs to call the query interface of service B frequently, but the capacities of services A and B differ. Service B agrees to provide service A with at most 600 QPS of query capacity in total, enforced by means such as flow control.

Pain point: With a single-machine flow control strategy, the traffic from A may be distributed very unevenly across the instances of B because of the calling logic, the load balancing strategy, and so on. Some instances of service B that receive more traffic trigger single-machine flow control even though the overall limit has not been reached, and the SLA is missed. This unevenness often occurs when calling a dependent service or component (such as a database), and it is a typical scenario for cluster flow control: precisely controlling the total number of calls that a microservice cluster makes to a downstream service (or database, or cache).
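The pain point can be checked with simple arithmetic. The sketch below compares how many requests are accepted when each instance enforces its own share of the limit with how many are accepted under one cluster-wide limit. The class and the sample traffic figures are hypothetical; only the 600 QPS total comes from the scenario above.

```java
// Hypothetical comparison of per-instance thresholds with one cluster-wide threshold.
final class FlowControlComparison {
    private FlowControlComparison() {}

    /** Requests accepted per second when every instance enforces its own threshold. */
    static int acceptedPerInstance(int[] arrivingQps, int perInstanceLimit) {
        int accepted = 0;
        for (int qps : arrivingQps) {
            accepted += Math.min(qps, perInstanceLimit);
        }
        return accepted;
    }

    /** Requests accepted per second when one threshold applies to the cluster-wide total. */
    static int acceptedClusterWide(int[] arrivingQps, int clusterLimit) {
        int total = 0;
        for (int qps : arrivingQps) {
            total += qps;
        }
        return Math.min(total, clusterLimit);
    }
}
```

Suppose service B has three instances, so the 600 QPS agreement is split into 200 QPS each, and the traffic from A arrives as 350, 150, and 50 QPS. The total of 550 QPS is within the agreement, yet the first instance rejects 150 requests per second under per-instance limits, while a cluster-wide limit of 600 rejects none.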

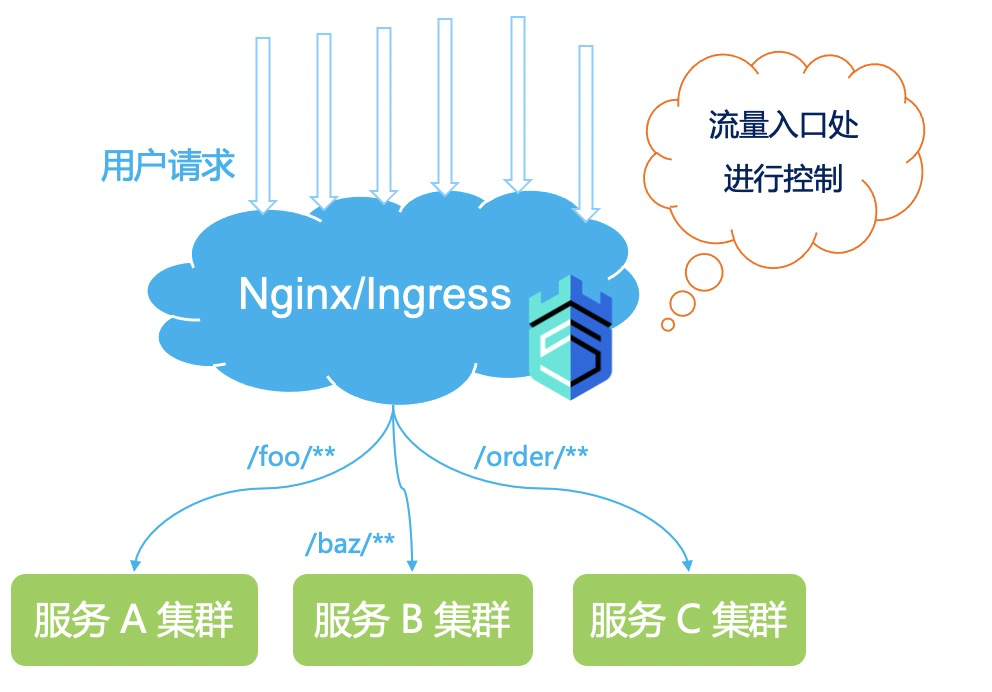

Typical Scenario 2: Total request control at the service link entry

Scenario: Ingress flow control is performed at the Nginx/Ingress gateway or the API gateway (Spring Cloud Gateway, Zuul). The goal is to precisely control the traffic of one API or a group of APIs, providing protection up front so that excess traffic never reaches the back-end system.

Pain point: With single-machine configuration, it is hard to track changes in the number of gateway machines, and uneven gateway traffic may make rate limiting ineffective. From the perspective of the gateway entry, configuring an overall threshold is the most natural approach.

- MSE Service Governance Cluster Flow Control

The flow control and degradation capability of MSE service governance provides the following features:

1. Professional protective measures:

- Ingress flow control: Flow control is applied according to service capacity. It is commonly used at application entry points such as gateways, front-end applications, and service providers.

- Hotspot isolation: Hotspots are isolated from normal traffic so that invalid hotspot traffic does not preempt the capacity reserved for normal traffic.

- Isolation/degradation of dependencies: Isolation and degradation between applications and within an application minimize the impact of unstable dependencies, thereby ensuring application stability.

- System protection: MSE flow control and degradation can dynamically adjust ingress traffic according to system metrics (such as load and CPU usage) to keep the system stable.

2. Rich traffic monitoring:

- Second-level traffic analysis, with dynamic rules pushed in real time.

- Orchestrated traffic dashboards that make core business scenarios clear at a glance.

3. Flexible access methods:

SDK, Java Agent, and container-based access are provided, with low intrusion and fast onboarding.

The cluster flow control of MSE service governance can precisely control the total real-time call volume of a service interface across the whole cluster. It solves the problems that make single-machine flow control ineffective: uneven traffic, frequent changes in the number of machines, and per-machine thresholds that become too small after the total is divided. Combined with single-machine flow control, it provides better traffic protection.

For the scenarios above, the cluster flow control of MSE service governance supports precise control of the total number of calls for Dubbo service calls, Web API access, and custom business logic alike, regardless of calling logic, traffic distribution, or instance distribution. It supports both high-volume flow control at hundreds of thousands of QPS and precise low-volume control in business dimensions at minute or hour granularity. The behavior after protection is triggered can be customized by the user (for example, returning custom content or objects).

- Prevent burst traffic from bringing down your business

With the MSE traffic protection capability enabled, you can configure rate limiting, isolation, and system protection rules for different interfaces and system metrics based on the stress test results, so that protection is in place in the shortest possible time and stays in place. When traffic in some special business scenario far exceeds expectations, flow control rules configured in advance reject or queue the excess traffic in time, protecting the front-end system from going down. In one such case, the response time of some core interfaces also rose sharply and downstream services hung, causing the RT of the entire link from the gateway to the back-end services to soar. MSE real-time monitoring and tracing were used to quickly locate the slow calls and unstable services, and timely flow control and concurrency control pulled the system back from the brink of collapse, after which the business quickly returned to normal. The process is like performing cardiac resuscitation on the system: it effectively keeps the business system from going down, and the effect is striking.

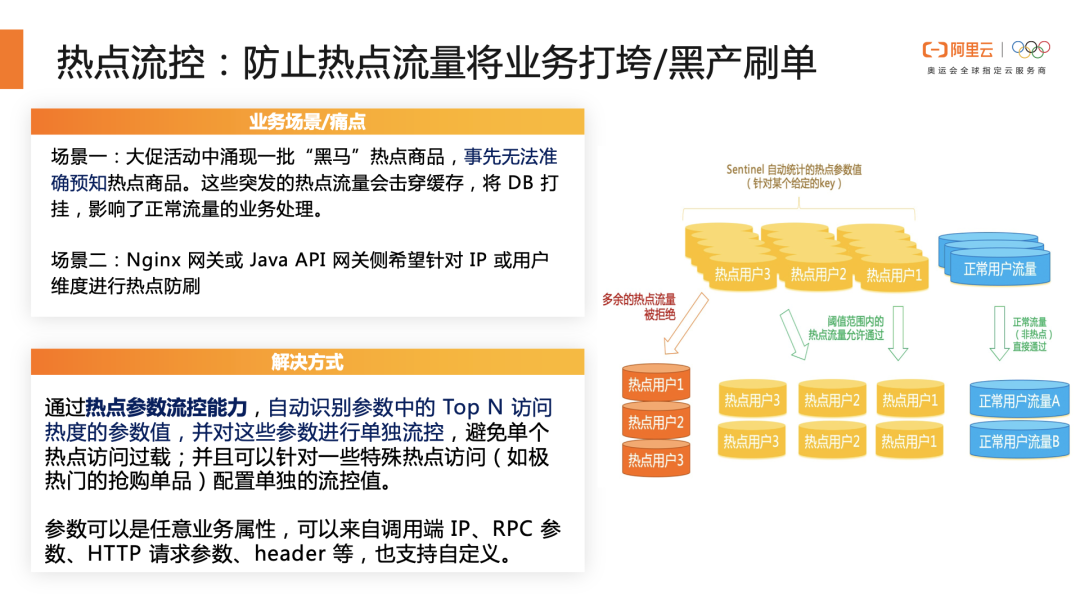

- Prevent hot traffic from bringing down your business

In a big promotion we also need to guard against hotspot traffic. Some "dark horse" hot commodities, or traffic that exceeds business expectations, may well bring down the system once it enters. Through hotspot parameter flow control, we can keep the traffic of hot users and hot commodities within the range estimated by our business model, which protects the traffic of normal users and the stability of the system.
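The idea of hotspot parameter flow control is that the limit applies per parameter value (for example per item ID or user ID) rather than per interface. The following is a minimal sketch of that idea only; `HotParamLimiter`, its window reset, and the sample keys are assumptions for illustration and do not describe how MSE implements the feature.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical per-parameter limiter: each parameter value gets its own budget per window.
final class HotParamLimiter {
    private final int limitPerKeyPerWindow;
    private final Map<String, AtomicInteger> counts = new ConcurrentHashMap<>();

    HotParamLimiter(int limitPerKeyPerWindow) {
        this.limitPerKeyPerWindow = limitPerKeyPerWindow;
    }

    /** Returns false once this parameter value has used up its budget for the current window. */
    boolean tryAcquire(String paramValue) {
        AtomicInteger counter = counts.computeIfAbsent(paramValue, key -> new AtomicInteger());
        return counter.incrementAndGet() <= limitPerKeyPerWindow;
    }

    /** Starts a new window; a scheduler would call this periodically, for example every second. */
    void resetWindow() {
        counts.clear();
    }
}
```

A "dark horse" item that suddenly receives far more requests than planned exhausts only its own budget, so requests for other items keep passing. A production limiter would also bound the number of tracked keys, which this sketch does not do.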

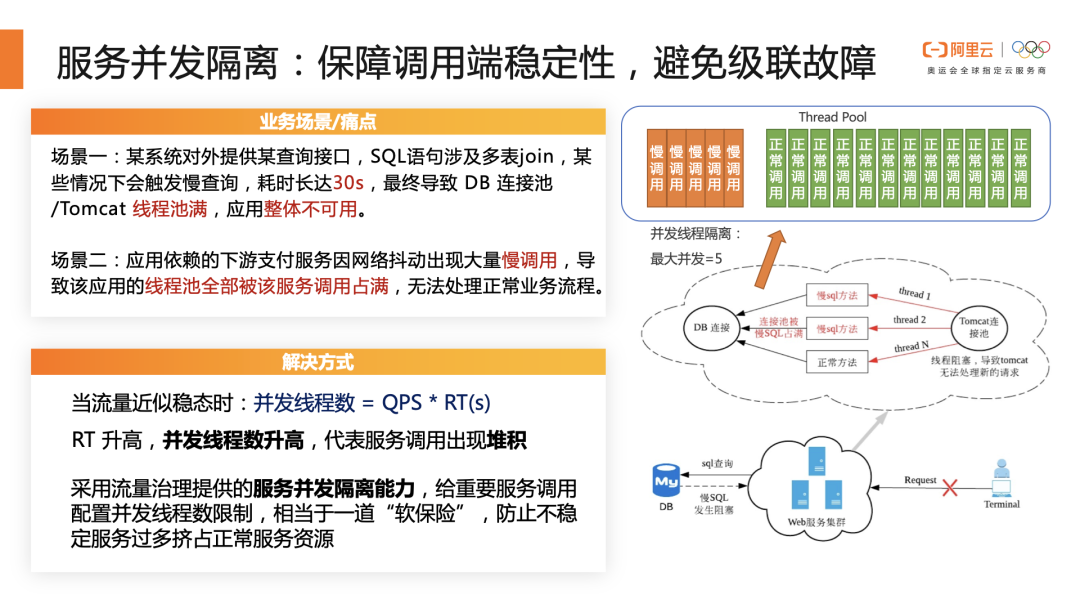

- Ensure the stability of the calling end and avoid cascading failures

We know that when traffic is roughly steady, the number of concurrent threads = QPS * RT (in seconds). When the RT of a downstream service we call increases, the number of concurrent threads soars and service calls pile up. This is a common problem in big promotions: a downstream payment service that we depend on produces a large number of slow calls because of network jitter, the application's thread pool becomes fully occupied by those calls, and normal business requests can no longer be handled. MSE real-time monitoring and tracing help us quickly locate slow calls and unstable services, find the root cause of the slowdown, and configure a concurrency isolation strategy for them, which effectively ensures the stability of our applications.
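A short sketch of both halves of this paragraph: the thread estimate from the formula, and a concurrency isolation guard that caps how many threads a single downstream dependency may occupy. The class is hypothetical and uses a plain `java.util.concurrent.Semaphore`; it illustrates the technique and is not MSE's implementation.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

// Hypothetical concurrency isolation (bulkhead) around one downstream dependency.
final class ConcurrencyIsolation {
    /** Threads busy in steady state: QPS multiplied by response time in seconds. */
    static double concurrentThreads(double qps, double rtSeconds) {
        return qps * rtSeconds;
    }

    private final Semaphore permits;

    ConcurrencyIsolation(int maxConcurrentCalls) {
        this.permits = new Semaphore(maxConcurrentCalls);
    }

    /** Runs the downstream call if a permit is free; otherwise returns the fallback at once. */
    <T> T call(Supplier<T> downstream, Supplier<T> fallback) {
        if (!permits.tryAcquire()) {
            return fallback.get();   // the dependency already holds its full share of threads
        }
        try {
            return downstream.get();
        } finally {
            permits.release();
        }
    }
}
```

At 1,000 QPS and an RT of 0.05 s, about 50 threads are busy. If jitter pushes the RT to 2 s, the same traffic needs about 2,000 threads, which no ordinary thread pool has. Capping the slow dependency, for example with `new ConcurrencyIsolation(100)`, keeps the remaining threads free for normal business requests.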

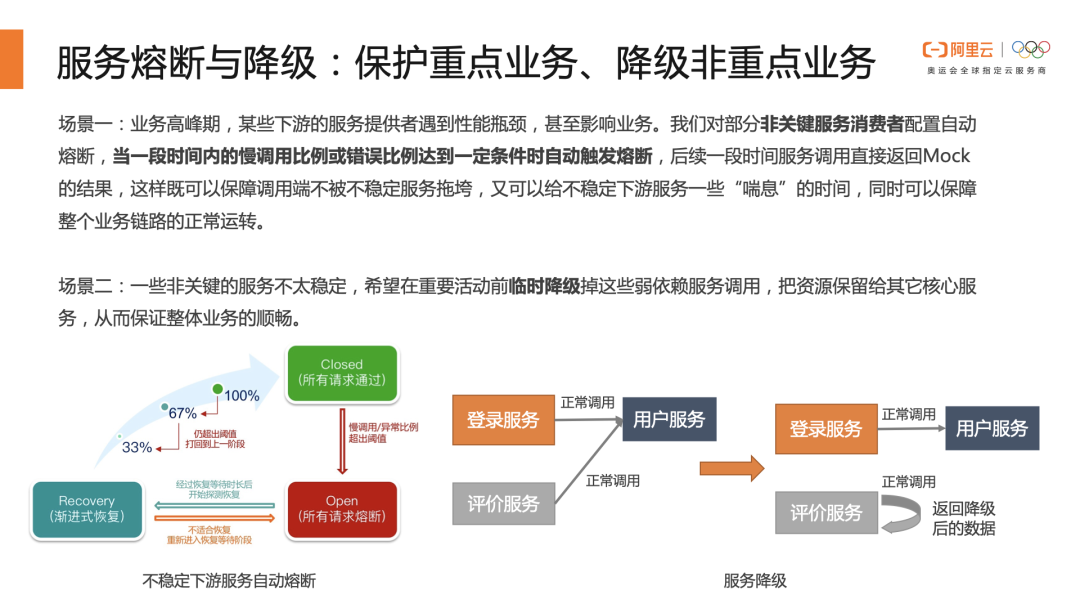

- Protect key businesses from avalanches

An avalanche is a frightening thing. Once it happens, there is often nothing we can do but watch the applications go down one by one. During business peaks, some downstream service providers hit performance bottlenecks and may even affect the business. For non-critical service consumers we can configure automatic circuit breaking: when the proportion of slow calls or errors within a time window reaches a configured condition, the circuit breaker trips automatically, and for a period of time service calls directly return a mock result. This keeps the caller from being dragged down by the unstable service, gives the unstable downstream service some "breathing" time, and keeps the whole business link running normally.
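The circuit breaking behavior described above can be sketched as a small state machine. This is a simplified illustration under stated assumptions: the class and its parameters are hypothetical, it counts only errors (not slow calls), it keeps cumulative counts instead of a sliding window, and it closes again after a fixed pause without a separate probing state.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Hypothetical circuit breaker: trips on error ratio and serves a mock result while open.
final class SimpleCircuitBreaker {
    private final double errorRatioThreshold;
    private final int minCalls;              // do not trip before this many calls are seen
    private final long openMillis;           // how long calls are short-circuited
    private final LongSupplier clockMillis;  // injectable clock, e.g. System::currentTimeMillis
    private int calls;
    private int failures;
    private long openedAt = -1;              // -1 means the breaker is closed

    SimpleCircuitBreaker(double errorRatioThreshold, int minCalls, long openMillis,
                         LongSupplier clockMillis) {
        this.errorRatioThreshold = errorRatioThreshold;
        this.minCalls = minCalls;
        this.openMillis = openMillis;
        this.clockMillis = clockMillis;
    }

    <T> T call(Supplier<T> downstream, Supplier<T> mock) {
        if (!allowRequest()) {
            return mock.get();               // open: give the downstream time to recover
        }
        try {
            T result = downstream.get();
            record(false);
            return result;
        } catch (RuntimeException e) {
            record(true);
            return mock.get();
        }
    }

    private synchronized boolean allowRequest() {
        if (openedAt < 0) {
            return true;
        }
        if (clockMillis.getAsLong() - openedAt < openMillis) {
            return false;
        }
        openedAt = -1;                       // pause is over: close and start counting afresh
        calls = 0;
        failures = 0;
        return true;
    }

    private synchronized void record(boolean failed) {
        calls++;
        if (failed) {
            failures++;
            if (calls >= minCalls && (double) failures / calls >= errorRatioThreshold) {
                openedAt = clockMillis.getAsLong();
            }
        }
    }
}
```

A non-critical consumer would wrap its downstream call as `breaker.call(() -> client.query(id), () -> defaultValue)`, where `client`, `query`, and `defaultValue` are placeholders for the real call and its mock result.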

Big promotion summary

Every big promotion is a valuable experience. Afterwards, summarizing and reviewing what was learned is indispensable. We need to collect and sort out the system's peak metrics, its bottlenecks and shortcomings, and the pitfalls we ran into, and think about what can be retained to help prepare for the next big promotion, so that the next one does not have to start from scratch and its user experience can be even smoother.

A big promotion still carries many uncertainties: its traffic and its user behavior are uncertain. How do we turn uncertain risk into something relatively certain? The big promotion is a project, and the methodology of preparing for it is to turn its uncertainty into certainty, using that methodology together with product best practices to make the promotion a success. I wish everyone a smooth 618 and rock-solid systems. For non-stop computing services and always-on applications, let's keep fighting!

