I Background

1 Introduction

The concept of the knowledge graph was first proposed by Google in 2012 with the aim of building a more intelligent search engine, and it began to spread through academia and industry after 2013. With the rapid development of artificial intelligence, knowledge graphs are now widely used in search, recommendation, advertising, risk control, intelligent scheduling, speech recognition, robotics, and other fields.

2 Development status

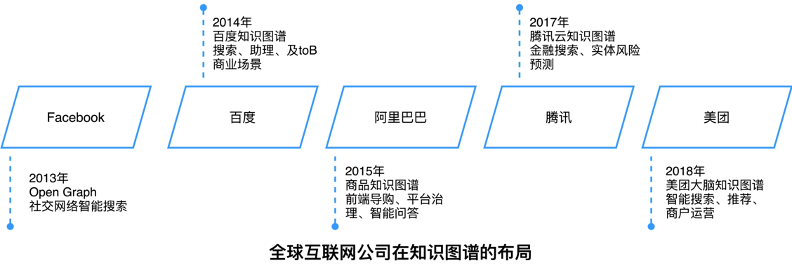

As a core technology driving artificial intelligence, the knowledge graph can reduce deep learning's dependence on massive training data and large-scale computing power. It adapts well to a wide range of downstream tasks and offers good interpretability. As a result, large Internet companies around the world are actively building their own knowledge graphs.

For example, in 2013 Facebook released Open Graph, which was applied to intelligent search on its social network; in 2014 Baidu launched its knowledge graph, used mainly in search, assistant, and toB business scenarios; in 2015 Alibaba launched its product knowledge graph, which plays a key role in front-end shopping guides, platform governance, and intelligent Q&A; in 2017 Tencent launched Tencent Cloud Knowledge Graph, which supports scenarios such as financial search and entity risk prediction; and in 2018 Meituan launched the Meituan Brain knowledge graph, which has been deployed in multiple businesses such as search, recommendation, and intelligent merchant operations.

3 Objectives and benefits

At present, domain knowledge graphs are concentrated mainly in commercial fields such as e-commerce, healthcare, and finance, while there is no systematic methodology for building semantic networks and knowledge graphs of automotive knowledge. Taking the automotive domain as an example, this article shows how to build a domain knowledge graph from scratch around entities and relationships such as car series, models, dealers, manufacturers, and brands, explains each step and method of the construction process in detail, and introduces several typical applications built on this graph.

The data source is the Autohome website. Autohome is an automotive service platform made up of sections such as shopping guides, news, reviews, and owner word-of-mouth, and it has accumulated a large amount of automotive data covering viewing, buying, and using cars. The knowledge graph organizes and mines this car-centered content, provides rich knowledge, and depicts user interests in a structured and accurate way. It supports recommendation in several dimensions, including cold start, recall, ranking, and display, and has improved business results.

II Graph Construction

1 Construction challenges

A knowledge graph is a semantic representation of the real world. Its basic units are triples of the form [entity-relationship-entity] and [entity-attribute-attribute value], and entities are connected to one another through relationships to form a semantic network. Building a graph poses significant challenges, but once built, it delivers rich value in scenarios such as data analysis, recommendation, and interpretability.
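As a minimal sketch of the two triple forms just described, the snippet below stores a few hypothetical automotive facts as Python tuples and links them into a small semantic network. The entity names, relation names, and the `build_network`/`neighbors_within` helpers are illustrative assumptions, not Autohome's actual data model.

```python
from collections import defaultdict

# Hypothetical [entity-relationship-entity] triples
RELATION_TRIPLES = [
    ("BMW X3", "belongs_to_brand", "BMW"),
    ("2022 BMW X3", "model_of", "BMW X3"),
    ("BMW", "made_by", "BMW Brilliance"),
]

# Hypothetical [entity-attribute-attribute value] triples
ATTRIBUTE_TRIPLES = [
    ("BMW X3", "level", "mid-size SUV"),
    ("2022 BMW X3", "model_year", 2022),
]


def build_network(relation_triples, attribute_triples):
    """Index relations as out-edges and attributes as per-entity key/value maps."""
    edges = defaultdict(list)       # entity -> [(relation, entity), ...]
    attributes = defaultdict(dict)  # entity -> {attribute: value}
    for head, relation, tail in relation_triples:
        edges[head].append((relation, tail))
    for entity, attribute, value in attribute_triples:
        attributes[entity][attribute] = value
    return edges, attributes


def neighbors_within(edges, start, hops):
    """Collect entities reachable from `start` by following at most `hops` relations."""
    frontier, seen = {start}, {start}
    for _ in range(hops):
        frontier = {tail for node in frontier for _, tail in edges.get(node, [])} - seen
        seen |= frontier
    return seen - {start}
```

Following two relations from "2022 BMW X3" reaches the car series and then the brand; relationships are what turn isolated facts into a connected network.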

Construction challenges:

- Schema is difficult to define: there is currently no unified and mature ontology construction process, and the definition of ontology in specific fields usually requires the participation of experts;

- Heterogeneous data types: the data sources for a knowledge graph are rarely of a single type; they include structured, semi-structured, and unstructured data. With differently structured data, knowledge transformation and mining become harder;

- Reliance on expert knowledge: a domain knowledge graph usually depends heavily on specialized knowledge. For example, the maintenance methods for a vehicle model involve machinery, electrical engineering, materials, mechanics, and other fields, and such relationships must be highly accurate. Keeping this knowledge correct requires a close combination of experts and algorithms for efficient graph construction;

- Data quality is not guaranteed: mined or extracted information must go through knowledge fusion or manual verification before it can be used as knowledge in downstream applications.

Benefits:

- Unified knowledge representation: integrating multi-source heterogeneous data forms a unified view;

- Rich semantic information: Through relational reasoning, new relational edges can be discovered and richer semantic information can be obtained;

- Strong interpretability: Explicit reasoning paths are more interpretable than deep learning results;

- High quality and continuous accumulation: Design a reasonable knowledge storage scheme according to business scenarios to realize knowledge update and accumulation.

2 Graph Architecture Design

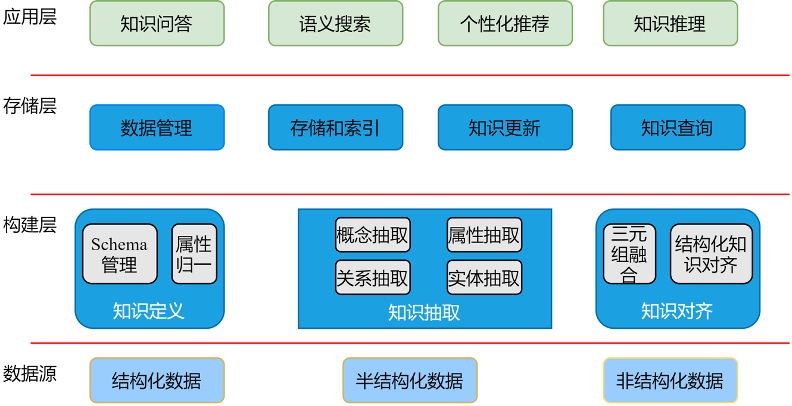

The technical architecture is divided into three layers: the construction layer, the storage layer, and the application (service) layer, as shown in the architecture diagram:

- Construction layer: including schema definition, structured data transformation, unstructured data mining, and knowledge fusion;

- Storage layer: including knowledge storage and indexing, knowledge update, metadata management, and support for basic knowledge query;

- Service layer: Including intelligent reasoning, structured query and other business-related downstream application layers.

3 Specific construction steps and processes

According to the architecture diagram, the specific construction process can be divided into four steps: ontology design, knowledge acquisition, knowledge storage, and application service design and use.

3.1 Ontology Construction

An ontology is a commonly accepted collection of concepts. Ontology construction means building the ontology structure and knowledge framework of the knowledge graph according to the definition of the ontology.

The main reasons for constructing graphs based on ontology are as follows:

- Clarify professional terms, relationships, and their domain axioms. A piece of data can be written to the knowledge graph only if it conforms to the entity objects and types predefined by the Schema.

- Separate domain knowledge from operational knowledge. The Schema gives a macro-level view of the graph architecture and related definitions, with no need to summarize and organize them from the triples.

- Achieve a degree of domain knowledge reuse. Before building an ontology, first check whether a related ontology already exists, so that it can be refined and extended instead, achieving twice the result with half the effort.

- Defining the graph on the basis of an ontology avoids a disconnect between the graph and its applications, as well as situations where modifying the graph schema costs more than rebuilding it. For example, storing both "BMW X3" and "2022 BMW X3" as car entities may confuse instance relationships and make the graph hard to use in applications. This can be avoided by defining "car" as a class entity and subdividing it into the subclasses "car series" and "model".
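To make the "only data that conforms to the Schema enters the graph" rule concrete, here is a minimal sketch of a write gate. The class names, relation signatures, and typed instances are hypothetical; they mirror the "car series" versus "model" split from the BMW X3 example rather than the actual Autohome ontology.

```python
# Hypothetical ontology fragment: each relation's allowed (domain, range) classes.
# "CarSeries" and "Model" are separate subclasses, as in the BMW X3 example.
RELATION_SIGNATURES = {
    "has_model": ("CarSeries", "Model"),
    "belongs_to_brand": ("CarSeries", "Brand"),
    "made_by": ("Brand", "Manufacturer"),
}

# Hypothetical typed instances
ENTITY_TYPES = {
    "BMW X3": "CarSeries",
    "2022 BMW X3": "Model",
    "BMW": "Brand",
}


def conforms_to_schema(triple, entity_types=ENTITY_TYPES, signatures=RELATION_SIGNATURES):
    """Accept a triple only if its relation is defined and both ends have the declared classes."""
    head, relation, tail = triple
    if relation not in signatures:
        return False
    domain, range_ = signatures[relation]
    return entity_types.get(head) == domain and entity_types.get(tail) == range_


def admit(triples):
    """Split incoming triples into those that may be written to the graph and those rejected."""
    accepted = [t for t in triples if conforms_to_schema(t)]
    rejected = [t for t in triples if not conforms_to_schema(t)]
    return accepted, rejected
```

Under this schema, linking a model directly to a brand is rejected because `belongs_to_brand` is declared only for car series; an undeclared relation is rejected as well.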

By knowledge coverage, knowledge graphs can be divided into general knowledge graphs and domain knowledge graphs. There are many examples of general knowledge graphs, such as Google's Knowledge Graph and Microsoft's Satori and Probase, while domain graphs target specific industries such as finance and e-commerce. General graphs emphasize breadth and integrate a larger number of entities, but their accuracy requirements are lower, and it is hard for them to use the axioms, rules, and constraints of an ontology library for reasoning. Domain graphs cover a narrower range of knowledge but in greater depth, and are usually built for a particular professional field.

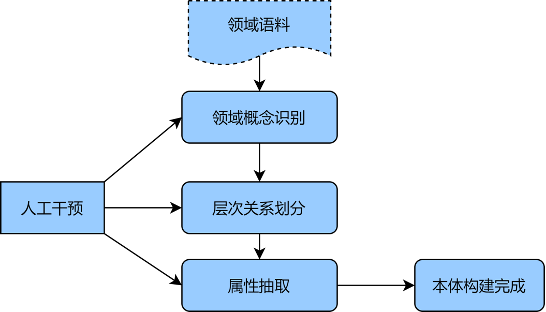

Because of the accuracy requirements, domain ontologies tend to be built manually, with representative methods such as the seven-step method and the IDEF5 method [1]. These methods analyze and summarize domain knowledge to construct an ontology that fits the application's purpose and scope, then optimize and verify it to obtain a first version of the ontology definition. A broader domain ontology can be obtained by supplementing it from unstructured corpora. Since manual construction is labor-intensive, this article takes the automotive domain as an example and presents a semi-automatic ontology construction method, with the following steps:

- First, collect a large unstructured automotive corpus (such as car Q&A and new-car shopping guide articles) as the initial set of individual concepts, and use statistical methods or unsupervised models (TF-IDF, BERT, etc.) to obtain character-level and word-level features;

- Second, use the BIRCH clustering algorithm to divide concepts into levels and build an initial hierarchy of concept relationships; then manually check and summarize the clustering results to obtain the ontology's equivalent concepts and hypernym-hyponym concepts;

- Finally, use a convolutional neural network combined with distant supervision to extract entity relationships for the ontology's attributes, supplemented by manual identification of class and attribute concepts, to construct the automotive domain ontology.
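The BIRCH step can be sketched as follows. This is a deliberately simplified, flat version of BIRCH's first phase: it keeps the clustering feature CF = (N, LS, SS) and the radius threshold, but omits the CF tree, branching factor, and global clustering step. The 2-d vectors are toy stand-ins for TF-IDF or BERT features; in practice scikit-learn's `sklearn.cluster.Birch` implements the full algorithm.

```python
import math


class ClusteringFeature:
    """BIRCH clustering feature CF = (N, LS, SS) summarizing one subcluster."""

    def __init__(self, dim):
        self.n = 0
        self.ls = [0.0] * dim  # linear sum of member vectors
        self.ss = 0.0          # sum of squared norms of member vectors
        self.members = []

    def centroid(self):
        return [x / self.n for x in self.ls]

    def radius_if_added(self, x):
        """Radius sqrt(SS/N - ||LS/N||^2) the subcluster would have after absorbing x."""
        n = self.n + 1
        ls = [a + b for a, b in zip(self.ls, x)]
        ss = self.ss + sum(v * v for v in x)
        return math.sqrt(max(ss / n - sum((v / n) ** 2 for v in ls), 0.0))

    def add(self, name, x):
        self.n += 1
        self.ls = [a + b for a, b in zip(self.ls, x)]
        self.ss += sum(v * v for v in x)
        self.members.append(name)


def birch_leaf_clustering(vectors, threshold):
    """Single pass: absorb each point into the nearest subcluster if its radius stays within threshold."""
    clusters = []
    for name, x in vectors.items():
        nearest = min(clusters, key=lambda cf: math.dist(cf.centroid(), x), default=None)
        if nearest is not None and nearest.radius_if_added(x) <= threshold:
            nearest.add(name, x)
        else:
            cf = ClusteringFeature(len(x))
            cf.add(name, x)
            clusters.append(cf)
    return [cf.members for cf in clusters]


# Toy 2-d stand-ins for concept features (in practice: TF-IDF or BERT vectors)
CONCEPT_VECTORS = {
    "sedan": [1.0, 0.0],
    "SUV": [0.9, 0.1],
    "MPV": [0.95, 0.05],
    "engine": [0.0, 1.0],
    "gearbox": [0.1, 0.9],
}
```

With `threshold=0.2`, body-style concepts and powertrain concepts land in separate subclusters, which is the kind of grouping a reviewer would then check and name when building the hierarchy.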

This method uses deep learning techniques such as BERT to better capture the internal relationships in the corpus, uses clustering to build each module of the ontology level by level, and adds manual intervention, so the initial ontology can be constructed quickly and accurately. The following figure is a schematic diagram of semi-automatic ontology construction:

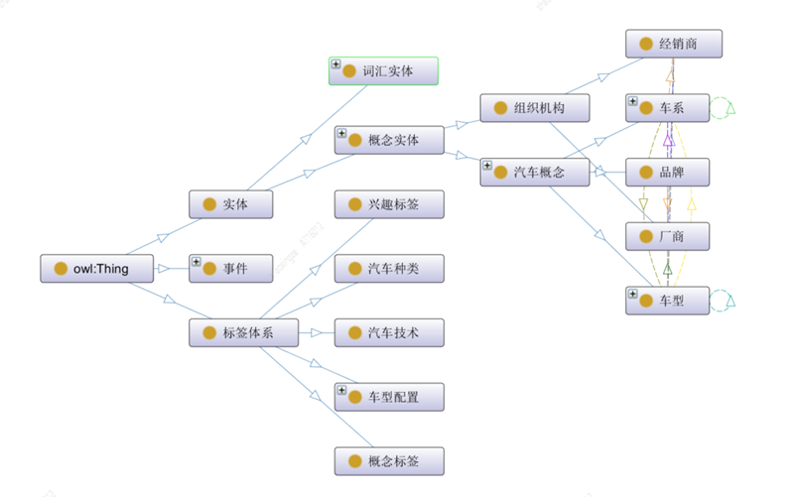

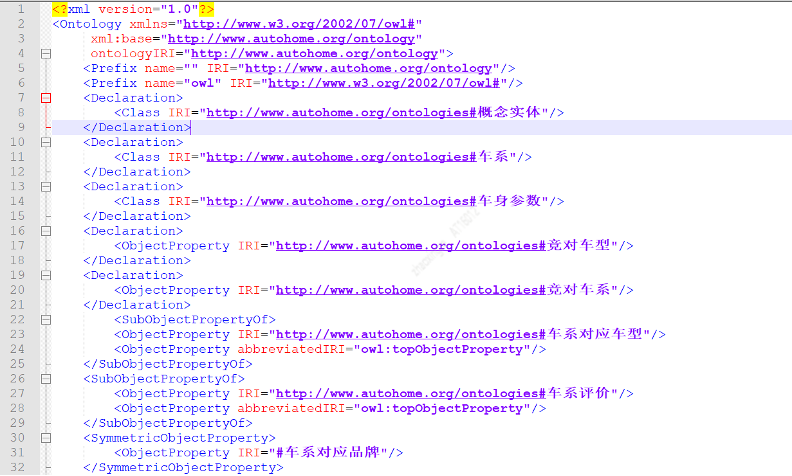

With the Protégé ontology construction tool [2], ontology concept classes, relationships, attributes, and instances can be built. The following figure shows an example visualization of ontology construction:

This article divides the top-level ontology concepts of the automotive domain into three categories: entities, events, and the label system.

- The entity class represents concepts with specific meanings, including lexical entities and automobile entities; automobile entities include sub-entity types such as organizations and automotive concepts;

- The label system represents labels of various dimensions, including content categories, concept labels, and interest labels, which characterize content at the material level;

- The event class represents objective facts involving one or more roles; different types of events are linked by evolutionary relationships.

Protégé can export Schema configuration files in several formats; the owl.xml configuration file is shown in the following figure. This file can be loaded directly into MySQL and JanusGraph to create the Schema automatically.
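As a rough illustration of turning the owl.xml export into a graph schema, the sketch below parses OWL declarations with Python's standard `xml.etree.ElementTree`. It assumes the export uses the OWL/XML syntax (Protégé also offers RDF/XML and other syntaxes), and the mapping Class to vertex label, ObjectProperty to edge label, DataProperty to property key is our own assumption, chosen to match the JanusGraph schema formula given later. `dataType(String.class)` is a placeholder type, and the sample ontology is hypothetical.

```python
import xml.etree.ElementTree as ET

OWL_NS = "{http://www.w3.org/2002/07/owl#}"

# Assumed convention: which JanusGraph schema element each OWL declaration kind becomes
KIND_TO_SCHEMA = {
    "Class": "vertex_labels",
    "ObjectProperty": "edge_labels",
    "DataProperty": "property_keys",
}

# Hypothetical OWL/XML fragment
SAMPLE_OWL_XML = """<?xml version="1.0"?>
<Ontology xmlns="http://www.w3.org/2002/07/owl#" ontologyIRI="http://example.org/auto">
    <Declaration><Class IRI="#CarSeries"/></Declaration>
    <Declaration><Class IRI="#Brand"/></Declaration>
    <Declaration><ObjectProperty IRI="#belongsToBrand"/></Declaration>
    <Declaration><DataProperty IRI="#price"/></Declaration>
</Ontology>"""


def owl_to_janus_schema(owl_xml):
    """Collect declared classes and properties, grouped by the schema element they map to."""
    root = ET.fromstring(owl_xml)
    schema = {bucket: [] for bucket in KIND_TO_SCHEMA.values()}
    for declaration in root.iter(OWL_NS + "Declaration"):
        for entity in declaration:
            bucket = KIND_TO_SCHEMA.get(entity.tag.replace(OWL_NS, ""))
            if bucket:
                # IRIs such as "#CarSeries" become the label/key name "CarSeries"
                schema[bucket].append(entity.get("IRI", "").lstrip("#"))
    return schema


def to_management_statements(schema):
    """Render JanusGraph management-API statements (property data type is a placeholder)."""
    lines = [f"mgmt.makeVertexLabel('{n}').make()" for n in schema["vertex_labels"]]
    lines += [f"mgmt.makeEdgeLabel('{n}').make()" for n in schema["edge_labels"]]
    lines += [f"mgmt.makePropertyKey('{n}').dataType(String.class).make()" for n in schema["property_keys"]]
    return lines
```

The generated lines would be run in a Gremlin console between `graph.openManagement()` and `mgmt.commit()`; real property keys would need data types taken from the ontology's ranges.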

3.2 Knowledge acquisition

The data sources of knowledge graphs usually include three types of data structures, namely structured data, semi-structured data, and unstructured data. For different types of data sources, the key technologies involved in knowledge extraction and the technical difficulties to be solved are different.

3.2.1 Transformation of structured knowledge

Structured data is the most direct source of knowledge for a graph. It can basically be used after a preliminary conversion and costs the least of all data types, so structured data is generally the first choice for graph data. Structured data may come from multiple databases, and converting it usually requires an ETL process. ETL stands for Extract, Transform, and Load: extraction reads data from the various original business systems and is the prerequisite for all later work; transformation converts the extracted data according to predesigned rules, unifying the originally heterogeneous data formats; loading imports the converted data into the data warehouse, incrementally or in full, according to a plan.
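A minimal sketch of the three ETL stages, using Python's built-in `sqlite3` as a stand-in for the intermediate table. The two source systems, their field names, and the `series_brand` table layout are hypothetical; the point is that each source keeps its own format until the transform step maps it onto one schema.

```python
import sqlite3

# Hypothetical raw rows from two business systems with different field names and units
SOURCES = {
    "dealer_db": [{"series_name": " Sylphy ", "brand_name": "Nissan", "price_yuan": 100000}],
    "spec_db": [{"series": "Corolla", "brand": "Toyota", "price_wan": 11.0}],
}


def extract(sources):
    """Extract: read raw rows from every source system."""
    for source_name, rows in sources.items():
        for row in rows:
            yield source_name, row


def transform(source_name, row):
    """Transform: map each source's format onto one schema (series, brand, price in 10,000 yuan)."""
    if source_name == "dealer_db":
        return row["series_name"].strip(), row["brand_name"].strip(), row["price_yuan"] / 10000
    if source_name == "spec_db":
        return row["series"].strip(), row["brand"].strip(), float(row["price_wan"])
    raise ValueError(f"unknown source: {source_name}")


def load(conn, records):
    """Load: upsert into an intermediate table, so reruns behave like incremental loads."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS series_brand (series TEXT PRIMARY KEY, brand TEXT, price REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO series_brand VALUES (?, ?, ?)", records)
    conn.commit()


def run_etl(conn, sources):
    load(conn, [transform(name, row) for name, row in extract(sources)])
    return conn.execute("SELECT series, brand, price FROM series_brand ORDER BY series").fetchall()
```

Usage: `run_etl(sqlite3.connect(":memory:"), SOURCES)` yields one normalized row per car series; running it again replaces rows by primary key instead of duplicating them.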

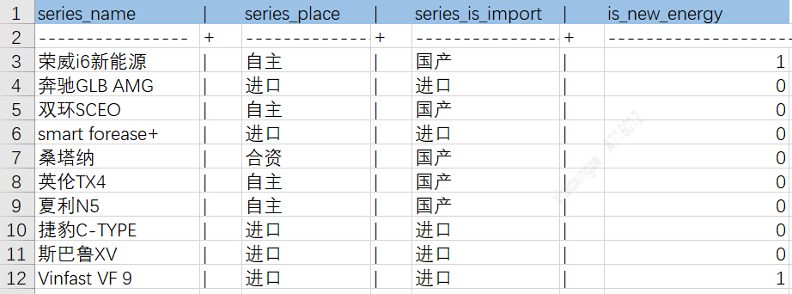

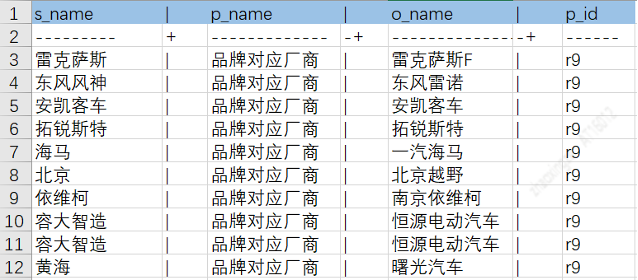

Through the ETL process above, data from different sources lands in intermediate tables, which simplifies subsequent knowledge storage. The following figure shows an example of the attribute and relationship tables for vehicle entities:

Car series and brand relationship table:

3.2.2 Unstructured Knowledge Extraction-Triple Extraction

In addition to structured data, there is also massive knowledge (triple) information in unstructured data. Generally speaking, the amount of unstructured data of an enterprise is much larger than that of structured data, and mining unstructured knowledge can greatly expand and enrich the knowledge graph.

Challenges of triple extraction algorithms

Problem 1: within a single domain, document content and formats are diverse, so a large amount of labeled data is needed, at high cost.

Problem 2: transfer between domains works poorly, and scaling across domains is costly.

Models are mostly built for specific industry scenarios, and their performance drops significantly when the scenario changes.

The solution is the Pre-train + Finetune paradigm. Pre-training: a heavyweight base lets the model "see more and know more", making full use of large-scale, multi-industry non-standard documents to train a unified pre-trained base that strengthens the model's ability to represent and understand all kinds of documents.

Fine-tuning: a lightweight document structuring algorithm is built on top of the pre-trained base to reduce labeling costs.

Pre-training methods for documents

With existing document pre-training models, BERT can encode an entire document when the text is short. Real documents, however, are usually long, and many of the attribute values to be extracted lie beyond the first 1,024 characters, so BERT encoding truncates them.

Advantages and disadvantages of pre-training methods for long text

Sparse Attention methods optimize Self-Attention to reduce its cost from O(n^2) to O(n), which greatly increases the maximum input length. However, although this raises the text length of a typical model from 512 to 4,096, it still cannot fully solve text truncation and fragmentation. Baidu's ERNIE-DOC [3] uses a Recurrence Transformer and can in theory model text of unlimited length, but because all of the text must be fed in, it is very time-consuming.
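To show where the O(n^2) to O(n) saving comes from, here is a sketch of sliding-window (local) attention, one common sparse-attention pattern (used, for example, in Longformer alongside global tokens). The text above does not say which sparse pattern is meant, so treat this as an illustration: each position scores at most 2w + 1 keys, so the number of scores grows with n·w instead of n^2.

```python
import math


def sliding_window_attention(q, k, v, window):
    """Scaled dot-product attention where position i only attends to keys j with |i - j| <= window.

    Returns the attended outputs and how many query-key scores were computed.
    """
    n, d = len(q), len(q[0])
    outputs, scored_pairs = [], 0
    for i in range(n):
        lo, hi = max(0, i - window), min(n, i + window + 1)
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d) for j in range(lo, hi)]
        scored_pairs += hi - lo
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]  # numerically stable softmax
        total = sum(weights)
        outputs.append([
            sum(w * v[j][c] for w, j in zip(weights, range(lo, hi))) / total
            for c in range(len(v[0]))
        ])
    return outputs, scored_pairs
```

For 8 positions, a window of 1 computes 22 scores, while a window covering the whole sequence computes all 64; the gap widens linearly as documents get longer.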

Neither of these long-text pre-training methods considers document characteristics such as spatial (Spatial) and visual (Visual) information, and their text-based pre-training tasks are designed for plain text as a whole rather than for the logical structure of documents.

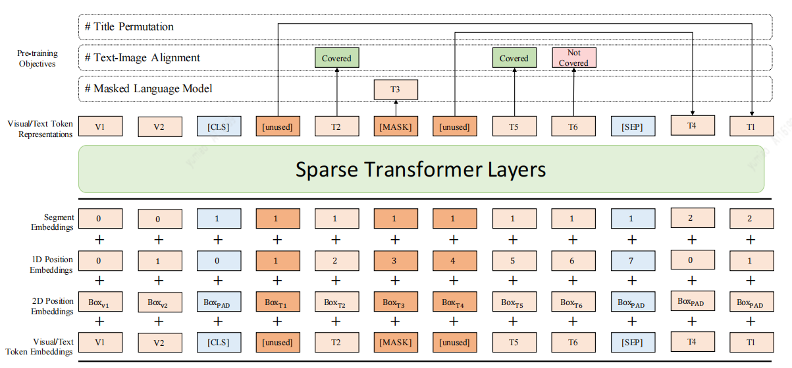

To address these shortcomings, we introduce the long-document pre-training model DocBert [4]. DocBert is pre-trained on large-scale (millions of) unlabeled documents, with self-supervised learning tasks built on the documents' text semantics (Text), layout information (Layout), and visual features (Visual), so that the model better understands document semantics and structure.

- Layout-Aware MLM: incorporate the position and font size of text into the masked language model, enabling layout-aware semantic understanding of documents.

- Text-Image Alignment: Integrate the visual features of the document, reconstruct the masked text in the image, and help the model learn the alignment relationship between text, layout, and image modalities.

- Title Permutation: Construct title reconstruction tasks in a self-supervised manner to enhance the model's ability to understand the logical structure of documents.

- Sparse Transformer Layers: Use the Sparse Attention method to enhance the model's ability to process long documents.
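As a sketch of how Layout-Aware MLM training inputs might be constructed, the snippet below hides the text of a random subset of tokens while leaving each token's bounding box and font size visible, so the model must use layout to recover the text. This is our own simplified illustration, not DocBert's code: there is no tokenizer and no 80/10/10 replacement scheme, and the `LayoutToken` fields are assumptions.

```python
import random
from dataclasses import dataclass

MASK = "[MASK]"


@dataclass(frozen=True)
class LayoutToken:
    text: str
    bbox: tuple        # (x0, y0, x1, y1) position of the token on the page
    font_size: float


def layout_aware_mask(tokens, mask_prob=0.15, seed=0):
    """Hide the text of a random subset of tokens while keeping their layout features.

    Returns the model inputs and a {position: original_text} map of prediction targets.
    """
    rng = random.Random(seed)
    n_mask = max(1, round(len(tokens) * mask_prob))
    masked_positions = set(rng.sample(range(len(tokens)), n_mask))
    inputs, targets = [], {}
    for i, tok in enumerate(tokens):
        if i in masked_positions:
            targets[i] = tok.text
            inputs.append(LayoutToken(MASK, tok.bbox, tok.font_size))  # layout stays visible
        else:
            inputs.append(tok)
    return inputs, targets
```

Because the masked token keeps a large font size or a title-like position, the model can learn that such slots tend to hold headings, which is the layout awareness the task aims for.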

3.2.3 Mining categories, concept tags, and interest-word tags, and linking them to car series and entities

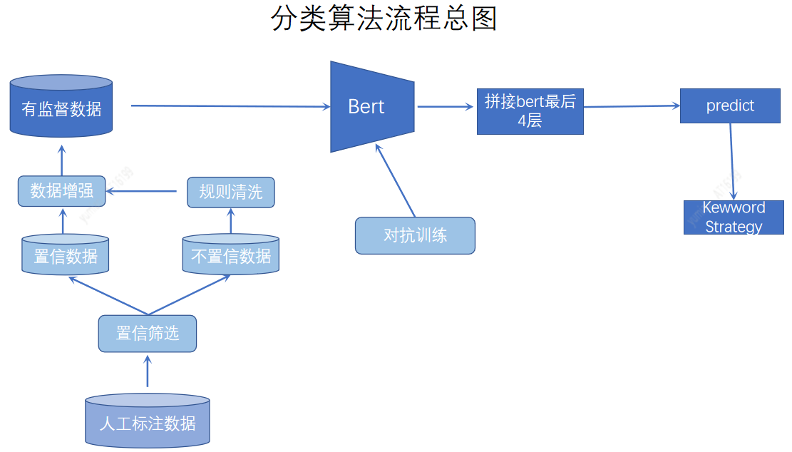

In addition to extracting triples from structured and unstructured text, Autohome also mines the categories, concept tags, and interest-word tags contained in content materials and links the materials to vehicle entities, bringing new knowledge to the automotive knowledge graph. The following describes Autohome's content understanding work and thinking in terms of categories, concept tags, and interest-word tags.

The classification system is the foundation of content characterization and divides materials at a coarse granularity. The unified content taxonomy is mostly defined manually, and materials are assigned to its categories by AI models. For classification, we use active learning to label the harder samples, and use data augmentation, adversarial training, and keyword fusion to improve classification performance.
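"Using active learning to label the harder data" is often implemented as uncertainty sampling. The sketch below picks the unlabeled samples whose predicted class distribution has the highest entropy; entropy is our assumed uncertainty measure (least-confidence or margin are common alternatives), and the sample ids and probabilities are made up.

```python
import math


def prediction_entropy(probs):
    """Shannon entropy of a predicted class distribution; higher means the model is less sure."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, k):
    """Uncertainty sampling: return the k unlabeled samples whose predictions have the highest entropy.

    predictions maps sample id -> class probabilities from the current classifier.
    """
    ranked = sorted(predictions, key=lambda sid: prediction_entropy(predictions[sid]), reverse=True)
    return ranked[:k]
```

A sample predicted as `[0.34, 0.33, 0.33]` is sent to annotators before one predicted as `[0.98, 0.01, 0.01]`; after labeling, the classifier is retrained and the selection repeats.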

Concept labels sit between categories and interest-word labels in granularity: they are finer than categories and describe points of interest more completely than interest words, refining label granularity. Rich, specific material tags make it easier to optimize tag-based search and recommendation models, and can be used to promote content through tags to attract users and secondary traffic. Concept labels are mined by machine, combining queries and other important data with an analysis of how general each candidate is; after manual review, the concept label set is obtained, and a multi-label model performs the classification.

Interest-word tags are the finest-grained tags. They map to user interests and support better personalized recommendation for users with different interest preferences. Keyword mining combines several interest-word mining methods: KeyBERT extracts key substrings, and TextRank, PositionRank, SingleRank, TopicRank, MultipartiteRank, and similar methods, together with syntactic analysis, generate interest-word candidates.
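Of the candidate generators listed above, TextRank is the simplest to sketch: build a co-occurrence graph over candidate words and run PageRank on it. The version below assumes the title has already been segmented and filtered to content words (real Chinese text would first need word segmentation and part-of-speech filtering), and the window, damping, and iteration count are conventional defaults rather than Autohome's settings.

```python
def textrank_keywords(tokens, window=2, damping=0.85, iterations=50, top_k=3):
    """Rank candidate words with TextRank (PageRank over a word co-occurrence graph)."""
    # Undirected edge between two distinct words that appear fewer than `window` positions apart
    neighbors = {t: set() for t in tokens}
    for i, word in enumerate(tokens):
        for j in range(i + 1, min(i + window, len(tokens))):
            other = tokens[j]
            if other != word:
                neighbors[word].add(other)
                neighbors[other].add(word)
    scores = {t: 1.0 for t in neighbors}
    for _ in range(iterations):
        # Each word shares its current score equally among its neighbors
        scores = {
            t: (1 - damping) + damping * sum(scores[u] / len(neighbors[u]) for u in neighbors[t])
            for t in neighbors
        }
    return sorted(scores, key=scores.get, reverse=True)[:top_k]
```

On the toy token list `["fuel", "consumption", "engine", "fuel", "consumption", "test", "engine", "noise"]`, the best-connected words "engine" and "consumption" rank highest, while the peripheral "noise" drops out of the top three.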

The mined words are often highly similar to one another, so synonyms must be identified to make manual work more efficient. We therefore identify semantic similarity automatically through clustering, using word2vec and BERT embeddings along with other hand-crafted features as clustering features. After clustering and manual correction, we generate a batch of high-quality keywords offline.
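The text does not name the clustering method, so here is one simple option, stated plainly as a substitute: link any two words whose embedding cosine similarity reaches a threshold and take connected components with union-find. The 3-d vectors stand in for word2vec or BERT embeddings, and the 0.9 threshold is an assumption to be tuned before the manual correction pass.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def group_synonyms(embeddings, threshold=0.9):
    """Group words whose embeddings are at least `threshold` cosine-similar (transitively)."""
    words = list(embeddings)
    parent = {w: w for w in words}

    def find(w):
        while parent[w] != w:
            parent[w] = parent[parent[w]]  # path halving keeps trees shallow
            w = parent[w]
        return w

    for i, a in enumerate(words):
        for b in words[i + 1:]:
            if cosine(embeddings[a], embeddings[b]) >= threshold:
                parent[find(a)] = find(b)
    groups = {}
    for w in words:
        groups.setdefault(find(w), []).append(w)
    return list(groups.values())


# Toy stand-ins for word embeddings
WORD_VECTORS = {
    "fuel consumption": [1.0, 0.1, 0.0],
    "fuel economy": [0.95, 0.15, 0.0],
    "cabin noise": [0.0, 0.2, 1.0],
}
```

Each resulting group is a synonym candidate set for reviewers, who only need to confirm a canonical keyword per group instead of comparing every pair.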

Labels of all these granularities are still attached at the material level, so we need to associate them with cars. First, we compute the labels of each title/article, then identify the entities in the title/article to obtain label-entity pseudo-labels; finally, over a large corpus, labels with a high co-occurrence probability with an entity are marked as labels of that entity. These three tasks yield a rich and large set of labels. Associating them with car series and entities greatly enriches our automotive graph and establishes the car labels that media and users care about.
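The label-to-entity step can be sketched as counting co-occurrences over the pseudo-labeled corpus and keeping a label for an entity when P(label | entity) is high enough. Using this conditional probability as the "co-occurrence probability", and the `min_prob`/`min_support` cutoffs, are our assumptions; the documents below are made up.

```python
from collections import Counter


def link_labels_to_entities(docs, min_prob=0.5, min_support=2):
    """Keep a label for an entity when P(label | entity) over the corpus is high enough.

    docs: one (labels, entities) pair per title/article, i.e. the label-entity pseudo-labels.
    """
    entity_count = Counter()
    pair_count = Counter()
    for labels, entities in docs:
        for entity in set(entities):
            entity_count[entity] += 1
            for label in set(labels):
                pair_count[(entity, label)] += 1
    links = {}
    for (entity, label), count in pair_count.items():
        # min_support filters out pairs seen too rarely to trust
        if count >= min_support and count / entity_count[entity] >= min_prob:
            links.setdefault(entity, []).append(label)
    return {entity: sorted(labels) for entity, labels in links.items()}


# Hypothetical pseudo-labeled titles: (labels of the title, entities recognized in it)
DOCS = [
    ({"fuel-efficient"}, {"Sylphy"}),
    ({"fuel-efficient", "family car"}, {"Sylphy", "Corolla"}),
    ({"fuel-efficient"}, {"Sylphy"}),
    ({"sporty"}, {"Corolla"}),
]
```

Here "fuel-efficient" appears with the Sylphy in all three of its titles and is linked, while one-off pairs such as Corolla and "sporty" are filtered as noise.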

3.2.4 Improving labeling efficiency

As training sets grow, achieving better model quality while reducing the high cost and long cycle of labeling has become an urgent problem. First, semi-supervised learning can pre-train on massive unlabeled data. Then, active learning maximizes the value of labeled data by iteratively selecting highly informative samples for labeling. Finally, distant supervision can exploit existing knowledge and uncover correlations between tasks. For example, given the graph and a set of titles, NER training data can be constructed from the graph through distant supervision.
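A minimal sketch of the distant-supervision example: entity names taken from the graph are matched against titles, and matches become BIO tags that serve as (noisy) NER training data. Greedy longest match, pre-tokenized titles, and the `SERIES`/`BRAND` type names are assumptions for illustration.

```python
def distant_ner_labels(tokens, entity_dict):
    """BIO-tag a tokenized title by greedy longest match against entity names taken from the graph.

    entity_dict maps a tuple of tokens (an entity name) to its entity type.
    """
    labels = ["O"] * len(tokens)
    max_len = max((len(name) for name in entity_dict), default=0)
    i = 0
    while i < len(tokens):
        for length in range(min(max_len, len(tokens) - i), 0, -1):
            entity_type = entity_dict.get(tuple(tokens[i:i + length]))
            if entity_type is not None:
                labels[i] = "B-" + entity_type
                for j in range(i + 1, i + length):
                    labels[j] = "I-" + entity_type
                i += length
                break
        else:
            i += 1  # no entity starts at this token
    return labels


# Hypothetical entity names exported from the graph
GRAPH_ENTITIES = {("BMW", "X3"): "SERIES", ("BMW",): "BRAND"}
```

Longest match lets "BMW X3" win over the shorter brand name "BMW"; labels produced this way are noisy and are usually combined with a smaller manually checked set.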

3.3 Knowledge storage

Knowledge in a knowledge graph is represented in the RDF structure, whose basic unit is the fact. Each fact is a triple (S, P, O). In practice, knowledge graph storage can be divided by storage method into storage based on RDF table structures and storage based on property graph structures; industrial graph databases mostly use the property graph structure. Common storage systems include Neo4j, JanusGraph, OrientDB, and InfoGrid.
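The difference between the two storage structures can be sketched by converting (S, P, O) facts into a property graph: when the object is itself an entity the fact becomes an edge, and when it is a literal it becomes a property on the subject vertex. The facts below are hypothetical, with the Sylphy price taken from the query example later in this article.

```python
def triples_to_property_graph(triples, entities):
    """Turn (S, P, O) facts into a property graph.

    Objects that are known entities become edges; any other object is a literal
    and becomes a property of the subject vertex.
    """
    vertices = {entity: {} for entity in entities}
    edges = []
    for subject, predicate, obj in triples:
        if obj in entities:
            edges.append((subject, predicate, obj))
        else:
            vertices.setdefault(subject, {})[predicate] = obj
    return vertices, edges


FACTS = [
    ("Sylphy", "brand", "Nissan"),
    ("Sylphy", "price_10k_yuan", 10),
    ("Nissan", "country", "Japan"),
]
```

In RDF every fact, including the price, is a separate triple; in the property graph the price folds into the Sylphy vertex, which is why property graphs need fewer hops for attribute lookups.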

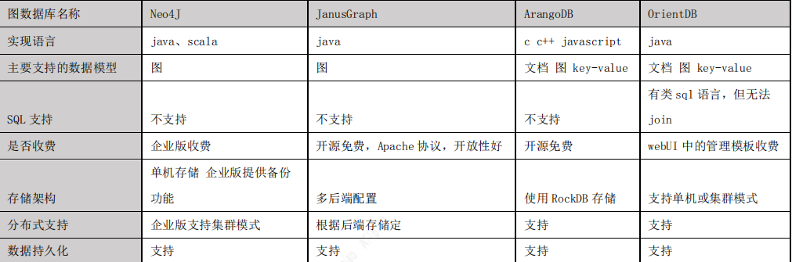

Graph database selection

After comparing JanusGraph with mainstream graph databases such as Neo4j, ArangoDB, and OrientDB, we chose JanusGraph as the project's graph database, mainly for the following reasons:

- Open source under the Apache 2.0 license, with good openness.

- Supports global graph analysis and batch graph processing with the Hadoop framework.

- Supports a very large number of concurrent transactions and operational graph processing. Transaction capacity scales out by adding machines, and complex queries on large graphs can be answered in milliseconds.

- Native support for the currently popular property graph data model described by Apache TinkerPop.

- Native support for the graph traversal language Gremlin.

The following figure is a comparison of mainstream graph databases

Introduction to JanusGraph

JanusGraph [5] is a graph database engine focused on compact graph serialization, rich graph data modeling, and efficient query execution. A JanusGraph schema is composed as follows:

janusgraph schema = vertex label + edge label + property keys

Note that property keys are what graph indexes are built on.

For better graph query performance, JanusGraph supports indexes, divided into Graph Indexes and Vertex-centric Indexes. Graph Indexes include Composite Indexes and Mixed Indexes.

Composite indexes support equality lookups only. They need no external index backend and are served by the primary storage backend (for example HBase, Cassandra, or BerkeleyDB).

Example:

mgmt = graph.openManagement()

name = mgmt.getPropertyKey('name')

age = mgmt.getPropertyKey('age')

mgmt.buildIndex('byNameAndAgeComposite', Vertex.class).addKey(name).addKey(age).buildCompositeIndex()  // build a composite index on "name-age"

mgmt.commit()

g.V().has('age', 30).has('name', 'Xiao Ming')  // find the vertex named Xiao Ming whose age is 30; this equality query is served by the composite index

Mixed indexes require an external index backend such as Elasticsearch (ES) and support multi-condition queries beyond equality (they also support equality queries, but composite indexes are faster for those). Depending on whether tokenization is required, mixed indexes are divided into full-text search and string search.
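As a conceptual analogy only (this is not how JanusGraph implements its indexes), the sketch below contrasts an exact-match table keyed on the full tuple of indexed values, which can answer only equality conditions on every key, with a sorted index that can answer range predicates the way a mixed index backend can. Class names and data are hypothetical.

```python
import bisect
from collections import defaultdict


class CompositeIndexSketch:
    """Exact-match index over a fixed tuple of keys: usable only when every key is given by equality."""

    def __init__(self, keys):
        self.keys = tuple(keys)
        self.table = defaultdict(set)

    def add(self, vertex_id, props):
        self.table[tuple(props[k] for k in self.keys)].add(vertex_id)

    def lookup(self, **equalities):
        if set(equalities) != set(self.keys):
            raise KeyError("a composite index needs an equality condition on every indexed key")
        return set(self.table.get(tuple(equalities[k] for k in self.keys), set()))


class RangeIndexSketch:
    """Sorted index on one key, standing in for the range predicates a mixed index supports."""

    def __init__(self, key):
        self.key = key
        self.entries = []  # sorted (value, vertex_id) pairs

    def add(self, vertex_id, props):
        bisect.insort(self.entries, (props[self.key], vertex_id))

    def open_range(self, low, high):
        """Vertex ids with low < value < high, like has('price', gt(8)).has('price', lt(12))."""
        start = bisect.bisect_right(self.entries, (low, float("inf")))
        end = bisect.bisect_left(self.entries, (high, float("-inf")))
        return [vertex_id for _, vertex_id in self.entries[start:end]]
```

The composite sketch refuses a lookup on `name` alone, mirroring the rule that a composite index is used only when all of its keys appear in equality conditions.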

JanusGraph data storage model

Understanding how JanusGraph stores data helps us make better use of it. JanusGraph stores graphs in adjacency list format: the graph is stored as a collection of vertices together with their adjacency lists, and a vertex's adjacency list contains all of its incident edges (and properties).

JanusGraph stores each adjacency list as a row in the underlying storage backend. The (64-bit) vertex ID, which JanusGraph assigns uniquely to each vertex, is the key of the row containing that vertex's adjacency list. Each edge and property is stored as a separate cell in the row, allowing efficient insertions and deletions. Consequently, the maximum number of cells per row in a given storage backend is also the maximum vertex degree JanusGraph can support on that backend.

If the storage backend supports key order, adjacency lists are ordered by vertex ID, and JanusGraph can assign vertex IDs so that the graph is effectively partitioned: IDs are assigned such that vertices that are frequently accessed together have IDs with small absolute differences.
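The row layout just described can be modeled with a toy in-memory store: the row key is the vertex ID, each edge or property is one sorted cell, an optional per-row cell limit caps the vertex degree, and a key-ordered scan reads vertices with nearby IDs together. This is a simplified model for intuition, not JanusGraph's serialization format; the cell tuples and IDs are hypothetical.

```python
import bisect


class AdjacencyListStore:
    """Toy row layout: row key = vertex id, one sorted cell per edge or property."""

    def __init__(self, max_cells_per_row=None):
        self.rows = {}
        self.max_cells_per_row = max_cells_per_row  # backend limit, hence maximum vertex degree

    def add_cell(self, vertex_id, cell):
        row = self.rows.setdefault(vertex_id, [])
        if self.max_cells_per_row is not None and len(row) >= self.max_cells_per_row:
            raise OverflowError(f"vertex {vertex_id} exceeds the backend's cells-per-row limit")
        bisect.insort(row, cell)  # keep cells sorted within the row

    def remove_cell(self, vertex_id, cell):
        row = self.rows.get(vertex_id, [])
        i = bisect.bisect_left(row, cell)
        if i < len(row) and row[i] == cell:
            row.pop(i)

    def adjacency(self, vertex_id):
        return list(self.rows.get(vertex_id, []))

    def scan(self, low_id, high_id):
        """Key-ordered range scan: vertices whose ids are close are read together."""
        return [vid for vid in sorted(self.rows) if low_id <= vid < high_id]
```

Adding or deleting one edge touches one cell in one row, and if related vertices receive adjacent IDs, a single range scan fetches them together.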

3.4 Graph query service

JanusGraph uses the Gremlin language for graph queries. We provide a unified graph query service so that external users do not need to know how the Gremlin queries are implemented and can query through common interfaces. There are three interfaces: conditional search, node-centric outward query, and path query between nodes. Here are a few example Gremlin implementations:

- Conditional search: find the best-selling car priced at around 100,000 yuan (prices are stored in units of 10,000 yuan, so the filter is 8 < price < 12):

g.V().has('price', gt(8)).has('price', lt(12)).order().by('sales', desc).limit(1).valueMap()

output:

==>{name=[xuanyi], price=[10], sales=[45767]}

Sylphy (xuanyi) has the highest sales volume, at 45,767.

- Node-centric outward query: query the vertices reached by two outward hops from Xiao Ming:

g.V(xiaoming).repeat(out()).times(2).valueMap()

- Path query between nodes: Xiao Ming has been recommended two articles, one introducing the Corolla (kaluola) and one introducing the Sylphy (xuanyi); query the paths from Xiao Ming to these two cars:

g.V(xiaoming).repeat(out().simplePath()).until(or(has('car', 'name', 'kaluola'), has('car', 'name', 'xuanyi'))).path().by('name')

output:

==>path[xiaoming, around 10w, kaluola]

==>path[xiaoming, around 10w, xuanyi]

The result shows that Xiao Ming reaches both cars through the intermediate node "around 10w" (around 100,000 yuan).
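To show what the path query does, here is an in-memory sketch of the same traversal: a breadth-first search that never revisits a vertex within one path (like `simplePath()`) and stops extending a path once it reaches a target car (like `until(...)` followed by `path()`). The adjacency map reproduces the example output above; `max_hops` is our own safety bound that the Gremlin query does not have.

```python
from collections import deque


def find_paths(out_edges, start, targets, max_hops=4):
    """Breadth-first search for paths from `start` that stop at the first target reached.

    Mirrors repeat(out().simplePath()).until(<target>).path(): a vertex is never
    revisited within one path, and a path is not extended past a target.
    """
    results = []
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if len(path) > 1 and node in targets:
            results.append(path)
            continue
        if len(path) - 1 >= max_hops:
            continue
        for nxt in out_edges.get(node, []):
            if nxt not in path:  # simplePath(): no cycles
                queue.append(path + [nxt])
    return results


# Hypothetical out-edges reproducing the example above
OUT_EDGES = {"xiaoming": ["around 10w"], "around 10w": ["kaluola", "xuanyi"]}
```

The returned paths match the Gremlin output, which is how the service can explain that both recommended cars were reached through Xiao Ming's price-band interest.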

III Applications of Knowledge Graphs in Recommendation

A knowledge graph contains a large amount of non-Euclidean data. KG-based recommendation makes effective use of this data to improve the accuracy of recommender systems, achieving results that traditional systems cannot. KG-based recommendation methods fall into three categories: knowledge graph embedding (KGE) methods, path-based methods, and graph neural networks. This chapter introduces KG applications and papers in three aspects of recommender systems: cold start, recommendation reasons, and ranking.
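To give the KGE category a concrete shape, here is TransE, one representative embedding method (chosen by us as an example; the text does not name one). TransE scores a triple (h, r, t) by how close h + r lands to t. The 2-d embeddings below are hand-picked toys, not trained vectors.

```python
import math


def transe_distance(h, r, t, norm=1):
    """TransE: a triple (h, r, t) is plausible when h + r is close to t, so smaller is better."""
    diff = [a + b - c for a, b, c in zip(h, r, t)]
    if norm == 1:
        return sum(abs(x) for x in diff)
    return math.sqrt(sum(x * x for x in diff))


# Hand-picked toy embeddings
COROLLA = [0.2, 0.5]
COMPETES_WITH = [0.3, -0.1]
SYLPHY = [0.5, 0.4]
UNRELATED = [-0.4, 0.9]
```

A recommender can use such distances to rank candidate items related to what a user clicked, for example preferring the Sylphy as a competitor of the Corolla over an unrelated entity.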

1 Application of knowledge graphs to cold-start recommendation

A knowledge graph makes it possible to model the hidden high-order relations in the KG from user-item interactions, which alleviates the data sparsity caused by users having only a limited number of interactions and helps solve the cold-start problem. There has been related research on this issue in the industry.

Sang et al. [6] proposed a two-channel neural interaction method called Knowledge Graph enhanced Neural Collaborative Filtering with Residual Recurrent Network (KGNCF-RRN), which exploits the long-term relational dependencies of KG context and user-item interactions for recommendation. (1) For the KG context interaction channel, a residual recurrent network (RRN) is proposed to construct context-based path embeddings; it integrates residual learning into a traditional recurrent neural network (RNN) to effectively encode the long-term relational dependencies of the KG. A self-attention network is then applied to the path embeddings to capture the ambiguity of various user interaction behaviors. (2) For the user-item interaction channel, user and item embeddings are fed into a newly designed 2D interaction graph. (3) Finally, on top of the two-channel neural interaction matrix, a convolutional neural network learns the complex correlations between users and items. This method captures rich semantic information as well as the complex implicit relationships between users and items for recommendation.

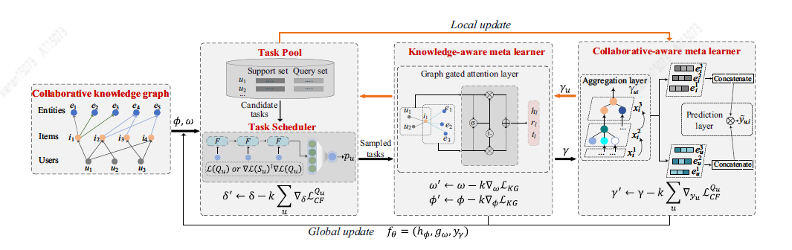

Du et al. [7] proposed MetaKG, a new solution to the cold-start problem based on a meta-learning framework. It consists of a collaborative-aware meta learner and a knowledge-aware meta learner, which capture user preferences and entity cold-start knowledge. The collaborative-aware meta learner aims to aggregate each user's preference knowledge representation, whereas the knowledge-aware meta learner generalizes knowledge representations across different user preferences globally. Guided by both learners, MetaKG effectively captures high-order collaborative relations and semantic representations and adapts easily to cold-start scenarios. In addition, the authors design an adaptive task that selects KG information for learning adaptively, preventing the model from being disturbed by noisy information. The MetaKG architecture is shown in the figure below.

2 Application of Knowledge Graph in Recommendation Reason Generation

Recommendation reasons improve the interpretability of a recommender system: they let users understand how a recommendation was produced and also explain why an item is popular. Understanding how results are generated increases users' confidence in the system's recommendations and makes them more tolerant when a recommendation is wrong.

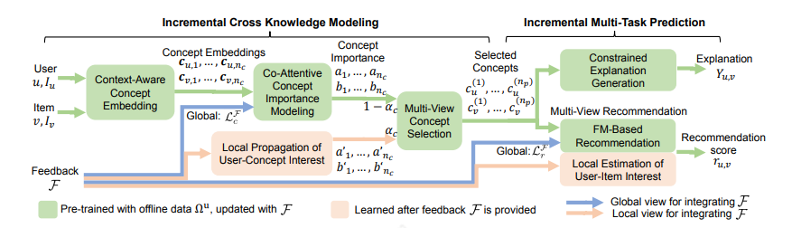

The earliest explainable recommendations were template-based. Templates ensure readability and high accuracy, but they must be curated manually, generalize poorly, and feel repetitive. Later came free-form explanations that need no preset template, and knowledge graphs were introduced so that a path in the graph serves as the explanation; with annotations, there are also generative methods that incorporate KG paths. Every node or edge selected by the model is a reasoning step that can be shown to the user. Recently, Chen et al. [8] proposed ECR, an incremental multi-task learning framework that enables tight collaboration among recommendation prediction, explanation generation, and user feedback integration. It consists of two parts. The first part, Incremental Cross-Knowledge Modeling, learns the cross-knowledge transferred between the recommendation task and the explanation task, and shows how to update it with incremental learning. The second part, Incremental Multi-Task Prediction, explains how to generate explanations from the cross-knowledge and how to predict recommendation scores from the cross-knowledge and user feedback.
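Combining the two ideas above, templates for readability and a KG path as the evidence, can be sketched as filling one template per relation along the path. The relation names and templates are hypothetical, and the path follows the earlier Gremlin example (Xiao Ming, the around-100,000-yuan node, Corolla).

```python
def explain_path(path, templates):
    """Turn a KG path [entity, relation, entity, ...] into a readable recommendation reason."""
    clauses = []
    for i in range(1, len(path) - 1, 2):
        head, relation, tail = path[i - 1], path[i], path[i + 1]
        clauses.append(templates[relation].format(head=head, tail=tail))
    return "; ".join(clauses)


# Hypothetical per-relation templates
TEMPLATES = {
    "interested_in": "{head} is interested in cars priced {tail}",
    "price_band_of": "{tail} is priced {head}",
}

PATH = ["Xiao Ming", "interested_in", "around 100,000 yuan", "price_band_of", "Corolla"]
```

Each clause corresponds to one edge the model traversed, so the reason stays faithful to the path; the repetitiveness noted above is the price of this approach.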

3 The application of knowledge graph in recommendation ranking

A KG can link items with different attributes and establish user-item interactions; combining the user-item graph and the KG into one large graph captures high-order connections between items. Traditional recommendation methods model the problem as a supervised learning task, which ignores the intrinsic connections between items (such as the competitive relationship between the Camry and the Accord) and cannot obtain collaborative signals from user behavior. The following introduces two papers on applying KGs to recommendation ranking.

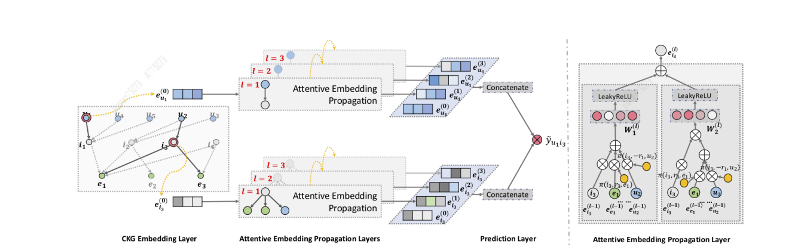

Wang et al. [9] designed the KGAT algorithm. First, a GNN iteratively propagates and updates embeddings to capture high-order connections efficiently. Second, an attention mechanism in aggregation learns the weight of each neighbor during propagation, reflecting the importance of high-order connections. Finally, N-order propagation yields N hidden representations of each user and item, with different layers representing connection information of different orders. KGAT can capture richer, non-specific high-order connections.
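The attention step can be sketched with KGAT's knowledge-aware attention score, π(h, r, t) = (W_r e_t)^T tanh(W_r e_h + e_r), normalized with a softmax over the head's neighbors and used to weight the neighbor embeddings. This sketch covers only one neighborhood aggregation; it omits KGAT's aggregator layer, multi-layer stacking, and training, and the matrices and embeddings are toy values.

```python
import math


def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def kgat_neighborhood(e_h, neighbors, W, e_r):
    """Attention-weighted aggregation of a head entity's neighbors.

    neighbors: list of (relation, e_t); W[r] is the relation projection, e_r[r] the relation embedding.
    Returns the normalized attention weights and the aggregated neighborhood embedding.
    """
    logits = []
    for rel, e_t in neighbors:
        wt, wh = matvec(W[rel], e_t), matvec(W[rel], e_h)
        # pi(h, r, t) = (W_r e_t)^T tanh(W_r e_h + e_r)
        logits.append(sum(a * math.tanh(b + c) for a, b, c in zip(wt, wh, e_r[rel])))
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    weights = [x / total for x in exps]
    aggregated = [sum(w * e_t[d] for w, (_, e_t) in zip(weights, neighbors)) for d in range(len(e_h))]
    return weights, aggregated


# Toy parameters for two hypothetical relations
W = {"competes_with": [[1.0, 0.0], [0.0, 1.0]], "same_brand": [[0.5, 0.0], [0.0, 0.5]]}
E_R = {"competes_with": [0.1, 0.0], "same_brand": [0.0, 0.1]}
```

Neighbors whose projected embeddings align with the head's receive larger weights, which is how KGAT lets more relevant high-order connections dominate propagation.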

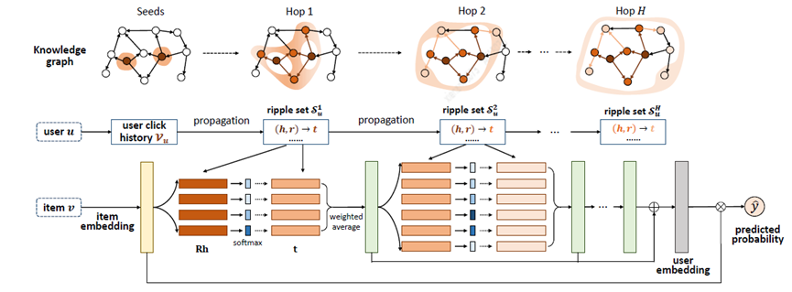

Wang et al. [10] proposed the RippleNet model, whose key idea is interest propagation: RippleNet treats a user's historical interests as a seed set in the KG and then expands the user's interests along KG links, forming the user's interest distribution over the KG. RippleNet's biggest advantage is that it automatically mines possible paths from the user's historically clicked items to candidate items, without any manually designed meta-paths or meta-graphs.

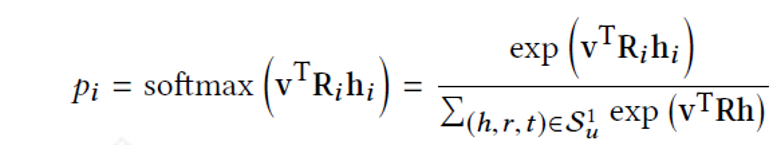

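RippleNet takes user $u$ and item $v$ as input and outputs the predicted probability that user $u$ clicks item $v$. For user $u$, the historical interests $\mathcal{V}_u$ serve as seeds; in the figure there are two initial seeds, from which interest spreads outward. Given the item embedding $\mathbf{v}$ and each triple $(h_i, r_i, t_i)$ in user $u$'s 1-hop ripple set $\mathcal{S}_u^1$, a relevance probability is assigned by comparing $\mathbf{v}$ with the head $h_i$ and relation $r_i$ of the triple:

$$p_i = \mathrm{softmax}\left(\mathbf{v}^\top \mathbf{R}_i \mathbf{h}_i\right) = \frac{\exp\left(\mathbf{v}^\top \mathbf{R}_i \mathbf{h}_i\right)}{\sum_{(h, r, t) \in \mathcal{S}_u^1} \exp\left(\mathbf{v}^\top \mathbf{R} \mathbf{h}\right)}$$

where $\mathbf{R}_i$ is the embedding matrix of relation $r_i$ and $\mathbf{h}_i$ is the embedding of head $h_i$.

After obtaining the relevance probabilities, the tails of the triples in $\mathcal{S}_u^1$ are weighted by their probabilities and summed, giving the first-order response of user $u$'s historical interests to $v$:

$$\mathbf{o}_u^1 = \sum_{(h_i, r_i, t_i) \in \mathcal{S}_u^1} p_i \mathbf{t}_i$$

Repeating this step along the subsequent ripple sets propagates the user's interests from $\mathcal{V}_u$ to the responses $\mathbf{o}_u^1, \mathbf{o}_u^2, \ldots, \mathbf{o}_u^n$. The representation of user $u$ with respect to item $v$ is the combination of all order responses, $\mathbf{u} = \mathbf{o}_u^1 + \mathbf{o}_u^2 + \cdots + \mathbf{o}_u^n$, and the predicted click probability is $\hat{y}_{uv} = \sigma(\mathbf{u}^\top \mathbf{v})$.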
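The formulas above translate almost line by line into code. The sketch below computes one hop (relevance probabilities and the response o_u) and then the click probability from a list of per-hop responses. How later hops are formed is left to the caller, the relation matrix and embeddings are toy values, and this is not the authors' implementation.

```python
import math


def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def hop_response(v, ripple_set, relation_matrices):
    """One RippleNet hop: p_i = softmax(v^T R_i h_i), o = sum_i p_i t_i.

    ripple_set is a list of (h, r, t), with h and t embedding vectors and r a relation name.
    """
    logits = [sum(a * b for a, b in zip(v, matvec(relation_matrices[r], h))) for h, r, _ in ripple_set]
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]  # numerically stable softmax
    total = sum(exps)
    probs = [e / total for e in exps]
    response = [sum(p * t[c] for p, (_, _, t) in zip(probs, ripple_set)) for c in range(len(v))]
    return probs, response


def click_probability(responses, v):
    """u = o^1 + ... + o^n, then y_hat = sigmoid(u^T v)."""
    u = [sum(components) for components in zip(*responses)]
    return 1.0 / (1.0 + math.exp(-sum(a * b for a, b in zip(u, v))))


# Toy relation matrix and 1-hop ripple set
R = {"brand": [[1.0, 0.0], [0.0, 1.0]]}
S1 = [([1.0, 0.0], "brand", [0.5, 0.5]), ([0.0, 1.0], "brand", [1.0, 1.0])]
```

Usage: `probs, o1 = hop_response([1.0, 0.0], S1, R)` gives the first triple the higher probability because its head aligns with the item embedding, and `click_probability([o1], [1.0, 0.0])` turns the response into a probability in (0, 1).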

IV Summary

In summary, focusing on recommendation, we introduced the detailed process of knowledge graph construction and analyzed its difficulties and challenges, reviewed a body of important related work, and offered concrete solutions, ideas, and suggestions. Finally, we introduced applications of the knowledge graph, especially in recommendation, describing its role in cold start, interpretability, and recall and ranking.

References:

[1] Kim S, Oh SG. Extracting and Applying Evaluation Criteria for Ontology Quality Assessment[J]. Library Hi Tech, 2019.

[2] Protégé, https://protegewiki.stanford.edu

[3] Ding S, Shang J, Wang S, et al. ERNIE-DOC: The Retrospective Long-Document Modeling Transformer[J]. 2020.

[4] Adhikari A, Ram A, Tang R, et al. DocBERT: BERT for Document Classification[J]. 2019.

[5] JanusGraph, https://docs.janusgraph.org/

[6] Sang L, Xu M, Qian S, et al. Knowledge graph enhanced neural collaborative filtering with residual recurrent network[J]. Neurocomputing, 2021, 454: 417-429.

[7] Du Y, Zhu X, Chen L, et al. MetaKG: Meta-learning on Knowledge Graph for Cold-start Recommendation[J]. arXiv e-prints, 2022.

[8] Chen Z, Wang X, Xie X, et al. Towards Explainable Conversational Recommendation[C]// Twenty-Ninth International Joint Conference on Artificial Intelligence and Seventeenth Pacific Rim International Conference on Artificial Intelligence (IJCAI-PRICAI-20). 2020.

[9] Wang X, He X, Cao Y, et al. KGAT: Knowledge Graph Attention Network for Recommendation[J]. ACM, 2019.

[10] Wang H, Zhang F, Wang J, et al. RippleNet: Propagating User Preferences on the Knowledge Graph for Recommender Systems[J]. ACM, 2018.
