Basic Concepts

Architecture Concepts

Data Lake

- A system or repository of data stored in its natural/raw format, usually object blobs or files.

- Data lakes can be built as part of a data fabric architecture.

Benefits:

- Flexibility, as data scientists can quickly and easily configure queries

- Accessibility, as all users can access all data

- Affordability, as many data lake technologies are open source

- Compatibility with most data analytics methods

- Comprehensive, combining data from all of an enterprise’s data sources including IoT

- Amazon S3, Azure Data Lake, Google Cloud Storage, Databricks, Snowflake

Data Warehouse

- Data warehousing is a process for collecting and managing data from varied sources, typically used to connect and analyse data from heterogeneous sources.

- A system used for reporting and data analysis and is considered a core component of business intelligence. DWs are central repositories of integrated data from one or more disparate sources.

- Amazon Redshift, Azure Synapse, Google Cloud BigQuery, Databricks, Snowflake, IBM Db2, Teradata

- A virtual data warehouse gives a collective view of the completed data. A virtual data warehouse does not have historical data. It is considered as a logical data model having metadata.

Data Mart

- A structure / access pattern specific to data warehouse environments, used to retrieve client-facing data.

- The data mart is a subset of the data warehouse and is usually oriented to a specific business line or team.

Data Fabric

- An architecture and set of data services that provide consistent capabilities across a choice of endpoints spanning hybrid multicloud environments.

- IBM Cloud Pak, Denodo, Talend, K2View

Data Mesh

- A sociotechnical approach to build a decentralized data architecture by leveraging a domain-oriented, self-serve design, and borrows Eric Evans’ theory of domain-driven design and Manuel Pais’ and Matthew Skelton’s theory of team topologies.

Data Virtualization

- An approach to data management that allows an application to retrieve and manipulate data without requiring technical details about the data, such as how it is formatted at source, or where it is physically located, and can provide a single customer view of the overall data.

Comparison

| Data Lake | Data Warehouse | |

|---|---|---|

| Type of data | Structured and unstructured from any source, raw | Structured, curated |

| Schema | Not predetermined | Predetermined |

| Typical users | Data scientists, developers, and data analysts | Data analysts |

Database Concepts

DBMS

Database management system is a software for storing and retrieving user data. It consists of a group of programs which manipulate the database.

Characteristics:

- Provides security and removes redundancy

- Self-describing nature of database system

- Insulation between programs and data abstraction

- Support of multiple views of data.

- Sharing of data and multiuser transaction processing

- DBMS allows entities and relations among them to form tables.

- It follows the ACID concept (Atomicity, Consistency, Isolation, and Durability).

- DBMS supports a multi-user environment that allows users to access and access and manipulate data in parallel.

OLTP vs OLAP

| OLTP | OLAP |

|---|---|

| online transactional system | online analysis and data retrieving process |

| It is characterized by a large number of short online transactions. | It is characterized by a large volume of data. |

| OLTP uses traditional DBMS | OLAP uses a data warehouse. |

| Tables in OLTP database are normalized. | The tables in OLAP are not normalized. |

| Its response time is in a millisecond. | Its response time is in second to minutes. |

| OLTP is designed for real time business operations. | OLAP is designed for the analysis of business measures by category and attributes. |

Note: OLAP and OLTP are meant for SQL Databases, don't apply to NoSQL DBs.

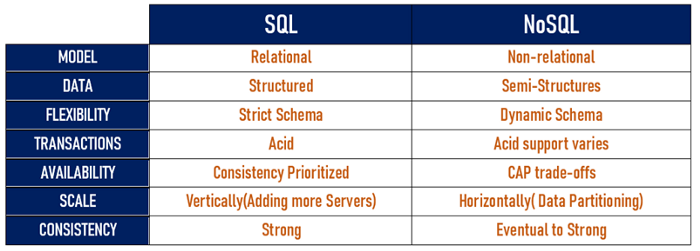

SQL vs NoSQL

- ACID vs BASE - NoSQL typically leaves out some of the ACID(atomicity, consistency, isolation, and durability) features of SQL, sort of 'cheating' it's way to higher performance by leaving this layer of abstraction to the programmer. This has already been covered by previous posters.

- Horizontal Scaling - The real advantage of NoSQL is horizontal scaling, aka sharding. Considering NoSQL 'documents' are sort of a 'self-contained' object, objects can be on different servers without worrying about joining rows from multiple servers, as is the case with the relational model.

NoSQL solutions usually offer record-level atomicity, but cannot guarantee a series of operations will succeed (transaction). It comes down to: to keep data integrity and support transactions, a multi-server RDBMS would need to have a fast backend communication channel to synchronize all possible transactions and writes, while preventing/handling deadlock.

NoSQL databases pros:

Flexibility

- They can store structured, semi-structured, or unstructured data

Dynamic Schema

- Evolve and change as quickly as needed

Sharding

- The process of splitting up and distributing data to smaller databases for faster access

Replication

- offer failover and better recovery options thanks to the replication

Scaling

- easily scalable, growing or shrinking as necessary

- Large Blobs/Rich Media

NoSQL databases cons:

- Limited built-in query language capacity: almost all the query languages and API methods of the NoSQL repositories were created based on certain SQL functions, but they have much less functionality.

- NoSQL solutions do not require defining a database schema before starting work: therefore, during the development process, you may encounter unforeseen difficulties that may lead to the abandonment of this NoSQL solution.

SQL databases are ideal for projects with the following features:

- Logical data requirements can be defined in advance;

- When data integrity is very important;

- A need for well-established technology based on well-established standards, using which one can count on extensive experience of developers and technical support.

You should choose NoSQL databases if in your products:

- Data requirements are vague, uncertain, or evolving with project development;

- The goal of the project can be adjusted over time, with the possibility of starting development immediately;

- One of the main database requirements is data processing speed and scalability.

NoSQL Databases are mainly categorized into four types:

Key-value:

- efficiently and shows high scalability, for instance: in caching web applications and session management.

- Redis, Memcached, Amazon DynamoDB

(DynamoDB is a key-value store with added support for JSON to provide document-like data structures that better match with objects in application code)

Document-based:

- similar to a key-value database, the only difference being is that the values are stored in the form of XML, JSON, BSON.

- MongoDB, Terrastore, RavenDB

Column-based:

- organize data in columns instead of rows

- families contain the group of correlated data which we can be accessed together meaning they can query large data sets faster than other conventional databases.

- mostly used for fraud detection recommendation engines and catalogs

- Cassandra, Hbase, Hypertable

Graph-based

- organize data as nodes and edges that show connections between nodes

- multi-relational in nature

- mostly used for social networks, logistics, spatial data

- Neo4J, Dgraph, Amazon Neptune, OrientDB

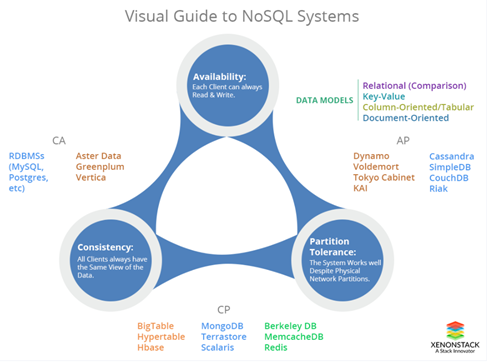

CAP Theorem

- Consistency: (Same Data.) The data should remain consistent even after the execution of an operation. For instance, after updating the database, all the queries we run should produce the same result.

- Availability: (Able to get data.) The database should not have any downtime, it should always be available and responsive.

- Partition Tolerance: The system should continue to function even if the communication among the servers is not stable.

| C+A | A+P | C+P | |

|---|---|---|---|

| Relational | MySQL, Postgres | ? | ? |

| Key-Value | ? | DynamoDB | Redis |

| Document-based | ? | CouchDB | MongoDB |

| Column-based | ? | Cassandra | HBase |

| Graph-based | Neo4j | ? | ? |

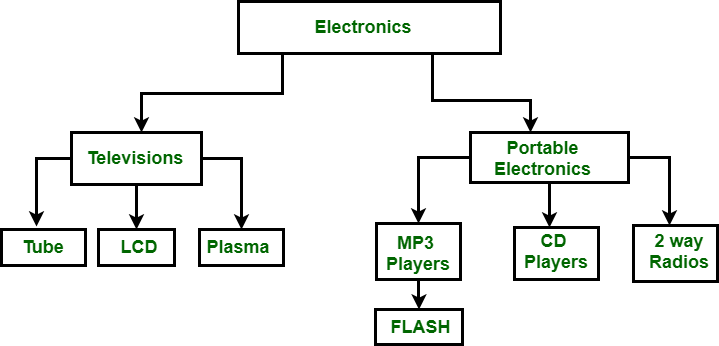

Hierarchical vs Network

Hierarchical Model

- In the hierarchical database, model data is organized in a tree-like structure. Data is stored in a hierarchical format. Data is represented using a parent-child relationship. In hierarchical DBMS parent may have many children, children have only one parent.

- 1:1 and 1:n relations

The drawbacks of the hierarchical data model are:

- It is not flexible as it takes time to adapt to the changing needs of the business.

- The structure poses the issue in, inter-departmental communication, vertical communication, as well as inter-agency communication.

- Hierarchical data model can create problems of disunity.

There are two types of Hierarchies:

- Level based hierarchies

- Parent-child hierarchies

Network Model

- It is a model which is built on hierarchical model.

- It allows more than one relationship to link records, which indicates that it has multiple records.

- 1:1, 1:n and also many to many relations.

- It is possible to construct a set of parent records and child records. Each record can belong to multiple sets that enable you to perform complex table relationships.

In-memory Analytics

In-memory analytics is a process of caching the database in RAM.

XMLA

XMLA is an XML analysis that is considered as standard for accessing data in Online Analytical Processing (OLAP).

Other Concepts

Modeling

Systems Modeling

Data Modeling

- Classic ER (Entity–Relationship)

- Post Classic ER

System Modeling

- Semantic

- UML: Unified Modeling Language

Metadata

Metadata is defined as “data about data.” In the context of data modeling, it’s the data that covers what types of data are in the system, what it’s used for, and who uses it.

Granularity

Granularity represents the level of information stored in a table. Granularity is defined as high or low. High granularity data contains transaction-level data. Low granularity has low-level information only, such as that found in fact tables.

Data Sparsity

Data sparsity defines how much data we have for a model’s specified dimension or entity. If there is insufficient information stored in the dimensions, then more space is needed to store these aggregations, resulting in an oversized, cumbersome database.

Bitmap Index

Bitmap indexes are a special type of database index that uses bitmaps (bit arrays) to answer queries by executing bitwise operations.

Idempotent

An operation is idempotent if it produces the same result when called over and over.

Data Modeling

Concepts

Database Design vs Data Modeling

Database Design

- Specific DBMS(Database Management System) model (e.g. relational)

- Goes below schema to physical storage

- Implementation/product specific restrictions from the very beginning

Data Modeling

- The process of creating a model for the data to store in a database.

- Conceptual / Semantic level. A conceptual representation of data objects, the association between different data objects, and the rules.

- Unconstraint by RDBMS(Relational Database Management System) or other implementation rules

- Closer to real world

Data Modeling Pros and Cons

Advantages of the data model are:

- The main goal of a designing data model is to make sure that data objects offered by the functional team are represented accurately.

- The data model should be detailed enough to be used for building the physical database.

- The information in the data model can be used for defining the relationship between tables, primary and foreign keys, and stored procedures.

- Data Model helps businesses to communicate within and across organizations.

- Data model helps to documents data mappings in the ETL process

- Help to recognize correct sources of data to populate the model

Disadvantages of Data model are:

- To develop Data model, one should know physical data stored characteristics.

- This is a navigational system that produces complex application development, management. Thus, it requires knowledge of the biographical truth.

- Even smaller changes made in structure require modification in the entire application.

- There is no set of data manipulation language in DBMS.

Characteristics

- Data Redundency: occurs when same data stores in multiple places

- Data Integrity: the accuracy and consistency of data over its life cycle

- Data Quality: measure of how well a dataset satisfies its intended use

Data Modeling Life Cycle

The three types of data models:

Conceptual Modeling:

- high-level, user’s view of the data in question

Logical Modeling:

- straddle between physical and theoretical data models, allowing the logical representation of data to exist apart from the physical storage

- It contains an entity, primary key attributes, Inversion keys, alternate key, rule, business relation, definition, etc.

Physical Modeling:

- where the framework or schema describes how data is physically stored in the database.

- It contains a table, key constraints, unique key, columns, foreign key, indexes, default values, etc.

Data Modeling Methodologies

Transactional:

- Conceptual level: mirror real world

Logical level:

- Relational: data normalization with deliberate denormalization

- Non Relational: NoSQL, OODBMS(Object-Oriented Database Management System) constructs, etc.

- Physical level: blocks/tracks, MPP(Massively Parallel Processing) distribution, etc.

Analytical (DW):

- Conceptual level: dimensional

Logical level:

- Relational: fact and dimension tables

- Non Relational: cubes, culumnardatabases, etc.

- Physical level: blocks/tracks, MPP(Massively Parallel Processing) distribution, AWS buckets, HDFS name nodes and data nodes, etc.

Data Modelling Techniques

Two types of data modelling techniques are:

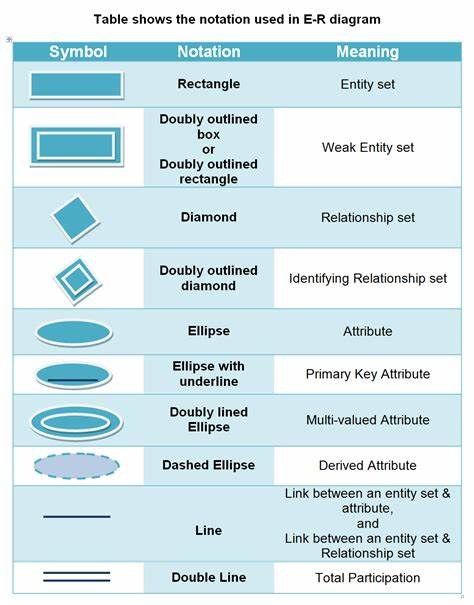

Entity-Relationship (E-R) Model

- An ERD is an example of a conceptual data model

UML (Unified Modelling Language):

- general-purpose, database development, modelling language in the field of software engineering. The main intention is to provide a generalized way to visualize system design.

Design Schemas

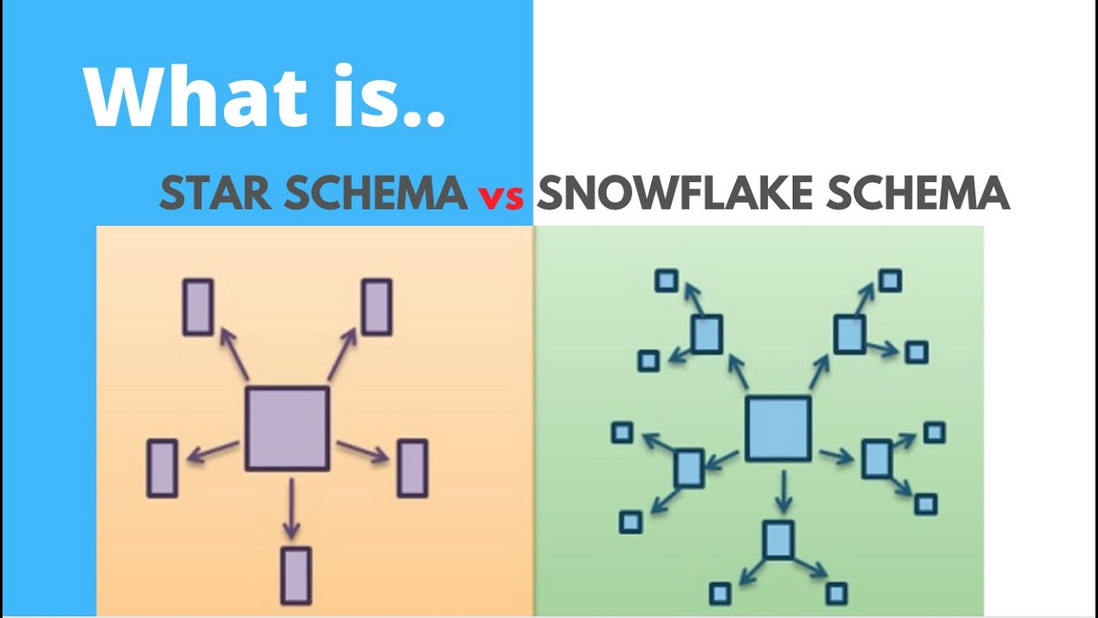

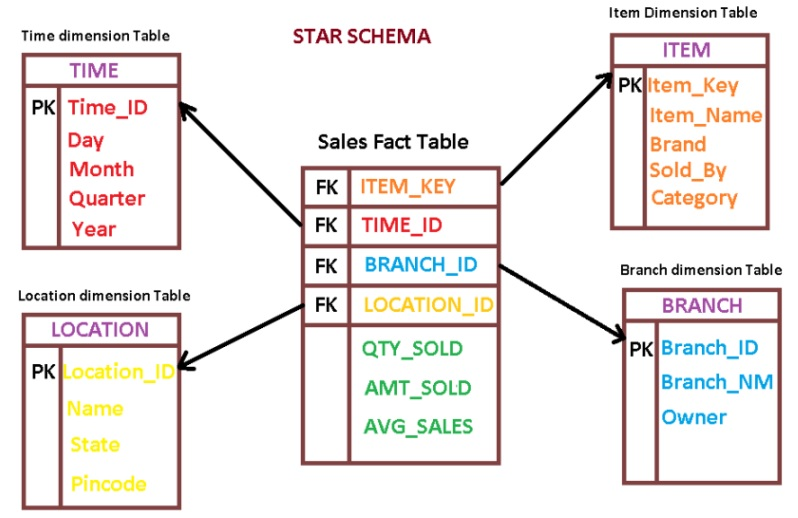

- Star schema: has a fact table centered with multiple dimension tables surrounding it.

- Snowflake schema: A snowflake schema is an arrangement of a dimension table and fact table. Generally, both tables are further broken down into more dimension tables. It is similar to Star schema, except that the level of normalization is higher, which results in the schema looking like a snowflake.

Forwarding and Reverse Engineering

- Forward engineering is a process where Data Definition Language (DDL) scripts are generated from the data model itself. DDL scripts can be used to create databases. The process of translating a logical model into a physical implement automatically.

- Reverse Engineering creates data models from a database or scripts. Some data modeling tools have options that connect with the database, allowing the user to engineer a database into a data model.

Table Types

Fact

The fact represents quantitative data. For example, the net amount which is due.

A fact table contains numerical data as well as foreign keys from dimensional tables.

- Transaction fact tables

- Periodic snapshot tables

- Accumulating snapshot

- Additive: It is a measure that is added to any dimension.

- Non-additive: It is a measure that can’t be added to any dimension.

- Semi-additive: It is a measure that can be added to a few dimensions.

Fact less fact is a table having no fact measurement. It contains only the dimension keys. For example, a table with 2 columns employee id and department id.

Dimension

Dimensions represent qualitative data. For example, product, class, plan, etc. A dimension table has textual or descriptive attributes. For example, the product category and product name are two attributes of the product dimension table.

Slowly Changing Dimensions: These are dimensions used to manage both historical data and current data in data warehousing.

- SCD Type 0: When a change happens, retain the original value.

- SCD Type 1: When a change happens, overwrite the original value.

- SCD Type 2: When a change happens, add a new row.

- SCD Type 3: When a change happens, add a new column.

- SCD Type 4: When a change happens, overwrite and maintain a separate history table.

- Rapidly Changing Dimensions

- Static Dimensions

- Junk Dimensions: Junk dimension combines two or more related cardinality into one dimension. It is usually Boolean or flag values. This is a grouping of low-cardinality attributes like indicators and flags, removed from other tables, and subsequently “junked” into an abstract dimension table. They are often used to initiate Rapidly Changing Dimensions within data warehouses.

- Stacked dimensions

- Inferred Dimensions

- Conformed Dimensions

- Degenerate Dimensions

- Role-Playing Dimensions

- Shrunken Dimensions

- Confirmed Dimension: If a dimension is confirmed, it’s attached to at least two fact tables.

Elements

Data Subjects / Entities: Something that "exists", like student, grade, etc. (Not equal to database tables, database tables are sometimes artificial, duplicative/aggregates).

Entities can be broken down into several sub-entities or grouped by specific features. Each sub-entity has relevant attributes and is called a subtype entity. Attributes common to every entity are placed in a higher or super level entity, which is why they are called supertype entities.- Strong Entity: has a primary key.

- Weak Entity: has the partial key which acts as a discriminator between the entities of a weak entity set.

- Data Attributes of the Data Subjects: field / database column, like ID, name, etc.

Relationship between Data Subjects: like instructor teaches a class, class is taught by an instructor.

- Gerund: A relationship that also exhibits characteristics of an entity, and can have attributes attached to it.

Business Rules applied to our data:

Cardinality:number of unique values

- Columns Cardinality: number of unique values in the column

- Data Modeling Cardinality: the relationships between the data in two database tables, how many instances of one entity are related to instances of another entity.

- mandatory or optional relationships

- permissible attribute values (like NULL)

- data change dynamics

- A different type of constraint could be unique, null values, foreign keys, composite key or check constraint, etc.

Key

Primary key is a column or group of columns that unequally identify each and every row in the table. The value of primary key must not be null. Every table must contain one primary key.

- Composite Primary key: referred to the case where more than one table column is used as a part of primary key.

- Surrogate key: An artificial key which aims to uniquely identify each record is called a surrogate key. These kinds of key are unique because they are created when you don’t have any natural primary key. They do not lend any meaning to the data in the table. Surrogate key is usually an integer. 代理关键字一般是指维度表中使用顺序分配的整数值作为主键,也称为“代理键”。代理关键字用于维度表和事实表的连接。在Kimball的维度建模领域里,是强烈推荐使用代理关键字的。在维度表和事实表的每一个联接中都应该使用代理关键字,而不应该使用自然关键字或者智能关键字(Smart Keys)。数据仓库中的主键不应该是智能的,也就是说,要避免通过主键的值就可以了解一些业务信息。

- Alternate key is a column or group of columns in a table that uniquely identifies every row in that table. A table can have multiple choices for a primary key, but only one can be set as the primary key. All the keys which are not primary key are called an Alternate Key.

- Foreign key is a group of attributes which is used to link parent and child table. The value of the foreign key column, which is available in the child table, is referred to the value of the primary key in the parent table.

Relationship

The main relationship types are:

- Identifying. A relationship line normally connects parent and child tables. But if a child table’s reference column is part of the table’s primary key, the tables are connected by a thick line, signifying an identifying relationship.

- Non-identifying. If a child table’s reference column is NOT a part of the table’s primary key, the tables are connected by a dotted line, signifying a no-identifying relationship.

- Self-recursive. A recursive relationship is a standalone column in a table connected to the primary key in the same table. Recursive relationships happen when a relationship exists between an entity and itself. For instance, a doctor could be in a health center’s database as a care provider, but if the doctor is sick and goes in as a patient, this results in a recursive relationship. You would need to add a foreign key to the health center’s number in each patient’s record.

Cardinalities of a relationships:

- One-to-One Relationships

- One-to-Many Relationships

- Many-to-One Relationships

- Many-to-Many Relationships

Classic ER Notation / Chen Notation

ERD stands for Entity Relationship Diagram and is a logical entity representation, defining the relationships between the entities. Entities reside in boxes, and arrows symbolize relationships.

Multi Valued Attribute (MVA): like one person can have multiple email address

Crow's Foot Notation

More closed aligned with logical modeling

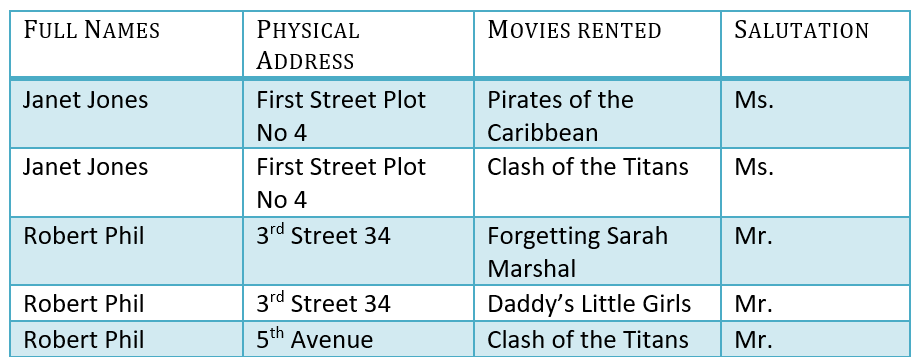

Normalization

Database normalization is the process of designing the database in such a way that it reduces data redundancy without sacrificing integrity.

Denormalization is a technique where redundant data is added to an already normalized database. The procedure enhances read performance by sacrificing write performance.

The purposes of normalization are:

- Remove useless or redundant data

- Reduce data complexity

- Ensure relationships between the tables in addition to the data residing in the tables

- Ensure data dependencies and that the data is stored logically.

Normal Forms:

original

- 1NF: the key. requires multi-valued attributes to be converted to some other data structure.

- 2NF: the whole key. If a table has a single column primary key, it automatically satisfies 2NF, but if a table has a multi-column or composite key then it may not satisfy 2NF.

we can’t move forward to make our simple database in 2nd Normalization form unless we partition the table above. - 3NF: nothing but the key

- boyce-codd fourth

- fifth normal forms

Normal Form Violations:

- 1NF violations (repeating groups): move offending data to a seperate table. For example, a student can have multiple email address, and an email address can be shared by multiple students. So instead of keeping email in Student table, we can seperate it out to a Email table to avoid duplication of other information.

- 2NF violations: partial key dependencies: Grade table, course Name partial dependency on course ID, so remove course name from the Grade table, and have a Course table storing course ID and course name.

- Transform many to many relationship: decompose M:M relationship into multiple "semantically equivalent" relationships.

- Adding foreign keys

Other

Softwares:

- Powerpoint

- Visio: general drawing tool

- CA ERwin: specializaed data modeling tool

Errors most likely encountered during data modeling:

- Building overly broad data models: If tables are run higher than 200, the data model becomes increasingly complex, increasing the likelihood of failure

- Unnecessary surrogate keys: Surrogate keys must only be used when the natural key cannot fulfill the role of a primary key

- The purpose is missing: Situations may arise where the user has no clue about the business’s mission or goal. It’s difficult, if not impossible, to create a specific business model if the data modeler doesn’t have a workable understanding of the company’s business model

- Inappropriate denormalization: Users shouldn’t use this tactic unless there is an excellent reason to do so. Denormalization improves read performance, but it creates redundant data, which is a challenge to maintain.

Nulls

If a Unique Constraint Gets Applied to a Column, Will It Generate an Error If You Attempt to Place Two Nulls in It?

No, it won’t, because null error values are never equal. You can put in numerous null values in a column and not generate an error.

Check Constraint

Check constraint is used to verify a range of values in a column.

PDAP (Part Distributive Aggregate Property)

It is a data cube that stores data as a summary. It helps the user to analyse data quickly. The data in PDAP is stored in a way that reporting can be done with ease.

Critical Success Factor

Critical Success Factor is a favorable result of any activity needed for organization to reach its goal.

Four types of critical success factor are:

- Industry CSFs

- Strategy CSFs

- Environmental CSFs

- Temporal CSFs

Object-Oriented Database Model

The object-oriented database model is a collection of objects. These objects can have associated features as well as methods.

many-to-many relationships in NoSQL data modeling

Many-to-many relationships are modeled using two document collections with embedded entity objects referencing related documents from a third collection with identifiers/keys.

The M:N relationships are achieved/maintained at the application level when retrieving data.

The following example uses the identifier class_id to resolve M:N relationships between professor and student collections.

The professor collection looks like this:

{

"Professor_id": "1021",

"Name": "John P Vess",

"Type": "Adjunct",

"class_details": [

{

"class_id": [

"B10101",

"B10203",

"B10112"

]

}

]

},

...And the student collection looks like this:

{

"Student_id": "201727542",

"Name": "Mary Carson",

"Type": "Under Graduate",

"class_details": [

{

"class_id": [

"B10101",

"B10107",

"B10119"

]

}

]

},

{

"Student_id": "201821230",

"Name": "Jerry Smith",

"Type": "Under Graduate",

"class_details": [

{

"class_id": [

"B10101",

"B10203",

"M10332",

"C12001"

]

}

]

},

...Finally, the class collection, referred to by the other two collections, looks like this:

{

"Class_id": "B10101",

"Class": "Business Principles"

},

{

"Class_id": "B10203",

"Class": "Introduction to Marketing"

},

...

SCD(Slowly changing schema)

SDC should be used to capture information in case there is an update in the data like the address or mob num for a customer.

Format of a data modeling interview round

The interviewer will throw a problem statement to model a practical situation, let’s say a retail store, university, e-commerce website, or Company.

The candidate is expected to break the problem down into different database entities (tables), and columns of the table such that you are able to write SQL queries on top of the entities to answer any business questions that the interviewers ask.

Which among the following methodology does the Universal Modelling Language belong to? Object Modelling

Query Modelling

Entity Modelling

Attribute Modelling

Object Modelling

Auditing Fields

Auditing fields: create dated time, create by (who/which system), update date time, updated by

Audit table: keep all the history record of editing

**粗体** _斜体_ [链接](http://example.com) `代码` - 列表 > 引用。你还可以使用@来通知其他用户。