Foreword

In this case, our technical middle-office engineers received an unusual customer request that meant working around the functional limitations of the K8S product. Faced with this extremely challenging task, did our engineers (the "siege lions") finally overcome the difficulties and fully meet the customer's needs? Let's find out in this issue's K8S technical case study!

(Friendly reminder: this is a long article. We recommend bookmarking it before reading, and taking breaks along the way to look after your physical and mental health!)

Part 1: A requirement with "plenty of personality"

One day, our technical middle-office engineers received a request for help from a customer. The customer uses the managed K8S cluster product to deploy a test cluster in the cloud. For business reasons, their R&D colleagues need to directly access the cluster's clusterIP-type services and backend pods from the office network. Normally, K8S pods can only be accessed by other pods or by cluster nodes, not directly from outside the cluster. When a pod provides services inside or outside the cluster, it exposes its access address and port through a service. Besides acting as the access entry for the pod application, the service also relies on health checks of the pod's ports, so that requests are only sent to healthy backends. And when there are multiple pods in the backend, the service distributes client requests across them according to a scheduling algorithm to achieve load balancing. Common service types are as follows:


Introduction to service types

- clusterIP type

If you do not specify a type when creating a service, a clusterIP service is created by default. A clusterIP service can only be accessed by pods and nodes in the cluster through its cluster IP, not from outside the cluster. This type is typically used for services that only need to be reached inside the cluster, such as the K8S system service kubernetes;

- nodeport type

The nodeport type is designed for access from outside the cluster. It maps the service port to a port on every node in the cluster. A client outside the cluster accesses a node IP and the specified port, and the request is forwarded to the backend pod;

- Loadbalancer type

This type usually calls the cloud vendor's API to create a load balancer on the cloud platform and a listener according to the settings. Inside K8S, a loadbalancer service maps the service port to a fixed port on each node, just like the nodeport type. The nodes are then set as the load balancer's backends, and the listener forwards client requests to the mapped service port on a backend node. Once the request reaches the node port, it is forwarded to the backend pod. The loadbalancer type removes a drawback of nodeport, where clients have to address the IPs of multiple nodes: they only need to access the single IP of the LB. Also, when LB services are used for external access, K8S nodes do not need public IPs; only the LB does, which improves node security and saves public IP resources. The LB's health checks on the backend nodes provide high availability, so that the failure of one K8S node does not make the service unreachable.

Summary

From the K8S service types above, it follows that a customer who wants to access services from outside the cluster should first consider LB-type services. Since nodes of the K8S cluster product do not yet support binding public IPs, nodeport services cannot be reached over the public network; they are reachable only if the customer connects the office network to the cloud network with a dedicated line or IPsec. Pods can only be accessed from other pods or cluster nodes. The cluster's clusterIPs and pods are deliberately not reachable from outside the cluster, which also improves security, and breaking this restriction may introduce security problems. We therefore recommended that the customer either keep using LB services to expose services, or connect from the office network to the NAT host of the K8S cluster, hop from the NAT host to a K8S node, and access clusterIP services or backend pods from there.

The customer replied that the test cluster has hundreds of clusterIP services. Converting them all to LB services would create hundreds of LB instances and bind hundreds of public IPs, which is clearly unrealistic, and converting them to nodeport services would also be a huge amount of work. As for logging in to cluster nodes through the NAT host as a jump host, that would require giving R&D colleagues the system passwords of the NAT host and the cluster nodes, which is neither good for operations management nor simple to use.

Part 2: Are there more solutions than difficulties?

Although the customer's access method goes against the design of the K8S cluster and looks "non-mainstream", it is a hard requirement in the customer's scenario. As engineers ("siege lions") of the technical middle office, we must do our best to help customers solve technical problems! So we planned an implementation based on the customer's needs and architecture.

Since this is about network connectivity, the first step is to analyze the network architecture of the customer's office network and of the K8S cluster on the cloud. The customer's office network has a unified public network egress device, and the network architecture of the K8S cluster on the cloud is as follows. The master node of the K8S cluster is invisible to the user. After the user creates the K8S cluster, three subnets are created under the VPC network selected by the user: the node subnet used for K8S node communication, the NAT/LB subnet used to deploy the NAT host and the load balancer instances created by LB-type services, and the pod subnet used for pod communication. The nodes of the K8S cluster are built on cloud hosts. In the node subnet, the next hop of the route to public network addresses points to the NAT host, which means that cluster nodes cannot bind public IPs and instead reach the public network through SNAT on the NAT host. Since the NAT host only provides SNAT and not DNAT, the nodes cannot be accessed through the NAT host from outside the cluster.

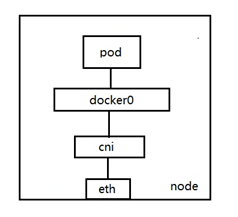

Regarding the planning purpose of the pod subnet, we first need to introduce the network architecture of the pod on the node. As shown below:

On the node, the container in the pod is connected to the docker0 device through a veth pair, and docker0 is connected to the node's network card through the self-developed CNI network plug-in. To separate cluster control traffic from data traffic and improve network performance, the cluster binds an elastic network card to each node for pod communication. When a pod is created, it is assigned an IP address on the elastic NIC. Each elastic network card can hold at most 21 IPs. When the IP addresses on an elastic network card are used up, a new network card is bound for subsequently created pods. The subnet to which the elastic network card belongs is the pod subnet. This architecture reduces the load on the node's main network card eth0 and separates control traffic from data traffic. At the same time, each pod IP has a real network interface and IP in the VPC network, so pod addresses can be routed within the VPC network.
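The allocation rule above comes down to simple arithmetic. Here is a minimal Python sketch, assuming that all 21 addresses on each elastic NIC are available to pods (the article only gives the per-NIC limit); the function name `enis_needed` is illustrative and not part of the product.

```python
import math

IPS_PER_ENI = 21  # per-NIC limit stated in the article


def enis_needed(pod_count: int, ips_per_eni: int = IPS_PER_ENI) -> int:
    """Return how many elastic NICs a node needs so every pod gets its own IP.

    Assumption: every address on an elastic NIC can be handed to a pod.
    """
    if pod_count < 0:
        raise ValueError("pod_count must be non-negative")
    return math.ceil(pod_count / ips_per_eni)


if __name__ == "__main__":
    for pods in (1, 21, 22, 50):
        print(pods, "pods ->", enis_needed(pods), "elastic NIC(s)")
```

With this rule, the 22nd pod on a node is the one that would trigger binding a second elastic NIC.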

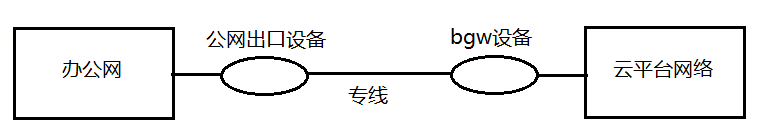

- The connection method you need to know

After understanding the network architecture at both ends, let's choose the connection method. An off-cloud network is usually connected to a cloud network either with a dedicated line product or with a user-built VPN. For a dedicated line, a private network line must be deployed from the customer's office network to the cloud data center, and then the egress device on the office network side and the BGW border gateway on the cloud network side are configured with routes to each other. As shown below:

Because of the functional limitations of the existing dedicated line product BGW, the route on the cloud side can only point to the VPC where the K8S cluster is located, not to a specific K8S node. ClusterIP services and pods, however, can only be reached through nodes and pods in the cluster, so the next hop of the route to services and pods must be a cluster node. The dedicated line product therefore clearly cannot meet the requirement.

Now let's look at the self-built VPN. A self-built VPN has an endpoint device with a public IP address on both the customer's office network and the cloud network. An encrypted tunnel is established between the two devices, with the underlying transport still going over the public network. With this solution, the cloud-side endpoint can be a cloud host with a public IP in the same VPC as the cluster nodes but in a different subnet. After packets destined for services and pods travel from the office network through the VPN tunnel to this cloud host, a route configured on the cloud host's subnet sends them on to a cluster node. For the return direction, a route to the client is configured on the cluster node subnet with the endpoint cloud host as next hop, and the same route is configured on the pod subnet. As for the VPN implementation, after discussing with the customer we chose an ipsec tunnel.
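The routing plan described above can be sketched as a set of per-subnet route tables with a longest-prefix-match lookup. This is only an illustrative Python model: the CIDR ranges and the hop names `cluster-node` and `vpn-endpoint` are assumptions made for the example and do not come from the product or the customer environment.

```python
import ipaddress

# Hypothetical address ranges, chosen only for illustration.
SERVICE_CIDR = "10.0.56.0/21"   # assumed clusterIP range
POD_CIDR = "10.0.0.0/24"        # assumed pod subnet
OFFICE_CIDR = "172.16.0.0/24"   # assumed office-side network

# One route table per subnet: (destination CIDR, next hop).
ROUTE_TABLES = {
    # Subnet of the cloud-side VPN endpoint host.
    "endpoint-subnet": [
        (POD_CIDR, "local"),             # same VPC, delivered directly
        (SERVICE_CIDR, "cluster-node"),  # service traffic goes to a node
    ],
    # Return routes towards the office client.
    "node-subnet": [(OFFICE_CIDR, "vpn-endpoint")],
    "pod-subnet": [(OFFICE_CIDR, "vpn-endpoint")],
}


def next_hop(table_name, dst_ip):
    """Return the next hop for dst_ip using longest-prefix match, or None."""
    dst = ipaddress.ip_address(dst_ip)
    best_net, best_hop = None, None
    for cidr, hop in ROUTE_TABLES[table_name]:
        net = ipaddress.ip_network(cidr)
        if dst in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_hop = net, hop
    return best_hop


if __name__ == "__main__":
    print(next_hop("endpoint-subnet", "10.0.58.158"))  # service address
    print(next_hop("endpoint-subnet", "10.0.0.13"))    # pod address
    print(next_hop("pod-subnet", "172.16.0.50"))       # return path to client
```

The point of the model is that forward and return traffic are steered by different route tables, which matters later when the two directions stop agreeing with each other.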

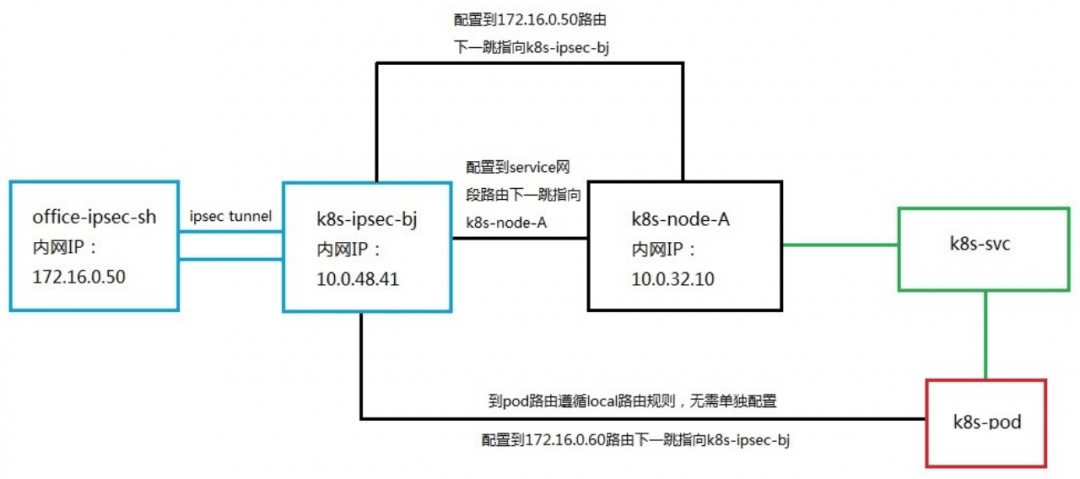

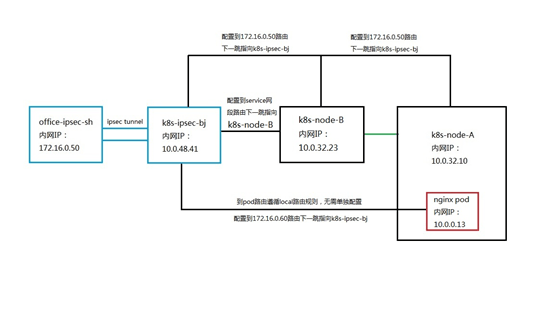

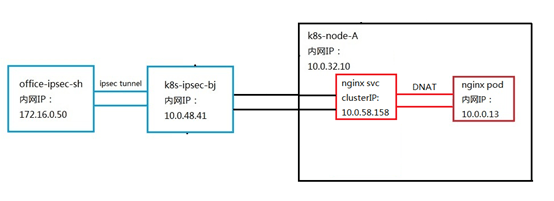

With the scheme decided, we need to implement it in a test environment to verify its feasibility. Since we do not have an off-cloud environment, we use a cloud host in a different region from the K8S cluster to stand in for the customer's office network endpoint device. We create a cloud host office-ipsec-sh in the East China (Shanghai) region to simulate the office network client, and create a cloud host k8s-ipsec-bj in the NAT/LB subnet of the K8S cluster to simulate the ipsec cloud endpoint in the customer scenario, then establish an ipsec tunnel between it and the Shanghai cloud host office-ipsec-sh. In the route table of the NAT/LB subnet, we add a route to the service network segment whose next hop points to the K8S cluster node k8s-node-vmlppp-bs9jq8pua, hereinafter referred to as node A. Since the pod subnet and the NAT/LB subnet belong to the same VPC, no route to the pod network segment is needed: traffic to a pod matches the local route and is forwarded directly to the corresponding elastic network card. For the return packets, routes to the Shanghai cloud host office-ipsec-sh are configured on the node subnet and the pod subnet, with the next hop pointing to k8s-ipsec-bj. The complete architecture is shown in the following figure:

Part 3: Practice creates "problems"

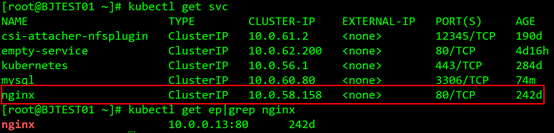

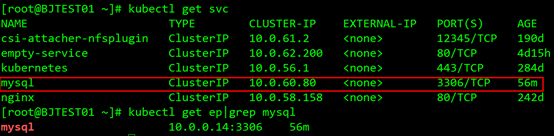

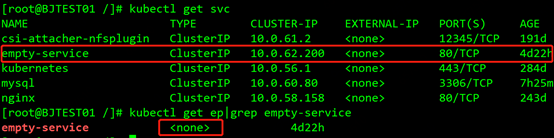

Now that the plan is settled, we start building the environment. First, create the k8s-ipsec-bj cloud host in the NAT/LB subnet of the K8S cluster and bind a public IP to it. Then establish an ipsec tunnel with the Shanghai cloud host office-ipsec-sh. There are many documents online about configuring ipsec, so we will not describe it in detail here; interested readers can follow those documents and try it themselves. After the tunnel is established, ping the peer's intranet IP from both ends. If the ping succeeds, ipsec is working properly. Then configure the routes for the NAT/LB subnet, node subnet, and pod subnet as planned. Among the services of the k8s cluster, we select one named nginx, whose cluster IP is 10.0.58.158, as shown in the figure:

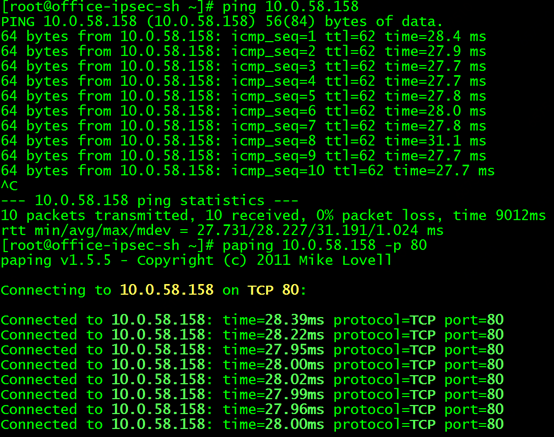

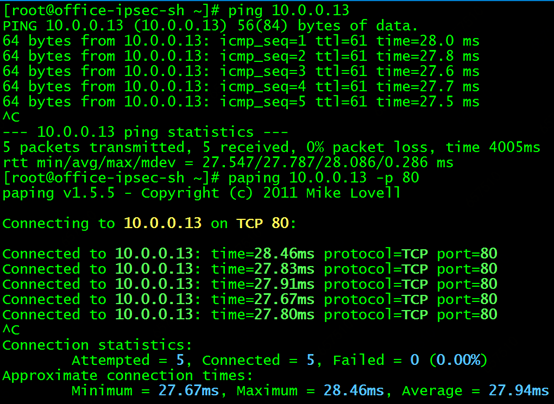

The backend pod of the service is 10.0.0.13, which serves the default nginx page and listens on port 80. Pinging the service IP 10.0.58.158 from the Shanghai cloud host succeeds, and probing port 80 of the service with the paping tool succeeds as well!

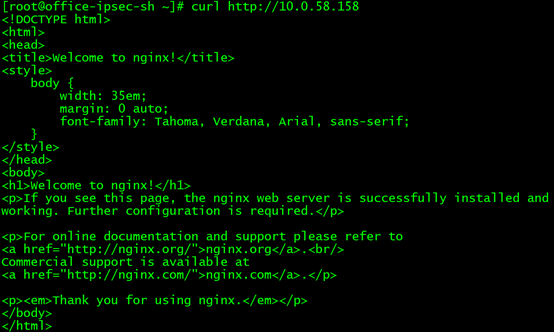

Use curl http://10.0.58.158 to make http requests, and it can also be successful!

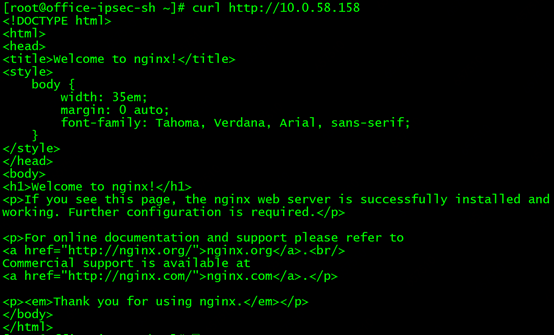

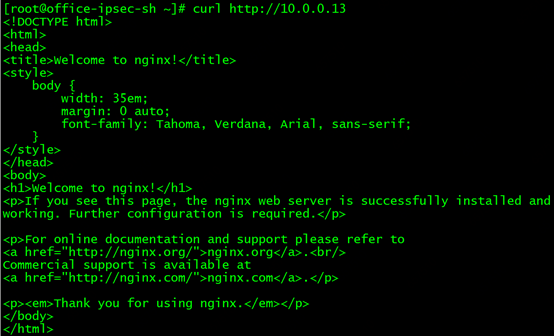

Then we test direct access to the backend pod, and there is no problem either :)

Just when our engineers were happily thinking everything was done, the result of testing another service hit them like a bucket of cold water. We next selected the mysql service to test access to port 3306. The cluster IP of the service is 10.0.60.80, and the IP of the backend pod is 10.0.0.14

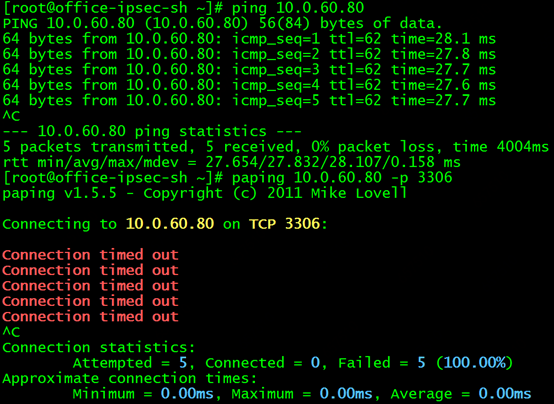

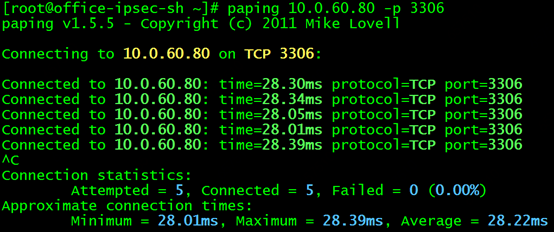

There is no problem in pinging the clusterIP of the service directly from the Shanghai cloud host. But when paping port 3306, it doesn't work!

Then we test direct access to the service's backend pod. The weird thing is that the backend pod can be reached, whether by pinging its IP or by paping on port 3306!

What's the matter? What's going on?

After a comparative analysis, our engineers found that the only difference between the two services is this: the backend pod 10.0.0.13 of the reachable nginx service is deployed on node A, the node to which the client request is forwarded, while the backend pod of the unreachable mysql service is not on node A but on another node.

To verify whether this is the cause of the problem, we modify the NAT/LB subnet route table and add a separate route for the mysql service whose next hop points to the node where its backend pod is located. Then we test again. Sure enough! Now port 3306 of the mysql service can be accessed!

Part 4: Exploring the reasons: three whys?

At this moment, our engineers have three questions in mind:

(1) Why does the connection succeed when the request is forwarded to the node where the service's backend pod is located?

(2) Why does the connection fail when the request is forwarded to a node where the service's backend pod is not located?

(3) Why can the IP of the service be pinged no matter which node the request is forwarded to?

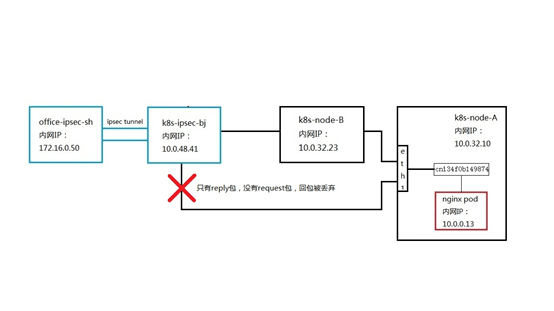

- In-depth analysis to eliminate question marks

To clear up these little question marks, we need an in-depth analysis to understand the cause of the problem and then prescribe the right medicine. For troubleshooting network problems we of course turn to the classic tool: the tcpdump packet capture tool. To stay focused, we adjusted the architecture of the test environment. The ipsec part from Shanghai to Beijing stays as it is. We scale out the K8S cluster and create a new empty node k8s-node-vmcrm9-bst9jq8pua without any pods, hereinafter referred to as node B, which only forwards requests. We modify the NAT/LB subnet route so that the next hop of the route to the service address points to this node. For the test service, we reuse the nginx service 10.0.58.158 and its backend pod 10.0.0.13, as shown in the following figure:

When we need to test the scenario where the request is forwarded to the node where the pod is located, we can change the next hop of the service route to k8s-node-A.

Everything is ready, let's start the journey of solving puzzles! Go Go Go!

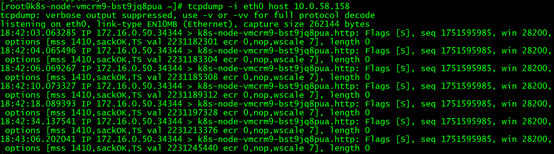

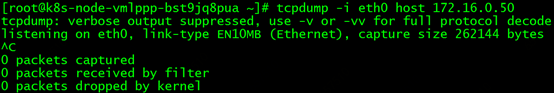

First we explore the scenario of question 1. On k8s-node-A we capture the packets exchanged with the Shanghai cloud host 172.16.0.50, using the following command:

tcpdump -i any host 172.16.0.50 -w /tmp/dst-node-client.cap

Do you still remember what we mentioned before that in a managed K8S cluster, all pod data traffic is sent and received through the node's elastic network card?

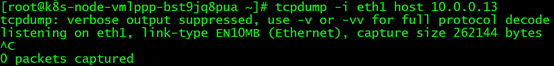

The elastic network card used by the pod on k8s-node-A is eth1. We first use curl on the Shanghai cloud host to request http://10.0.58.158, and at the same time capture on eth1 of k8s-node-A to see whether pod 10.0.0.13 sends or receives any packets there. The command is as follows:

tcpdump -i eth1 host 10.0.0.13

The result is as follows:

No packets to or from 10.0.0.13 appear on eth1, yet the curl request on the Shanghai cloud host succeeds. This means 10.0.0.13 must have sent return packets to the client, just not through eth1.

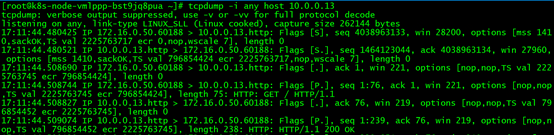

Then we will expand the capture scope to all interfaces, the command is as follows:

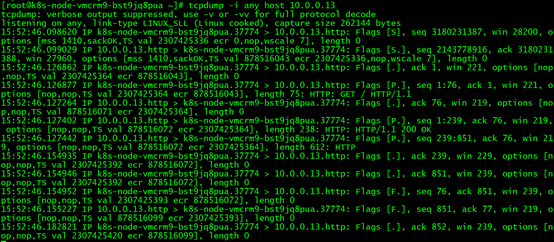

tcpdump -i any host 10.0.0.13

The result is as follows:

This time the packets exchanged between 10.0.0.13 and 172.16.0.50 are indeed captured. For easier analysis, we use the command tcpdump -i any host 10.0.0.13 -w /tmp/dst-node-pod.cap to write the packets to a cap file.

At the same time, we execute tcpdump -i any host 10.0.58.158 to capture packets for the service IP.

Packets are captured only while 172.16.0.50 is executing the curl request, and they are all packets exchanged between 10.0.58.158 and 172.16.0.50. When no request is being made, no packets are captured.

Since this part of the data packets will be included in the capture of 172.16.0.50, we will not analyze them separately.

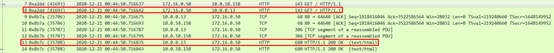

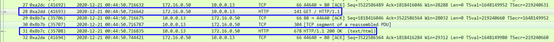

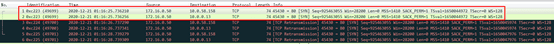

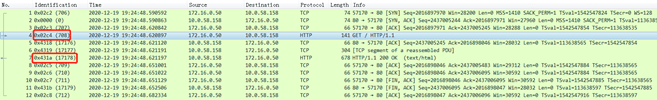

We take the capture files for 172.16.0.50 and 10.0.0.13 and analyze them with wireshark, starting with the capture for the client 172.16.0.50, as shown in the following figure:

It can be found that the client 172.16.0.50 first sent a packet to the service IP 10.0.58.158, and then sent a packet to the pod IP 10.0.0.13. The IDs and contents of the two packets are exactly the same. When the last packet was returned, pod 10.0.0.13 returned a packet to the client, and then service IP 10.0.58.158 also returned a packet with the same ID and content to the client. What is the reason for this?

From the earlier introduction, we know that the service forwards client requests to the backend pod. In this process, the client requests the service IP, and the service performs DNAT (NAT based on the destination IP), rewriting the destination to the backend pod's IP. Although the capture shows the client sending the packet twice, once to the service and once to the pod, the client did not actually resend anything: the service translated the destination address. When the pod replies, its packet also goes back to the service first, which then forwards it to the client. Because this is a request within a single node, the whole process should complete inside the node's internal virtual network, which is why we captured no client traffic on eth1, the network card used by the pod. Looking at the pod-dimension capture, the HTTP GET request packets seen in the client capture also appear there, which confirms our analysis.
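The translation described above can be illustrated with a toy Python model of DNAT plus the reverse translation applied to replies. It is a simplified sketch, not the actual kernel or cluster implementation: the `Packet` and `ServiceNat` names are hypothetical, ports are ignored, and a single backend pod is assumed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


class ServiceNat:
    """Toy model of a service's DNAT and the reverse rewrite for replies."""

    def __init__(self, service_ip, pod_ip):
        self.service_ip = service_ip
        self.pod_ip = pod_ip
        self.flows = set()  # tracked (client_ip, pod_ip) pairs

    def forward(self, pkt):
        """Request path: rewrite service IP to pod IP and remember the flow."""
        if pkt.dst != self.service_ip:
            return pkt
        self.flows.add((pkt.src, self.pod_ip))
        return Packet(pkt.src, self.pod_ip)

    def reverse(self, pkt):
        """Reply path: restore the service IP as source for tracked flows."""
        if (pkt.dst, pkt.src) in self.flows:
            return Packet(self.service_ip, pkt.dst)
        return pkt


if __name__ == "__main__":
    nat = ServiceNat("10.0.58.158", "10.0.0.13")
    request = Packet("172.16.0.50", "10.0.58.158")
    to_pod = nat.forward(request)                      # what the pod receives
    reply = nat.reverse(Packet("10.0.0.13", "172.16.0.50"))
    print(request, to_pod, reply, sep="\n")
```

Seen from a capture on the node, the request shows up once addressed to the service and once addressed to the pod, and the reply shows up once from the pod and once from the service, which matches the pairs of identical packets observed in wireshark.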

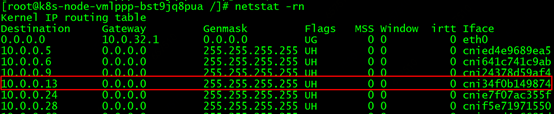

So which network interface does the pod send and receive packets through? Execute the command netstat -rn to view the network routing on node A, and we have the following findings:

Within the node, all routes to 10.0.0.13 point to the network interface cni34f0b149874. This interface is obviously a virtual network device created by the CNI network plug-in. To verify whether all of the pod's traffic goes through this interface, we request the service address from the client again and capture packets on node A in both the client dimension and the pod dimension. This time, for the pod-dimension capture, we replace the -i any parameter with -i cni34f0b149874. After analyzing and comparing the captures, we found that, as expected, every request packet from the client to the pod appears in the capture on cni34f0b149874, while no packets exchanged with the client were captured on any other network interface in the system. Our inference is therefore confirmed.

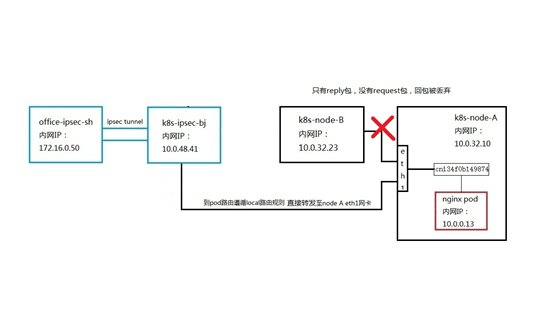

To sum up, when the client request is forwarded to the node where the pod is located, the data path is shown in the following figure:

Next, we explore the scenario of question 2, the one we care about most. We modify the NAT/LB subnet route so that the next hop to the service points to the newly created node B, as shown in the figure

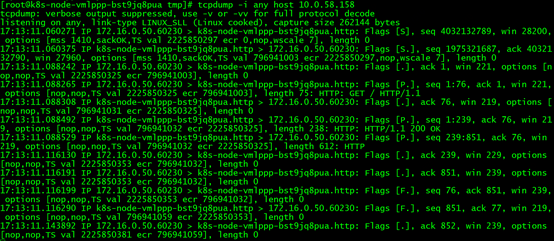

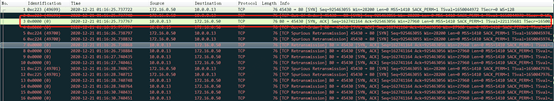

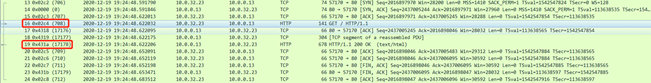

This time we need to capture packets on node B and node A at the same time. The client still uses curl to request the service address. On the forwarding node, node B, we first execute tcpdump -i eth0 host 10.0.58.158 to capture packets in the service dimension. The request packets from the client to the service are captured, but there are no return packets from the service, as shown in the figure:

You may have doubts, why is 10.0.58.158 captured, but the destination displayed in the captured packet is the node name?

This is related to how the service is implemented. After a service is created in the cluster, the cluster network component picks a random port on each node to listen on and configures forwarding rules in the node's iptables. Any request to the service IP that reaches the node is forwarded to that random port and then handled by the cluster network component. So accessing a service on a node really means accessing a port on that node. If you export the captured packets as a cap file, you can see that the destination IP of the request is still 10.0.58.158, as shown in the figure:

This also explains why a clusterIP can only be accessed by nodes or pods in the cluster: devices outside the cluster do not have the iptables rules created by the k8s network component and cannot translate the requested service address into a port on a node. Even if such a packet is sent into the cluster network, it is discarded, because the service's clusterIP does not actually exist on any node's network. (Another odd bit of knowledge gained!) Back to the problem itself: capturing service-related packets on the forwarding node shows that the service does not return packets to the client the way it did when the request was forwarded to the node hosting the pod. We then execute tcpdump -i any host 172.16.0.50 -w /tmp/fwd-node-client.cap to capture packets in the client dimension. The contents of the packets are as follows:

We found that after the client's request to the service arrives at node B, the service again performs DNAT and forwards the request to 10.0.0.13 on node A. However, the forwarding node never receives any packets from 10.0.0.13 back to the client. The client then retransmits the request packet several times, with no response.

So has node A received the client's request packet? Does the pod send packets back to the client?

We move to node A to capture packets. From the capture on node B we know that node A should only see traffic between the client IP and the pod IP, so we capture in these two dimensions. According to the earlier analysis, once a packet enters the node it reaches the pod through the virtual device cni34f0b149874. Traffic from node B to the pod should enter node A through its elastic network card eth1, not eth0. To verify this, we first execute tcpdump -i eth0 host 172.16.0.50 and tcpdump -i eth0 host 10.0.0.13; no packets were captured.

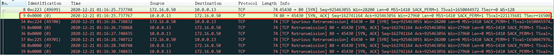

This shows that the packets do not pass through eth0. We then execute tcpdump -i eth1 host 172.16.0.50 -w /tmp/dst-node-client-eth1.cap and tcpdump -i cni34f0b149874 host 172.16.0.50 -w /tmp/dst-node-client-cni.cap to capture packets in the client dimension. Comparing the two captures shows that they are identical, which means that after packets enter node A through eth1, the system's routing forwards them to cni34f0b149874. The contents of the packets are as follows:

You can see that after the client sends a packet to the pod, the pod sends a return packet to the client. We execute tcpdump -i eth1 host 10.0.0.13 -w /tmp/dst-node-pod-eth1.cap and tcpdump -i cni34f0b149874 host 10.0.0.13 -w /tmp/dst-node-pod-cni.cap to capture packets in the pod dimension. The two captures are again identical, which means the pod's return packet to the client is sent through cni34f0b149874 and then leaves node A through the eth1 network card. The capture also shows that the pod sent its return packet to the client but never received the client's acknowledgment of it, which triggered retransmission.

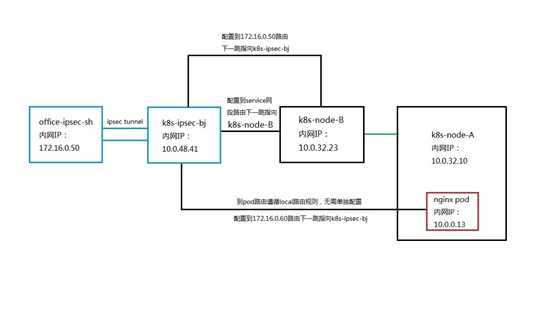

So if the pod's return packet has been sent, why does neither node B nor the client receive it? Looking at the route table of the pod subnet to which the eth1 NIC belongs, it suddenly became clear!

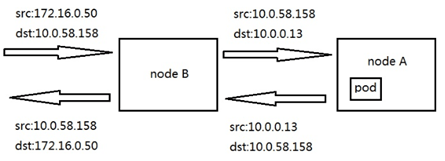

The pod's return packet to the client leaves node A through the eth1 network card. By normal DNAT rules, the packet should go back to the service port on node B, but because of the route table of eth1's subnet, it is "hijacked" straight to the host k8s-ipsec-bj. When the packet arrives at this host, it has not been translated back by the service: the return packet has source address 10.0.0.13 (the pod) and destination address 172.16.0.50, while the packet it answers had source address 172.16.0.50 and destination address 10.0.58.158. In other words, the destination address of the request does not match the source address of the reply. From the point of view of k8s-ipsec-bj, there is a reply packet from 10.0.0.13 to 172.16.0.50, but no request packet from 172.16.0.50 to 10.0.0.13 was ever seen. The cloud platform's virtual network discards a reply packet that has no matching request packet, to prevent network attacks based on address spoofing. As a result, the client never receives the return packet from 10.0.0.13 and cannot complete its request to the service. The packet path in this scenario is shown in the following figure:

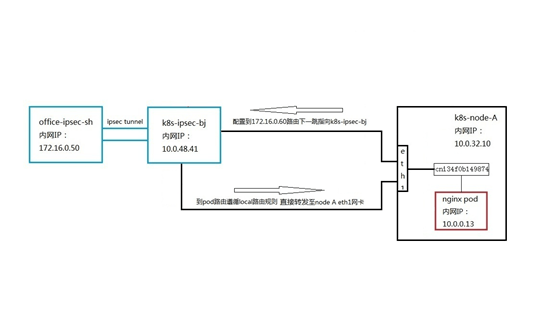

At this point, the reason why the client can successfully request the pod is also clear at a glance. The data path of the request pod is as follows:

The paths of the request packet and the return packet are the same, both pass through the k8s-ipsec-bj node and the source and destination IPs have not changed, so the pod can be connected.
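The difference between the two cases can be made concrete with a toy Python model of the "reply without a request is dropped" behavior described above. This is an illustrative sketch only: the `StatefulFilter` class is hypothetical, it tracks plain (source, destination) address pairs, and it does not claim to reproduce how the cloud platform's virtual network is actually implemented.

```python
class StatefulFilter:
    """Toy model: a reply passes only if the matching request was seen first."""

    def __init__(self):
        self.seen = set()  # (src, dst) of request packets observed so far

    def request(self, src, dst):
        """Record a request packet passing through this hop."""
        self.seen.add((src, dst))

    def reply_allowed(self, src, dst):
        """A reply src -> dst is valid only if a request dst -> src was seen."""
        return (dst, src) in self.seen


CLIENT, SERVICE, POD = "172.16.0.50", "10.0.58.158", "10.0.0.13"

if __name__ == "__main__":
    # Case 1: client requests the service; the reply arrives untranslated
    # from the pod because it bypassed the forwarding node.
    via_service = StatefulFilter()
    via_service.request(CLIENT, SERVICE)
    print("service request, pod reply:", via_service.reply_allowed(POD, CLIENT))

    # Case 2: client requests the pod directly; addresses never change.
    direct = StatefulFilter()
    direct.request(CLIENT, POD)
    print("pod request, pod reply:", direct.reply_allowed(POD, CLIENT))
```

In the first case the hop saw a request addressed to the service IP but a reply sourced from the pod IP, so the pair never matches; in the second case the request and reply use the same two addresses and the reply passes.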

At this point, sharp-eyed readers may be thinking: what if we modify the route table of the pod subnet that eth1 belongs to, so that packets destined for 172.16.0.50 are sent back to k8s-node-B instead of k8s-ipsec-bj? Wouldn't the return packets then go back the same way they came, and no longer be discarded?

Yes, as verified by our tests, this does allow the client to successfully request the service. But don't forget, the user also has a requirement that the client can directly access the backend pod. If the pod returns the packet to node B, what is the data path when the client requests the pod?

As the figure shows, after the client's request to the pod arrives at k8s-ipsec-bj, the destination is an address within the same VPC, so the request is forwarded directly to node A's eth1 network card by the local route. When the pod sends its return packet to the client, the route of eth1's subnet steers it to node B. Node B never received the client's request packet for the pod, so it hits the same problem of a reply packet without a request packet: the reply is discarded, and the client cannot reach the pod.

So far, we have figured out why the client request cannot successfully access the service when it is forwarded to a node where the service backend pod is not. So why is it possible to ping the service address even though the request for the port of the service fails at this time?

Our engineers inferred that, since the service performs DNAT and load balancing for the backend pods, an ICMP packet sent by the client to the service address should be answered directly by the service. That is, the service replies to the client's ping on behalf of the backend pod. To verify this inference, we create a new empty service in the cluster with no backend associated with it, as shown in the figure:

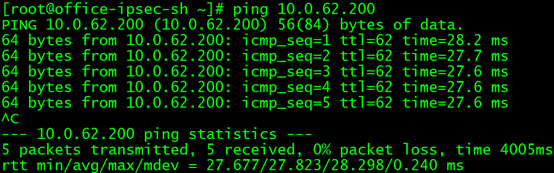

Then ping 10.0.62.200 on the client, the result is as follows:

Sure enough, the service can be pinged even though it has no backend pods. This proves that ICMP packets are answered by the service itself, so the problem that occurs when actually requesting a backend pod does not arise, and the ping succeeds.

Part 5: There is always a way out

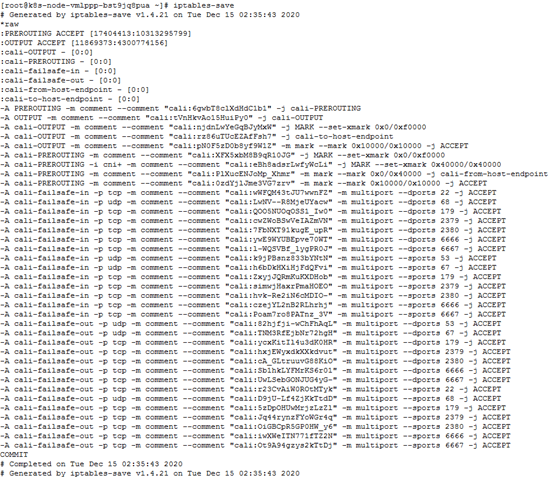

Now that we have taken great pains to find the reason for the access failure, we must find a way to solve it. In fact, as long as the pod can hide its own IP when it returns packets to the client across nodes, and present the service's IP to the outside instead, the packets will not be discarded. The principle is similar to SNAT (address translation based on the source IP). As an analogy, a LAN device without a public IP has only its own intranet IP. When it accesses the public network, it goes through a unified public egress, and the client IP seen from outside is the egress's public IP, not the device's intranet IP. To implement SNAT, the first thing that comes to mind is the iptables rules of the node's operating system. We execute the iptables-save command on node A, where the pod is located, to check the system's existing iptables rules.

Pay close attention, this is the key point!

You can see that the system has created nearly a thousand iptables rules, most of which are related to k8s. Focusing on the nat-type rules in the figure above, the following caught our attention:

- First look at the red box part of the rules

-A KUBE-SERVICES -m comment --comment "Kubernetes service cluster ip + port for masquerade purpose" -m set --match-set KUBE-CLUSTER-IP src,dst -j KUBE-MARK-MASQ

This rule matches packets against the KUBE-CLUSTER-IP set of cluster IP + port entries (with src,dst, the packet's source address and destination port are compared) and, for masquerade purposes, jumps to the KUBE-MARK-MASQ chain. Masquerade means address masquerading, which is used in NAT translation!

- Next, look at the rule in the blue box

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

This rule sets the mark 0x4000/0x4000 on the packet, flagging it as a packet that needs to be masqueraded.

- Finally, look at the rule in the yellow box

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE

This rule indicates that packets carrying the mark 0x4000/0x4000, that is, packets that need SNAT, are handed to the MASQUERADE target for address masquerading.
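The mark-and-match mechanism used by the last two rules can be sketched in a few lines of Python. This is only a model of the bit arithmetic, not of iptables itself: the function names are made up for illustration, and the example starting mark of 0x1 is an assumption.

```python
MASQ_VALUE = 0x4000
MASQ_MASK = 0x4000


def set_xmark(mark: int, value: int, mask: int) -> int:
    """Model of `-j MARK --set-xmark value/mask`:
    clear the bits selected by mask, then XOR value into the mark."""
    return (mark & ~mask & 0xFFFFFFFF) ^ value


def mark_matches(mark: int, value: int, mask: int) -> bool:
    """Model of `-m mark --mark value/mask`:
    AND the packet mark with mask, then compare with value."""
    return (mark & mask) == value


def postrouting_action(mark: int) -> str:
    """Model of the KUBE-POSTROUTING rule: only marked packets are masqueraded."""
    if mark_matches(mark, MASQ_VALUE, MASQ_MASK):
        return "MASQUERADE"
    return "no SNAT"


if __name__ == "__main__":
    original_mark = 0x0001  # assumed pre-existing mark bits on the packet
    marked = set_xmark(original_mark, MASQ_VALUE, MASQ_MASK)
    print(hex(marked), postrouting_action(marked))
    print(hex(original_mark), postrouting_action(original_mark))
```

The point of the mask is that other mark bits on the packet are left untouched, so the 0x4000 bit can act as a dedicated "needs SNAT" flag. A packet that never hits KUBE-MARK-MASQ never gets the bit and therefore passes KUBE-POSTROUTING without being masqueraded.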

It seems that these three rules do exactly what we need iptables to do for us, but the earlier tests make it clear that they did not take effect. Why? Is there a parameter in the k8s network components that controls whether SNAT is performed on packets when the clusterIP is accessed?

To answer this, we need to study the working modes and parameters of kube-proxy, the component responsible for proxying and forwarding traffic between services and pods. We already know that a service load-balances and proxies requests to its backend pods; this function relies on kube-proxy. As its name suggests, it is a network proxy component, and it runs as a pod on every k8s node. Initially, kube-proxy worked in userspace (user space proxy) mode: when a service was accessed through clusterIP + port, iptables rules forwarded the request to a corresponding random port on the node, where kube-proxy took over the request and forwarded it to the appropriate backend pod according to its internal routing and scheduling algorithm. In this mode the kube-proxy process was a real TCP/UDP proxy, similar to HAProxy. Since iptables mode replaced it as the default in k8s 1.2, we will not go into details here; interested readers can study it on their own.

Iptables mode became the default mode of kube-proxy in version 1.2. In this mode kube-proxy itself no longer acts as a proxy; instead, it forwards traffic from services to pods by creating and maintaining the corresponding iptables rules. However, implementing a proxy with iptables rules has unavoidable drawbacks: once a cluster contains a large number of services and pods, the number of iptables rules grows sharply, which significantly degrades forwarding performance and in extreme cases can even lead to lost rules.

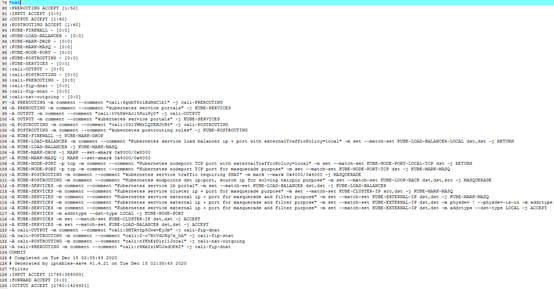

To address the drawbacks of iptables mode, K8S introduced IPVS (IP Virtual Server) mode starting with version 1.8. IPVS is designed specifically for high-performance load balancing and uses a more efficient hash table data structure, which gives large clusters better scalability and performance. It also supports more sophisticated load-balancing scheduling algorithms than iptables mode. The kube-proxy of the managed cluster uses IPVS mode.

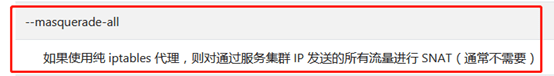
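The scalability difference between the two modes can be illustrated with a purely conceptual Python sketch: a sequentially evaluated rule list versus a hash-table lookup. The service and backend addresses below are invented for the example, and the functions are a toy model rather than how kube-proxy, iptables, or IPVS are actually implemented.

```python
# Hypothetical service table: (cluster IP, port) -> backend pod address.
SERVICES = {
    (f"10.0.{i // 250}.{i % 250 + 1}", 80): f"10.1.{i // 250}.{i % 250 + 1}:8080"
    for i in range(1000)
}
# The same data as an ordered rule list, evaluated top to bottom.
RULES = list(SERVICES.items())


def lookup_linear(rules, ip, port):
    """iptables-style: walk the rules in order until one matches.
    Returns the backend (or None) and the number of rules examined."""
    checked = 0
    for (rule_ip, rule_port), backend in rules:
        checked += 1
        if rule_ip == ip and rule_port == port:
            return backend, checked
    return None, checked


def lookup_hashed(table, ip, port):
    """IPVS-style: one hash-table lookup, independent of table size."""
    return table.get((ip, port)), 1


if __name__ == "__main__":
    # The last service in the list is the worst case for the linear walk.
    print(lookup_linear(RULES, "10.0.3.250", 80))
    print(lookup_hashed(SERVICES, "10.0.3.250", 80))
```

In this model the cost of the linear walk grows with the number of services, while the hashed lookup stays constant, which is the intuition behind the performance claim above.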

However, IPVS itself cannot provide functions such as packet filtering, address masquerading, and SNAT, so in scenarios that require them, IPVS must be used together with iptables rules. Wait, address masquerading and SNAT: aren't those exactly what we saw in the iptables rules earlier? In other words, as long as address masquerading and SNAT are not enabled, traffic does not go through the corresponding iptables rules, and once some parameter enables them, the iptables rules we saw earlier will take effect! So we went to the official Kubernetes website to look up the kube-proxy parameters and made an exciting discovery:

Suddenly, there it was! The siege lion's sixth sense told us that the --masquerade-all parameter was the key to solving our problem!

Part 6: There Are Always More Solutions Than Difficulties

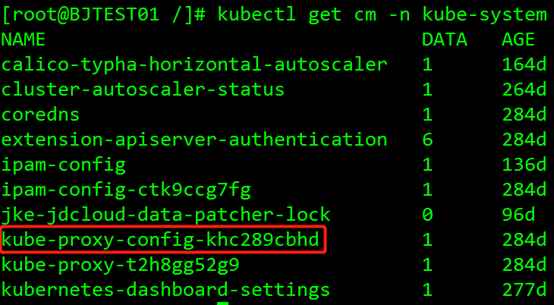

We decided to test enabling the --masquerade-all parameter. kube-proxy runs as a pod on every node in the cluster, and its configuration is mounted into the pod as a configmap. We ran kubectl get cm -n kube-system to view the kube-proxy configmap, as shown in the figure:

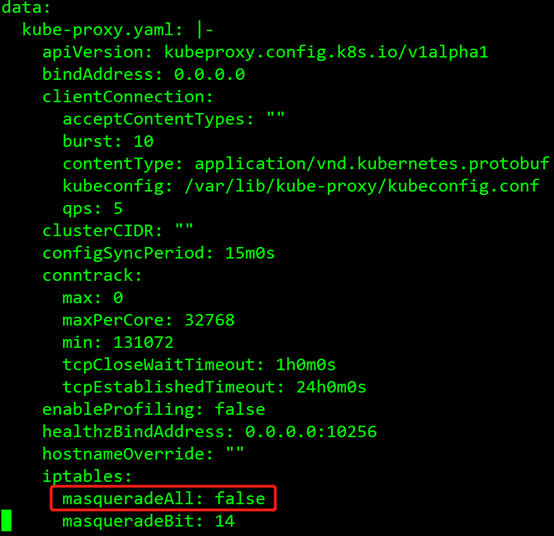

The configmap in the red box holds the kube-proxy configuration. Run kubectl edit cm kube-proxy-config-khc289cbhd -n kube-system to edit it, as shown in the figure:

We found the masqueradeALL parameter, which defaults to false, changed it to true, and saved the modification.

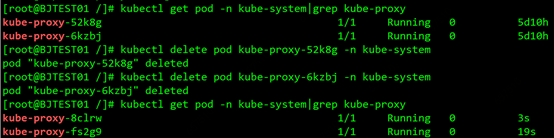

For the configuration to take effect, the current kube-proxy pods must be deleted one by one. The daemonset automatically rebuilds each pod, the rebuilt pod mounts the modified configmap, and the masqueradeALL function is enabled, as shown in the figure:

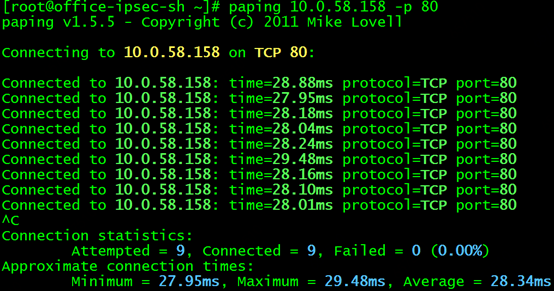
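The one-by-one deletion can be scripted. The following is a minimal sketch, assuming kubectl is already configured for the cluster; the helper names and the pod names in the example are hypothetical, and the real pod names would come from the cluster itself.

```python
import subprocess
from typing import Callable, List, Sequence


def delete_pod_command(pod: str, namespace: str = "kube-system") -> List[str]:
    """Build the kubectl command that deletes a single pod."""
    return ["kubectl", "delete", "pod", pod, "-n", namespace]


def run_kubectl(cmd: List[str]) -> None:
    """Run one command and raise if kubectl reports an error."""
    subprocess.run(cmd, check=True)


def restart_pods(pods: Sequence[str], run: Callable[[List[str]], None]) -> List[List[str]]:
    """Delete the given pods strictly one after another.
    `run` executes a single command; pass `run_kubectl` for real use."""
    executed = []
    for pod in pods:
        cmd = delete_pod_command(pod)
        run(cmd)  # returns only after this pod's deletion command finishes
        executed.append(cmd)
    return executed


if __name__ == "__main__":
    # Dry run with hypothetical pod names: print instead of executing.
    restart_pods(["kube-proxy-aaaaa", "kube-proxy-bbbbb"], run=print)
```

Note that this sketch does not wait for the daemonset to bring the replacement pod up before moving on to the next node; in a real cluster it would be prudent to check that each rebuilt pod is running first.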

The exciting moment has arrived. We pointed the route for accessing the service to node B and then ran paping 10.0.58.158 -p 80 on the Shanghai client to observe the result (rubbing hands expectantly):

At this sight, the siege lion could not help but shed tears of joy...

We then tested curl http://10.0.58.158, and it succeeded as well! Awesome~

Further tests showed that accessing the backend pod directly, and forwarding the request to the node where the pod is located, both work without any problem. At this point, the customer's requirement has finally been met, and we can breathe a sigh of relief!

Finale: Knowing Why

Although the problem has been solved, our investigation is not over yet. After the masqueradeALL parameter is enabled, how does the service perform SNAT on the packets so that the earlier packet loss is avoided? Once again, we turn to packet capture for analysis.

First, we analyze the scenario in which the request is forwarded to a node other than the one where the pod is located. While the client requests the service, we capture packets for the client IP on the node where the pod is located, and nothing is captured.

This shows that after the parameter is enabled, requests to the backend pod are no longer initiated from the client IP.

Capturing packets for the pod IP on the forwarding node shows the packets exchanged between the service port of the forwarding node and the pod.

This means that the pod did not return packets directly to the client 172.16.0.50. In effect, the client and the pod are unaware of each other's existence, and all interaction is forwarded through the service.

Next, we capture packets for the client on the forwarding node. The captured packets are as follows:

At the same time, we capture packets for the pod on the node where the pod is located. The captured packets are as follows:

As we can see, after the forwarding node receives the curl request packet with sequence number 708, the node where the pod is located receives a request packet with the same sequence number, but its source/destination IPs have been translated from 172.16.0.50/10.0.58.158 to 10.0.32.23/10.0.0.13. Here 10.0.32.23 is the intranet IP of the forwarding node, or more precisely the random port on that node corresponding to the service, so the translation can be understood as turning the source/destination IPs into 10.0.58.158/10.0.0.13. The return path works the same way: the pod sends a packet with sequence number 17178, the forwarding node sends a packet with the same sequence number to the client, and the source/destination IPs are translated from 10.0.0.13/10.0.58.158 to 10.0.58.158/172.16.0.50.
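The address rewriting seen in these captures can be reproduced with a small Python model. It uses the IPs from the captures, ignores ports for brevity, and shows the forwarding node's own IP on the pod side (as it appears in the capture). The class and its methods are invented for illustration and are not a real API; the dictionary only stands in for the kernel's connection tracking.

```python
from dataclasses import dataclass

CLIENT = "172.16.0.50"
SERVICE = "10.0.58.158"
NODE = "10.0.32.23"  # intranet IP of the forwarding node
POD = "10.0.0.13"


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


class ForwardingNode:
    """Toy model of a node that applies DNAT and SNAT to service traffic."""

    def __init__(self, node_ip: str, service_ip: str, pod_ip: str):
        self.node_ip = node_ip
        self.service_ip = service_ip
        self.pod_ip = pod_ip
        # Expected reply (src, dst) -> addresses to restore on the way back.
        self.conntrack = {}

    def forward_request(self, pkt: Packet) -> Packet:
        if pkt.dst != self.service_ip:
            return pkt  # not service traffic, leave untouched
        # DNAT: service IP -> pod IP; SNAT: client IP -> node IP.
        out = Packet(src=self.node_ip, dst=self.pod_ip)
        self.conntrack[(out.dst, out.src)] = (pkt.dst, pkt.src)
        return out

    def forward_reply(self, pkt: Packet) -> Packet:
        restored = self.conntrack.get((pkt.src, pkt.dst))
        if restored is None:
            return pkt  # unknown flow, leave untouched
        # Reverse both translations: the reply appears to come from the service.
        return Packet(src=restored[0], dst=restored[1])


if __name__ == "__main__":
    node = ForwardingNode(NODE, SERVICE, POD)
    to_pod = node.forward_request(Packet(src=CLIENT, dst=SERVICE))
    to_client = node.forward_reply(Packet(src=to_pod.dst, dst=to_pod.src))
    print(to_pod)
    print(to_client)
```

Because the reply reaching the client carries the service IP as its source, it matches the connection the client opened, which is why the packet is no longer discarded.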

From these observations, we can see that the service performs SNAT toward both the client and the backend. This can be understood as load balancing with client source IP pass-through turned off: the client and the backend are unaware of each other's existence and know only the address of the service. The data path in this scenario is as follows:

Requests sent directly to the pod involve no SNAT translation, exactly as when the masqueradeALL parameter is not enabled, so we will not analyze that scenario again.

When the client request is forwarded to the node where the pod is located, the service still performs SNAT translation, but the process is completed inside the node. From the earlier analysis we also know that in this case, whether or not SNAT is performed has no effect on the access result.

Summary

At this point, we can offer the customer the best solution available at the current stage. Of course, in a production environment, for the sake of business security and stability, we do not recommend exposing clusterIP-type services and pods directly outside the cluster. In addition, it has not been tested or verified whether enabling the masqueradeALL parameter affects cluster network performance or other functions, so the risk of enabling it in production is unknown and should be treated with caution. By working through this customer requirement, we gained a better understanding of the service and pod network mechanisms of a K8S cluster and of the masqueradeALL parameter of kube-proxy, which will be of great benefit to our future learning and operations work.
