1 Introduction

Docker is an open-source engine that makes it easy to create lightweight, portable, self-sufficient containers for any application. Developers build and test containers on their laptops and then deploy them at scale in production, on VMs (virtual machines), bare metal, OpenStack clusters, and other underlying platforms.

Docker's goals:

Provide a lightweight and simple modeling approach;

Separate responsibilities logically;

Enable a fast, efficient development life cycle;

Encourage a service-oriented architecture in which each container runs a single application.

A Docker container is essentially a process running on the host machine. Docker isolates resources with namespaces, limits resource usage with cgroups, and makes file operations efficient through copy-on-write.

Cgroups is a mechanism provided by the Linux kernel that can group (or separate) tasks and their child tasks according to resource requirements, providing a unified framework for managing system resources. In other words, cgroups can limit and record the physical resources (CPU, memory, I/O, etc.) used by groups of tasks. They are the foundation of container virtualization and a cornerstone of virtualization management tools such as Docker.

Cgroups provide the following four functions:

Resource limits: cgroups can limit the total amount of resources used by tasks.

Prioritization: by controlling how many CPU time slices and how much disk I/O bandwidth each group receives, cgroups effectively control the priority of tasks.

Resource accounting: cgroups can record system resource usage, such as CPU time.

Task control: cgroups can perform operations such as suspending and resuming tasks.
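To make resource limits and prioritization more concrete, here is a minimal Python sketch assuming the cgroup v2 interface files `cpu.max` (contents `"$QUOTA $PERIOD"` in microseconds, where the quota may be `max`) and `cpu.weight` (default 100). On a cgroup v2 host these live under `/sys/fs/cgroup/<group>/`; Docker's `--cpus` option, for instance, is implemented on top of this quota/period mechanism. The sketch only parses strings and does the arithmetic, so it runs anywhere; the function names are hypothetical.

```python
from typing import Optional


def cpu_limit_from_cpu_max(content: str) -> Optional[float]:
    """Convert a cgroup v2 cpu.max value such as "50000 100000" into a CPU count.

    The first field is the quota (CPU time allowed per period, or "max" for
    no limit); the second is the period. Returns None when unlimited.
    """
    quota, period = content.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def cpu_shares_under_contention(weights: dict[str, int]) -> dict[str, float]:
    """Split CPU time in proportion to cpu.weight when every group is busy.

    Simplification: the kernel only enforces this ratio while groups compete;
    idle CPU capacity can still be used by any group.
    """
    total = sum(weights.values())
    return {name: weight / total for name, weight in weights.items()}


print(cpu_limit_from_cpu_max("50000 100000"))  # 0.5
print(cpu_limit_from_cpu_max("max 100000"))    # None
print(cpu_shares_under_contention({"web": 200, "batch": 100, "db": 100}))
```

The weight example shows why weights express priority rather than a hard limit: "web" gets half the CPU only when all three groups want CPU at the same time.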

2 Docker and virtual machines

The Docker daemon works directly with the host operating system to allocate resources to individual containers, and it isolates containers both from the host operating system and from each other. Virtual machines typically take minutes to start, while Docker containers can start in milliseconds. Because there is no bloated guest operating system, Docker saves a great deal of disk space and other system resources.

Despite these advantages, there is no need to dismiss virtual machine technology entirely, because the two suit different scenarios. Virtual machines are better at fully isolating an entire operating environment; for example, cloud service providers often use virtual machines to isolate different users. Docker is usually used to isolate different applications, such as the front end, back end, and database, much like a "sandbox".

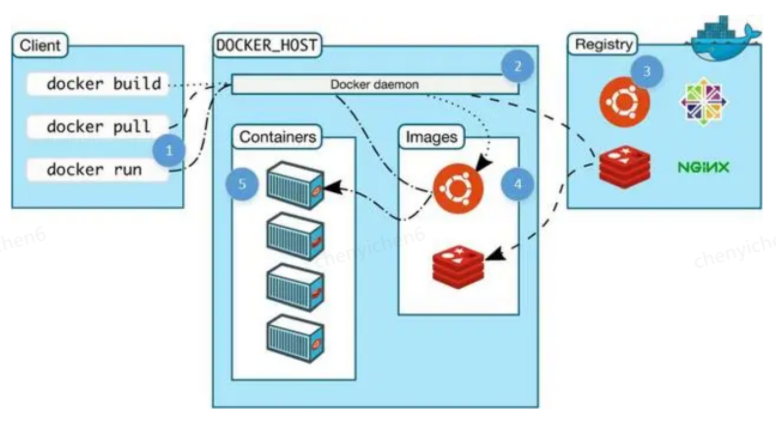

3 Docker basics

3.1 Three core concepts of Docker and two slogans:

Image

Container

Repository

Two slogans:

Build, Ship and Run

Build once, Run anywhere

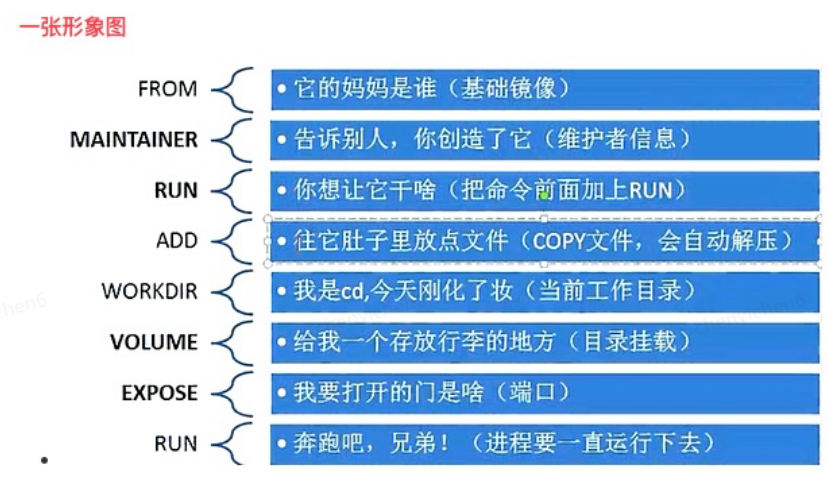

3.2 Understanding Dockerfile

A Dockerfile is simply the source file for building an image: it lists, in order, the instructions executed while the image is built. Docker reads the Dockerfile and builds the specified image automatically.

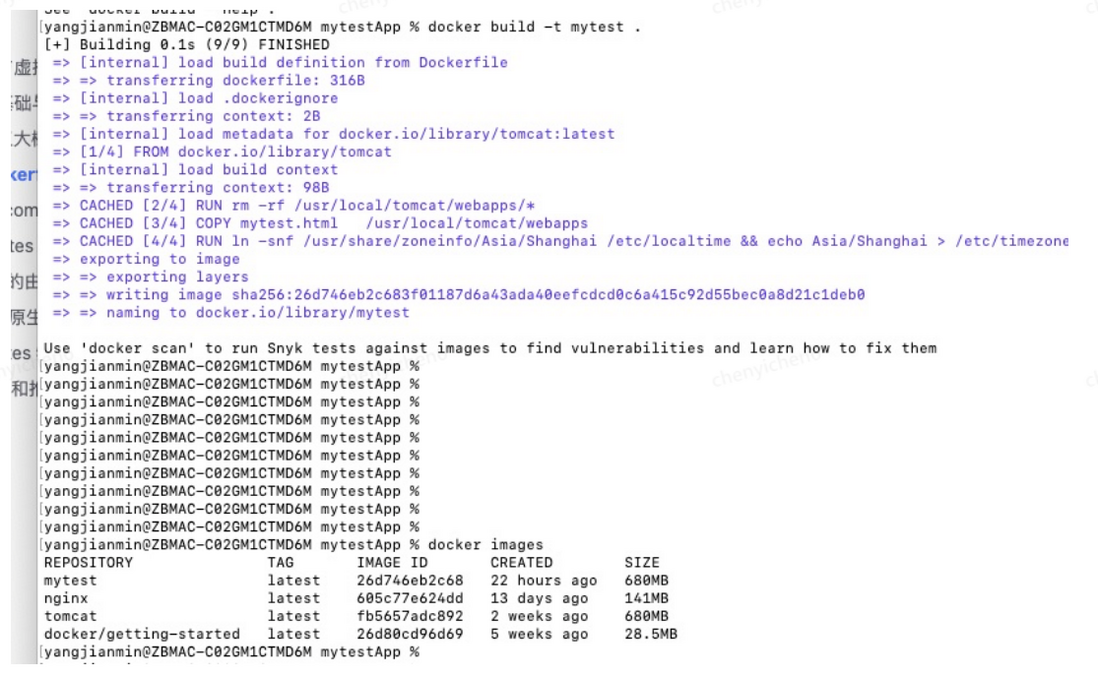

Besides building a custom image from a Dockerfile, you can pull images from a public registry such as Docker Hub (https://hub.docker.com/), or modify a container started from an existing image and save the result as a new image with docker commit. The following is a custom Dockerfile, followed by the process of building the mytest image from it:

from tomcat

MAINTAINER yangjianmin@jd.com

RUN rm -rf /usr/local/tomcat/webapps/*

COPY jhjkhkj.zip /usr/local/tomcat/webapps

ENV TZ=Asia/Shanghai

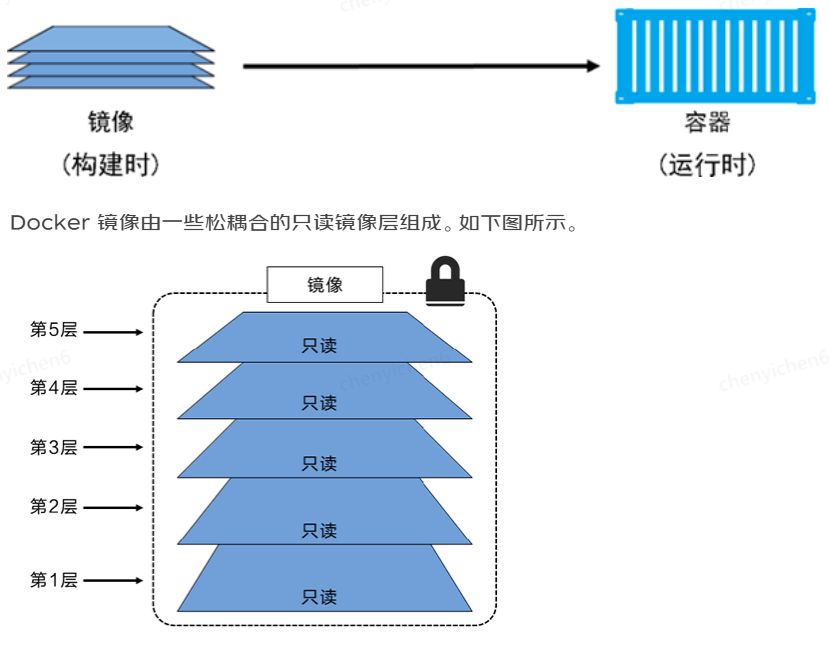

RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone 3.3 Mirroring and Layering Mirroring is composed of multiple layers, and after each layer is superimposed, it looks like an independent object from the outside. Inside the image is a stripped-down operating system (OS) that also contains files and dependencies necessary for the application to run. Because containers are designed to be fast and small, images are usually small. An image can be understood as a build-time structure, and a container can be understood as a run-time structure.
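The relationship between read-only image layers, a container's writable layer, and copy-on-write can be sketched in a few lines of Python. This is a minimal in-memory model, assuming layers are plain dicts from paths to contents and using `None` as a deletion marker; Docker's overlay2 storage driver relies on OverlayFS, which uses a similar "whiteout" idea, but `LayeredFS` and its methods are illustrative names, not Docker's storage API. The example layers loosely mirror the Dockerfile above (removing the default webapp, setting the time zone).

```python
from typing import Optional

# A layer maps file paths to contents; None marks a deletion ("whiteout").
Layer = dict[str, Optional[str]]


class LayeredFS:
    """Merged view of read-only image layers plus one writable container layer."""

    def __init__(self, image_layers: list[Layer]) -> None:
        self._image_layers = image_layers  # bottom layer first; never modified
        self._upper: Layer = {}            # this container's writable layer

    def read(self, path: str) -> Optional[str]:
        # Search from the top-most layer down; the first layer mentioning the
        # path wins, and a None entry means an upper layer deleted the file.
        for layer in [self._upper, *reversed(self._image_layers)]:
            if path in layer:
                return layer[path]
        return None

    def write(self, path: str, content: str) -> None:
        # Copy-on-write: changes land only in the container's own layer,
        # so other containers built from the same image are unaffected.
        self._upper[path] = content

    def delete(self, path: str) -> None:
        # Hide the file in the merged view without touching image layers.
        self._upper[path] = None


# Two containers sharing one image (paths and contents are illustrative).
base = {"/etc/timezone": "Etc/UTC", "/usr/local/tomcat/webapps/ROOT/index.jsp": "default"}
app = {"/usr/local/tomcat/webapps/ROOT/index.jsp": None, "/etc/timezone": "Asia/Shanghai"}
c1, c2 = LayeredFS([base, app]), LayeredFS([base, app])
c1.write("/etc/timezone", "Europe/Berlin")
print(c1.read("/etc/timezone"))  # Europe/Berlin
print(c2.read("/etc/timezone"))  # Asia/Shanghai
print(c1.read("/usr/local/tomcat/webapps/ROOT/index.jsp"))  # None
```

Because the image layers are shared and never written, starting another container costs only a new, initially empty upper layer.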

Taking my local nginx image as an example, the docker image inspect command shows how the image is layered:

```
% docker image inspect nginx:latest
[
    {
        "Id": "sha256:605c77e624ddb75e.....9dc3a85",
        "RepoTags": [
            "nginx:latest"
        ],
        ... (some content omitted) ...
        "RootFS": {
            "Type": "layers",
            "Layers": [
                "sha256:2edcec3590a4ec7f40.....41ef9727da6c851f",
                "sha256:e379e8aedd4d72bb4c.....80179e8f02421ad8",
                "sha256:b8d6e692a25e11b0d3.....e68cbd7fda7f70221",
                "sha256:f1db227348d0a5e0b9.....cbc31f05e4ef8df8c",
                "sha256:32ce5f6a5106cc637d.....75dd47cbf19a4f866da",
                "sha256:d874fd2bc83bb3322b.....625908d68e7ed6040a6"
            ]
        },
        "Metadata": {
            "LastTagTime": "0001-01-01T00:00:00Z"
        }
    }
]
```

As shown, the latest nginx image I pulled from the registry consists of six layers, and docker pull also reports its progress layer by layer. To keep the number of image layers small, combine RUN commands in a Dockerfile wherever possible, because each RUN instruction (like COPY and ADD) adds one layer.
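The effect of merging RUN commands can be checked with a small Python sketch that counts the Dockerfile instructions that add filesystem layers (RUN, COPY and ADD; instructions such as ENV or LABEL only change image metadata). It joins backslash-continued lines and skips comment lines, but it is a simplification rather than a real Dockerfile parser: heredocs, escape directives and multi-stage builds are ignored, and the function names are hypothetical.

```python
LAYER_INSTRUCTIONS = {"RUN", "COPY", "ADD"}  # instructions that add filesystem layers


def logical_lines(dockerfile: str) -> list[str]:
    """Join backslash-continued lines; drop blank lines and comment lines."""
    lines: list[str] = []
    current = ""
    for raw in dockerfile.splitlines():
        stripped = raw.strip()
        if not stripped or stripped.startswith("#"):
            continue
        if stripped.endswith("\\"):
            current += stripped[:-1] + " "  # continuation: keep accumulating
        else:
            lines.append(current + stripped)
            current = ""
    if current.strip():
        lines.append(current.strip())
    return lines


def count_layer_instructions(dockerfile: str) -> int:
    """Count RUN/COPY/ADD instructions, i.e. layers added on top of the base image."""
    return sum(
        1
        for line in logical_lines(dockerfile)
        if line.split(maxsplit=1)[0].upper() in LAYER_INSTRUCTIONS
    )


separate = """FROM debian
RUN apt-get update
RUN apt-get install -y curl
RUN rm -rf /var/lib/apt/lists/*
"""

merged = """FROM debian
RUN apt-get update \\
    && apt-get install -y curl \\
    && rm -rf /var/lib/apt/lists/*
"""

print(count_layer_instructions(separate))  # 3
print(count_layer_instructions(merged))    # 1
```

Merging also matters for image size, not just layer count: a file deleted by a later RUN still occupies space in the earlier layer that created it, whereas cleaning up inside the same RUN keeps it out of the image entirely.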

3.4 Image operations

docker images: lists local images. TAG is comparable to a jar package version: it identifies the version of the image.

docker run -d -p 91:80 nginx: starts a container from the nginx image. -d runs the container in the background (detached mode) and prints only its container ID; -p maps a port on the host to a port inside the container (here host port 91 to container port 80), exposing the container's port to the outside world. Requests to port 91 on the host then reach the nginx that was just started.

docker ps: shows the status of running containers.

This article does not cover Docker's commands in detail; interested readers can explore them on their own.
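The `-p` value follows the form `[ip:]hostPort:containerPort[/protocol]`, and `docker ps` reports the result as `hostIp:hostPort->containerPort/protocol`. A minimal Python sketch of how such a spec breaks down, assuming only that IPv4 form (Docker also accepts port ranges, a bare container port and IPv6 addresses, which this sketch rejects); `parse_publish` and `PortMapping` are hypothetical helpers, not part of the Docker CLI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortMapping:
    host_ip: str
    host_port: int
    container_port: int
    protocol: str

    def __str__(self) -> str:
        # Same shape as the PORTS column printed by `docker ps`.
        return f"{self.host_ip}:{self.host_port}->{self.container_port}/{self.protocol}"


def parse_publish(spec: str) -> PortMapping:
    """Parse a -p value of the form [ip:]hostPort:containerPort[/protocol] (IPv4 only)."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 2:
        host_ip = "0.0.0.0"  # no address given: bind on all interfaces
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")
    return PortMapping(host_ip, int(host_port), int(container_port), protocol or "tcp")


print(parse_publish("91:80"))                  # 0.0.0.0:91->80/tcp
print(parse_publish("127.0.0.1:8053:53/udp"))  # 127.0.0.1:8053->53/udp
```

Binding to `127.0.0.1` instead of the default all-interfaces address is a common way to publish a port to the local machine only.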

```
% docker images
REPOSITORY               TAG       IMAGE ID       CREATED        SIZE
mytest                   latest    26d746eb2c68   26 hours ago   680MB
nginx                    latest    605c77e624dd   13 days ago    141MB
tomcat                   latest    fb5657adc892   2 weeks ago    680MB
docker/getting-started   latest    26d80cd96d69   5 weeks ago    28.5MB
yangjianmin@192 ~ % docker run -d -p 91:80 nginx
dcf193ebf5dd1b3267eddff37158535036918451938c4c90f98d2b12edf6c608
yangjianmin@192 ~ % docker ps
CONTAINER ID   IMAGE     COMMAND                  CREATED          STATUS         PORTS                NAMES
dcf193ebf5dd   nginx     "/docker-entrypoint.…"   10 seconds ago   Up 9 seconds   0.0.0.0:91->80/tcp   nifty_stonebraker
```

4 docker-compose, docker-machine

Compose is a tool for defining and running multi-container Docker applications. With Compose, you configure all the services your application needs in a YAML file, and then create and start all of them from that configuration with a single command.

Compose lets users define a set of related application containers as a single project in one docker-compose.yml template file (YAML format).

The original docker-compose (Compose v1) was written in Python, while the current Compose v2, invoked as docker compose, is written in Go; both manage containers by calling the API provided by the Docker Engine. Therefore, Compose can be used for orchestration on any platform that supports the Docker API.

docker-compose.yml

```yaml
# Current Compose (the Compose Specification) expects services under a top-level services key
services:
  mysql:
    image: daocloud.io/yjmyzz/mysql-osx:latest
    volumes:
      - ./mysql/db:/var/lib/mysql
    ports:
      - "3306:3306"
    environment:
      - MYSQL_ROOT_PASSWORD=123456

  service1:
    image: java:latest
    volumes:
      - ./java:/opt/app
    expose:
      - "8080"
    #ports:
    #  - "9081:8080"
    links:
      - mysql:default
    command: java -jar /opt/app/spring-boot-rest-framework-1.0.0.jar

  service2:
    image: java:latest
    volumes:
      - ./java:/opt/app
    expose:
      - "8080"
    #ports:
    #  - "9082:8080"
    links:
      - mysql:default
    command: java -jar /opt/app/spring-boot-rest-framework-1.0.0.jar

  nginx1:
    image: nginx:latest
    volumes:
      - ./nginx/html:/usr/share/nginx/html:ro
      - ./nginx/nginx.conf:/etc/nginx/nginx.conf:ro
      - ./nginx/conf.d:/etc/nginx/conf.d:ro
    #expose:
    #  - "80"
    ports:
      - "80:80"
    links:
      - service1:service1
      - service2:service2
```

The file above can be regarded as a batch version of docker run. For each service it specifies, at minimum, the image name and tag (nginx:latest), mounted paths (volumes), exposed ports (expose, which only makes a port reachable from other containers and does not map it to the host), port mappings (ports), and links to other services (links). You can build and start everything with commands such as docker compose build and docker compose up. The resulting process is not shown here; interested readers can try it locally.
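To illustrate the "batch version of docker run" idea, here is a rough Python sketch that turns one service entry (already loaded into a dict, so no YAML library is needed) into an approximately equivalent `docker run` argument list, using the real `-v`, `-p`, `--expose`, `-e` and `--link` flags. The mapping is my own approximation: real Compose also creates a project network, names containers after the project, and accepts dict-style `environment`, none of which is modeled here. `compose_service_to_docker_run` and the project directory are hypothetical.

```python
import os
import shlex


def compose_service_to_docker_run(name: str, service: dict, project_dir: str) -> list[str]:
    """Approximate the docker run command for one Compose service (sketch only)."""
    args = ["docker", "run", "-d", "--name", name]
    for volume in service.get("volumes", []):
        source, sep, rest = volume.partition(":")
        if source.startswith("."):
            # Relative bind-mount sources are resolved against the project directory.
            source = os.path.normpath(os.path.join(project_dir, source))
        args += ["-v", source + sep + rest]
    for port in service.get("ports", []):
        args += ["-p", str(port)]         # publish on the host
    for port in service.get("expose", []):
        args += ["--expose", str(port)]   # reachable by other containers only
    for env in service.get("environment", []):
        args += ["-e", env]               # list form "KEY=value" only
    for link in service.get("links", []):
        args += ["--link", link]
    args.append(service["image"])
    if "command" in service:
        args += shlex.split(service["command"])
    return args


nginx1 = {
    "image": "nginx:latest",
    "volumes": ["./nginx/html:/usr/share/nginx/html:ro"],
    "ports": ["80:80"],
    "links": ["service1:service1", "service2:service2"],
}
print(shlex.join(compose_service_to_docker_run("nginx1", nginx1, "/home/me/demo")))
```

Writing one such command per service by hand, in the right order, is exactly the bookkeeping that docker compose up takes over.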

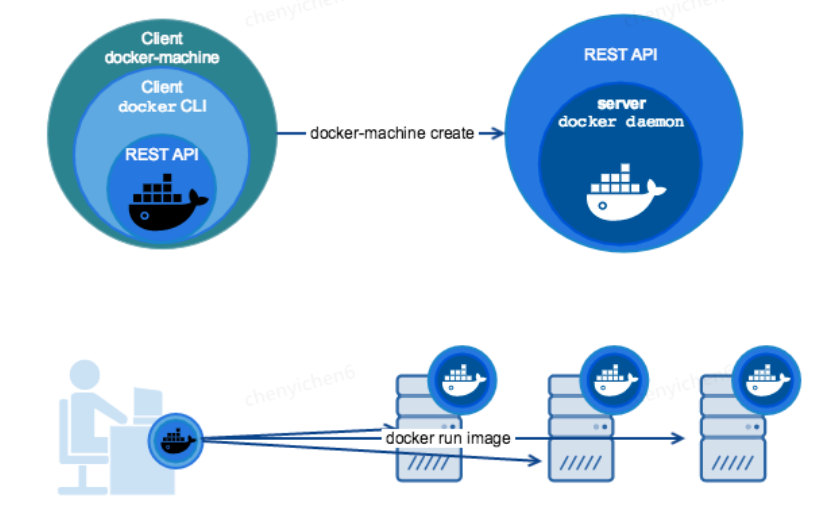

4.1 docker-machine

Docker Machine is a tool for provisioning and managing Dockerized hosts (hosts with Docker Engine installed). You can use Machine to install Docker Engine on one or more virtual systems. These systems can be local (as when Machine installs and runs Docker Engine in VirtualBox on Mac or Windows) or remote (as when Machine provisions a Dockerized host on a cloud provider). A Dockerized host itself is sometimes referred to as a managed "machine".

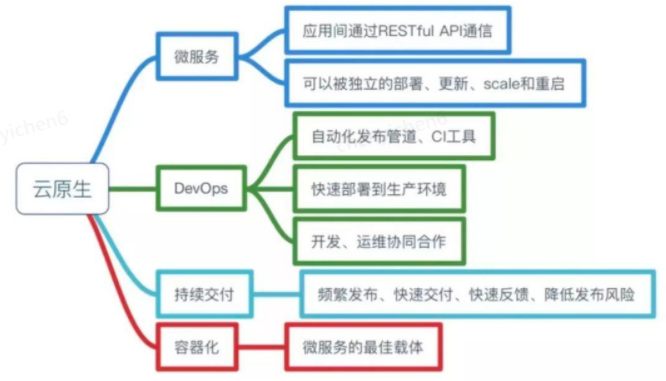

5 Cloud native

The topic of cloud native is broad; the explanation here covers only selected points and has clear limitations.

Cloud native can literally be split into two parts: cloud and native. Cloud is the opposite of local: traditional applications had to run on local servers, whereas many of today's applications run in the cloud, which includes IaaS, PaaS, and SaaS.

Native means "born for it": from the moment we start designing an application, we assume it will run in a cloud environment and make full use of the advantages of cloud resources, such as the elasticity and distributed nature of cloud services.

Cloud Native = Microservices + DevOps + Continuous Delivery + Containerization

6 Kubernetes (K8S)

Docker advocates "container as a service". In large, complex real-world scenarios, however, it runs into problems such as managing many containers, scheduling them, and scaling clusters. What was urgently needed was a container management system that provides more advanced and flexible management of Docker and its containers.

Thus, Kubernetes appeared.

6.1 The origin of the name K8S

The name comes from a naming habit in Silicon Valley: abbreviating a word as its first letter plus the number of letters skipped (jokingly, so that grandparents cannot understand it). For example, Amazon's A9 stands for "Algorithms", and kubernetes is abbreviated as k8s: starting from k, skip 8 letters and you reach s.
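The k8s-style rule (first letter, number of letters in between, last letter) is simple enough to write down directly. `numeronym` is an illustrative helper; note that A9 follows a looser variant that keeps only the first letter and the count.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as first letter + count of middle letters + last letter."""
    if len(word) <= 3:
        return word  # nothing worth abbreviating
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("kubernetes"))            # k8s
print(numeronym("internationalization"))  # i18n
```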

6.2 Kubernetes features

Portable: supports public cloud, private cloud, hybrid cloud, and multi-cloud.

Extensible: modular, pluggable, mountable, and composable.

Automated: automatic deployment, restart, replication, and scaling.

Kubernetes (k8s) is an open-source platform for automating container operations, including deployment, scheduling, and scaling across clusters of nodes.

Specific functions:

Automate container deployment and replication.

Scale the number of containers up or down elastically, in real time.

Organize containers into groups and load-balance traffic between them.
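Elastic scaling is handled in Kubernetes by the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below reproduces only that rule; the real controller also applies min/max replica bounds, stabilization windows, and special handling for Pods that are not ready. `desired_replicas` is a hypothetical helper name.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Core HPA scaling rule (sketch): scale the replica count by the metric ratio."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target; avoid flapping
    return math.ceil(current_replicas * ratio)


# 4 Pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # 6
# 4 Pods at 63% CPU is within the 10% tolerance: stay put.
print(desired_replicas(4, 63, 60))  # 4
# 4 Pods at 20% CPU: scale in.
print(desired_replicas(4, 20, 60))  # 2
```

Rounding up with ceil errs on the side of capacity, and the tolerance band keeps small metric fluctuations from resizing the deployment constantly.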

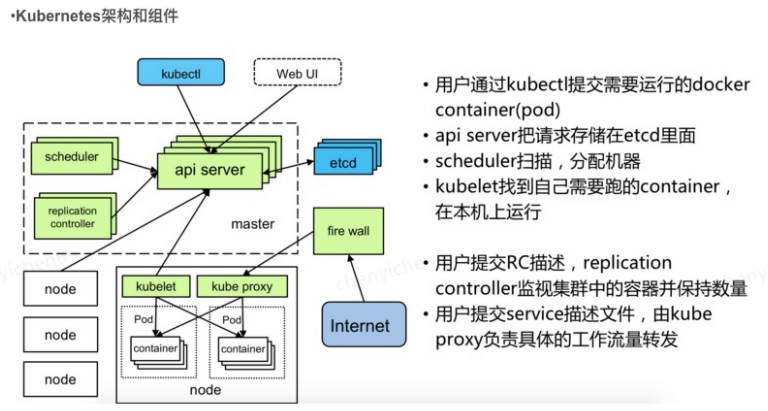

6.3 K8S Architecture and Components

Pod: the Pod is the smallest scheduling unit in k8s. A Pod can contain one or more containers, which share the Pod's network namespace and can therefore communicate with each other via localhost.
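A two-container Pod can be described by a manifest, and kubectl apply -f accepts JSON manifests as well as YAML. The Python sketch below builds a minimal v1 Pod manifest as a plain dict and prints it as JSON. The Pod name, container names, images and the sidecar's polling command are hypothetical; the point is that the sidecar can reach nginx at localhost:80 only because both containers share the Pod's network namespace.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar"},  # hypothetical name
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:latest",
                "ports": [{"containerPort": 80}],
            },
            {
                # Hypothetical sidecar: polls the web container over localhost,
                # which works because containers in a Pod share one network namespace.
                "name": "probe",
                "image": "busybox:latest",
                "command": [
                    "sh", "-c",
                    "while true; do wget -qO- http://localhost:80 >/dev/null; sleep 5; done",
                ],
            },
        ]
    },
}

print(json.dumps(pod, indent=2))
```

Saving the output to a file (for example `pod.json`) and running `kubectl apply -f pod.json` would create the Pod; both containers are then scheduled together onto the same Node.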

The core components of the K8s cluster are as follows:

etcd: a highly available key-value store used for configuration storage and service discovery.

flannel: a network add-on that implements container networking across hosts.

kube-apiserver: exposes the Kubernetes API, the entry point for all calls to the cluster.

kube-controller-manager: runs the controllers that keep the cluster in its desired state, for example maintaining the requested number of Pod replicas.

kube-scheduler: schedules Pods (and thus their containers) onto Nodes.

kubelet: runs on each Node and starts containers according to the Pod specifications assigned to that Node.

kube-proxy: runs on each Node and provides network proxying so that traffic to a Service reaches its Pods.
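kube-scheduler places each Pod in two broad phases: it filters out Nodes that cannot run the Pod, then scores the remaining Nodes and binds the Pod to the best one. The Python sketch below imitates that shape with a single CPU/memory fit check and a "least allocated" style score. The real scheduler runs many configurable filter and score plugins, so the `Node`/`PodRequest` fields and the scoring formula here are simplified assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity: float       # cores
    mem_capacity: int         # MiB
    cpu_requested: float = 0.0
    mem_requested: int = 0


@dataclass
class PodRequest:
    name: str
    cpu: float
    mem: int


def fits(node: Node, pod: PodRequest) -> bool:
    # Filter phase: the Node must have enough unrequested CPU and memory.
    return (node.cpu_requested + pod.cpu <= node.cpu_capacity
            and node.mem_requested + pod.mem <= node.mem_capacity)


def score(node: Node, pod: PodRequest) -> float:
    # Score phase: prefer the Node with the largest free fraction left afterwards
    # (average of CPU and memory), in the spirit of "least allocated".
    cpu_free = (node.cpu_capacity - node.cpu_requested - pod.cpu) / node.cpu_capacity
    mem_free = (node.mem_capacity - node.mem_requested - pod.mem) / node.mem_capacity
    return (cpu_free + mem_free) / 2


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    candidates = [n for n in nodes if fits(n, pod)]
    if not candidates:
        return None  # no feasible Node: the Pod stays Pending
    best = max(candidates, key=lambda n: score(n, pod))
    best.cpu_requested += pod.cpu  # record the binding
    best.mem_requested += pod.mem
    return best.name


nodes = [
    Node("node-a", cpu_capacity=4, mem_capacity=8192, cpu_requested=3.5),
    Node("node-b", cpu_capacity=4, mem_capacity=8192, cpu_requested=1.0, mem_requested=2048),
    Node("node-c", cpu_capacity=2, mem_capacity=4096),
]
print(schedule(PodRequest("web", cpu=1.0, mem=1024), nodes))  # node-c
```

Here node-a is filtered out (not enough CPU left), and node-c wins on score because a larger share of its resources stays free after placement.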

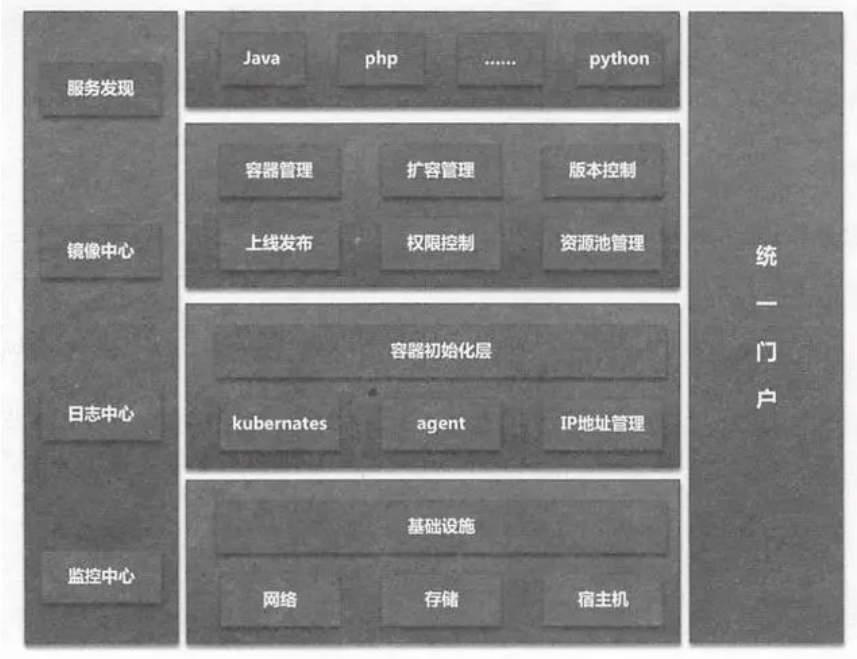

6.4 Private Cloud Architecture

If anything in this article is inaccurate, corrections are welcome. Articles on K8S practice and an analysis of its core components will follow, so stay tuned!


Author: Yang Jianmin
