One day during the long Spring Festival holiday, with the sun shining, the spring flowers in bloom, and the Winter Olympics just opening, I unexpectedly received a customer problem report that turned out to have a few twists and turns.

Cause of failure

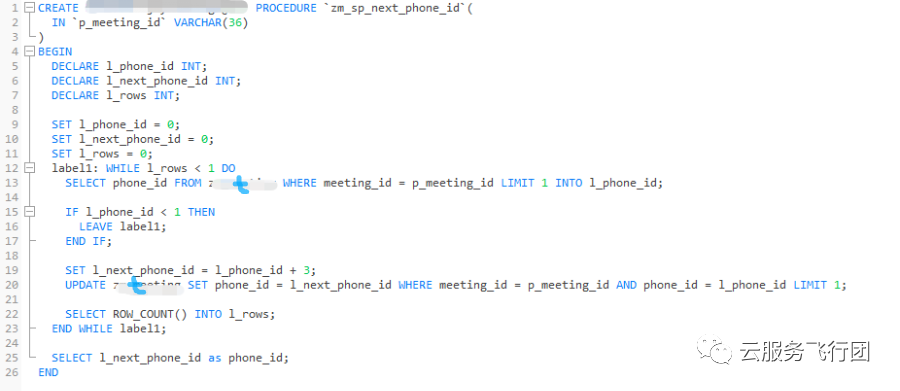

The failure began after the customer migrated from a self-built MySQL instance to RDS on the cloud the day before. A large number of lock waits occurred when a high-concurrency business workload ran. The customer upgraded the instance to the highest available specification, but the failure persisted. The customer reported that the upgraded specification was twice that of the self-built instance, and that the self-built instance had never shown this problem. Later, the customer reproduced the failure scenario and found that performance was poor mainly when one stored procedure was executed concurrently.

Initial diagnosis

The logic of the stored procedure is relatively simple and mainly involves two SQL statements. The first queries phone_id from table t (the real table name is hidden) by meeting_id, with the value passed in through the character parameter p_meeting_id. The second updates phone_id in t to phone_id+3, filtering on meeting_id and the phone_id just found. Table t holds about 400,000 rows.
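The procedure body itself is not reproduced in this text. Going only by the description above, its shape is probably close to the following sketch. The procedure name and the VARCHAR(36) parameter come from the declaration quoted later in this article; the local variable v_phone_id and the INT type for phone_id are assumptions, and t stands in for the hidden table name.

```sql
-- Hypothetical reconstruction from the prose description, not the customer's code.
DELIMITER //

CREATE PROCEDURE zm_sp_next_phone_id (IN p_meeting_id VARCHAR(36))
BEGIN
  -- Assumed type: the article does not state the type of phone_id.
  DECLARE v_phone_id INT;

  -- Statement 1: look up the current phone_id for the meeting.
  SELECT phone_id INTO v_phone_id
  FROM t
  WHERE meeting_id = p_meeting_id;

  -- Statement 2: advance phone_id by 3 for the row just read.
  UPDATE t
  SET phone_id = phone_id + 3
  WHERE meeting_id = p_meeting_id
    AND phone_id = v_phone_id;
END //

DELIMITER ;
```

Both statements filter on meeting_id, so the cost of each call depends almost entirely on whether that predicate can use an index.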

My first impression was that this was a simple problem: the two SQL statements were probably not using an index on meeting_id. Checking the indexes on the table showed that neither meeting_id nor phone_id was indexed at all. I recommended that the customer create an index on each of the two columns, with meeting_id as the primary key. After that, the customer's simulated concurrency script ran significantly faster: with 200 concurrent sessions, the longest execution took about 40 seconds. With 500 concurrent sessions, however, the run had still not finished after more than 8 minutes, whereas the customer reported that 500 concurrent executions on the self-built MySQL completed within seconds. In the console, the stored procedure appeared in batches in the slow query log with a huge number of scanned rows, and the client hung completely.
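The index change described here could be made with statements along these lines. This is a sketch only: t stands in for the hidden table name, the index name idx_phone_id is illustrative, and adding the primary key assumes meeting_id is unique and never NULL.

```sql
-- List the indexes that currently exist on the table.
SHOW INDEX FROM t;

-- Make meeting_id the primary key (assumes unique, non-NULL values).
ALTER TABLE t ADD PRIMARY KEY (meeting_id);

-- Add an ordinary secondary index on phone_id (index name is illustrative).
CREATE INDEX idx_phone_id ON t (phone_id);
```

Running SHOW INDEX again afterwards confirms that both indexes exist, although it does not show whether the optimizer actually chooses them.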

Further optimization

Although this first optimization helped, performance was still far from what the customer described for the self-built environment. Monitoring showed the CPU at 100% during the high-concurrency test, so I suspected that the parameter innodb_thread_concurrency was set improperly. This parameter caps the number of threads running concurrently inside InnoDB: once the cap is reached, a new request has to wait until a running thread leaves. The RDS default is 0, which means no upper limit, so under high concurrency more context switches can occur and drive up CPU usage. The customer confirmed that the self-built environment used a value of 32, so I suggested setting the RDS value to 32 as well to see the effect. The customer soon reported that the change did help: 500 concurrent executions completed within 3 minutes and the hang no longer occurred. Adjusting innodb_thread_concurrency further, however, had little additional effect.
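For reference, on a MySQL server where the account has sufficient privileges, the parameter can be inspected and changed as sketched below. On a managed service such as RDS, the value is typically changed through the console's parameter settings rather than with SET GLOBAL, so treat the second statement as an assumption about a self-managed instance.

```sql
-- Show the current cap; 0 means no limit on threads inside InnoDB.
SHOW GLOBAL VARIABLES LIKE 'innodb_thread_concurrency';

-- Dynamic global variable; 32 is the value the self-built instance used.
SET GLOBAL innodb_thread_concurrency = 32;

-- The ROW OPERATIONS section of the output reports how many queries are
-- inside InnoDB and how many are waiting in the queue.
SHOW ENGINE INNODB STATUS;
```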

Adding trace diagnostics

The customer was encouraged by the steady improvement, but there was still a big gap compared with the self-built environment. Where else could the problem be? I then remembered that, even after the indexes were created, the slow query list in the console was full of SQL statements called from the stored procedure, each scanning a huge number of rows. If the unique index on meeting_id were being used, only a handful of rows should be scanned. Was no index being used at all? Or was it the meeting_id primary key index in particular that was being skipped? I contacted the customer and asked for access to a test environment so that I could log in and test.

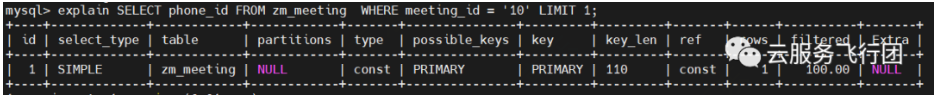

In the test environment, the first step was to check the execution plans of the two SQL statements. After logging in to the instance, I ran EXPLAIN on each of the two statements from the stored procedure and found that the primary key (meeting_id) was indeed being used:

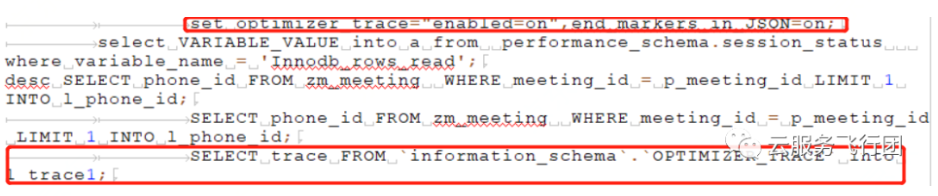

To confirm the execution plan actually used inside the stored procedure, I modified the test copy of the procedure so that it also output the EXPLAIN result of each SQL statement and the optimizer trace of its actual execution.
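The code added to the procedure is not reproduced in this text. As a rough equivalent, comparable information can be collected from the calling session with MySQL's optimizer trace, as sketched below. The literal 'some-meeting-id' is a placeholder, and the offset and limit values are an arbitrary choice that keeps the traces of the last few statements instead of only the most recent one.

```sql
-- Keep the traces of the last 5 statements rather than just the last one,
-- so that each statement executed inside the procedure is retained.
SET SESSION optimizer_trace_offset = -5, optimizer_trace_limit = 5;
SET SESSION optimizer_trace = 'enabled=on';

-- Run the procedure on a test instance (it modifies data).
CALL zm_sp_next_phone_id('some-meeting-id');

-- One row per traced statement; TRACE holds the JSON trace document.
-- INSUFFICIENT_PRIVILEGES = 1 means the trace was withheld from this user.
SELECT QUERY, TRACE, MISSING_BYTES_BEYOND_MAX_MEM_SIZE, INSUFFICIENT_PRIVILEGES
FROM information_schema.OPTIMIZER_TRACE;

SET SESSION optimizer_trace = 'enabled=off';
```

In the trace output, the rewritten form of each WHERE condition is the part to look at, since it shows any conversion function the optimizer wrapped around a column.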

The execution plan results are as follows:

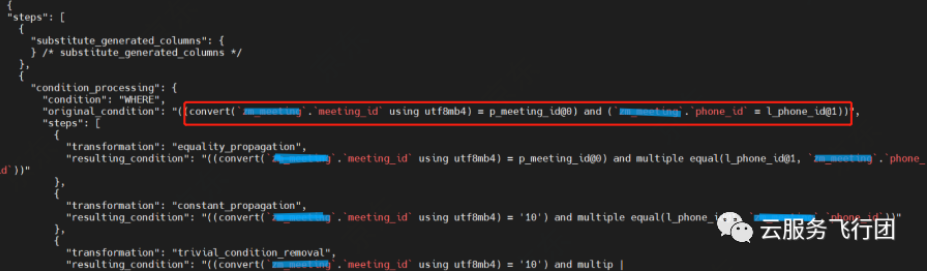

According to these results, neither SQL statement actually used the primary key index on meeting_id; both used the ordinary secondary index on phone_id. The query performed a full scan of that index: it scanned 397399 rows, the same as the number of rows in the table. A full index scan is better than a full table scan, but it is still inefficient. The update used a normal lookup on the phone_id index, scanned only 2 rows, and was efficient. Why did neither statement use the meeting_id primary key index? The trace output holds the answer.

The trace shows that when the optimizer analyzes the two SQL statements, meeting_id is implicitly converted. The conversion function is convert(meeting_id using utf8mb4), that is, the column meeting_id is converted to another character set. Readers familiar with how indexes work know that the optimizer cannot use the meeting_id index in this case. This also explains why creating the indexes brought a performance improvement, but only a limited one: only the update statement really benefited from an index, and it was the phone_id index, not the more efficient primary key on meeting_id.

The truth is revealed

Now for the most critical question: why does meeting_id undergo an implicit character set conversion? The character set settings related to the instance are as follows:

The character set of the table and its columns is utf8.

The character set of the database containing the table is utf8mb4.

The server character set (character_set_server) is utf8.

character_set_client, character_set_connection, and character_set_results are all utf8mb4.
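Settings like these can be read with the standard variables and information_schema views, for example as sketched below. The table name zm_meeting is the one that appears in the ALTER TABLE statement later in this article; the queries assume the session's current database is the one that contains it.

```sql
-- Server- and session-level character set variables.
SHOW VARIABLES LIKE 'character_set_%';

-- Default character set of the current database.
SELECT SCHEMA_NAME, DEFAULT_CHARACTER_SET_NAME, DEFAULT_COLLATION_NAME
FROM information_schema.SCHEMATA
WHERE SCHEMA_NAME = DATABASE();

-- Default collation of the table (its character set is the collation prefix).
SELECT TABLE_NAME, TABLE_COLLATION
FROM information_schema.TABLES
WHERE TABLE_SCHEMA = DATABASE()
  AND TABLE_NAME = 'zm_meeting';

-- Character set of each character column in the table.
SELECT COLUMN_NAME, CHARACTER_SET_NAME, COLLATION_NAME
FROM information_schema.COLUMNS
WHERE TABLE_SCHEMA = DATABASE()
  AND TABLE_NAME = 'zm_meeting'
  AND CHARACTER_SET_NAME IS NOT NULL;
```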

Sure enough, the character sets of the server, database, and table are not all the same. My guess at the actual process is this: the character parameter passed into the stored procedure has the character set utf8mb4, and when it is compared with the column meeting_id, whose character set is utf8, meeting_id is implicitly converted to utf8mb4 before the comparison. As a result, the index on meeting_id cannot be used.
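A guess like this can be checked in isolation with a small reproduction on a scratch instance. Everything in the sketch below (the database charset_demo, the table demo_meeting, the procedure demo_explain, and the sample rows) is made up for illustration. Only the character set layout mirrors the case: a utf8mb4 database, a utf8 table, and a procedure parameter declared without a character set.

```sql
CREATE DATABASE charset_demo CHARACTER SET utf8mb4;
USE charset_demo;

-- Table and columns use utf8 while the database default is utf8mb4.
CREATE TABLE demo_meeting (
  meeting_id VARCHAR(36) NOT NULL PRIMARY KEY,
  phone_id   INT NOT NULL,
  KEY idx_phone_id (phone_id)
) CHARACTER SET utf8;

INSERT INTO demo_meeting (meeting_id, phone_id)
VALUES ('m-001', 1), ('m-002', 2), ('m-003', 3);

DELIMITER //
-- No CHARACTER SET on the parameter, so it takes the database default.
CREATE PROCEDURE demo_explain (IN p_meeting_id VARCHAR(36))
BEGIN
  EXPLAIN SELECT phone_id FROM demo_meeting WHERE meeting_id = p_meeting_id;
END //
DELIMITER ;

-- Plan for the comparison against the procedure parameter.
CALL demo_explain('m-001');

-- Plan for the same comparison against a string literal, for contrast.
EXPLAIN SELECT phone_id FROM demo_meeting WHERE meeting_id = 'm-001';
```

Comparing the key and type columns of the two plans shows whether the parameter comparison still reaches the primary key.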

Based on this guess, I suggested that the customer change the character set of the table to utf8mb4, which should remove the need for a conversion. Since the feature had not yet gone live, the customer changed the character set of the table directly:

alter table zm_meeting convert to character set utf8mb4;

After the change, the customer tested again and the expected result finally appeared: the test with 500 concurrent sessions completed within seconds. The execution plan in the trace showed that the primary key index on meeting_id was now used and that the implicit conversion had disappeared. The performance problem was completely solved.

Follow-up thinking

Why does the stored procedure use utf8mb4? This is the core of the case. The MySQL documentation on stored procedures describes the relevant behavior.

Simply put, if a character parameter of a stored procedure does not explicitly specify a character set, it uses the character set of the database the procedure belongs to. In this case the database containing the table uses utf8mb4, so the parameter defaults to utf8mb4, which causes the implicit conversion during the comparison. Why doesn't this happen when the SQL is written directly, outside the stored procedure? My guess is that the string being compared is automatically matched to the character set of the table column on the left side of the '='.
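MySQL's documented coercibility rules suggest a more precise explanation than the left-hand-side guess. A string literal has a higher coercibility value than a column, so in a comparison the literal is converted to the column's character set and the index stays usable. A stored routine parameter is documented as having the same coercibility as a column. With the two sides tied, the comparison is carried out in the superset character set, which matches the utf8mb4 conversion of meeting_id seen in the trace. The COERCIBILITY() and CHARSET() functions make the first part visible; the sketch assumes the table zm_meeting from this case.

```sql
-- Coercibility and character set of a string literal in this session.
SELECT COERCIBILITY('abc') AS literal_coercibility,
       CHARSET('abc')      AS literal_charset;

-- Coercibility and character set of the column being compared.
SELECT COERCIBILITY(meeting_id) AS column_coercibility,
       CHARSET(meeting_id)      AS column_charset
FROM zm_meeting
LIMIT 1;
```

The side with the lower coercibility value determines the character set and collation used for the comparison.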

If so, then in theory changing the character set of the parameter directly should achieve the same result. As a simple test, I declared the stored procedure parameter with the same character set as the table column:

CREATE PROCEDURE zm_sp_next_phone_id (IN p_meeting_id VARCHAR(36) character set utf8)

The test result was as expected: no implicit conversion occurred, and the execution plan was correct.
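The character set a procedure parameter actually ended up with can be checked without reading the trace, for example with the queries sketched below. They assume the procedure lives in the session's current database.

```sql
-- Character set and collation recorded for each parameter of the procedure.
SELECT SPECIFIC_NAME, PARAMETER_NAME, DATA_TYPE,
       CHARACTER_SET_NAME, COLLATION_NAME
FROM information_schema.PARAMETERS
WHERE SPECIFIC_SCHEMA = DATABASE()
  AND SPECIFIC_NAME = 'zm_sp_next_phone_id';

-- Also reports the client character set, connection collation, and
-- database collation associated with the routine.
SHOW CREATE PROCEDURE zm_sp_next_phone_id;
```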

The problem is solved and the cause has been found. Looking back over the whole process, though, this failure would have been avoided entirely if the customer's server, database, and table character sets had been kept consistent, and similar character set failures could very likely be avoided the same way. There should therefore be clear design standards on the customer side. On the product side, inspection rules that help customers find hidden risks like this one would also be very valuable for improving the customer experience.
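One possible shape for such an inspection rule is a query that lists character columns whose character set differs from the default of their database. This is a sketch of the idea, not an existing product check, and the list of excluded system schemas is an assumption.

```sql
-- Character columns whose character set differs from their schema default.
SELECT c.TABLE_SCHEMA,
       c.TABLE_NAME,
       c.COLUMN_NAME,
       c.CHARACTER_SET_NAME         AS column_charset,
       s.DEFAULT_CHARACTER_SET_NAME AS schema_charset
FROM information_schema.COLUMNS AS c
JOIN information_schema.SCHEMATA AS s
  ON s.SCHEMA_NAME = c.TABLE_SCHEMA
WHERE c.CHARACTER_SET_NAME IS NOT NULL
  AND c.CHARACTER_SET_NAME <> s.DEFAULT_CHARACTER_SET_NAME
  AND c.TABLE_SCHEMA NOT IN
      ('mysql', 'information_schema', 'performance_schema', 'sys')
ORDER BY c.TABLE_SCHEMA, c.TABLE_NAME, c.COLUMN_NAME;
```

Any row returned marks a column that a stored routine in the same database could end up comparing against a parameter in a different character set.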

Author: Zhai Zhenxing
