Preface

This article shows how to deploy Logstash in a Kubernetes (k8s) cluster with Helm, for your reference.

Prerequisites

- An Alibaba Cloud Kubernetes cluster
- Helm installed

Installation

First, add the Bitnami Helm repository and search for logstash:

```shell
$ helm repo add bitnami https://charts.bitnami.com/bitnami
$ helm search repo logstash
NAME                      CHART VERSION   APP VERSION   DESCRIPTION
bitnami/logstash          5.4.1           8.7.1         Logstash is an open source data processing engi...
stable/dmarc2logstash     1.3.1           1.0.3         DEPRECATED Provides a POP3-polled DMARC XML rep...
stable/logstash           2.4.3           7.1.1         DEPRECATED - Logstash is an open source, server...
bitnami/dataplatform-bp2  12.0.5          1.0.1         DEPRECATED This Helm chart can be used for the ...
```
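Running `helm pull bitnami/logstash` without `--version` fetches whatever chart is newest at the time, which may no longer be 5.4.1. The sketch below pins the version, unpacks the chart and saves its default values for later comparison. The function name `fetch_logstash_chart` and the `./charts` directory are illustrative assumptions; `helm repo update`, `helm pull --version --untar --untardir` and `helm show values` are standard Helm 3 commands.

```shell
#!/bin/sh
# Hypothetical helper: fetch a pinned logstash chart and keep a copy of its defaults.
# Assumes Helm 3 and that the "bitnami" repo was added with `helm repo add` as above.
CHART="bitnami/logstash"

fetch_logstash_chart() {
  version="${1:-5.4.1}"   # chart version used in this article
  dest="${2:-./charts}"   # illustrative output directory
  mkdir -p "$dest" || return 1
  helm repo update || return 1
  # --untar expands logstash-<version>.tgz into "$dest/logstash"
  helm pull "$CHART" --version "$version" --untar --untardir "$dest" || return 1
  # Save the chart's default values.yaml for comparison with your overrides
  helm show values "$CHART" --version "$version" > "$dest/values-default-$version.yaml"
}
```

For example, `fetch_logstash_chart 5.4.1 ./charts` leaves the unpacked chart in `./charts/logstash` and the defaults in `./charts/values-default-5.4.1.yaml`.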

我们安装的Chart版本:5.4.1 ,App版本:8.7.1,接着我们把源码pull下来,如下:

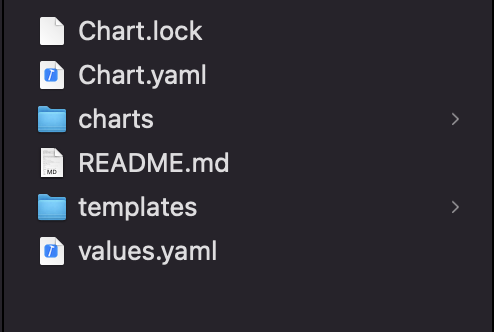

$ helm pull bitnami/logstash解压一下下载后的logstash-5.4.1.tgz文件,如下所示 :

之后我们打开values.yaml文件,如下所示:

```yaml
## @section Global parameters
## Global Docker image parameters
## Please, note that this will override the image parameters, including dependencies, configured to use the global value
## Current available global Docker image parameters: imageRegistry, imagePullSecrets and storageClass
## @param global.imageRegistry Global Docker image registry
## @param global.imagePullSecrets Global Docker registry secret names as an array
## @param global.storageClass Global StorageClass for Persistent Volume(s)
##
global:
  imageRegistry: ""
  ## E.g.
  ## imagePullSecrets:
  ##   - myRegistryKeySecretName
  ##
  imagePullSecrets: []
  storageClass: ""

## @section Common parameters

## @param kubeVersion Force target Kubernetes version (using Helm capabilities if not set)
##
kubeVersion: ""
## @param nameOverride String to partially override logstash.fullname template (will maintain the release name)
##
nameOverride: ""
## @param fullnameOverride String to fully override logstash.fullname template
##
fullnameOverride: ""
## @param clusterDomain Default Kubernetes cluster domain
##
clusterDomain: cluster.local
## @param commonAnnotations Annotations to add to all deployed objects
##
commonAnnotations: {}
## @param commonLabels Labels to add to all deployed objects
##
commonLabels: {}
## @param extraDeploy Array of extra objects to deploy with the release (evaluated as a template).
##
extraDeploy: []
## Enable diagnostic mode in the deployment
##
diagnosticMode:
  ## @param diagnosticMode.enabled Enable diagnostic mode (all probes will be disabled and the command will be overridden)
  ##
  enabled: false
  ## @param diagnosticMode.command Command to override all containers in the deployment
  ##
  command:
    - sleep
  ## @param diagnosticMode.args Args to override all containers in the deployment
  ##
  args:
    - infinity

## @section Logstash parameters

## Bitnami Logstash image
## ref: https://hub.docker.com/r/bitnami/logstash/tags/
## @param image.registry Logstash image registry
## @param image.repository Logstash image repository
## @param image.tag Logstash image tag (immutable tags are recommended)
## @param image.digest Logstash image digest in the way sha256:aa.... Please note this parameter, if set, will override the tag
## @param image.pullPolicy Logstash image pull policy
## @param image.pullSecrets Specify docker-registry secret names as an array
## @param image.debug Specify if debug logs should be enabled
##
image:
  registry: docker.io
  repository: bitnami/logstash
  tag: 8.6.0-debian-11-r0
  digest: ""
  ## Specify a imagePullPolicy. Defaults to 'Always' if image tag is 'latest', else set to 'IfNotPresent'
  ## ref: https://kubernetes.io/docs/user-guide/images/#pre-pulling-images
  ##
  pullPolicy: IfNotPresent
  ## Optionally specify an array of imagePullSecrets (secrets must be manually created in the namespace)
  ## ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/
  ## Example:
  ## pullSecrets:
  ##   - myRegistryKeySecretName
  ##
  pullSecrets: []
  ## Set to true if you would like to see extra information on logs
  ##
  debug: false
## @param hostAliases Add deployment host aliases
## https://kubernetes.io/docs/concepts/services-networking/add-entries-to-pod-etc-hosts-with-host-aliases/
##
hostAliases: []
## @param configFileName Logstash configuration file name. It must match the name of the configuration file mounted as a configmap.
##
configFileName: logstash.conf
## @param enableMonitoringAPI Whether to enable the Logstash Monitoring API or not Kubernetes cluster domain
##
enableMonitoringAPI: true
## @param monitoringAPIPort Logstash Monitoring API Port
##
monitoringAPIPort: 9600
## @param extraEnvVars Array containing extra env vars to configure Logstash
## For example:
## extraEnvVars:
##  - name: ELASTICSEARCH_HOST
##    value: "x.y.z"
##
extraEnvVars: []
## @param extraEnvVarsSecret To add secrets to environment
##
extraEnvVarsSecret: ""
## @param extraEnvVarsCM To add configmaps to environment
##
extraEnvVarsCM: ""
## @param input [string] Input Plugins configuration
## ref: https://www.elastic.co/guide/en/logstash/current/input-plugins.html
##
input: |-
  # udp {
  #   port => 1514
  #   type => syslog
  # }
  # tcp {
  #   port => 1514
  #   type => syslog
  # }
  http { port => 8080 }
## @param filter Filter Plugins configuration
## ref: https://www.elastic.co/guide/en/logstash/current/filter-plugins.html
## e.g:
## filter: |-
##   grok {
##     match => { "message" => "%{COMBINEDAPACHELOG}" }
##   }
##   date {
##     match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]
##   }
##
filter: ""
## @param output [string] Output Plugins configuration
## ref: https://www.elastic.co/guide/en/logstash/current/output-plugins.html
##
output: |-
  # elasticsearch {
  #   hosts => ["${ELASTICSEARCH_HOST}:${ELASTICSEARCH_PORT}"]
  #   manage_template => false
  #   index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"
  # }
  # gelf {
  #   host => "${GRAYLOG_HOST}"
  #   port => ${GRAYLOG_PORT}
  # }
  stdout {}
## @param existingConfiguration Name of existing ConfigMap object with the Logstash configuration (`input`, `filter`, and `output` will be ignored).
##
existingConfiguration: ""
## @param enableMultiplePipelines Allows user to use multiple pipelines
## ref: https://www.elastic.co/guide/en/logstash/master/multiple-pipelines.html
##
enableMultiplePipelines: false
## @param extraVolumes Array to add extra volumes (evaluated as a template)
## extraVolumes:
##   - name: myvolume
##     configMap:
##       name: myconfigmap
##
extraVolumes: []
## @param extraVolumeMounts Array to add extra mounts (normally used with extraVolumes, evaluated as a template)
## extraVolumeMounts:
##   - mountPath: /opt/bitnami/desired-path
##     name: myvolume
##     readOnly: true
##
extraVolumeMounts: []
## ServiceAccount for Logstash
## ref: https://kubernetes.io/docs/tasks/configure-pod-container/configure-service-account/
##
serviceAccount:
  ## @param serviceAccount.create Enable creation of ServiceAccount for Logstash pods
  ##
  create: true
  ## @param serviceAccount.name The name of the service account to use. If not set and `create` is `true`, a name is generated
  ## If not set and create is true, a name is generated using the logstash.serviceAccountName template
  ##
  name: ""
  ## @param serviceAccount.automountServiceAccountToken Allows automount of ServiceAccountToken on the serviceAccount created
  ## Can be set to false if pods using this serviceAccount do not need to use K8s API
  ##
  automountServiceAccountToken: true
  ## @param serviceAccount.annotations Additional custom annotations for the ServiceAccount
  ##
  annotations: {}
## @param containerPorts [array] Array containing the ports to open in the Logstash container (evaluated as a template)
##
containerPorts:
  - name: http
    containerPort: 8080
    protocol: TCP
  - name: monitoring
    containerPort: 9600
    protocol: TCP
  ## - name: syslog-udp
  ##   containerPort: 1514
  ##   protocol: UDP
  ## - name: syslog-tcp
  ##   containerPort: 1514
  ##   protocol: TCP
  ##
## @param initContainers Add additional init containers to the Logstash pod(s)
## ref: https://kubernetes.io/docs/concepts/workloads/pods/init-containers/
## e.g:
## initContainers:
##  - name: your-image-name
##    image: your-image
##    imagePullPolicy: Always
##    command: ['sh', '-c', 'echo "hello world"']
##
initContainers: []
## @param sidecars Add additional sidecar containers to the Logstash pod(s)
## e.g:
## sidecars:
##   - name: your-image-name
##     image: your-image
##     imagePullPolicy: Always
##     ports:
##       - name: portname
##         containerPort: 1234
##
sidecars: []
## @param replicaCount Number of Logstash replicas to deploy
##
replicaCount: 1
## @param updateStrategy.type Update strategy type (`RollingUpdate`, or `OnDelete`)
## ref: https://kubernetes.io/docs/tutorials/stateful-application/basic-stateful-set/#updating-statefulsets
##
updateStrategy:
  type: RollingUpdate
## @param podManagementPolicy Pod management policy
## https://kubernetes.io/docs/concepts/workloads/controllers/statefulset/#pod-management-policies
##
podManagementPolicy: OrderedReady
## @param podAnnotations Pod annotations
## Ref: https://kubernetes.io/docs/concepts/overview/working-with-objects/annotations/
##
podAnnotations: {}
## @param podLabels Extra labels for Logstash pods
## ref: https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/
##
podLabels: {}
## @param podAffinityPreset Pod affinity preset. Ignored if `affinity` is set. Allowed values: `soft` or `hard`
## ref: https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#inter-pod-affinity-and-anti-affinity
##
podAffinityPreset: ""
## @param podAntiAffinityPreset Pod anti-affinity preset. Ignored if `affinity` is set. Allowed values: `soft` or `hard`
## Ref: https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#inter-pod-affinity-and-anti-affinity
##
podAntiAffinityPreset: soft
## Node affinity preset
## Ref: https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#node-affinity
##
nodeAffinityPreset:
  ## @param nodeAffinityPreset.type Node affinity preset type. Ignored if `affinity` is set. Allowed values: `soft` or `hard`
  ##
  type: ""
  ## @param nodeAffinityPreset.key Node label key to match. Ignored if `affinity` is set.
  ## E.g.
  ## key: "kubernetes.io/e2e-az-name"
  ##
  key: ""
  ## @param nodeAffinityPreset.values Node label values to match. Ignored if `affinity` is set.
  ## E.g.
  ## values:
  ##   - e2e-az1
  ##   - e2e-az2
  ##
  values: []
## @param affinity Affinity for pod assignment
## Ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity
## Note: podAffinityPreset, podAntiAffinityPreset, and nodeAffinityPreset will be ignored when it's set
##
affinity: {}
## @param nodeSelector Node labels for pod assignment
## ref: https://kubernetes.io/docs/user-guide/node-selection/
##
nodeSelector: {}
## @param tolerations Tolerations for pod assignment
## ref: https://kubernetes.io/docs/concepts/configuration/taint-and-toleration/
##
tolerations: []
## @param priorityClassName Pod priority
## ref: https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/
##
priorityClassName: ""
## @param schedulerName Name of the k8s scheduler (other than default)
## ref: https://kubernetes.io/docs/tasks/administer-cluster/configure-multiple-schedulers/
##
schedulerName: ""
## @param terminationGracePeriodSeconds In seconds, time the given to the Logstash pod needs to terminate gracefully
## ref: https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods
##
terminationGracePeriodSeconds: ""
## @param topologySpreadConstraints Topology Spread Constraints for pod assignment
## https://kubernetes.io/docs/concepts/workloads/pods/pod-topology-spread-constraints/
## The value is evaluated as a template
##
topologySpreadConstraints: []
## K8s Security Context for Logstash pods
## Configure Pods Security Context
## ref: https://kubernetes.io/docs/tasks/configure-pod-container/security-context/#set-the-security-context-for-a-pod
## @param podSecurityContext.enabled Enabled Logstash pods' Security Context
## @param podSecurityContext.fsGroup Set Logstash pod's Security Context fsGroup
##
podSecurityContext:
  enabled: true
  fsGroup: 1001
## Configure Container Security Context
## ref: https://kubernetes.io/docs/tasks/configure-pod-container/security-context/#set-the-security-context-for-a-pod
## @param containerSecurityContext.enabled Enabled Logstash containers' Security Context
## @param containerSecurityContext.runAsUser Set Logstash containers' Security Context runAsUser
## @param containerSecurityContext.runAsNonRoot Set Logstash container's Security Context runAsNonRoot
##
containerSecurityContext:
  enabled: true
  runAsUser: 1001
  runAsNonRoot: true
## @param command Override default container command (useful when using custom images)
##
command: []
## @param args Override default container args (useful when using custom images)
##
args: []
## @param lifecycleHooks for the Logstash container(s) to automate configuration before or after startup
##
lifecycleHooks: {}
## Logstash containers' resource requests and limits
## ref: https://kubernetes.io/docs/user-guide/compute-resources/
## We usually recommend not to specify default resources and to leave this as a conscious
## choice for the user. This also increases chances charts run on environments with little
## resources, such as Minikube. If you do want to specify resources, uncomment the following
## lines, adjust them as necessary, and remove the curly braces after 'resources:'.
## @param resources.limits The resources limits for the Logstash container
## @param resources.requests The requested resources for the Logstash container
##
resources:
  ## Example:
  ## limits:
  ##    cpu: 100m
  ##    memory: 128Mi
  limits: {}
  ## Examples:
  ## requests:
  ##    cpu: 100m
  ##    memory: 128Mi
  requests: {}
## Configure extra options for Logstash containers' liveness, readiness and startup probes
## ref: https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-probes/#configure-probes
## @param startupProbe.enabled Enable startupProbe
## @param startupProbe.initialDelaySeconds Initial delay seconds for startupProbe
## @param startupProbe.periodSeconds Period seconds for startupProbe
## @param startupProbe.timeoutSeconds Timeout seconds for startupProbe
## @param startupProbe.failureThreshold Failure threshold for startupProbe
## @param startupProbe.successThreshold Success threshold for startupProbe
##
startupProbe:
  enabled: false
  initialDelaySeconds: 60
  periodSeconds: 10
  timeoutSeconds: 5
  successThreshold: 1
  failureThreshold: 6
## @param livenessProbe.enabled Enable livenessProbe
## @param livenessProbe.initialDelaySeconds Initial delay seconds for livenessProbe
## @param livenessProbe.periodSeconds Period seconds for livenessProbe
## @param livenessProbe.timeoutSeconds Timeout seconds for livenessProbe
## @param livenessProbe.failureThreshold Failure threshold for livenessProbe
## @param livenessProbe.successThreshold Success threshold for livenessProbe
##
livenessProbe:
  enabled: true
  initialDelaySeconds: 60
  periodSeconds: 10
  timeoutSeconds: 5
  successThreshold: 1
  failureThreshold: 6
## @param readinessProbe.enabled Enable readinessProbe
## @param readinessProbe.initialDelaySeconds Initial delay seconds for readinessProbe
## @param readinessProbe.periodSeconds Period seconds for readinessProbe
## @param readinessProbe.timeoutSeconds Timeout seconds for readinessProbe
## @param readinessProbe.failureThreshold Failure threshold for readinessProbe
## @param readinessProbe.successThreshold Success threshold for readinessProbe
##
readinessProbe:
  enabled: true
  initialDelaySeconds: 60
  periodSeconds: 10
  timeoutSeconds: 5
  successThreshold: 1
  failureThreshold: 6
## @param customStartupProbe Custom startup probe for the Web component
##
customStartupProbe: {}
## @param customLivenessProbe Custom liveness probe for the Web component
##
customLivenessProbe: {}
## @param customReadinessProbe Custom readiness probe for the Web component
##
customReadinessProbe: {}
## Service parameters
##
service:
  ## @param service.type Kubernetes service type (`ClusterIP`, `NodePort`, or `LoadBalancer`)
  ##
  type: ClusterIP
  ## @param service.ports [array] Logstash service ports (evaluated as a template)
  ##
  ports:
    - name: http
      port: 8080
      targetPort: http
      protocol: TCP
    ## - name: syslog-udp
    ##   port: 1514
    ##   targetPort: syslog-udp
    ##   protocol: UDP
    ## - name: syslog-tcp
    ##   port: 1514
    ##   targetPort: syslog-tcp
    ##   protocol: TCP
    ##
  ## @param service.loadBalancerIP loadBalancerIP if service type is `LoadBalancer`
  ##
  loadBalancerIP: ""
  ## @param service.loadBalancerSourceRanges Addresses that are allowed when service is LoadBalancer
  ## https://kubernetes.io/docs/tasks/access-application-cluster/configure-cloud-provider-firewall/#restrict-access-for-loadbalancer-service
  ## e.g:
  ## loadBalancerSourceRanges:
  ##   - 10.10.10.0/24
  ##
  loadBalancerSourceRanges: []
  ## @param service.externalTrafficPolicy External traffic policy, configure to Local to preserve client source IP when using an external loadBalancer
  ## ref https://kubernetes.io/docs/tasks/access-application-cluster/create-external-load-balancer/#preserving-the-client-source-ip
  ##
  externalTrafficPolicy: ""
  ## @param service.clusterIP Static clusterIP or None for headless services
  ## ref: https://kubernetes.io/docs/concepts/services-networking/service/#choosing-your-own-ip-address
  ## e.g:
  ## clusterIP: None
  ##
  clusterIP: ""
  ## @param service.annotations Annotations for Logstash service
  ##
  annotations: {}
  ## @param service.sessionAffinity Session Affinity for Kubernetes service, can be "None" or "ClientIP"
  ## If "ClientIP", consecutive client requests will be directed to the same Pod
  ## ref: https://kubernetes.io/docs/concepts/services-networking/service/#virtual-ips-and-service-proxies
  ##
  sessionAffinity: None
  ## @param service.sessionAffinityConfig Additional settings for the sessionAffinity
  ## sessionAffinityConfig:
  ##   clientIP:
  ##     timeoutSeconds: 300
  ##
  sessionAffinityConfig: {}
## Persistence parameters
##
persistence:
  ## @param persistence.enabled Enable Logstash data persistence using PVC
  ##
  enabled: false
  ## @param persistence.existingClaim A manually managed Persistent Volume and Claim
  ## If defined, PVC must be created manually before volume will be bound
  ## The value is evaluated as a template
  ##
  existingClaim: ""
  ## @param persistence.storageClass PVC Storage Class for Logstash data volume
  ## If defined, storageClassName: <storageClass>
  ## If set to "-", storageClassName: "", which disables dynamic provisioning
  ## If undefined (the default) or set to null, no storageClassName spec is
  ## set, choosing the default provisioner.
  ##
  storageClass: ""
  ## @param persistence.accessModes PVC Access Mode for Logstash data volume
  ##
  accessModes:
    - ReadWriteOnce
  ## @param persistence.size PVC Storage Request for Logstash data volume
  ##
  size: 2Gi
  ## @param persistence.annotations Annotations for the PVC
  ##
  annotations: {}
  ## @param persistence.mountPath Mount path of the Logstash data volume
  ##
  mountPath: /bitnami/logstash/data
  ## @param persistence.selector Selector to match an existing Persistent Volume for WordPress data PVC
  ## If set, the PVC can't have a PV dynamically provisioned for it
  ## E.g.
  ## selector:
  ##   matchLabels:
  ##     app: my-app
  ##
  selector: {}
## Init Container parameters
## Change the owner and group of the persistent volume(s) mountpoint(s) to 'runAsUser:fsGroup' on each component
## values from the securityContext section of the component
##
volumePermissions:
  ## @param volumePermissions.enabled Enable init container that changes the owner and group of the persistent volume(s) mountpoint to `runAsUser:fsGroup`
  ##
  enabled: false
  ## The security context for the volumePermissions init container
  ## @param volumePermissions.securityContext.runAsUser User ID for the volumePermissions init container
  ##
  securityContext:
    runAsUser: 0
  ## @param volumePermissions.image.registry Init container volume-permissions image registry
  ## @param volumePermissions.image.repository Init container volume-permissions image repository
  ## @param volumePermissions.image.tag Init container volume-permissions image tag (immutable tags are recommended)
  ## @param volumePermissions.image.digest Init container volume-permissions image digest in the way sha256:aa.... Please note this parameter, if set, will override the tag
  ## @param volumePermissions.image.pullPolicy Init container volume-permissions image pull policy
  ## @param volumePermissions.image.pullSecrets Specify docker-registry secret names as an array
  ##
  image:
    registry: docker.io
    repository: bitnami/bitnami-shell
    tag: 11-debian-11-r70
    digest: ""
    ## Specify a imagePullPolicy
    ## Defaults to 'Always' if image tag is 'latest', else set to 'IfNotPresent'
    ## ref: https://kubernetes.io/docs/user-guide/images/#pre-pulling-images
    ##
    pullPolicy: IfNotPresent
    ## Optionally specify an array of imagePullSecrets (secrets must be manually created in the namespace)
    ## ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/
    ## Example:
    ## pullSecrets:
    ##   - myRegistryKeySecretName
    ##
    pullSecrets: []
  ## Init Container resource requests and limits
  ## ref: https://kubernetes.io/docs/user-guide/compute-resources/
  ## We usually recommend not to specify default resources and to leave this as a conscious
  ## choice for the user. This also increases chances charts run on environments with little
  ## resources, such as Minikube. If you do want to specify resources, uncomment the following
  ## lines, adjust them as necessary, and remove the curly braces after 'resources:'.
  ## @param volumePermissions.resources.limits Init container volume-permissions resource limits
  ## @param volumePermissions.resources.requests Init container volume-permissions resource requests
  ##
  resources:
    ## Example:
    ## limits:
    ##    cpu: 100m
    ##    memory: 128Mi
    limits: {}
    ## Examples:
    ## requests:
    ##    cpu: 100m
    ##    memory: 128Mi
    requests: {}
## Configure the ingress resource that allows you to access the
## Logstash installation. Set up the URL
## ref: https://kubernetes.io/docs/user-guide/ingress/
##
ingress:
  ## @param ingress.enabled Enable ingress controller resource
  ##
  enabled: false
  ## @param ingress.selfSigned Create a TLS secret for this ingress record using self-signed certificates generated by Helm
  ##
  selfSigned: false
  ## @param ingress.pathType Ingress Path type
  ##
  pathType: ImplementationSpecific
  ## @param ingress.apiVersion Override API Version (automatically detected if not set)
  ##
  apiVersion: ""
  ## @param ingress.hostname Default host for the ingress resource
  ##
  hostname: logstash.local
  ## @param ingress.path The Path to Logstash. You may need to set this to '/*' in order to use this with ALB ingress controllers.
  ##
  path: /
  ## @param ingress.annotations Additional annotations for the Ingress resource. To enable certificate autogeneration, place here your cert-manager annotations.
  ## For a full list of possible ingress annotations, please see
  ## ref: https://github.com/kubernetes/ingress-nginx/blob/master/docs/user-guide/nginx-configuration/annotations.md
  ## Use this parameter to set the required annotations for cert-manager, see
  ## ref: https://cert-manager.io/docs/usage/ingress/#supported-annotations
  ##
  ## e.g:
  ## annotations:
  ##   kubernetes.io/ingress.class: nginx
  ##   cert-manager.io/cluster-issuer: cluster-issuer-name
  ##
  annotations: {}
  ## @param ingress.tls Enable TLS configuration for the hostname defined at ingress.hostname parameter
  ## TLS certificates will be retrieved from a TLS secret with name: {{- printf "%s-tls" .Values.ingress.hostname }}
  ## You can use the ingress.secrets parameter to create this TLS secret or relay on cert-manager to create it
  ##
  tls: false
  ## @param ingress.extraHosts The list of additional hostnames to be covered with this ingress record.
  ## Most likely the hostname above will be enough, but in the event more hosts are needed, this is an array
  ## extraHosts:
  ##   - name: logstash.local
  ##     path: /
  ##
  extraHosts: []
  ## @param ingress.extraPaths Any additional arbitrary paths that may need to be added to the ingress under the main host.
  ## For example: The ALB ingress controller requires a special rule for handling SSL redirection.
  ## extraPaths:
  ## - path: /*
  ##   backend:
  ##     serviceName: ssl-redirect
  ##     servicePort: use-annotation
  ##
  extraPaths: []
  ## @param ingress.extraRules The list of additional rules to be added to this ingress record. Evaluated as a template
  ## Useful when looking for additional customization, such as using different backend
  ##
  extraRules: []
  ## @param ingress.extraTls The tls configuration for additional hostnames to be covered with this ingress record.
  ## see: https://kubernetes.io/docs/concepts/services-networking/ingress/#tls
  ## extraTls:
  ## - hosts:
  ##     - logstash.local
  ##   secretName: logstash.local-tls
  ##
  extraTls: []
  ## @param ingress.secrets If you're providing your own certificates, please use this to add the certificates as secrets
  ## key and certificate should start with -----BEGIN CERTIFICATE----- or
  ## -----BEGIN RSA PRIVATE KEY-----
  ##
  ## name should line up with a tlsSecret set further up
  ## If you're using cert-manager, this is unneeded, as it will create the secret for you if it is not set
  ##
  ## It is also possible to create and manage the certificates outside of this helm chart
  ## Please see README.md for more information
  ##
  ## secrets:
  ##   - name: logstash.local-tls
  ##     key:
  ##     certificate:
  ##
  secrets: []
  ## @param ingress.ingressClassName IngressClass that will be be used to implement the Ingress (Kubernetes 1.18+)
  ## This is supported in Kubernetes 1.18+ and required if you have more than one IngressClass marked as the default for your cluster .
  ## ref: https://kubernetes.io/blog/2020/04/02/improvements-to-the-ingress-api-in-kubernetes-1.18/
  ##
  ingressClassName: ""
## Pod disruption budget configuration
## ref: https://kubernetes.io/docs/concepts/workloads/pods/disruptions/
## @param pdb.create If true, create a pod disruption budget for pods.
## @param pdb.minAvailable Minimum number / percentage of pods that should remain scheduled
## @param pdb.maxUnavailable Maximum number / percentage of pods that may be made unavailable
##
pdb:
  create: false
  minAvailable: 1
  maxUnavailable: ""
```
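Only a handful of these defaults are changed in the configuration steps that follow. Before installing, you can render the chart with your override file to see which objects would be created, without installing anything into the cluster. This is a sketch: the function names are hypothetical, and it assumes Helm 3, the release name and namespace `logstash`, and chart version 5.4.1. `helm template` itself is a standard Helm command that renders manifests locally.

```shell
#!/bin/sh
# Hypothetical helpers: render the chart with an override file, without installing it.
preview_logstash() {
  values_file="${1:-values.yaml}"
  if [ ! -f "$values_file" ]; then
    echo "values file not found: $values_file" >&2
    return 1
  fi
  # helm template renders the manifests locally instead of installing a release
  helm template logstash bitnami/logstash \
    --version 5.4.1 \
    --namespace logstash \
    -f "$values_file"
}

# Count the rendered top-level object kinds (e.g. Service, StatefulSet)
rendered_kinds() {
  preview_logstash "$@" | grep '^kind:' | sort | uniq -c
}
```

Running `rendered_kinds values.yaml` after each edit is a cheap way to confirm that, for instance, persistence or extra Service ports produce the objects you expect.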

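- Configuration 1: choose the StorageClass

Since we are on Alibaba Cloud, the storage has to come from Alibaba Cloud as well. The options include cloud disks, NAS and OSS. A cloud disk has a 20Gi minimum, far more than we need, so NAS is enough here. NAS has to be activated and needs its own StorageClass; I described how to create an Alibaba Cloud NAS StorageClass in an earlier article, and following it gives you a StorageClass named alicloud-nas-fs. With that in place, the PVC (PersistentVolumeClaim) binds to the StorageClass and a PV (PersistentVolume) is created dynamically. Note that the configuration below uses the StorageClass name alibabacloud-cnfs-nas; set `storageClass` to whatever name your NAS StorageClass actually has.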
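Before applying the persistence values that follow, it may be worth confirming that the StorageClass really exists in the cluster, and, after installation, that the PVC reached the `Bound` phase (meaning a PV was provisioned for it). A minimal sketch with hypothetical function names, assuming kubectl is configured for your cluster and the namespace is `logstash`:

```shell
#!/bin/sh
# Hypothetical helpers for checking NAS-backed storage.

# Succeeds only if the named StorageClass exists (kubectl exits non-zero otherwise).
storageclass_exists() {
  kubectl get storageclass "$1" >/dev/null 2>&1
}

# Prints "<pvc-name>=<phase>" for each PVC in the namespace; expect "Bound".
pvc_phases() {
  kubectl get pvc -n "${1:-logstash}" \
    -o jsonpath='{range .items[*]}{.metadata.name}={.status.phase}{"\n"}{end}'
}
```

For example, `storageclass_exists alibabacloud-cnfs-nas || echo "StorageClass missing"` before installing, and `pvc_phases logstash` afterwards.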

```yaml
persistence:
  enabled: true
  # NAS
  storageClass: alibabacloud-cnfs-nas
  size: 2Gi
```

- Configuration 2: configure the Service

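Set the Service type to NodePort so it can also be reached from outside the cluster, and uncomment the syslog-udp and syslog-tcp ports that the chart ships commented out: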

```yaml
service:
  type: NodePort
  ports:
    - name: http
      port: 8080
      targetPort: http
      protocol: TCP
    - name: syslog-udp
      port: 1514
      targetPort: syslog-udp
      protocol: UDP
    - name: syslog-tcp
      port: 1514
      targetPort: syslog-tcp
      protocol: TCP
```

- Configuration 3: configure containerPorts

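Likewise, uncomment the commented-out entries in `containerPorts` (the Service's `targetPort` values above refer to these port names):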

```yaml
containerPorts:
  - name: http
    containerPort: 8080
    protocol: TCP
  - name: monitoring
    containerPort: 9600
    protocol: TCP
  - name: syslog-udp
    containerPort: 1514
    protocol: UDP
  - name: syslog-tcp
    containerPort: 1514
    protocol: TCP
```

- Configuration 4: configure input and output

In `input`, set the codec to json_lines and set `type => syslog` (type is just a custom tag and can be any name, e.g. abc). In `output`, configure the Elasticsearch connection details:

```yaml
input: |-
  udp {
    port => 1514
    type => syslog
    codec => json_lines
  }
  tcp {
    port => 1514
    type => syslog
    codec => json_lines
  }
  http { port => 8080 }
output: |-
  if [active] != "" {
    elasticsearch {
      hosts => ["xxx.xxx.xxx.xxx:xxxx"]
      index => "%{active}-logs-%{+YYYY.MM.dd}"
    }
  } else {
    elasticsearch {
      hosts => ["xxx.xxx.xxx.xxx:xxxx"]
      index => "ignore-logs-%{+YYYY.MM.dd}"
    }
  }
  stdout { }
```

In the output above, `active` is a variable set in logback and is used to build the Elasticsearch index name. I will cover building a logging system with logback + logstash in a later article. If you don't need it, simply drop the conditional:

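```yaml
output: |-
  elasticsearch {
    hosts => ["xxx.xxx.xxx.xxx:xxxx"]
    index => "ignore-logs-%{+YYYY.MM.dd}"
  }
  stdout { }
```

With the configuration done, the complete values.yaml is shown below. It differs slightly from the snippets above: it also sets `global.storageClass`, its inputs use `codec => json` rather than `json_lines`, and its fallback index is `logs-%{+YYYY.MM.dd}` rather than `ignore-logs-%{+YYYY.MM.dd}`.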

values.yaml

```yaml
global:
  storageClass: alibabacloud-cnfs-nas
service:
  type: NodePort
  ports:
    - name: http
      port: 8080
      targetPort: http
      protocol: TCP
    - name: syslog-udp
      port: 1514
      targetPort: syslog-udp
      protocol: UDP
    - name: syslog-tcp
      port: 1514
      targetPort: syslog-tcp
      protocol: TCP
persistence:
  enabled: true
  # NAS
  storageClass: alibabacloud-cnfs-nas
  size: 2Gi
containerPorts:
  - name: http
    containerPort: 8080
    protocol: TCP
  - name: monitoring
    containerPort: 9600
    protocol: TCP
  - name: syslog-udp
    containerPort: 1514
    protocol: UDP
  - name: syslog-tcp
    containerPort: 1514
    protocol: TCP
input: |-
  udp {
    port => 1514
    type => syslog
    codec => json
  }
  tcp {
    port => 1514
    type => syslog
    codec => json
  }
  http { port => 8080 }
output: |-
  if [active] != "" {
    elasticsearch {
      hosts => ["xxx.xxx.xxx.xxx:xxxx"]
      index => "%{active}-logs-%{+YYYY.MM.dd}"
    }
  } else {
    elasticsearch {
      hosts => ["xxx.xxx.xxx.xxx:xxxx"]
      index => "logs-%{+YYYY.MM.dd}"
    }
  }
  stdout { }
```

Next, create Logstash with the following Helm commands:

```shell
$ kubectl create namespace logstash
$ helm install -f values.yaml logstash bitnami/logstash --namespace logstash
```

On success, Helm prints output like the following:

```
NAME: logstash
LAST DEPLOYED: Wed Sep 27 10:58:35 2023
NAMESPACE: logstash
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
CHART NAME: logstash
CHART VERSION: 5.4.1
APP VERSION: 8.7.1

** Please be patient while the chart is being deployed **

Logstash can be accessed through following DNS names from within your cluster:

    Logstash: logstash.logstash.svc.cluster.local

To access Logstash from outside the cluster execute the following commands:

    export NODE_PORT=$(kubectl get --namespace logstash -o jsonpath="{.spec.ports[0].nodePort}" services logstash)
    export NODE_IP=$(kubectl get nodes --namespace logstash -o jsonpath="{.items[0].status.addresses[0].address}")
    echo "http://${NODE_IP}:${NODE_PORT}"
```

Now wait for Logstash to be created. You can check whether it is up with:

```shell
$ kubectl get all -n logstash
NAME             READY   STATUS    RESTARTS   AGE
pod/logstash-0   1/1     Running   0          34m

NAME                        TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)                                        AGE
service/logstash            NodePort    192.168.132.104   <none>        8080:32345/TCP,1514:30227/UDP,1514:30227/TCP   34m
service/logstash-headless   ClusterIP   None              <none>        8080/TCP,1514/UDP,1514/TCP                     34m

NAME                        READY   AGE
statefulset.apps/logstash   1/1     34m
```

Get the IP and port

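Obtain them with the following commands:

```shell
export NODE_PORT=$(kubectl get --namespace logstash -o jsonpath="{.spec.ports[0].nodePort}" services logstash)
export NODE_IP=$(kubectl get nodes --namespace logstash -o jsonpath="{.items[0].status.addresses[0].address}")
echo "http://${NODE_IP}:${NODE_PORT}"
```

Output (`.spec.ports[0]` is the first Service port, so this is the NodePort of `http`):

```
http://xxx.xxx.xxx.xxx:xxxx
```

Use curl to test whether Logstash was installed successfully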

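1. First, forward Logstash's HTTP port 8080 to your local machine:

```shell
kubectl port-forward service/logstash 8080:8080 -n logstash
```

2. In another terminal, use curl to send a test event:

```shell
curl -X POST -d '{"message": "Hello World","env": "dev"}' http://localhost:8080
```

Note: in the `kubectl get all` output above, NodePort 32345 maps to the HTTP port (8080), while 30227 maps to the syslog port 1514, which TCP and UDP share. Don't mix them up.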

3. Check the Logstash log

Run:

```shell
kubectl logs pod/logstash-0 -n logstash
```

The log shows events like the following, sent over TCP by a logback-based application (note `"type" => "syslog"`, set by the udp/tcp inputs):

```
{
    "level" => "INFO",
    "context" => "",
    "message" => "[fixed-f9f3f5bc-ff64-460e-bcba-6f75983ef2ec-47.97.208.153_31226] [subscribe] gatewayserver-dev.yaml+DEFAULT_GROUP+f9f3f5bc-ff64-460e-bcba-6f75983ef2ec",
    "thread" => "main",
    "service" => "gatewayserver",
    "logger" => "com.alibaba.nacos.client.config.impl.ClientWorker",
    "active" => "dev",
    "@timestamp" => 2023-09-27T06:50:25.243Z,
    "timestamp" => "2023-09-27 14:50:25,243",
    "@version" => "1",
    "type" => "syslog"
}
{
    "level" => "INFO",
    "context" => "",
    "message" => "[fixed-f9f3f5bc-ff64-460e-bcba-6f75983ef2ec-47.97.208.153_31226] [add-listener] ok, tenant=f9f3f5bc-ff64-460e-bcba-6f75983ef2ec, dataId=gatewayserver.yaml, group=DEFAULT_GROUP, cnt=1",
    "thread" => "main",
    "service" => "gatewayserver",
    "logger" => "com.alibaba.nacos.client.config.impl.CacheData",
    "active" => "dev",
    "@timestamp" => 2023-09-27T06:50:25.243Z,
    "timestamp" => "2023-09-27 14:50:25,243",
    "@version" => "1",
    "type" => "syslog"
}
{
    "level" => "INFO",
    "context" => "",
    "message" => "[Nacos Config] Listening config: dataId=gatewayserver, group=DEFAULT_GROUP",
    "thread" => "main",
    "service" => "gatewayserver",
    "logger" => "com.alibaba.cloud.nacos.refresh.NacosContextRefresher",
    "active" => "dev",
    "@timestamp" => 2023-09-27T06:50:25.243Z,
    "timestamp" => "2023-09-27 14:50:25,243",
    "@version" => "1",
    "type" => "syslog"
}
{
    "level" => "INFO",
    "context" => "",
    "message" => "[9958260f-3313-4e5a-9506-24c140c7d6c1] Receive server push request, request = NotifySubscriberRequest, requestId = 35420",
    "thread" => "nacos-grpc-client-executor-47.97.208.153-30",
    "service" => "gatewayserver",
    "logger" => "com.alibaba.nacos.common.remote.client",
    "active" => "dev",
    "@timestamp" => 2023-09-27T06:50:25.552Z,
    "timestamp" => "2023-09-27 14:50:25,552",
    "@version" => "1",
    "type" => "syslog"
}
{
    "level" => "INFO",
    "context" => "",
    "message" => "[9958260f-3313-4e5a-9506-24c140c7d6c1] Ack server push request, request = NotifySubscriberRequest, requestId = 35420",
    "thread" => "nacos-grpc-client-executor-47.97.208.153-30",
    "service" => "gatewayserver",
    "logger" => "com.alibaba.nacos.common.remote.client",
    "active" => "dev",
    "@timestamp" => 2023-09-27T06:50:25.555Z,
    "timestamp" => "2023-09-27 14:50:25,555",
    "@version" => "1",
    "type" => "syslog"
}
{
    "level" => "INFO",
    "context" => "",
    "message" => "new ips(1) service: DEFAULT_GROUP@@gatewayserver -> [{\"ip\":\"172.16.12.11\",\"port\":7777,\"weight\":1.0,\"healthy\":true,\"enabled\":true,\"ephemeral\":true,\"clusterName\":\"DEFAULT\",\"serviceName\":\"DEFAULT_GROUP@@gatewayserver\",\"metadata\":{\"preserved.register.source\":\"SPRING_CLOUD\"},\"instanceHeartBeatInterval\":5000,\"instanceHeartBeatTimeOut\":15000,\"ipDeleteTimeout\":30000}]",
    "thread" => "nacos-grpc-client-executor-47.97.208.153-30",
    "service" => "gatewayserver",
    "logger" => "com.alibaba.nacos.client.naming",
    "active" => "dev",
    "@timestamp" => 2023-09-27T06:50:25.554Z,
    "timestamp" => "2023-09-27 14:50:25,554",
    "@version" => "1",
    "type" => "syslog"
}
{
    "level" => "INFO",
    "context" => "",
    "message" => "current ips:(1) service: DEFAULT_GROUP@@gatewayserver -> [{\"ip\":\"172.16.12.11\",\"port\":7777,\"weight\":1.0,\"healthy\":true,\"enabled\":true,\"ephemeral\":true,\"clusterName\":\"DEFAULT\",\"serviceName\":\"DEFAULT_GROUP@@gatewayserver\",\"metadata\":{\"preserved.register.source\":\"SPRING_CLOUD\"},\"instanceHeartBeatInterval\":5000,\"instanceHeartBeatTimeOut\":15000,\"ipDeleteTimeout\":30000}]",
    "thread" => "nacos-grpc-client-executor-47.97.208.153-30",
    "service" => "gatewayserver",
    "logger" => "com.alibaba.nacos.client.naming",
    "active" => "dev",
    "@timestamp" => 2023-09-27T06:50:25.554Z,
    "timestamp" => "2023-09-27 14:50:25,554",
    "@version" => "1",
    "type" => "syslog"
}
```

Deletion

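1. To delete it, go to the Helm applications page in the Alibaba Cloud console, select the release and click Delete.
2. Remember to delete the PVC as well; otherwise you will keep being billed for the storage.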
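The same cleanup can be done from the command line. PVCs created from a StatefulSet's volumeClaimTemplates are not removed by `helm uninstall`, which is why they keep incurring charges. A sketch with a hypothetical function name, assuming the release and namespace are both named `logstash` and that the namespace holds nothing else you want to keep, since it deletes every PVC in it:

```shell
#!/bin/sh
# Hypothetical cleanup helper. WARNING: deletes *all* PVCs in the namespace.
cleanup_logstash() {
  ns="${1:-logstash}"
  helm uninstall logstash -n "$ns" || return 1
  # PVCs from volumeClaimTemplates survive `helm uninstall`; remove them explicitly
  kubectl delete pvc --all -n "$ns" || return 1
  # If the StorageClass uses reclaimPolicy Retain, the PV (and its NAS data) remains;
  # list PVs so any leftovers can be reviewed and deleted by hand
  kubectl get pv
}
```

If the namespace was created only for Logstash, `kubectl delete namespace logstash` after `helm uninstall` removes the PVCs along with everything else in it.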

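Summary

1. Installing a Logstash cluster with Helm is very convenient.
2. For access from a local machine during development, you can set the Service type to NodePort; in production, prefer the default ClusterIP.
3. Alibaba Cloud disks require at least 20G, which we don't need, so NAS is more cost-effective (unless money is no object).
4. logback connects to Logstash over TCP, whereas my tests used HTTP; keep the two apart!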
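To exercise both paths from point 4, here is a sketch that sends one event over HTTP and one over the TCP input. Two details matter: the `http` input decodes a body as JSON when the request carries `Content-Type: application/json` (curl's `-d` alone sends `application/x-www-form-urlencoded`, so the body would likely be kept as a plain string), and a `json_lines` TCP input (as in Configuration 4) expects each JSON object to end with a newline. The function names are hypothetical; it assumes `curl`, `nc` (netcat) and `kubectl` are installed, and that the host is a node IP or `localhost` behind `kubectl port-forward`.

```shell
#!/bin/sh
# Hypothetical helpers for sending one test event to each Logstash input.
# Assumes curl, nc (netcat) and kubectl are installed; release/namespace "logstash".

# NodePort assigned to a named Service port, e.g. "http" or "syslog-tcp".
nodeport_of() {
  kubectl get svc logstash -n logstash \
    -o jsonpath="{.spec.ports[?(@.name==\"$1\")].nodePort}"
}

# One JSON event; assumes message/active contain no quotes or backslashes.
make_event() {
  printf '{"message":"%s","active":"%s"}' "$1" "$2"
}

# HTTP input: the JSON Content-Type lets the http input parse the body as JSON.
# $1 = base URL (e.g. http://localhost:8080), $2 = message, $3 = active
post_http_event() {
  curl -sS -X POST -H 'Content-Type: application/json' \
    -d "$(make_event "$2" "$3")" "$1"
}

# TCP input with codec json_lines: one JSON object per line, newline-terminated.
# $1 = host, $2 = port, $3 = message, $4 = active
send_tcp_event() {
  printf '%s\n' "$(make_event "$3" "$4")" | nc -w 2 "$1" "$2"
}
```

For example, `post_http_event http://localhost:8080 "Hello World" dev` with the port-forward running, or `send_tcp_event "$NODE_IP" "$(nodeport_of syslog-tcp)" "Hello World" dev` through the NodePort. Because the event carries `active`, it should land in the `dev-logs-...` index rather than the fallback one.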
