I was working on a pull request to improve the performance of executemany() in asyncpg, who talks to the PostgreSQL server directly in its wire protocol (comparing to psycopg2 who uses libpq to speak the wire language for itself). Though the documentation for the protocol explained mostly everything, I found it a bit boring to read and it's easy to miss some very important details if you don't read every word. So I shall try to explain the message flow again here, hopefully in a nutshell.

I've also wrote some PoC code in Python for this post, and the source of the diagrams can be found here. please feel free to download and play with it.

The Start

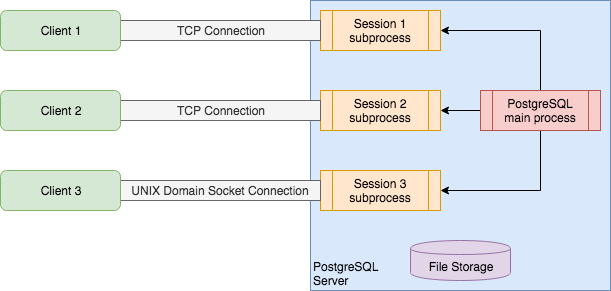

Most PostgreSQL clients exchange data with the server through a single streaming connection - a TCP connection or UNIX domain socket connection for example. Each connection keeps exactly one Session on the server side. Sessions are maintained by separate processes, so they are isolated from each other, like this:

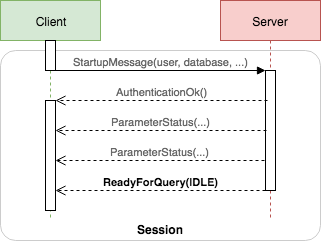

When the connection is established, the client must authenticate itself to the server, and tell the server which database to use for this Session, by sending some Messages defined in the protocol back and forth. Once the authentication process is done successfully, the server always send a ReadyForQuery Message to the client indicating that it's good to move forward.

In practice, the actual start-up message flow may be more complex than this. I'm skipping the details here and jumping to the query message flow.

Simple Query

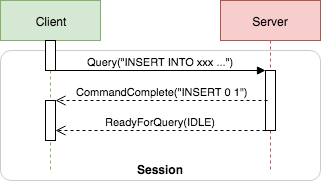

Client may initiate two types of queries: the Simple Query or the Extended Query. We'll start with the simple one. It is actually as simple as this:

As you can see, Simple Query starts with the client sending a Query Message with the actual SQL command. Once received, the server will process the SQL and execute it, and return the result status string back to the client in a CommandComplete Message. Likewise, the server will send a ReadyForQuery Message at the end, telling the client that it is ready for more queries.

Pipelining, Batching and Transactions

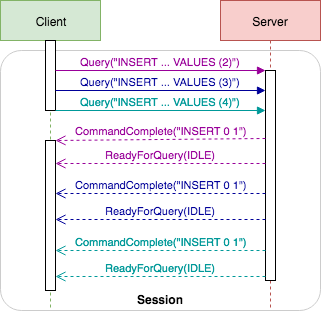

However, it is unnecessary for the client to wait for ReadyForQuery to send the next query - server sends it only to indicate that the job for the query is done, there won't be further responses returned for that query. The server can handle all the queries coming from the stream like a pipeline worker, returning the responses in the same order. So as far as the client could recognize who is who, it is actually okay to do this:

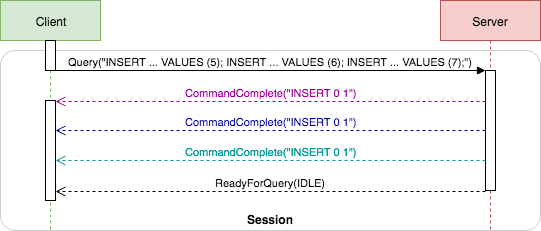

Simple Query also allows one Query Message with multiple SQL commands separated with semicolons (;). So here is a similar way to insert 3 rows with a single Query Message:

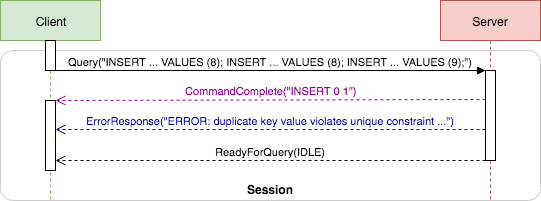

Much less ReadyForQuery noises, nice! However, this approach is actually different than the previous one. The server shall execute all SQL commands from the same Query Message in an implicit transaction, unless there is an explicit transaction in current Session. In this case, failure from any of the three SQL commands will rollback the implicit transaction, thus none of the commands could insert anything. For example:

Here the second INSERT ... VALUES (8) fails with a unique constraint check error, then the implicit transaction gets rolled back, skipping the third INSERT ... VALUES (9) at all.

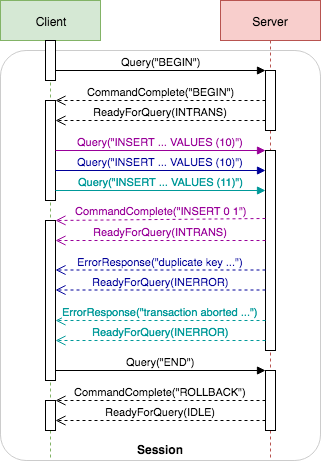

Instead in previous example with three Query Messages, each SQL command lives in their own transaction, so one failing command couldn't stop another from inserting successfully. In order to achieve the same transactional effect, we need to create an explicit transaction with the BEGIN...END SQL commands:

As you might have noticed, the ReadyForQuery Messages now provide different values: INTRANS means the Session has an active transaction when the server sends the Message, INERROR means the Session is in a failed transaction (indicating that further queries like INSERT ... 11 will be rejected until the transaction is closed), and IDLE means the Session is not in a transaction block any more.

You might also have noticed that, this example had two ErrorResponses, but previous example had only one. This is because ReadyForQuery cleared the "error returned, skipping all messages" state on the server side. For example, if we combine the INSERT ... 11 into previous Message like this: Query("INSERT ... VALUES (10); INSERT ... VALUES (11)"), then we'll have one ErrorResponse again. We'll get to this again later.

Row Sets

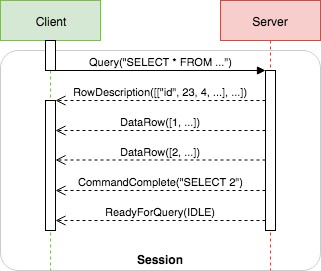

Now let's take a look at the SQL that actually returns data, e.g. a SELECT command:

In this diagram, there are two more types of Messages before the usual CommandComplete:

-

RowDescriptioncontains all information of each column of the result, including column name, column type and more. -

DataRowis simply one data row of the result, including all values of each column in the same order as described in theRowDescriptionMessage.

Obviously DataRow Message may repeat as many times as needed. Also, there can be no DataRow Message at all, indicating an empty result with "table header" (RowDescription) only.

Different types of SQL commands may also output other types of responses like CopyInResponse, CopyOutResponse or NoticeResponse, but I'm not covering them here.

Behind the Scenes

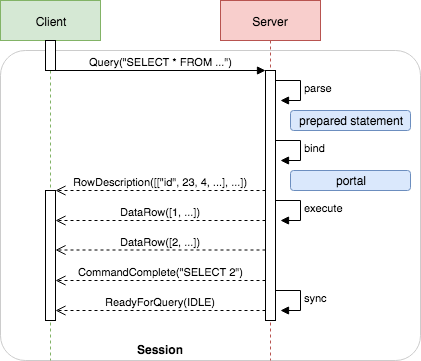

On the backend, the PostgreSQL server is actually doing more to generate such responses. The following diagram describes roughly how this process looks like:

- On receiving a

QueryMessage, the server will firstly start an implicit transaction if there is no transaction in the Session. -

parse - PostgreSQL server will then parse the given SQL string into a prepared statement in the Session. And yes it is the same kind of prepared statement as you can generate with SQL

PREPARE, only that this one here has no name. Thus it is also called unnamed prepared statement, or unnamed statement in short. -

bind - Then the server will create a portal in current transaction with above prepared statement, and run the query planning. Likewise, portal is also known as server-side cursor, which you can create with SQL

DECLARE CURSOR. Similarly the portal in Simple Query is an unnamed portal. - With a portal, the server has enough knowledge about the result, so it will send over a

RowDescriptionMessage. -

execute - Eventually, the server executes the portal, and result rows get generated and sent to the client. When the cursor hits the end, a

CommandCompleteMessage is sent. -

sync - At last, the server closes the implicit transaction - that means either to commit if all good, or rollback on error. However, it will not close an explicit transaction, because in that case

Query("BEGIN")won't work. Finally, aReadyForQueryMessage is sent to the client after all this.

If there are multiple SQL commands in one Query Message, above process is simply repeated several times, but sync runs only once at the very end so that all SQL commands share the same implicit transaction.

Extended Query

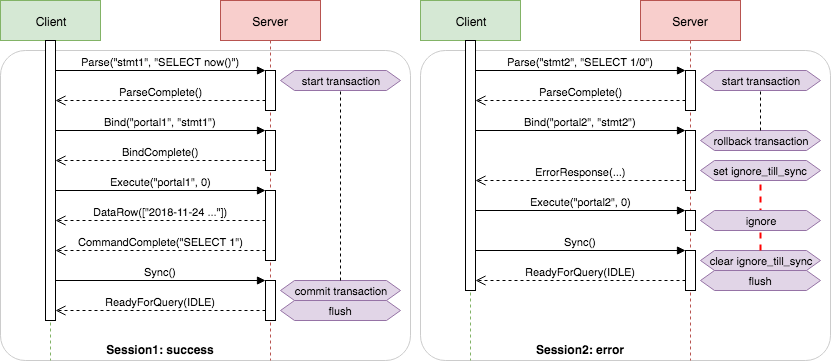

For a PostgreSQL server, Extended Query runs in the same way as above - only that it breaks down each step into separate Messages, allowing fine control to gain much more possibilities. For the same example with Extended Query:

In brief, we parsed the SQL string into stmt1, bound it into portal1, described the portal for RowDescription, executed the portal for DataRows, then put an end with Sync. The difference is, the prepared statement and portal now have names. It is possible to create multiple prepared statements or portals and use them alternatively.

Pipelining

Actually in previous diagram, the client wasn't waiting for any responses to send the next Message. Because the responses won't arrive at all until Sync is issued (if everything goes well). We put it that way to better explain the consequences of each Message. In fact the network sequence is more like this:

It is okay for the client to send each Message one by one, or concatenate them into a big buffer and send altogether. Because the server will do the same buffering thing to save round-trip time, and only flush the buffer into the wire when Sync or Flush is issued, or as soon as an error occurred.

Comparing to Sync generates ReadyForQuery, Flush generates nothing at all but only flushes the server-side buffer.

Transaction

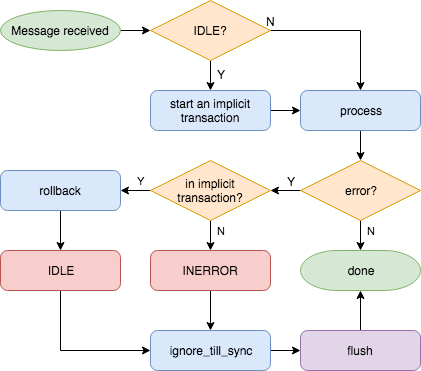

Because Extended Query is simply Simple Query extended, some similar rules we discussed previously also apply here. Take implicit transaction for example: within a Session when the PostgreSQL server receives a Message (one of Parse, Bind, Execute or Describe) from the client, it will firstly check current transaction state. If there was no transaction (either explicit or implicit - in IDLE state), it will start an implicit one immediately before handling the Message.

Handling Messages may cause errors. In implicit transactions, errors lead to rollbacks; but in explicit transactions, they put the Session into INERROR state:

In addition for extended query, you may issue as many Messages as you want before Sync - you can Parse 5 different SQLs, Bind 3 of them, and Execute them all. But a failing Message will set the Session into ignore_till_sync mode, meaning that the server will ignore any Message until Sync is found. This is similar to using one Simple Query Message to execute many SQLs joint with semicolons (;), one failing SQL cause the rest ignored. At last, errors always cause an immediate flush of the server-side buffer.

If the Message turns out to be a Sync, it shall clear the ignore_till_sync mode first. Then the server will check if the current transaction is an implicit one. If yes, it will try to commit the transaction. Sync won't touch an explicit transaction, but it will send a ReadyForQuery response and flush the server-side buffer anyhow:

Therefore, Sync is the normal way closing an implicit transaction - it would commit a good transaction, or reset an aborted transaction to normal:

You may also issue an explicit COMMIT or ROLLBACK SQL command to end an implicit transaction in the way you want. But 1), the PostgreSQL server shall complain about it with an extra NoticeResponse warning, and 2), in ignore_till_sync mode, the server will ignore any Messages other than Sync, even if it is a ROLLBACK. Therefore, it is only meaningful to rollback an implicit transaction with an explicit ROLLBACK while there was no error:

Behind the scene, the PostgreSQL server is using a much more complex state machine to maintain the transaction block state. This diagram only shows an essential part of it:

Note that the purple tags are actual transaction boundaries. The transaction state we get from ReadyForQuery Message are simply judged by the colors of each state. Please note that, if you get a ReadyForQuery from an implicit transaction (the two states in dashed frame), it always states that the transaction is IDLE because Sync does the commit first. I guess that's why the STARTED state is actually categorized as an IDLE state in PostgreSQL source code, but I'm marking it as INTRANS anyway here because the transaction did start beforehand. It is also interesting to see that, any transaction starts as an implicit one - only BEGIN commands (or its varient) could mark it as an explicit transaction.

In explicit transactions, Extended Query may also trigger the ignore_till_sync mode with a failing Message. As Sync won't rollback the explicit transaction, it is only used to clear the ignore_till_sync mode, making space for ROLLBACK command. Therefore, it is rare to execute multiple SQL commands within one Sync. The usual pattern is Bind -> Execute -> Sync, with optional Parse and Describe. Externally, the state transitioning looks like this:

If an operation is not seen for a state in this diagram, it means this missing operation won't lead to a state change. For example, issuing a Sync in explicit INTRANS has no effect but flushing the buffer. And success means any other Message that runs successfully (e.g. executing SELECT now()), while error means it results with an error (e.g. executing SELECT 1/0).

Additionally, please be aware that, using Query is identical to call Parse -> Bind -> Describe -> Execute -> Sync. If you are mixing Simple Query in an Extended Query, the Query will close the implicit transaction in Extended Query.

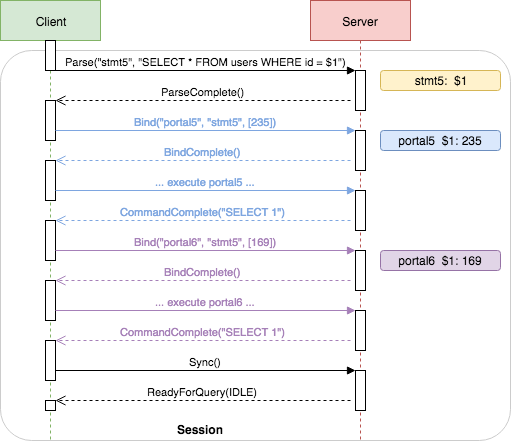

Parameter

You may wonder why having the portal trouble at all when there is already prepared statement - why didn't PostgreSQL merge the cursor feature into prepared statements? The reason is about caching prepared statements. It is a waste to repeatingly parse the same SQL over and over again: say for a web server we need to load user data for every request, we may use SELECT * FROM users WHERE id = 235 for user 235, and SELECT * FROM users WHERE id = 169 for user 169. The only difference in SQL is the user's ID - if we can turn that into a parameter, we can then parse the SQL string only once and reuse the prepared statement for different users.

This is exactly how portal helps: it allows binding the actual parameter values with the same prepared statements into different portals. For example:

The parameter placeholder is $1, indicating the first parameter in the list provided in Bind. Each portal is an actual execution handle of the query with specific parameter values. You can have as many portals as you need, as far as the stmt5 lives.

Lifetime

Named prepared statements live until the end of the Session, unless explicitly freed with the Close("S", stmt1") Message ("S" for Statement). That means if not closed, you may use stmt1 whenever you want within the same TCP connection. But before closing, you cannot create another prepared statement with the same again in the same Session, doing so will end up with an ErrorResponse.

Prepared statements are usually managed by PostgreSQL drivers like asyncpg in a way of LRU cache, in order to save resources parsing the same SQL strings.

However, named portals cannot live that long - they lasts until the end of the transaction if not explicitly closed with Close("P", "portal1") Message ("P" for Portal). In previous example, there was only one implicit transaction that ended at Sync(), so it is possible to reuse the names portal1 and portal2 after that.

It is possible to manually create or close unnamed prepared statements and portals in Extended Query - just setting the name to an empty string ("") will do. They are identical to named statements and portals except for one thing: it is not an error to create a new unnamed statement or portal if an existing one wasn't closed - it's simply overwritten. This is extremely convenient if you need some one-time use statements or portals - just create one and use it at once, then forget about it. But be aware that, Simple Query also creates unnamed statements and portals, mixing using it will cause unnamed statements or portals you created overwritten.

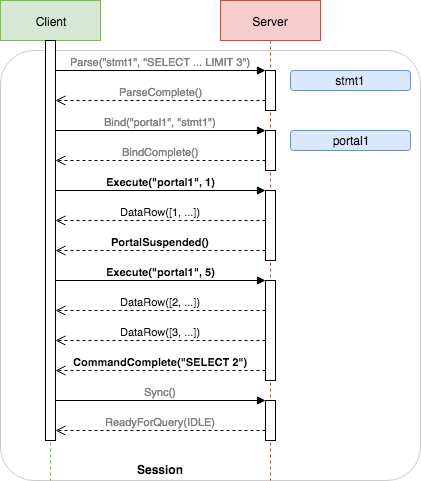

Server-side Cursor

If not closed, portals may live throughout a transaction. It is designed this way so that it can yield results incrementally like a cursor. Note the second parameter 0 in Execute Messages previously - it meant to fetch all results. If given a positive number, it will fetch at most that many rows from the result set. For other SQL commands that doesn't return results, this parameter has no effect - the command is always executed to completion.

If the limit is reached before the end of the result, a PortalSuspended Message will be returned, like the first Execute in this diagram. But, receiving a PortalSuspended doesn't necessarily mean there are more rows to receive - the second fetch may also get an empty list if the limit number of the first fetch is exactly the same as the row count. It is also worth noting that, the final row count in CommandComplete Message reflects only the number of rows in the last fetch, not the total number of all the rows returned.

There is no MOVE equivalent in Extended Query to move the cursor without returning. Instead you may directly use the SQL command, using the portal name as cursor name.

Describe

The Describe Message can be used on either prepared statements or portals, in order to get information of them. This selection is specified by the first parameter, where P means portal, and S means statement.

Describing a portal generates a RowDescription response like the one we got in Simple Query. It is usually essential for processing following DataRows if any. It returns NoData if describing a portal that returns nothing like an UPDATE command without RETURNING.

Describing a statement is a bit complicated - it returns two Messages. The first is ParameterDescription which describes the parameters and their types in the given statement. The second is still a RowDescription (or NoData), but it may lack some typing information because the statement is not bound to specific parameters thus some types of variables are still unknown.

The End

There are still a lot more I didn't cover here like COPY, NOTIFY, TLS and more. But it's already been days to write those above and I'm already getting messy with part of it, so I'll end this with two more closing topics.

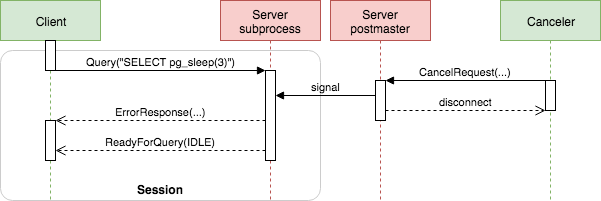

Cancel a Running Query

PostgreSQL allows canceling a running query from outside, by establishing a new connection to the server and send over a CancelRequest Message instead of the normal StartupMessage:

The Canceller may or may not be a a part of Client, as far as the CancelRequest contains essential subprocess ID and secret key of the target Session, which the Client could obtain during its initial authentication. Because the signal may arrive at any time, the cancellation is not a consistent event. That is to say, after sending the CancelRequest, the Client should be ready to face any possible responses including successful results or errors.

Bye

For a graceful shutdown, it's usually the Client sending a Terminate Message and close the connection. On receiving Terminate, the server will also close the connection and terminate the Session, rolling back any pending transactions. It is worth noting that, even though a broken connection without Terminate may do the same, the server would firstly finish any query at hand before noticing the disconnection, therefore separate SQL commands without transaction may still succeed during ungraceful shutdown.

References

https://www.pgcon.org/2014/sc...

https://www.postgresql.org/do...

https://www.postgresql.org/do...

**粗体** _斜体_ [链接](http://example.com) `代码` - 列表 > 引用。你还可以使用@来通知其他用户。