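Overview

Scrapy is a web crawling framework written in Python. It crawls websites and extracts structured data from their pages. It has a wide range of uses, including data mining, monitoring, and automated testing.

- Scrapy 1.1 added support for Python 3. (first half of 2016)

- Scrapy 1.5 dropped support for Python 3.3. (second half of 2017)

- Scrapy website: https://scrapy.org/

- Scrapy GitHub: https://github.com/scrapy/scrapy

- Scrapy PyPI: https://pypi.org/project/Scrapy/

- Scrapy official documentation: https://docs.scrapy.org/en/la...

- Scrapy Chinese site, 1.5 documentation: http://www.scrapyd.cn/doc/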

Hard-core tips

Basic request and response objects

```
request:  scrapy.http.request.Request

# HtmlResponse inherits from TextResponse, which inherits from Response
response: scrapy.http.response.html.HtmlResponse
response: scrapy.http.response.text.TextResponse
response: scrapy.http.response.Response
```

Print a spider's settings from inside the spider
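The loop that follows prints every setting and, for dict-like settings, each of their entries. If you wrap the same walk in a function that returns lines instead of printing, you can send the output to `self.logger` or test it on plain dicts. This is a sketch: `dump_settings` is a hypothetical helper. It relies on Scrapy's `BaseSettings` being a `MutableMapping`, so a `Mapping` check covers both settings objects and ordinary dicts.

```python
from collections.abc import Mapping


def dump_settings(settings):
    """Return one line per setting, indenting the entries of dict-like settings.

    `settings` may be a Scrapy settings object (a MutableMapping) or a plain dict.
    """
    lines = []
    for key in settings:
        value = settings.get(key)
        lines.append('%s %r' % (key, value))
        # dict-valued settings such as DOWNLOADER_MIDDLEWARES are nested mappings
        if isinstance(value, Mapping):
            for sub_key in value:
                lines.append('\t%s %r' % (sub_key, value.get(sub_key)))
    return lines
```

Inside a spider this could be used as `for line in dump_settings(self.settings): self.logger.debug(line)`.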

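```python
from scrapy.settings import BaseSettings

# inside a spider method, e.g. parse()
for k in self.settings:
    print(k, self.settings.get(k))
    # dict-valued settings (e.g. DOWNLOADER_MIDDLEWARES) are BaseSettings objects themselves
    if isinstance(self.settings.get(k), BaseSettings):
        for kk in self.settings.get(k):
            print('\t', kk, self.settings.get(k).get(kk))
```

Number of requests in the Scrapy queue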

(How to get the number of requests in queue in scrapy?)

```python
# scrapy.core.scheduler.Scheduler

# in a spider
len(self.crawler.engine.slot.scheduler)

# in a pipeline
len(spider.crawler.engine.slot.scheduler)
```

Number of requests Scrapy currently has in flight
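The queued count and the in-flight count both come from the same engine slot, so a small helper can report them together, for example from a periodic log call. This is a sketch: `crawl_progress` is a hypothetical name. `engine.slot` is an internal, undocumented attribute whose layout may differ between Scrapy versions.

```python
def crawl_progress(crawler):
    """Return queued and in-flight request counts for a running crawler.

    Reads the internal engine.slot attribute; treat the path as version-specific.
    """
    slot = crawler.engine.slot
    return {
        'queued': len(slot.scheduler),        # requests waiting in the scheduler
        'in_progress': len(slot.inprogress),  # requests handed to the downloader, not finished yet
    }
```

In a spider, pass `self.crawler`. In a pipeline, pass `spider.crawler`.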

```python
# scrapy.core.engine.Slot.inprogress is just a set

# in a spider
len(self.crawler.engine.slot.inprogress)

# in a pipeline
len(spider.crawler.engine.slot.inprogress)
```

Getting a pipeline object from inside a Scrapy spider

(How to get the pipeline object in Scrapy spider)
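The hand-off in the code that follows works with any pipeline. Here is a sketch that runs without MongoDB: a hypothetical in-memory pipeline that registers itself on the spider in `open_spider()`. After that, the spider can read from it or write to it directly. `MemoryPipeline`, `memory_pipeline` and `stored_count` are illustrative names. The pipeline still has to be enabled in `ITEM_PIPELINES` as usual.

```python
class MemoryPipeline(object):
    """Hypothetical pipeline that keeps items in memory and exposes itself to the spider."""

    def open_spider(self, spider):
        self.items = []
        # the hand-off: the spider can now call spider.memory_pipeline.<method>()
        spider.memory_pipeline = self

    def close_spider(self, spider):
        # drop the reference once the spider is done
        spider.memory_pipeline = None

    def process_item(self, item, spider):
        self.items.append(dict(item))
        return item  # pass the item on to later pipelines

    def stored_count(self):
        return len(self.items)
```

In a callback this could be used as `self.logger.info('%d items so far', self.memory_pipeline.stored_count())`.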

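```python
import pymongo
import scrapy


# pipeline
class MongoDBPipeline(object):
    def __init__(self, mongodb_server, mongodb_port):
        # pymongo.Connection no longer exists in PyMongo 3+; MongoClient replaces it
        self.connection = pymongo.MongoClient(mongodb_server, mongodb_port)

    @classmethod
    def from_crawler(cls, crawler):
        # read MONGODB_SERVER / MONGODB_PORT from the project settings
        return cls(crawler.settings.get('MONGODB_SERVER'),
                   crawler.settings.getint('MONGODB_PORT'))

    def get_date(self):
        pass

    def open_spider(self, spider):
        spider.myPipeline = self  # hand this pipeline instance to the spider

    def process_item(self, item, spider):
        return item


# spider
class MySpider(scrapy.Spider):
    name = 'my_spider'

    def __init__(self, *args, **kwargs):
        super(MySpider, self).__init__(*args, **kwargs)
        self.myPipeline = None

    def start_requests(self):
        # data can be stored through the pipeline directly;
        # `item` is a placeholder for whatever you want to save
        item = {}
        self.myPipeline.process_item(item, self)
        for url in self.start_urls:
            yield scrapy.Request(url)

    def parse(self, response):
        self.myPipeline.get_date()
```

Multiple cookie sessions in a single spider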

(Multiple cookie sessions per spider)

```python
# Scrapy supports tracking multiple cookie sessions in a single spider through
# the `cookiejar` Request meta key. By default it uses a single cookie jar (session),
# but you can pass an identifier to use several.
# (inside a spider method such as start_requests(); `urls` is your list of URLs)
for i, url in enumerate(urls):
    yield scrapy.Request(url, meta={'cookiejar': i},
                         callback=self.parse_page)

# Note that the cookiejar meta key is not "sticky": you have to keep passing it
# along in subsequent requests.
def parse_page(self, response):
    # do some processing
    return scrapy.Request("http://www.example.com/otherpage",
                          meta={'cookiejar': response.meta['cookiejar']},
                          callback=self.parse_other_page)
```

Conditions for a spider to finish

Closing spider (finished)

```python
# scrapy.core.engine.ExecutionEngine
def spider_is_idle(self, spider):
    if not self.scraper.slot.is_idle():
        # scraper is not idle
        return False

    if self.downloader.active:
        # downloader has pending requests
        return False

    if self.slot.start_requests is not None:
        # not all start requests are handled
        return False

    if self.slot.scheduler.has_pending_requests():
        # scheduler has pending requests
        return False

    return True
```
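The four checks above can also become a diagnostic that names whatever is keeping the spider open. This sketch reads the same internal attributes as the engine code. Newer Scrapy releases reorganize the engine, so treat these attribute paths as version-specific assumptions. `idle_blockers` is a hypothetical helper.

```python
def idle_blockers(engine):
    """Return the reasons why spider_is_idle() would currently be False.

    An empty list means the engine considers the spider idle.
    """
    reasons = []
    if not engine.scraper.slot.is_idle():
        reasons.append('scraper is not idle')
    if engine.downloader.active:
        reasons.append('downloader has pending requests')
    if engine.slot.start_requests is not None:
        reasons.append('not all start requests are handled')
    if engine.slot.scheduler.has_pending_requests():
        reasons.append('scheduler has pending requests')
    return reasons
```

From a spider: `self.logger.debug('idle blockers: %s', idle_blockers(self.crawler.engine))`.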

```python
# print these conditions from inside the spider
self.logger.debug('engine.scraper.slot.is_idle: %s' % repr(self.crawler.engine.scraper.slot.is_idle()))
self.logger.debug('\tengine.scraper.slot.active: %s' % repr(self.crawler.engine.scraper.slot.active))
self.logger.debug('\tengine.scraper.slot.queue: %s' % repr(self.crawler.engine.scraper.slot.queue))
self.logger.debug('engine.downloader.active: %s' % repr(self.crawler.engine.downloader.active))
self.logger.debug('engine.slot.start_requests: %s' % repr(self.crawler.engine.slot.start_requests))
self.logger.debug('engine.slot.scheduler.has_pending_requests: %s' % repr(self.crawler.engine.slot.scheduler.has_pending_requests()))
```

Detect the spider_idle signal and add requests

(Scrapy: How to manually insert a request from a spider_idle event callback?)

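```python
import scrapy
from scrapy import signals


class FooSpider(scrapy.Spider):  # BaseSpider was an old, deprecated alias of scrapy.Spider
    name = 'foo'  # every spider needs a name
    yet = False

    @classmethod
    def from_crawler(cls, crawler, *args, **kwargs):
        from_crawler = super(FooSpider, cls).from_crawler
        spider = from_crawler(crawler, *args, **kwargs)
        crawler.signals.connect(spider.idle, signal=signals.spider_idle)
        return spider

    def idle(self):
        if not self.yet:
            # create_request() is your own method that builds the extra Request;
            # older signature: Scrapy 2.6+ deprecates the second (spider) argument
            self.crawler.engine.crawl(self.create_request(), self)
            self.yet = True
```

Notes on selected settings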

- HTTPERROR_ALLOW_ALL

  Default: False

| HTTPERROR_ALLOW_ALL | non-2xx response | timeout |
|---|---|---|
| True | callback | errback |
| False | errback | errback |

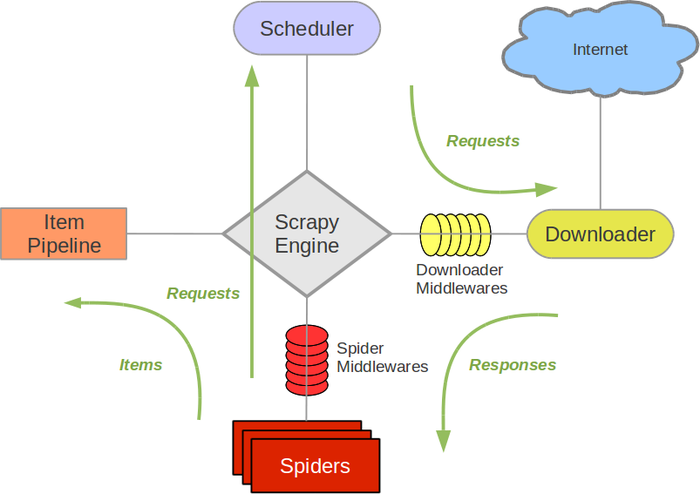

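Architecture diagram

walker: the new diagram appears to be only a refinement of the old one, with no substantive differences.

This article is from walker snapshot.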
