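Preface

While building microservices on k8s recently, I found that I had to hand-edit the pod name, pod image, svc name, ingress tls and so on in every YAML file, which is tedious. Helm changes that: it is the package manager for k8s, much like apt-get on Ubuntu or yum on CentOS, and it makes installing packages straightforward. This post walks through installing RabbitMQ with Helm.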

Preparation

- Install k8s

I use Alibaba Cloud's ACK k8s service.

- Install the k8s client: kubectl

kubectl installation guide

- Install the helm client

Install helm

Configure the helm repo sources

I added three repos: stable, bitnami and ali.

helm repo add stable https://charts.helm.sh/stable
helm repo add bitnami https://charts.bitnami.com/bitnami
helm repo add ali https://apphub.aliyuncs.com/stable

List the repos that are now configured:

$ helm repo list
NAME     URL
stable   https://charts.helm.sh/stable
bitnami  https://charts.bitnami.com/bitnami
ali      https://apphub.aliyuncs.com/stable/
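The three `helm repo add` calls can be wrapped in a small function so the setup is repeatable on a new machine. This is only a sketch: `add_repos` is a hypothetical helper name, and the repo names and URLs are the ones listed above.

```shell
#!/usr/bin/env bash
# Sketch: register the chart repos used in this post and refresh the index.
# add_repos is a hypothetical helper name, not part of helm.
add_repos() {
  # Each entry is "name=url"; the URLs are the ones listed in this post.
  local entry
  for entry in \
    "stable=https://charts.helm.sh/stable" \
    "bitnami=https://charts.bitnami.com/bitnami" \
    "ali=https://apphub.aliyuncs.com/stable"; do
    # ${entry%%=*} is the name, ${entry#*=} is the URL.
    helm repo add "${entry%%=*}" "${entry#*=}" || return 1
  done
  # Refresh the local chart index so `helm search repo` sees current versions.
  helm repo update
}

# Usage (needs the helm client installed):
#   add_repos && helm repo list
```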

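Ways to install RabbitMQ

There are many ways to install RabbitMQ. A few common ones:

- Install RabbitMQ on CentOS 7/8 (guide for Alibaba Cloud ECS CentOS)

- Install RabbitMQ on k8s (from the official documentation)

- Install RabbitMQ with helm (from the community)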

Before installing

First, check whether helm has a chart for RabbitMQ:

$ helm search repo rabbitmq
NAME                                 CHART VERSION  APP VERSION  DESCRIPTION
ali/prometheus-rabbitmq-exporter     0.5.5          v0.29.0      Rabbitmq metrics exporter for prometheus
ali/rabbitmq                         6.18.2         3.8.2        DEPRECATED Open source message broker software ...
ali/rabbitmq-ha                      1.47.0         3.8.7        Highly available RabbitMQ cluster, the open sou...
bitnami/rabbitmq                     8.16.1         3.8.18       Open source message broker software that implem...
stable/prometheus-rabbitmq-exporter  0.5.6          v0.29.0      DEPRECATED Rabbitmq metrics exporter for promet...
stable/rabbitmq                      6.18.2         3.8.2        DEPRECATED Open source message broker software ...
stable/rabbitmq-ha                   1.47.1         3.8.7        DEPRECATED - Highly available RabbitMQ cluster,...

Different repos ship different versions of the RabbitMQ chart. We use bitnami/rabbitmq, chart version 8.16.1, app version 3.8.18.

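Next, download the bitnami/rabbitmq chart. The download is a .tgz archive, so extract it:

helm pull bitnami/rabbitmq
tar zxf rabbitmq-8.16.1.tgz

$ cd rabbitmq && ls -ls
total 168
 8 -rwxr-xr-x   1 zhangwei  staff    435  1  1  1970 Chart.yaml
72 -rwxr-xr-x   1 zhangwei  staff  34706  1  1  1970 README.md
 0 drwxr-xr-x   5 zhangwei  staff    160  6 30 14:43 ci
 0 drwxr-xr-x  19 zhangwei  staff    608  6 30 14:43 templates
40 -rwxr-xr-x   1 zhangwei  staff  19401  1  1  1970 values-production.yaml
 8 -rwxr-xr-x   1 zhangwei  staff   2854  1  1  1970 values.schema.json
40 -rwxr-xr-x   1 zhangwei  staff  18986  1  1  1970 values.yaml

Then look at the parameters that values.yaml exposes. The same content can be printed with:

helm show values bitnami/rabbitmq

Note that the listing below carries the image tag 3.8.2-debian-10-r30 and a top-level rabbitmq: section, while the final values.yaml later in this post sets auth.password. The listing therefore appears to come from an older chart than 8.16.1, so run the command above against the version you actually install to see its keys.

values.yaml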

## Global Docker image parameters

## Please, note that this will override the image parameters, including dependencies, configured to use the global value

## Current available global Docker image parameters: imageRegistry and imagePullSecrets

##

# global:

# imageRegistry: myRegistryName

# imagePullSecrets:

# - myRegistryKeySecretName

# storageClass: myStorageClass

## Bitnami RabbitMQ image version

## ref: https://hub.docker.com/r/bitnami/rabbitmq/tags/

##

image:

registry: docker.io

repository: bitnami/rabbitmq

tag: 3.8.2-debian-10-r30

## set to true if you would like to see extra information on logs

## it turns BASH and NAMI debugging in minideb

## ref: https://github.com/bitnami/minideb-extras/#turn-on-bash-debugging

debug: false

## Specify a imagePullPolicy

## Defaults to 'Always' if image tag is 'latest', else set to 'IfNotPresent'

## ref: http://kubernetes.io/docs/user-guide/images/#pre-pulling-images

##

pullPolicy: IfNotPresent

## Optionally specify an array of imagePullSecrets.

## Secrets must be manually created in the namespace.

## ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/

##

# pullSecrets:

# - myRegistryKeySecretName

## String to partially override rabbitmq.fullname template (will maintain the release name)

##

# nameOverride:

## String to fully override rabbitmq.fullname template

##

# fullnameOverride:

## Use an alternate scheduler, e.g. "stork".

## ref: https://kubernetes.io/docs/tasks/administer-cluster/configure-multiple-schedulers/

##

# schedulerName:

## does your cluster have rbac enabled? assume yes by default

rbacEnabled: true

## RabbitMQ should be initialized one by one when building cluster for the first time.

## Therefore, the default value of podManagementPolicy is 'OrderedReady'

## Once the RabbitMQ participates in the cluster, it waits for a response from another

## RabbitMQ in the same cluster at reboot, except the last RabbitMQ of the same cluster.

## If the cluster exits gracefully, you do not need to change the podManagementPolicy

## because the first RabbitMQ of the statefulset always will be last of the cluster.

## However if the last RabbitMQ of the cluster is not the first RabbitMQ due to a failure,

## you must change podManagementPolicy to 'Parallel'.

## ref : https://www.rabbitmq.com/clustering.html#restarting

##

podManagementPolicy: OrderedReady

## section of specific values for rabbitmq

rabbitmq:

## RabbitMQ application username

## ref: https://github.com/bitnami/bitnami-docker-rabbitmq#environment-variables

##

username: user

## RabbitMQ application password

## ref: https://github.com/bitnami/bitnami-docker-rabbitmq#environment-variables

##

# password:

# existingPasswordSecret: name-of-existing-secret

## Erlang cookie to determine whether different nodes are allowed to communicate with each other

## ref: https://github.com/bitnami/bitnami-docker-rabbitmq#environment-variables

##

# erlangCookie:

# existingErlangSecret: name-of-existing-secret

## Node name to cluster with. e.g.: `clusternode@hostname`

## ref: https://github.com/bitnami/bitnami-docker-rabbitmq#environment-variables

##

# rabbitmqClusterNodeName:

## Value for the RABBITMQ_LOGS environment variable

## ref: https://www.rabbitmq.com/logging.html#log-file-location

##

logs: '-'

## RabbitMQ Max File Descriptors

## ref: https://github.com/bitnami/bitnami-docker-rabbitmq#environment-variables

## ref: https://www.rabbitmq.com/install-debian.html#kernel-resource-limits

##

setUlimitNofiles: true

ulimitNofiles: '65536'

## RabbitMQ maximum available scheduler threads and online scheduler threads

## ref: https://hamidreza-s.github.io/erlang/scheduling/real-time/preemptive/migration/2016/02/09/erlang-scheduler-details.html#scheduler-threads

##

maxAvailableSchedulers: 2

onlineSchedulers: 1

## Plugins to enable

plugins: "rabbitmq_management rabbitmq_peer_discovery_k8s"

## Extra plugins to enable

## Use this instead of `plugins` to add new plugins

extraPlugins: "rabbitmq_auth_backend_ldap"

## Clustering settings

clustering:

address_type: hostname

k8s_domain: cluster.local

## Rebalance master for queues in cluster when new replica is created

## ref: https://www.rabbitmq.com/rabbitmq-queues.8.html#rebalance

rebalance: false

loadDefinition:

enabled: false

secretName: load-definition

## environment variables to configure rabbitmq

## ref: https://www.rabbitmq.com/configure.html#customise-environment

env: {}

## Configuration file content: required cluster configuration

## Do not override unless you know what you are doing. To add more configuration, use `extraConfiguration` of `advancedConfiguration` instead

configuration: |-

## Clustering

cluster_formation.peer_discovery_backend = rabbit_peer_discovery_k8s

cluster_formation.k8s.host = kubernetes.default.svc.cluster.local

cluster_formation.node_cleanup.interval = 10

cluster_formation.node_cleanup.only_log_warning = true

cluster_partition_handling = autoheal

# queue master locator

queue_master_locator=min-masters

# enable guest user

loopback_users.guest = false

## Configuration file content: extra configuration

## Use this instead of `configuration` to add more configuration

extraConfiguration: |-

#disk_free_limit.absolute = 50MB

#management.load_definitions = /app/load_definition.json

## Configuration file content: advanced configuration

## Use this as additional configuraton in classic config format (Erlang term configuration format)

##

## If you set LDAP with TLS/SSL enabled and you are using self-signed certificates, uncomment these lines.

## advancedConfiguration: |-

## [{

## rabbitmq_auth_backend_ldap,

## [{

## ssl_options,

## [{

## verify, verify_none

## }, {

## fail_if_no_peer_cert,

## false

## }]

## ]}

## }].

##

advancedConfiguration: |-

## Enable encryption to rabbitmq

## ref: https://www.rabbitmq.com/ssl.html

##

tls:

enabled: false

failIfNoPeerCert: true

sslOptionsVerify: verify_peer

caCertificate: |-

serverCertificate: |-

serverKey: |-

# existingSecret: name-of-existing-secret-to-rabbitmq

## LDAP configuration

##

ldap:

enabled: false

server: ""

port: "389"

user_dn_pattern: cn=${username},dc=example,dc=org

tls:

# If you enabled TLS/SSL you can set advaced options using the advancedConfiguration parameter.

enabled: false

## Kubernetes service type

service:

type: ClusterIP

## Node port

## ref: https://github.com/bitnami/bitnami-docker-rabbitmq#environment-variables

##

# nodePort: 30672

## Set the LoadBalancerIP

##

# loadBalancerIP:

## Node port Tls

##

# nodeTlsPort: 30671

## Amqp port

## ref: https://github.com/bitnami/bitnami-docker-rabbitmq#environment-variables

##

port: 5672

## Amqp Tls port

##

tlsPort: 5671

## Dist port

## ref: https://github.com/bitnami/bitnami-docker-rabbitmq#environment-variables

##

distPort: 25672

## RabbitMQ Manager port

## ref: https://github.com/bitnami/bitnami-docker-rabbitmq#environment-variables

##

managerPort: 15672

## Service annotations

annotations: {}

# service.beta.kubernetes.io/aws-load-balancer-internal: 0.0.0.0/0

## Load Balancer sources

## https://kubernetes.io/docs/tasks/access-application-cluster/configure-cloud-provider-firewall/#restrict-access-for-loadbalancer-service

##

# loadBalancerSourceRanges:

# - 10.10.10.0/24

## Extra ports to expose

# extraPorts:

## Extra ports to be included in container spec, primarily informational

# extraContainerPorts:

# Additional pod labels to apply

podLabels: {}

## Pod Security Context

## ref: https://kubernetes.io/docs/tasks/configure-pod-container/security-context/

##

securityContext:

enabled: true

fsGroup: 1001

runAsUser: 1001

extra: {}

persistence:

## this enables PVC templates that will create one per pod

enabled: true

## rabbitmq data Persistent Volume Storage Class

## If defined, storageClassName: <storageClass>

## If set to "-", storageClassName: "", which disables dynamic provisioning

## If undefined (the default) or set to null, no storageClassName spec is

## set, choosing the default provisioner. (gp2 on AWS, standard on

## GKE, AWS & OpenStack)

##

# storageClass: "-"

accessMode: ReadWriteOnce

## Existing PersistentVolumeClaims

## The value is evaluated as a template

## So, for example, the name can depend on .Release or .Chart

# existingClaim: ""

# If you change this value, you might have to adjust `rabbitmq.diskFreeLimit` as well.

size: 8Gi

# persistence directory, maps to the rabbitmq data directory

path: /opt/bitnami/rabbitmq/var/lib/rabbitmq

## Configure resource requests and limits

## ref: http://kubernetes.io/docs/user-guide/compute-resources/

##

resources: {}

networkPolicy:

## Enable creation of NetworkPolicy resources. Only Ingress traffic is filtered for now.

## ref: https://kubernetes.io/docs/concepts/services-networking/network-policies/

##

enabled: false

## The Policy model to apply. When set to false, only pods with the correct

## client label will have network access to the ports RabbitMQ is listening

## on. When true, RabbitMQ will accept connections from any source

## (with the correct destination port).

##

allowExternal: true

## Additional NetworkPolicy Ingress "from" rules to set. Note that all rules are OR-ed.

##

# additionalRules:

# - matchLabels:

# - role: frontend

# - matchExpressions:

# - key: role

# operator: In

# values:

# - frontend

## Replica count, set to 1 to provide a default available cluster

replicas: 1

## Pod priority

## https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/

# priorityClassName: ""

## updateStrategy for RabbitMQ statefulset

## ref: https://kubernetes.io/docs/concepts/workloads/controllers/statefulset/#update-strategies

updateStrategy:

type: RollingUpdate

## Node labels and tolerations for pod assignment

## ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#nodeselector

## ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#taints-and-tolerations-beta-feature

nodeSelector: {}

tolerations: []

affinity: {}

podDisruptionBudget: {}

# maxUnavailable: 1

# minAvailable: 1

## annotations for rabbitmq pods

podAnnotations: {}

## Configure the ingress resource that allows you to access the

## Wordpress installation. Set up the URL

## ref: http://kubernetes.io/docs/user-guide/ingress/

##

ingress:

## Set to true to enable ingress record generation

enabled: false

## The list of hostnames to be covered with this ingress record.

## Most likely this will be just one host, but in the event more hosts are needed, this is an array

## hostName: foo.bar.com

path: /

## Set this to true in order to enable TLS on the ingress record

## A side effect of this will be that the backend wordpress service will be connected at port 443

tls: false

## If TLS is set to true, you must declare what secret will store the key/certificate for TLS

tlsSecret: myTlsSecret

## Ingress annotations done as key:value pairs

## If you're using kube-lego, you will want to add:

## kubernetes.io/tls-acme: true

##

## For a full list of possible ingress annotations, please see

## ref: https://github.com/kubernetes/ingress-nginx/blob/master/docs/user-guide/nginx-configuration/annotations.md

##

## If tls is set to true, annotation ingress.kubernetes.io/secure-backends: "true" will automatically be set

annotations: {}

# kubernetes.io/ingress.class: nginx

# kubernetes.io/tls-acme: true

## The following settings are to configure the frequency of the lifeness and readiness probes

livenessProbe:

enabled: true

initialDelaySeconds: 120

timeoutSeconds: 20

periodSeconds: 30

failureThreshold: 6

successThreshold: 1

readinessProbe:

enabled: true

initialDelaySeconds: 10

timeoutSeconds: 20

periodSeconds: 30

failureThreshold: 3

successThreshold: 1

metrics:

enabled: false

image:

registry: docker.io

repository: bitnami/rabbitmq-exporter

tag: 0.29.0-debian-10-r28

pullPolicy: IfNotPresent

## Optionally specify an array of imagePullSecrets.

## Secrets must be manually created in the namespace.

## ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/

##

# pullSecrets:

# - myRegistryKeySecretName

## environment variables to configure rabbitmq_exporter

## ref: https://github.com/kbudde/rabbitmq_exporter#configuration

env: {}

## Metrics exporter port

port: 9419

## RabbitMQ address to connect to (from the same Pod, usually the local loopback address).

## If your Kubernetes cluster does not support IPv6, you can change to `127.0.0.1` in order to force IPv4.

## ref: https://kubernetes.io/docs/concepts/workloads/pods/pod-overview/#networking

rabbitmqAddress: localhost

## Comma-separated list of extended scraping capabilities supported by the target RabbitMQ server

## ref: https://github.com/kbudde/rabbitmq_exporter#extended-rabbitmq-capabilities

capabilities: "bert,no_sort"

resources: {}

annotations:

prometheus.io/scrape: "true"

prometheus.io/port: "9419"

livenessProbe:

enabled: true

initialDelaySeconds: 15

timeoutSeconds: 5

periodSeconds: 30

failureThreshold: 6

successThreshold: 1

readinessProbe:

enabled: true

initialDelaySeconds: 5

timeoutSeconds: 5

periodSeconds: 30

failureThreshold: 3

successThreshold: 1

## Prometheus Service Monitor

## ref: https://github.com/coreos/prometheus-operator

## https://github.com/coreos/prometheus-operator/blob/master/Documentation/api.md#endpoint

serviceMonitor:

## If the operator is installed in your cluster, set to true to create a Service Monitor Entry

enabled: false

## Specify the namespace in which the serviceMonitor resource will be created

# namespace: ""

## Specify the interval at which metrics should be scraped

interval: 30s

## Specify the timeout after which the scrape is ended

# scrapeTimeout: 30s

## Specify Metric Relabellings to add to the scrape endpoint

# relabellings:

## Specify honorLabels parameter to add the scrape endpoint

honorLabels: false

## Specify the release for ServiceMonitor. Sometimes it should be custom for prometheus operator to work

# release: ""

## Used to pass Labels that are used by the Prometheus installed in your cluster to select Service Monitors to work with

## ref: https://github.com/coreos/prometheus-operator/blob/master/Documentation/api.md#prometheusspec

additionalLabels: {}

## Custom PrometheusRule to be defined

## The value is evaluated as a template, so, for example, the value can depend on .Release or .Chart

## ref: https://github.com/coreos/prometheus-operator#customresourcedefinitions

prometheusRule:

enabled: false

additionalLabels: {}

namespace: ""

rules: []

## List of reules, used as template by Helm.

## These are just examples rules inspired from https://awesome-prometheus-alerts.grep.to/rules.html

## Please adapt them to your needs.

## Make sure to constraint the rules to the current rabbitmq service.

## Also make sure to escape what looks like helm template.

# - alert: RabbitmqDown

# expr: rabbitmq_up{service="{{ template "rabbitmq.fullname" . }}"} == 0

# for: 5m

# labels:

# severity: error

# annotations:

# summary: Rabbitmq down (instance {{ "{{ $labels.instance }}" }})

# description: RabbitMQ node down

# - alert: ClusterDown

# expr: |

# sum(rabbitmq_running{service="{{ template "rabbitmq.fullname" . }}"})

# < {{ .Values.replicas }}

# for: 5m

# labels:

# severity: error

# annotations:

# summary: Cluster down (instance {{ "{{ $labels.instance }}" }})

# description: |

# Less than {{ .Values.replicas }} nodes running in RabbitMQ cluster

# VALUE = {{ "{{ $value }}" }}

# - alert: ClusterPartition

# expr: rabbitmq_partitions{service="{{ template "rabbitmq.fullname" . }}"} > 0

# for: 5m

# labels:

# severity: error

# annotations:

# summary: Cluster partition (instance {{ "{{ $labels.instance }}" }})

# description: |

# Cluster partition

# VALUE = {{ "{{ $value }}" }}

# - alert: OutOfMemory

# expr: |

# rabbitmq_node_mem_used{service="{{ template "rabbitmq.fullname" . }}"}

# / rabbitmq_node_mem_limit{service="{{ template "rabbitmq.fullname" . }}"}

# * 100 > 90

# for: 5m

# labels:

# severity: warning

# annotations:

# summary: Out of memory (instance {{ "{{ $labels.instance }}" }})

# description: |

# Memory available for RabbmitMQ is low (< 10%)\n VALUE = {{ "{{ $value }}" }}

# LABELS: {{ "{{ $labels }}" }}

# - alert: TooManyConnections

# expr: rabbitmq_connectionsTotal{service="{{ template "rabbitmq.fullname" . }}"} > 1000

# for: 5m

# labels:

# severity: warning

# annotations:

# summary: Too many connections (instance {{ "{{ $labels.instance }}" }})

# description: |

# RabbitMQ instance has too many connections (> 1000)

# VALUE = {{ "{{ $value }}" }}\n LABELS: {{ "{{ $labels }}" }}

##

## Init containers parameters:

## volumePermissions: Change the owner of the persist volume mountpoint to RunAsUser:fsGroup

##

volumePermissions:

enabled: false

image:

registry: docker.io

repository: bitnami/minideb

tag: buster

pullPolicy: Always

## Optionally specify an array of imagePullSecrets.

## Secrets must be manually created in the namespace.

## ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/

##

# pullSecrets:

# - myRegistryKeySecretName

resources: {}

## forceBoot: executes 'rabbitmqctl force_boot' to force boot cluster shut down unexpectedly in an

## unknown order.

## ref: https://www.rabbitmq.com/rabbitmqctl.8.html#force_boot

##

forceBoot:

enabled: false

## Optionally specify extra secrets to be created by the chart.

## This can be useful when combined with load_definitions to automatically create the secret containing the definitions to be loaded.

##

extraSecrets: {}

# load-definition:

# load_definition.json: |

# {

# ...

# }

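The values.yaml file holds the configuration that the helm chart exposes, and you can change it as needed. Since we are on Alibaba Cloud, we use alicloud-disk-ssd for storage. Note that alicloud-disk-ssd requires a disk of at least 20Gi:

storageClass: "alicloud-disk-ssd"
size: 20Gi

Next, create the rabbit namespace:

kubectl create namespace rabbit

Installation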

Because the development environment is accessed via ip:port, we need to configure the service.

The final, complete values.yaml is as follows:

values.yaml

auth:
  password: xxx
service:
  type: NodePort
persistence:
  # Cloud disk
  # storageClass: "alicloud-disk-ssd"
  # size: 20Gi
  # NAS
  storageClass: alibabacloud-cnfs-nas
  size: 8Gi

Now install RabbitMQ with the following command:

# Create the rabbitmq cluster
helm install -f values.yaml test-rabbitmq bitnami/rabbitmq --namespace rabbit

Here is the output from the installation:

Output begins

$ helm install -f values.yaml test-rabbitmq bitnami/rabbitmq --namespace rabbit

NAME: test-rabbitmq

LAST DEPLOYED: Thu Jul 8 10:07:50 2021

NAMESPACE: rabbit

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

** Please be patient while the chart is being deployed **

Credentials:

echo "Username : user"

echo "Password : $(kubectl get secret --namespace rabbit test-rabbitmq -o jsonpath="{.data.rabbitmq-password}" | base64 --decode)"

echo "ErLang Cookie : $(kubectl get secret --namespace rabbit test-rabbitmq -o jsonpath="{.data.rabbitmq-erlang-cookie}" | base64 --decode)"

Note that the credentials are saved in persistent volume claims and will not be changed upon upgrade or reinstallation unless the persistent volume claim has been deleted. If this is not the first installation of this chart, the credentials may not be valid.

This is applicable when no passwords are set and therefore the random password is autogenerated. In case of using a fixed password, you should specify it when upgrading.

More information about the credentials may be found at https://docs.bitnami.com/general/how-to/troubleshoot-helm-chart-issues/#credential-errors-while-upgrading-chart-releases.

RabbitMQ can be accessed within the cluster on port at test-rabbitmq.rabbit.svc.

To access for outside the cluster, perform the following steps:

Obtain the NodePort IP and ports:

export NODE_IP=$(kubectl get nodes --namespace rabbit -o jsonpath="{.items[0].status.addresses[0].address}")

export NODE_PORT_AMQP=$(kubectl get --namespace rabbit -o jsonpath="{.spec.ports[1].nodePort}" services test-rabbitmq)

export NODE_PORT_STATS=$(kubectl get --namespace rabbit -o jsonpath="{.spec.ports[3].nodePort}" services test-rabbitmq)

To Access the RabbitMQ AMQP port:

echo "URL : amqp://$NODE_IP:$NODE_PORT_AMQP/"

To Access the RabbitMQ Management interface:

echo "URL : http://$NODE_IP:$NODE_PORT_STATS/"

Output ends

Check whether all the RabbitMQ resources in the rabbit namespace were created:

$ kubectl get all -n rabbit
NAME                  READY   STATUS    RESTARTS   AGE
pod/test-rabbitmq-0   1/1     Running   0          3h56m

NAME                             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                                 AGE
service/test-rabbitmq            ClusterIP   172.21.15.81   <none>        4369/TCP,5672/TCP,25672/TCP,15672/TCP   3h56m
service/test-rabbitmq-headless   ClusterIP   None           <none>        4369/TCP,5672/TCP,25672/TCP,15672/TCP   3h56m

NAME                             READY   AGE
statefulset.apps/test-rabbitmq   1/1     3h56m

After a while the pod, svc, pvc, pv and statefulset are all created. How do we reach the RabbitMQ management UI? The install output gives us three values:

- NODE_IP

Command: kubectl get nodes --namespace rabbit -o jsonpath="{.items[0].status.addresses[0].address}"

- NODE_PORT_AMQP

Command: kubectl get --namespace rabbit -o jsonpath="{.spec.ports[1].nodePort}" services test-rabbitmq

- NODE_PORT_STATS

Command: kubectl get --namespace rabbit -o jsonpath="{.spec.ports[3].nodePort}" services test-rabbitmq

NODE_IP is the real IP of a k8s node, for example 192.168.0.1. Check whether it is reachable from the public network; if it is not, you can request an Alibaba Cloud EIP and bind it to the node. NODE_PORT_AMQP is the NodePort mapped to the cluster's port 5672, for example 31020, and NODE_PORT_STATS is the NodePort mapped to 15672, for example 31010.
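The commands above pick ports by list position (`ports[1]`, `ports[3]`), which only works if the service lists its ports in that order. A minimal sketch that looks up the NodePort by port number instead: `node_port_for` is a hypothetical helper that parses the PORT(S) column printed by `kubectl get svc`, and the sample value is the one that appears in the Problems section of this post.

```shell
#!/usr/bin/env bash
# Sketch: find the NodePort mapped to a given service port.
# node_port_for is a hypothetical helper; it parses the PORT(S) column that
# `kubectl get svc` prints for a NodePort service ("port:nodePort/PROTO,...").
node_port_for() {
  local ports="$1" want="$2"
  # Split on commas, then on ':' and '/', and print the nodePort whose
  # service port matches.
  printf '%s\n' "$ports" | tr ',' '\n' |
    awk -F'[:/]' -v p="$want" '$1 == p { print $2 }'
}

# The same lookup against a live cluster can use a jsonpath filter on the
# port number instead of a list index (names are the ones used in this post):
#   kubectl get svc test-rabbitmq -n rabbit \
#     -o jsonpath='{.spec.ports[?(@.port==5672)].nodePort}'

# Sample PORT(S) value in the format kubectl prints for a NodePort service.
ports='5672:31972/TCP,4369:31459/TCP,25672:31475/TCP,15672:31696/TCP'
echo "AMQP NodePort:       $(node_port_for "$ports" 5672)"
echo "Management NodePort: $(node_port_for "$ports" 15672)"
```

Matching on the port number keeps the lookup correct even when the chart changes the order of the ports.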

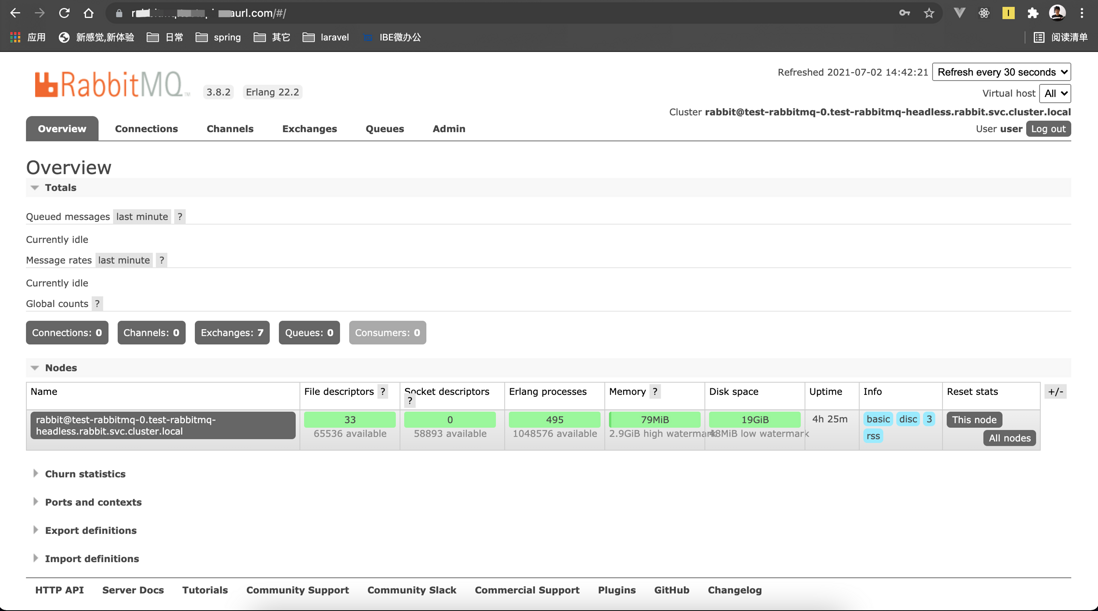

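With these values, enter http://192.168.0.1:31010 in a browser to open the RabbitMQ management UI.

Spring Boot can then connect to RabbitMQ by IP address:

spring:
  rabbitmq:
    host: 192.168.0.1
    # From outside the cluster, use the NodePort mapped to 5672 (31020 in the example above)
    port: 31020
    username: user
    password:

Installing in a local environment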

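For local development we access RabbitMQ through a domain name, so enable ingress and set the hostname:

ingress:
  enabled: true
  annotations:
    kubernetes.io/ingress.class: nginx
  hostName: rabbitmq.demo.com

Finally, to serve it over https, enable tls:

  tls: true
  tlsSecret: tls-secret-name

Note that I use the cert-manager.io/cluster-issuer annotation (the issuer already exists in my k8s cluster), so the TLS certificate is issued automatically, which is very convenient. If you are interested, see "Using cert-manager to request free HTTPS certificates".

  annotations:
    cert-manager.io/cluster-issuer: your-cert-manager-name

The final, complete values.yaml is as follows: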

values.yaml

ingress:
  enabled: true
  annotations:
    kubernetes.io/ingress.class: nginx
    cert-manager.io/cluster-issuer: your-cert-manager-name
    nginx.ingress.kubernetes.io/force-ssl-redirect: 'true'
  hostName: rabbitmq.demo.com
  tls: true
  tlsSecret: tls-secret-name
persistence:
  storageClass: "alicloud-disk-ssd"
  size: 20Gi

Now install RabbitMQ with the following command:

# Create the rabbitmq cluster
helm install -f values.yaml test-rabbitmq bitnami/rabbitmq --namespace rabbit

Here is the output from the installation:

Output begins

NAME: test-rabbitmq

LAST DEPLOYED: Thu Jul 8 19:06:58 2021

NAMESPACE: rabbit

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

** Please be patient while the chart is being deployed **

Credentials:

echo "Username : user"

echo "Password : $(kubectl get secret --namespace rabbit test-rabbitmq -o jsonpath="{.data.rabbitmq-password}" | base64 --decode)"

echo "ErLang Cookie : $(kubectl get secret --namespace rabbit test-rabbitmq -o jsonpath="{.data.rabbitmq-erlang-cookie}" | base64 --decode)"

Note that the credentials are saved in persistent volume claims and will not be changed upon upgrade or reinstallation unless the persistent volume claim has been deleted. If this is not the first installation of this chart, the credentials may not be valid.

This is applicable when no passwords are set and therefore the random password is autogenerated. In case of using a fixed password, you should specify it when upgrading.

More information about the credentials may be found at https://docs.bitnami.com/general/how-to/troubleshoot-helm-chart-issues/#credential-errors-while-upgrading-chart-releases.

RabbitMQ can be accessed within the cluster on port at test-rabbitmq.rabbit.svc.

To access for outside the cluster, perform the following steps:

To Access the RabbitMQ AMQP port:

1. Create a port-forward to the AMQP port:

kubectl port-forward --namespace rabbit svc/test-rabbitmq 5672:5672 &

echo "URL : amqp://127.0.0.1:5672/"

2. Access RabbitMQ using using the obtained URL.

To Access the RabbitMQ Management interface:

1. Get the RabbitMQ Management URL and associate its hostname to your cluster external IP:

export CLUSTER_IP=$(minikube ip) # On Minikube. Use: `kubectl cluster-info` on others K8s clusters

echo "RabbitMQ Management: http://rabbitmq.demo.com/"

echo "$CLUSTER_IP rabbitmq.demo.com" | sudo tee -a /etc/hosts

2. Open a browser and access RabbitMQ Management using the obtained URL.

Output ends

Check whether all the RabbitMQ resources in the rabbit namespace were created:

$ kubectl get all -n rabbit
NAME                  READY   STATUS    RESTARTS   AGE
pod/test-rabbitmq-0   1/1     Running   0          3h56m

NAME                             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                                 AGE
service/test-rabbitmq            ClusterIP   172.21.15.81   <none>        4369/TCP,5672/TCP,25672/TCP,15672/TCP   3h56m
service/test-rabbitmq-headless   ClusterIP   None           <none>        4369/TCP,5672/TCP,25672/TCP,15672/TCP   3h56m

NAME                             READY   AGE
statefulset.apps/test-rabbitmq   1/1     3h56m

After a while the pod, svc, ingress, pvc, pv and statefulset are all created. How do we reach the RabbitMQ management UI? The install output shows the address http://rabbitmq.demo.com/, so entering http://rabbitmq.demo.com/ in a browser opens it.

Spring Boot can then reach RabbitMQ through the name resolved by the k8s internal DNS (see the referenced article for how the name is resolved):

spring:
  rabbitmq:
    host: test-rabbitmq-headless.rabbit.svc.cluster.local
    port: 5672
    username: user
    password:

Separating environments

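RabbitMQ is convenient to use, but development distinguishes between environments: development (dev), testing (test), pre-production (uat) and production (pro). How do we separate RabbitMQ by environment? There are two options:

1. Install a separate RabbitMQ for each of the four environments

2. Install RabbitMQ once and separate the environments with virtual hosts

Option 1 works if you have plenty of server resources. If you want to use as few resources as possible, use option 2, as follows: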
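One way to carry out option 2 from the command line is to run rabbitmqctl inside the pod. The sketch below only prints the commands so they can be reviewed first. `vhost_commands` is a hypothetical helper, the pod name test-rabbitmq-0 and namespace rabbit are assumed to match the install above, and granting `.*` for configure, write and read is an assumption that suits a dev setup rather than a recommendation.

```shell
#!/usr/bin/env bash
# Sketch: print the commands that create one virtual host per environment
# and grant a user full permissions on it.
# vhost_commands is a hypothetical helper; the pod name and namespace are
# assumptions taken from the install shown in this post.
vhost_commands() {
  local user="$1"; shift
  local env
  for env in "$@"; do
    # rabbitmqctl add_vhost <name> creates the virtual host.
    echo "kubectl exec -n rabbit test-rabbitmq-0 -- rabbitmqctl add_vhost $env"
    # set_permissions -p <vhost> <user> <configure> <write> <read>
    echo "kubectl exec -n rabbit test-rabbitmq-0 -- rabbitmqctl set_permissions -p $env $user '.*' '.*' '.*'"
  done
}

# Dry run: review the output, then pipe it to `sh` to apply it.
vhost_commands user dev test uat pro
```

The virtual hosts can also be created in the management UI; the command-line form is easier to repeat across clusters.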

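That completes separating RabbitMQ by environment. Don't forget to configure the Java backend as well:

# Spring
spring:
  # rabbitmq
  rabbitmq:
    host: 192.168.6.1
    # RabbitMQ port
    port: 5672
    # RabbitMQ username
    username: xxx
    # RabbitMQ password
    password: xxx
    # Virtual host, used to separate the queues of different environments
    virtual-host: dev
    # Enable the retry mechanism
    listener:
      retry:
        enabled: true
        # Number of retries, 3 by default
        max-attempts: 3

Problems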

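1. Accessing RabbitMQ returns 503

If your URL uses a path, such as demo.com/rabbitmq, you need the configuration below for it to work. That said, I recommend using a first-, second- or third-level domain as the hostName rather than a path such as path: /rabbitmq (see the referenced article):

rabbitmq:
  extraConfiguration: |-
    management.path_prefix = /rabbitmq/
ingress:
  ...
  hostName: demo.com
  path: /rabbitmq/
  ...

2. kubectl describe svc your-service-name -n rabbit shows that the service endpoint is empty

- Cause: the PVC was not deleted (see the referenced article)

- Fix:

kubectl get pvc
kubectl delete pvc <name>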

3. running "VolumeBinding" filter plugin for pod "test-rabbitmq-0": pod has unbound immediate PersistentVolumeClaims

- Cause: storageClass must be set, and Alibaba Cloud disks require at least 20Gi

- Fix:

persistence:
  storageClass: "alicloud-disk-ssd"
  size: 20Gi

4. PersistentVolumeClaim "data-test-rabbitmq-0" is invalid: spec: Forbidden: is immutable after creation except resources.requests for bound claims

- Cause: a PVC's spec cannot be modified in place after it has been created

- Fix: delete the PVC and create it again

5. Warning ProvisioningFailed 6s (x4 over 14m) diskplugin.csi.alibabacloud.com_iZbp1d2cbgi4jt9oty4m9iZ_3408e051-98e8-4295-8a21-7f1af0807958 (combined from similar events): failed to provision volume with StorageClass "alicloud-disk-ssd": rpc error: code = Internal desc = SDK.ServerError

ErrorCode: InvalidAccountStatus.NotEnoughBalance

- Cause: the Alibaba Cloud account balance must be at least 100 yuan to create an SSD cloud disk

- Fix: top up the Alibaba Cloud account

6. Logging in to RabbitMQ returns 401

- Cause: wrong password

- Fix: run the command below to get the password

echo "Password : $(kubectl get secret --namespace rabbit test-rabbitmq -o jsonpath="{.data.rabbitmq-password}" | base64 --decode)"
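The same lookup works for any field of the secret, such as the Erlang cookie. A minimal sketch: `secret_field` is a hypothetical helper, and the secret and field names in the usage comment are the ones printed by the chart notes above.

```shell
#!/usr/bin/env bash
# Sketch: read one field of a Kubernetes Secret and decode it.
# secret_field is a hypothetical helper; Secret values are stored
# base64-encoded, so the jsonpath output has to be decoded.
secret_field() {
  local namespace="$1" secret="$2" field="$3"
  kubectl get secret --namespace "$namespace" "$secret" \
    -o jsonpath="{.data.$field}" | base64 --decode
}

# Usage (names match the install in this post):
#   secret_field rabbit test-rabbitmq rabbitmq-password
#   secret_field rabbit test-rabbitmq rabbitmq-erlang-cookie
```

If the decoded password is still rejected, recall the chart notes shown earlier: credentials are kept in the persistent volume claim and are not changed by a reinstall unless the claim is deleted.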

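7. A Spring Cloud microservice times out connecting to port 5672

- Cause: spring.rabbitmq.host should not be the external ingress domain (rabbitmq.demo.com); it should be the address resolved by the cluster's internal DNS

- Fix: use the command below to get the internal address of test-rabbitmq, assign it to spring.rabbitmq.host and reconnect

# If the pod and the service are in different namespaces, the DNS query must include the service's namespace.
# In test-rabbitmq-headless.rabbit, the namespace is rabbit.
# If they share a namespace, kubectl exec -i -t dnsutils -- nslookup test-rabbitmq-headless is enough.
$ kubectl exec -i -t dnsutils -- nslookup test-rabbitmq-headless.rabbit
Server: 172.21.0.10
Address: 172.21.0.10#53

Name: test-rabbitmq-headless.rabbit.svc.cluster.local
Address: 172.20.0.124

application.yml

spring.rabbitmq.host=test-rabbitmq-headless.rabbit.svc.cluster.local

8. In the dev/test environment, the NodePort obtained for 5672 is wrong

- Cause: the command below returns the wrong port for 5672. ports[1] is the second entry in the service's port list, which in the output further down is 4369, not 5672

kubectl get --namespace rabbit -o jsonpath="{.spec.ports[1].nodePort}" services test-rabbitmq

- Fix: use the command below to look up the NodePort mapped to 5672, which here is 31972

$ kubectl get svc test-rabbitmq -n rabbit
NAME            TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)                                                         AGE
test-rabbitmq   NodePort   172.21.14.17   <none>        5672:31972/TCP,4369:31459/TCP,25672:31475/TCP,15672:31696/TCP   14m

Summary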

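1. kubectl needs the k8s connection configuration before it can be used. helm picks up the same connection information by default, from ~/.kube/config.

2. helm 3 removed Tiller and the helm init command, so helm 2 is not discussed here.

3. If an error occurs during or after installation, you can delete the release from the helm release list under Applications in the Alibaba Cloud ACK console.

4. There is no need to run rabbitmq-plugins enable rabbitmq_management, because the RabbitMQ installed by helm enables it by default.

5. For local debugging, you can use port forwarding:

To Access the RabbitMQ AMQP port:

kubectl port-forward --namespace rabbit svc/test-rabbitmq 5672:5672
echo "URL : amqp://127.0.0.1:5672/"

To Access the RabbitMQ Management interface:

kubectl port-forward --namespace rabbit svc/test-rabbitmq 15672:15672
echo "URL : http://127.0.0.1:15672/"

6. Port 5672 speaks only the AMQP protocol, so it cannot be exposed through ingress, which handles only http and https. That is why we set the service type to NodePort in the dev/test environment.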
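The two port-forward commands from item 5 can be combined, since kubectl port-forward accepts several port pairs in one call. A small sketch: `forward_rabbitmq` is a hypothetical helper, and its defaults are the namespace and service name used in this post.

```shell
#!/usr/bin/env bash
# Sketch: forward both the AMQP port and the management port in one call.
# forward_rabbitmq is a hypothetical helper; the defaults match this post.
forward_rabbitmq() {
  local namespace="${1:-rabbit}" service="${2:-test-rabbitmq}"
  # 5672 is AMQP, 15672 is the management UI; both are bound on 127.0.0.1.
  kubectl port-forward --namespace "$namespace" "svc/$service" \
    5672:5672 15672:15672
}

# Usage (runs until interrupted with Ctrl-C):
#   forward_rabbitmq
#   forward_rabbitmq rabbit test-rabbitmq
```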

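Update 2022-01-18

If you set service.type=NodePort, pods inside the cluster can still connect directly through the cluster DNS name, for example host: test-rabbitmq-headless.rabbit.svc.cluster.local, port: 5672. But what if you want to reach the management UI from outside the cluster?

Solution: the NodePort service exposes 15672 on a fixed port of the node, so we can use the node's IP together with that port. The node's security group must allow inbound traffic on it.

In the example below, 15672 maps to port 31961, which gives access to the management UI.

service/test-rabbitmq NodePort 172.21.10.248 <none> 5672:31469/TCP,4369:32745/TCP,25672:32057/TCP,15672:31961/TCP 183d

References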

Deploying a RabbitMQ cluster with Helm

Helm documentation

How does kubectl exec work?

Helm tutorial

Configuring RabbitMQ for separate development and test environments
