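I. Download the dataset

The data comes from the Kaggle Dogs vs. Cats dataset.

Baidu Netdisk download link:

Link: https://pan.baidu.com/s/177uL...

Extraction code: 9j40

II. Split the data

1. Create the following folders: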

train

- cats

- dogs

val

- cats

- dogs

test

- cats

- dogs
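The folder layout can also be created from a script instead of by hand. The following is a minimal sketch, assuming that `test` gets the same `cats` and `dogs` subfolders that the copy code in step 2 writes to; the helper name `make_split_dirs` is hypothetical, and the demo runs in a temporary directory rather than under `E:\mldata\dogvscat`.

```python
import os
import tempfile


def make_split_dirs(base_dir, splits=("train", "val", "test"), classes=("cats", "dogs")):
    """Create <base_dir>/<split>/<class> for every split and class; return the paths."""
    created = []
    for split in splits:
        for cls in classes:
            path = os.path.join(base_dir, split, cls)
            # exist_ok=True makes the call safe to repeat.
            os.makedirs(path, exist_ok=True)
            created.append(path)
    return created


if __name__ == "__main__":
    # Demo in a throwaway directory; pass the real dataset root instead when ready.
    with tempfile.TemporaryDirectory() as tmp:
        for p in make_split_dirs(tmp):
            print(os.path.relpath(p, tmp))
```

For the real dataset, the call would be `make_split_dirs(r'E:\mldata\dogvscat')`.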

2. Split the data with the following code:

    import os
    import shutil

    BASE_DIR = r'E:\mldata\dogvscat'
    # Folder holding the original Kaggle training images (cat.N.jpg / dog.N.jpg).
    SRC_DIR = os.path.join(BASE_DIR, 'data', 'train')

    # Image indices per split and per class: 10000 train, 1500 val, 1000 test.
    # The ranges are contiguous, so every index from 0 to 12499 is used once.
    SPLITS = {
        'train': range(10000),
        'val': range(10000, 11500),
        'test': range(11500, 12500),
    }


    def _copy_images(prefix, class_dir):
        """Copy '<prefix>.<i>.jpg' into <split>/<class_dir> for each split."""
        for split, indices in SPLITS.items():
            dest_dir = os.path.join(BASE_DIR, split, class_dir)
            os.makedirs(dest_dir, exist_ok=True)
            for i in indices:
                fname = '{}.{}.jpg'.format(prefix, i)
                shutil.copyfile(os.path.join(SRC_DIR, fname),
                                os.path.join(dest_dir, fname))


    def data_cats_processing():
        _copy_images('cat', 'cats')


    def data_dog_processing():
        _copy_images('dog', 'dogs')

III. Load the data in batches

Code:

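    # Assumes the Keras bundled with TensorFlow 2.x.
    from tensorflow.keras.preprocessing.image import ImageDataGenerator


    def datagen():
        # Use Keras' ImageDataGenerator to load the images in batches,
        # rescaling pixel values from [0, 255] to [0, 1].
        train_datagen = ImageDataGenerator(rescale=1 / 255.)
        test_datagen = ImageDataGenerator(rescale=1 / 255.)

        train_dir = r'E:\mldata\dogvscat\train'
        val_dir = r'E:\mldata\dogvscat\val'

        # Labels follow the alphabetical order of the subfolders: cats=0, dogs=1.
        train_generator = train_datagen.flow_from_directory(
            train_dir,
            target_size=(150, 150),
            batch_size=20,
            class_mode='binary'
        )
        val_generator = test_datagen.flow_from_directory(
            val_dir,
            target_size=(150, 150),
            batch_size=20,
            class_mode='binary'
        )
        return train_generator, val_generator

IV. Build the neural network model

Neural network: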

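    # Assumes the Keras bundled with TensorFlow 2.x.
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense
    from tensorflow.keras.optimizers import RMSprop


    def create_model2():
        model = Sequential()
        # Four Conv2D + MaxPooling2D blocks extract the image features.
        model.add(Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)))
        model.add(MaxPooling2D((2, 2)))
        model.add(Conv2D(64, (3, 3), activation='relu'))
        model.add(MaxPooling2D((2, 2)))
        model.add(Conv2D(128, (3, 3), activation='relu'))
        model.add(MaxPooling2D((2, 2)))
        model.add(Conv2D(128, (3, 3), activation='relu'))
        model.add(MaxPooling2D((2, 2)))
        # Classifier head: one hidden layer and a sigmoid output for cat vs. dog.
        model.add(Flatten())
        model.add(Dense(512, activation='relu'))
        model.add(Dense(1, activation='sigmoid'))
        model.compile(loss='binary_crossentropy',
                      optimizer=RMSprop(learning_rate=1e-4),
                      metrics=['acc'])
        return model

V. Train the model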

    import datetime

    from tensorflow.keras import callbacks

    # Load the data
    train_datagen, val_datagen = datagen()

    # Build the model
    model = create_model2()

    # Log directory used by TensorBoard
    log_dir = "logs/fit/" + datetime.datetime.now().strftime("%Y%m%d-%H%M%S")

    # TensorBoard callback
    tensorboard_callback = callbacks.TensorBoard(log_dir=log_dir, histogram_freq=1)

    # Start training. Model.fit accepts generators directly;
    # fit_generator is deprecated in TensorFlow 2.x.
    model.fit(train_datagen,
              steps_per_epoch=100,
              epochs=100,
              validation_data=val_datagen,
              validation_steps=50,
              callbacks=[tensorboard_callback])
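The step counts in the training call determine how much data each epoch actually sees. With a batch size of 20, `steps_per_epoch=100` draws 2000 training images per epoch and `validation_steps=50` evaluates on 1000 images. The sketch below works through that arithmetic, assuming the split from section II yields 20000 training and 3000 validation images (10000 and 1500 per class); the helper names are hypothetical.

```python
import math


def images_per_epoch(steps, batch_size):
    """Number of images drawn when running `steps` batches of `batch_size`."""
    return steps * batch_size


def steps_to_cover(num_images, batch_size):
    """Steps needed for one full pass over `num_images` (last batch may be partial)."""
    return math.ceil(num_images / batch_size)


if __name__ == "__main__":
    print(images_per_epoch(100, 20))   # training images seen per epoch
    print(images_per_epoch(50, 20))    # validation images evaluated per epoch
    print(steps_to_cover(20000, 20))   # steps for a full pass over the training set
    print(steps_to_cover(3000, 20))    # steps for a full pass over the validation set
```

Under these assumptions, an epoch in the logs below corresponds to roughly one tenth of the training set, so 100 epochs amount to about ten full passes.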

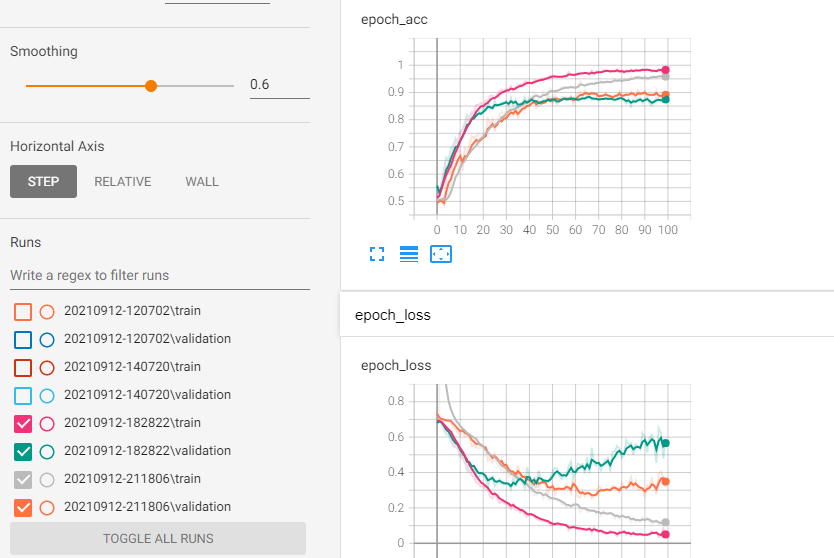

Training results:

Epoch 96/100

100/100 [==============================] - 82s 819ms/step - loss: 0.1315 - acc: 0.9507 - val_loss: 0.4925 - val_acc: 0.8200

Epoch 97/100

100/100 [==============================] - 87s 866ms/step - loss: 0.1234 - acc: 0.9537 - val_loss: 0.3646 - val_acc: 0.8706

Epoch 98/100

100/100 [==============================] - 87s 872ms/step - loss: 0.1209 - acc: 0.9542 - val_loss: 0.3609 - val_acc: 0.8619

Epoch 99/100

100/100 [==============================] - 77s 769ms/step - loss: 0.1225 - acc: 0.9521 - val_loss: 0.3553 - val_acc: 0.8731

Epoch 100/100

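100/100 [==============================] - 73s 731ms/step - loss: 0.1213 - acc: 0.9544 - val_loss: 0.4488 - val_acc: 0.8444

Analysis:

1. Training accuracy reaches about 0.95 and still has room to improve.

2. Training accuracy is about 0.95 while validation accuracy is only about 0.85, so the model overfits noticeably.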
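The gap between training and validation accuracy can be quantified from the log. The snippet below is a small sketch that averages the `acc` and `val_acc` values of epochs 96 to 100 copied from the output above; the function names are illustrative only.

```python
# acc and val_acc for epochs 96-100, copied from the training log above.
train_acc = [0.9507, 0.9537, 0.9542, 0.9521, 0.9544]
val_acc = [0.8200, 0.8706, 0.8619, 0.8731, 0.8444]


def mean(values):
    return sum(values) / len(values)


def generalization_gap(train, val):
    """Difference between mean training and mean validation accuracy."""
    return mean(train) - mean(val)


if __name__ == "__main__":
    print("mean train acc: {:.4f}".format(mean(train_acc)))
    print("mean val acc:   {:.4f}".format(mean(val_acc)))
    print("gap:            {:.4f}".format(generalization_gap(train_acc, val_acc)))
```

Averaging over several epochs is a deliberate choice here, because `val_acc` fluctuates between 0.82 and 0.87 from one epoch to the next.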

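Fixes to try (these target point 1, the training accuracy):

- Train a larger network

- Use the Adam optimizer

- Increase the learning rate

The modified model: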

    # Assumes the Keras bundled with TensorFlow 2.x.
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense
    from tensorflow.keras.optimizers import Adam


    def base_model1():
        model = Sequential()
        model.add(Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)))
        model.add(MaxPooling2D((2, 2)))
        model.add(Conv2D(64, (3, 3), activation='relu'))
        model.add(MaxPooling2D((2, 2)))
        # Newly added block
        model.add(Conv2D(64, (3, 3), activation='relu'))
        model.add(MaxPooling2D((2, 2)))
        model.add(Conv2D(128, (3, 3), activation='relu'))
        model.add(MaxPooling2D((2, 2)))
        model.add(Conv2D(128, (3, 3), activation='relu'))
        model.add(MaxPooling2D((2, 2)))
        model.add(Flatten())
        model.add(Dense(512, activation='relu'))
        model.add(Dense(1, activation='sigmoid'))
        # Adam with a larger learning rate (1e-3) replaces RMSprop (1e-4).
        model.compile(loss='binary_crossentropy',
                      optimizer=Adam(learning_rate=1e-3),
                      metrics=['acc'])
        return model
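Adding a fifth convolution block changes the size of the feature map that reaches `Flatten`. The sketch below traces that arithmetic, assuming the Keras defaults used in the model code (3x3 convolutions with `padding='valid'` and stride 1, 2x2 max pooling with stride 2); the helper names are hypothetical.

```python
def conv_valid(size, kernel=3):
    """Output side length of a 'valid' convolution with stride 1."""
    return size - kernel + 1


def pool(size, window=2):
    """Output side length of max pooling with stride equal to the window."""
    return size // window


def feature_map_size(input_size, num_blocks):
    """Side length after `num_blocks` Conv2D(3x3) + MaxPooling2D(2x2) blocks."""
    size = input_size
    for _ in range(num_blocks):
        size = pool(conv_valid(size))
    return size


def flattened_features(input_size, num_blocks, channels):
    """Number of values Flatten() produces for a square feature map."""
    side = feature_map_size(input_size, num_blocks)
    return side * side * channels


if __name__ == "__main__":
    # Four blocks (first model): 150 -> 74 -> 36 -> 17 -> 7
    print(feature_map_size(150, 4), flattened_features(150, 4, 128))
    # Five blocks (modified model): 150 -> 74 -> 36 -> 17 -> 7 -> 2
    print(feature_map_size(150, 5), flattened_features(150, 5, 128))
```

By this count the four-block model flattens to 7 x 7 x 128 = 6272 features and the five-block model to 2 x 2 x 128 = 512, so the extra block makes the network deeper while shrinking the input to the `Dense(512)` layer.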

Results:

Epoch 95/100

100/100 [==============================] - 55s 551ms/step - loss: 0.0693 - acc: 0.9763 - val_loss: 0.5669 - val_acc: 0.8694

Epoch 96/100

100/100 [==============================] - 51s 513ms/step - loss: 0.0528 - acc: 0.9814 - val_loss: 0.4546 - val_acc: 0.8800

Epoch 97/100

100/100 [==============================] - 55s 550ms/step - loss: 0.0351 - acc: 0.9870 - val_loss: 0.6491 - val_acc: 0.8756

Epoch 98/100

100/100 [==============================] - 55s 551ms/step - loss: 0.0328 - acc: 0.9894 - val_loss: 0.6218 - val_acc: 0.8694

Epoch 99/100

100/100 [==============================] - 51s 514ms/step - loss: 0.0899 - acc: 0.9699 - val_loss: 0.4788 - val_acc: 0.8744

Epoch 100/100

100/100 [==============================] - 57s 566ms/step - loss: 0.0361 - acc: 0.9877 - val_loss: 0.5918 - val_acc: 0.8775

Analysis:

Training accuracy rose to 0.9877, but validation accuracy is only 0.8775, so the model overfits badly.

Fix:

Try Dropout and L2 regularization.
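As a rough illustration of what these two techniques do numerically, here is a framework-free sketch. It assumes the standard definitions: an L2 regularizer adds `factor * sum(w**2)` to the loss, and dropout zeroes each activation with probability `rate` during training while scaling the survivors by `1 / (1 - rate)`. The function names are hypothetical, and the defaults mirror the `0.01` and `0.2` used in the model that follows.

```python
import random


def l2_penalty(weights, factor=0.01):
    """Extra loss term contributed by an L2 regularizer on `weights`."""
    return factor * sum(w * w for w in weights)


def inverted_dropout(activations, rate=0.2, rng=random):
    """Zero each activation with probability `rate`; rescale the ones kept."""
    keep = 1.0 - rate
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]


if __name__ == "__main__":
    print(l2_penalty([1.0, 2.0]))  # 0.01 * (1 + 4)
    rng = random.Random(0)         # fixed seed so the demo is repeatable
    print(inverted_dropout([1.0] * 10, rate=0.2, rng=rng))
```

The rescaling keeps the expected activation unchanged, which is why no adjustment is needed at inference time when dropout is switched off.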

修改模型为:

def base_model1():

model = Sequential()

model.add(Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)))

model.add(MaxPooling2D((2, 2)))

model.add(Conv2D(64, (3, 3), activation='relu'))

model.add(MaxPooling2D((2, 2)))

# 新加

model.add(Conv2D(64, (3, 3), activation='relu'))

model.add(MaxPooling2D((2, 2)))

model.add(Conv2D(128, (3, 3), activation='relu'))

model.add(MaxPooling2D((2, 2)))

model.add(Conv2D(128, (3, 3), activation='relu'))

model.add(MaxPooling2D((2, 2)))

model.add(Flatten())

# 添加Dropout

model.add(Dropout(0.2))

# model.add(Dense(512, activation='relu'))

model.add(Dense(512, activation='relu', kernel_regularizer=regularizers.L2(0.01)))

model.add(Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy', optimizer=Adam(learning_rate=1e-3), metrics=['acc'])

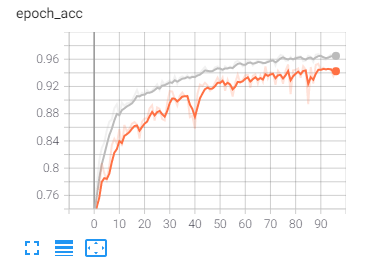

return model训练结果:

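Overfitting is reduced but still substantial, and training accuracy also dropped somewhat.

Fix:

- Enlarge the dataset

Expand the dataset to 200000 images and update the data loading code (the batch size changes from 20 to 32).

Data download link:

Link: https://pan.baidu.com/s/1nC6W...

Extraction code: 6h20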

    # Assumes the Keras bundled with TensorFlow 2.x.
    from tensorflow.keras.preprocessing.image import ImageDataGenerator


    def datagen():
        # Use Keras' ImageDataGenerator to load the images in batches,
        # rescaling pixel values from [0, 255] to [0, 1].
        train_datagen = ImageDataGenerator(rescale=1 / 255.)
        test_datagen = ImageDataGenerator(rescale=1 / 255.)

        train_dir = r'E:\mldata\dogvscat\train'
        val_dir = r'E:\mldata\dogvscat\val'

        train_generator = train_datagen.flow_from_directory(
            train_dir,
            target_size=(150, 150),
            batch_size=32,
            class_mode='binary'
        )
        val_generator = test_datagen.flow_from_directory(
            val_dir,
            target_size=(150, 150),
            batch_size=32,
            class_mode='binary'
        )
        return train_generator, val_generator

Final results:

Epoch 95/100

100/100 [==============================] - 143s 1s/step - loss: 0.0994 - acc: 0.9683 - val_loss: 0.1546 - val_acc: 0.9447

Epoch 96/100

100/100 [==============================] - 129s 1s/step - loss: 0.0990 - acc: 0.9632 - val_loss: 0.1641 - val_acc: 0.9353

Epoch 97/100

100/100 [==============================] - 128s 1s/step - loss: 0.1072 - acc: 0.9632 - val_loss: 0.1564 - val_acc: 0.9441

Epoch 98/100

100/100 [==============================] - 135s 1s/step - loss: 0.1132 - acc: 0.9604 - val_loss: 0.1509 - val_acc: 0.9456

Epoch 99/100

100/100 [==============================] - 149s 1s/step - loss: 0.1035 - acc: 0.9644 - val_loss: 0.1327 - val_acc: 0.9536

Epoch 100/100

100/100 [==============================] - 155s 2s/step - loss: 0.0958 - acc: 0.9717 - val_loss: 0.1491 - val_acc: 0.9464
