In this issue of IDP Inspiration, we continue sharing Dr. Huan Chengying's research on the "Temporal Graph Neural Network System Based on Efficient Sampling Algorithm".

The previous article described the efficient graph sampling algorithm for temporal graph neural networks. This article focuses on how to train temporal graph neural networks efficiently.

Existing graph data sets are very large, so training a temporal graph neural network takes an extraordinarily long time. Training on multiple GPUs therefore becomes particularly important, and how to use multiple GPUs effectively for temporal graph neural network training has become an important research topic. This paper provides two ways to improve multi-GPU training performance: a locality-aware data partitioning strategy and an efficient task scheduling strategy.

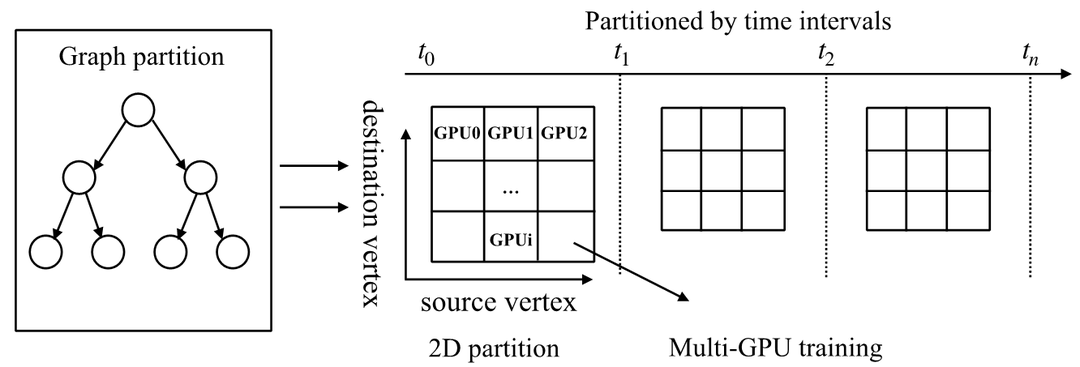

First, this paper uses an efficient data partitioning strategy that divides the graph data and the vertex feature vectors so as to reduce the extra communication overhead between GPUs. The vertex feature vectors occupy a large amount of storage, so we partition them along three dimensions and store them on different GPUs. As shown in Figure 1, we first divide the temporal graph along the time dimension. Within each time range, the vertex data is then divided along two further dimensions, source vertices and sink vertices, and each GPU stores the feature vectors of the source vertices in one segment. Edge data is stored directly in CPU memory; during training, each block of edge data is loaded onto the GPU that corresponds to it.
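
The three-dimensional partitioning described above can be illustrated with a minimal sketch. It is not the paper's implementation: it assumes edges are `(src, dst, t)` tuples, that time ranges are fixed-width windows, and that vertices are split into contiguous id segments, one per GPU. The names `segment_of` and `partition_edges` are hypothetical.

```python
from collections import defaultdict


def segment_of(vertex_id, num_vertices, num_gpus):
    """Map a vertex id to a contiguous segment index in [0, num_gpus).

    Assumption: segments are equal-sized ranges of vertex ids.
    """
    seg_size = -(-num_vertices // num_gpus)  # ceiling division
    return vertex_id // seg_size


def partition_edges(edges, num_vertices, num_gpus, t_start, window):
    """Group (src, dst, t) edges into blocks keyed by
    (time_window, source_segment, sink_segment)."""
    blocks = defaultdict(list)
    for src, dst, t in edges:
        key = (
            (t - t_start) // window,                  # time dimension
            segment_of(src, num_vertices, num_gpus),  # source dimension
            segment_of(dst, num_vertices, num_gpus),  # sink dimension
        )
        blocks[key].append((src, dst, t))
    return dict(blocks)


# Toy graph: 8 vertices, 4 GPUs, time windows of width 10.
edges = [(0, 5, 1), (1, 2, 3), (6, 7, 12), (3, 0, 15)]
blocks = partition_edges(edges, num_vertices=8, num_gpus=4, t_start=0, window=10)
```

Under these assumptions, a block with source segment `g` would be trained on the GPU that holds the source features of segment `g`, and its sink segment tells that GPU which remote features it needs.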

Figure 1. Locality-aware data partitioning strategy

Since different data is stored on different GPUs, scheduling tasks efficiently to reduce communication overhead becomes a crucial issue. To solve this problem, we propose an efficient task scheduling strategy:

First, our task scheduling orders the exchange of data stored on different GPUs so as to make full use of the high-speed NVLink connections between them. Because each GPU stores only one segment of the vertex feature vectors, during training the GPUs must transmit to one another the sink feature vectors that correspond to the current edge data. Among the 8 V100 GPUs, some pairs are connected by two NVLink high-speed links. We make full use of these links to transmit the vertex feature vectors, thereby improving transmission efficiency.

Taking 8 GPUs as an example, we perform a total of 4 rounds of vertex data exchange. We first exchange data within the four GPU pairs 0 and 1, 2 and 3, 4 and 5, and 6 and 7. Once the exchange is complete, each GPU trains on the data it now holds. Next, the four pairs 0 and 4, 1 and 2, 3 and 7, and 5 and 6 exchange vertex data. After 4 rounds, the graph data in the current time range has been trained. Throughout the exchange, we make full use of the 2 NVLink links between the paired GPUs.
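
As a small illustration, the two pairings named above can be written down and checked in code. This is only a sketch: the text lists two of the pairings explicitly, so only those two appear here, and the helper `partner_map` is a hypothetical name. It verifies that every GPU has exactly one partner in a round, which is what lets all four exchanges in a round proceed at the same time.

```python
NUM_GPUS = 8

# The two pairings listed in the text; other rounds are not spelled out there.
PAIRINGS = [
    [(0, 1), (2, 3), (4, 5), (6, 7)],
    [(0, 4), (1, 2), (3, 7), (5, 6)],
]


def partner_map(pairs, num_gpus):
    """Return {gpu: partner} and check that each GPU appears exactly once."""
    partners = {}
    for a, b in pairs:
        if a in partners or b in partners:
            raise ValueError(f"GPU used twice in one round: {(a, b)}")
        partners[a] = b
        partners[b] = a
    if sorted(partners) != list(range(num_gpus)):
        raise ValueError("some GPU has no partner in this round")
    return partners


# schedule[r][g] is the GPU that g exchanges vertex data with in pairing r.
schedule = [partner_map(pairs, NUM_GPUS) for pairs in PAIRINGS]

for r, partners in enumerate(schedule):
    print(f"pairing {r}:", [partners[g] for g in range(NUM_GPUS)])
```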

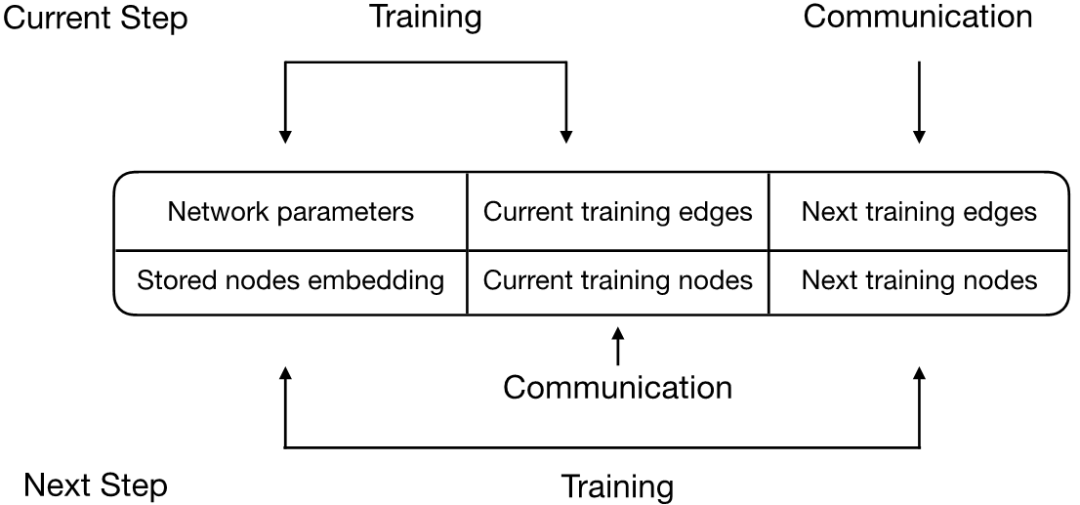

Figure 2. Pipelining data processing and transfer

Second, our task scheduling pipelines the training on different data blocks with the communication between GPUs, which hides part of the communication overhead. As shown in Figure 2, we pipeline the training process with the data transfer process. Specifically, we divide GPU memory into three parts: the first stores fixed data (the neural network parameters and the feature vectors of the source vertices); the second stores the data required for the current training step (the edge data and the feature vectors of the sink vertices); and the third receives and stores the data required for the next training step. At the next training step, we swap the roles of the second and third parts, similar to a ping-pong operation. In this way, training and data transfer overlap, which increases throughput and improves training performance.
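
The ping-pong scheme can be sketched on the CPU with a background thread standing in for the data transfer. This is an illustration under stated assumptions, not the system's code: `load_block` and `train_on` are hypothetical stand-ins for the GPU copy and the training step, and a real system would use asynchronous device copies rather than a Python thread.

```python
from concurrent.futures import ThreadPoolExecutor


def load_block(block_id):
    """Stand-in for copying edge data and sink features into a buffer."""
    return {"block": block_id, "edges": [block_id] * 3}


def train_on(fixed, block):
    """Stand-in for one training step; returns a fake loss value."""
    return fixed["scale"] * sum(block["edges"])


def pipelined_training(block_ids, fixed):
    """Train on each block while the next block loads in the background."""
    results = []
    if not block_ids:
        return results
    with ThreadPoolExecutor(max_workers=1) as pool:
        current = load_block(block_ids[0])  # fill the compute buffer once
        for next_id in list(block_ids[1:]) + [None]:
            # Start filling the transfer buffer before training begins.
            pending = pool.submit(load_block, next_id) if next_id is not None else None
            results.append(train_on(fixed, current))  # overlaps with the load
            if pending is not None:
                current = pending.result()  # ping-pong: buffers swap roles
    return results


# `fixed` plays the role of the first memory part (parameters, source features).
losses = pipelined_training([1, 2, 3], fixed={"scale": 2})
```

The `fixed` dictionary never moves, while `current` and `pending` alternate between the compute role and the transfer role, mirroring the second and third memory parts.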

To sum up, to address the high communication overhead between devices in temporal graph neural network training, this paper proposes a locality-aware data partitioning strategy and an efficient task scheduling strategy that together reduce this overhead. In addition, this paper introduces graph sampling into the temporal graph neural network to further accelerate training and reduce communication. Building on these communication reduction strategies, this paper proposes T-GCN, a temporal graph neural network system. Experimental results show that T-GCN achieves an overall performance improvement of up to 7.9 times. In terms of graph sampling, the line segment binary search sampling algorithm proposed in this paper achieves a sampling performance improvement of up to 38.8 times.
