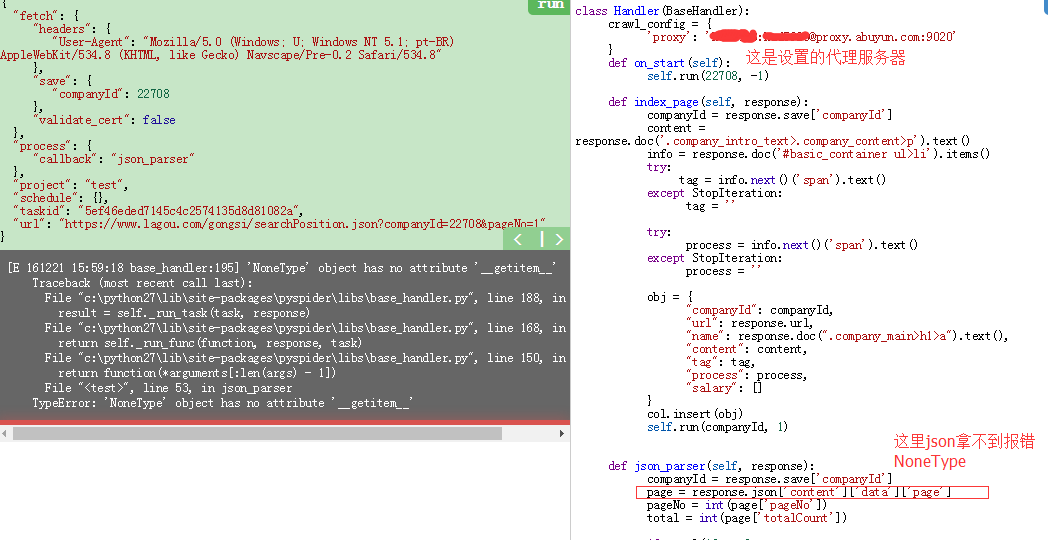

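The server of the site I need to crawl has banned my IP, so I switched to a proxy service that can rotate IPs dynamically, but I still can't get any data. The `crawl_config` setting seems to have no effect. Any ideas?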

```python
#!/usr/bin/env python
# -*- encoding: utf-8 -*-
# Created on 2017-07-28 14:13:14
# Project: mimvp_proxy_pyspider
#
# mimvp.com

from pyspider.libs.base_handler import *


class Handler(BaseHandler):
    crawl_config = {
        # Note: a dict holds one value per key, so this first 'proxy'
        # entry is silently overwritten by the second one below.
        'proxy': 'http://188.226.141.217:8080',  # http
        'proxy': 'https://182.253.32.65:3128',   # https
    }

    @every(minutes=24 * 60)
    def on_start(self):
        self.crawl('http://proxy.mimvp.com/exist.php', callback=self.index_page)

    @config(age=10 * 24 * 60 * 60)
    def index_page(self, response):
        for each in response.doc('a[href^="http"]').items():
            self.crawl(each.attr.href, callback=self.detail_page)

    @config(priority=2)
    def detail_page(self, response):
        return {
            "url": response.url,
            "title": response.doc('title').text(),
        }
```
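One side issue in the `crawl_config` above: the `'proxy'` key appears twice. A Python dict literal keeps only the last value for a repeated key, so only the second proxy (`https://182.253.32.65:3128`) is ever used. The minimal standalone check below shows this in plain Python, without pyspider:

```python
# Plain-Python demonstration: a repeated key in a dict literal keeps
# only its LAST value, so the first 'proxy' entry is discarded.
crawl_config = {
    'proxy': 'http://188.226.141.217:8080',  # overwritten
    'proxy': 'https://182.253.32.65:3128',   # this value wins
}

print(len(crawl_config))      # 1
print(crawl_config['proxy'])  # https://182.253.32.65:3128
```

To switch between several proxies, each request would need its own proxy value rather than two entries in one dict.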


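If you changed `crawl_config` after you had already stepped through to this request, go back to the previous request and click **run** again to re-enter it. In the WebUI debugger, the config is attached to a request when `self.crawl()` creates it, so requests generated before your edit still carry the old settings.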
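The snippet below is a rough sketch of why stepping back helps. It uses a hypothetical, heavily simplified `MiniHandler` (not pyspider's real code) and assumes only that the config is copied into each task at the moment `crawl()` builds it. Under that assumption, editing the config later cannot change tasks that already exist:

```python
import copy


class MiniHandler:
    """Hypothetical simplified model, NOT pyspider's implementation:
    crawl_config is snapshotted into each task when crawl() is called."""
    crawl_config = {'proxy': 'http://188.226.141.217:8080'}

    def crawl(self, url):
        task = {'url': url}
        # Copy the current class-level config into this task right now.
        task.update(copy.deepcopy(self.crawl_config))
        return task


handler = MiniHandler()
old_task = handler.crawl('http://proxy.mimvp.com/exist.php')

# Changing the config afterwards does not affect the task already built...
MiniHandler.crawl_config = {'proxy': '182.253.32.65:3128'}
print(old_task['proxy'])  # http://188.226.141.217:8080

# ...only calling crawl() again (the "go back and click run" step) picks it up.
new_task = handler.crawl('http://proxy.mimvp.com/exist.php')
print(new_task['proxy'])  # 182.253.32.65:3128
```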