Problem description

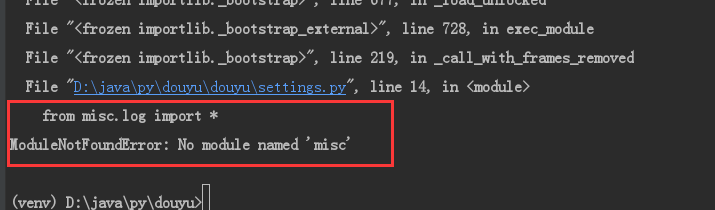

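I downloaded a few Scrapy projects from GitHub, put them in my own directory, and ran them, only to get an error.

It looks like a module called misc is missing, so I tried to install it with pip, but that failed as well!

I don't know what is going on or how to install this thing.

The screenshot above shows where the error occurs.

Ugh, what a headache...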

Environment and what I have tried

Windows 7

Python 3.7

Scrapy 1.5.1

Directory structure

Relevant code


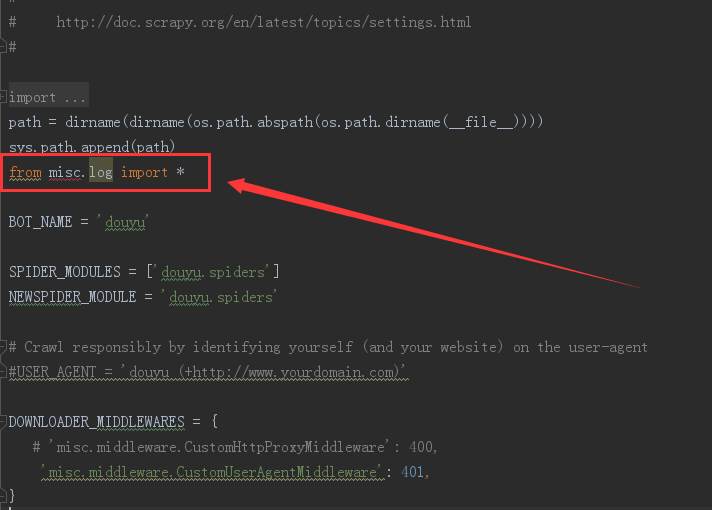

settings.py

# Scrapy settings for douyu project
#
# For simplicity, this file contains only the most important settings by
# default. All the other settings are documented here:
#
#     http://doc.scrapy.org/en/latest/topics/settings.html
#

import sys
import os
from os.path import dirname

path = dirname(dirname(os.path.abspath(os.path.dirname(__file__))))
sys.path.append(path)
from misc.log import *

BOT_NAME = 'douyu'

SPIDER_MODULES = ['douyu.spiders']
NEWSPIDER_MODULE = 'douyu.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'douyu (+http://www.yourdomain.com)'

DOWNLOADER_MIDDLEWARES = {
    # 'misc.middleware.CustomHttpProxyMiddleware': 400,
    'misc.middleware.CustomUserAgentMiddleware': 401,
}

ITEM_PIPELINES = {
    'douyu.pipelines.JsonWithEncodingPipeline': 300,
    #'douyu.pipelines.RedisPipeline': 301,
}

LOG_LEVEL = 'INFO'

DOWNLOAD_DELAY = 1
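
The two `sys.path` lines in settings.py hint that `misc` may be a local package expected to sit two directories above the file, rather than something `pip` can install. That is only a guess from the code, not something the repository confirms. A minimal sketch of checking it, where the helper names `misc_search_root` and `has_local_misc` and the temporary directory layout are made up for illustration:

```python
import os
import tempfile
from os.path import dirname


def misc_search_root(settings_file):
    """Return the directory that settings.py appends to sys.path."""
    # Same expression as in settings.py, with __file__ passed in explicitly.
    return dirname(dirname(os.path.abspath(os.path.dirname(settings_file))))


def has_local_misc(settings_file):
    """Report whether a misc/ directory exists in that directory."""
    return os.path.isdir(os.path.join(misc_search_root(settings_file), "misc"))


if __name__ == "__main__":
    # Hypothetical layout, built in a temp dir purely for illustration:
    #   <root>/misc/
    #   <root>/douyu/douyu/settings.py
    with tempfile.TemporaryDirectory() as root:
        project = os.path.join(root, "douyu", "douyu")
        os.makedirs(project)
        settings = os.path.join(project, "settings.py")

        print("search root:", misc_search_root(settings))
        print("misc present before copying:", has_local_misc(settings))

        # Simulate copying the repository's misc folder into place.
        os.makedirs(os.path.join(root, "misc"))
        print("misc present after copying:", has_local_misc(settings))
```

If the check comes back `False` for the real settings.py, one thing to try (assuming the downloaded repository ships a `misc` folder) would be copying that folder into the directory printed as the search root.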

spider.py

import re
import json
from urllib.parse import urlparse
import urllib
import pdb

from scrapy.selector import Selector
try:
    from scrapy.spiders import Spider
except ImportError:
    from scrapy.spiders import BaseSpider as Spider
from scrapy.utils.response import get_base_url
from scrapy.spiders import CrawlSpider, Rule
from scrapy.linkextractors import LinkExtractor as sle

from douyu.items import *
from misc.log import *
from misc.spider import CommonSpider


class douyuSpider(CommonSpider):
    name = "douyu"
    allowed_domains = ["douyu.com"]
    start_urls = [
        "http://www.douyu.com/directory/all"
    ]
    rules = [
        Rule(sle(allow=("http://www.douyu.com/directory/all")), callback='parse_1', follow=True),
    ]

    list_css_rules = {
        '#live-list-contentbox li': {
            'url': 'a::attr(href)',
            'room_name': 'a::attr(title)',
            'tag': 'span.tag.ellipsis::text',
            'people_count': '.dy-num.fr::text'
        }
    }

    list_css_rules_for_item = {
        '#live-list-contentbox li': {
            '__use': '1',
            '__list': '1',
            'url': 'a::attr(href)',
            'room_name': 'a::attr(title)',
            'tag': 'span.tag.ellipsis::text',
            'people_count': '.dy-num.fr::text'
        }
    }

    def parse_1(self, response):
        info('Parse '+response.url)
        #x = self.parse_with_rules(response, self.list_css_rules, dict)
        x = self.parse_with_rules(response, self.list_css_rules_for_item, douyuItem)
        print(len(x))
        # print(json.dumps(x, ensure_ascii=False, indent=2))
        # pp.pprint(x)
        # return self.parse_with_rules(response, self.list_css_rules, douyuItem)
        return x

pipelines.py

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

import redis

from scrapy import signals

import json
import codecs
from collections import OrderedDict


class JsonWithEncodingPipeline(object):

    def __init__(self):
        self.file = codecs.open('data_utf8.json', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        # Write each item as one UTF-8 JSON line.
        line = json.dumps(OrderedDict(item), ensure_ascii=False, sort_keys=False) + "\n"
        self.file.write(line)
        return item

    def close_spider(self, spider):
        self.file.close()


class RedisPipeline(object):

    def __init__(self):
        self.r = redis.StrictRedis(host='localhost', port=6379)

    def process_item(self, item, spider):
        if not item['id']:
            print('no id item!!')

        str_recorded_item = self.r.get(item['id'])
        final_item = None
        if str_recorded_item is None:
            final_item = item
        else:
            ritem = eval(self.r.get(item['id']))
            # Merge the new item with the stored one; stored values win.
            final_item = {**dict(item), **ritem}
        self.r.set(item['id'], final_item)
        return item

    def close_spider(self, spider):
        return

items.py

# Define here the models for your scraped items
#
# See documentation in:
# http://doc.scrapy.org/en/latest/topics/items.html

from scrapy.item import Item, Field


class douyuItem(Item):
    # define the fields for your item here like:
    url = Field()
    room_name = Field()
    people_count = Field()
    tag = Field()

What result do you expect? What error message do you actually see?

I hope someone can give me a "beautiful" answer.

https://stackoverflow.com/que...